📖 Papers and resources related to our survey ("Explainability for Large Language Models: A Survey") are organized by the structure of the paper.

📧 Please feel free to reach out if you spot any mistakes. We also highly value suggestions to improve our work; please don't hesitate to ping us at: [email protected].

- Explainability-for-Large-Language-Models: A Survey

- Table of Contents

- Overview

- Training Paradigms of LLMs

- Explanation for Traditional Fine-Tuning Paradigm

- Explanation for Prompting Paradigm

- Explanation Evaluation

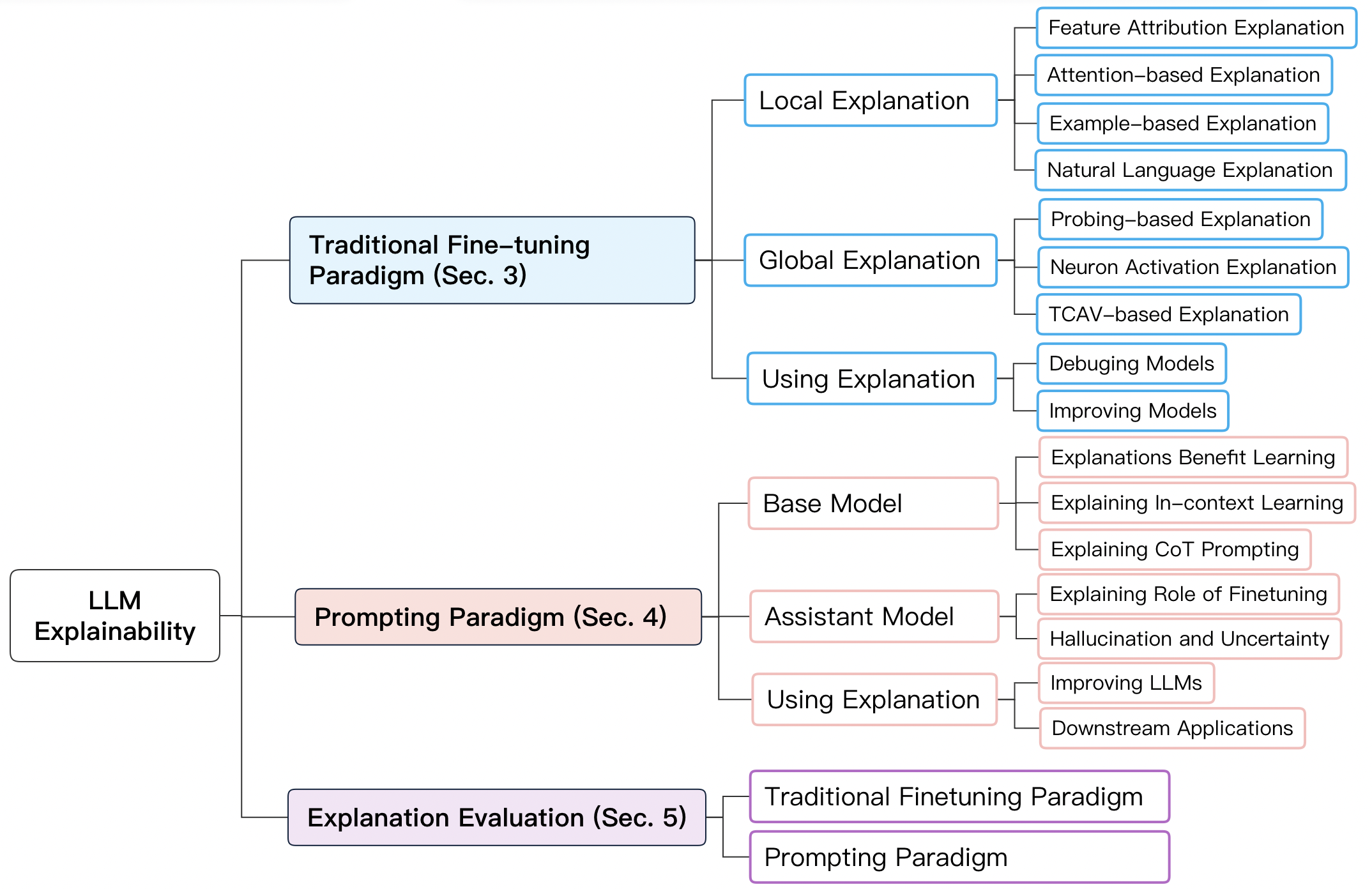

We categorize LLM explainability into two major paradigms. Based on this categorization, the explainability techniques associated with LLMs in each of the two paradigms are summarized as follows:

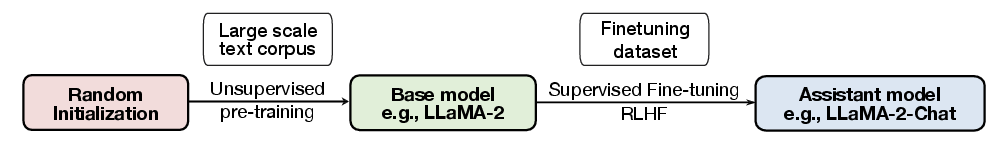

In the traditional fine-tuning paradigm, a language model is first pre-trained on a large corpus of unlabeled text data and then fine-tuned on a set of labeled data from a specific downstream domain.

The prompting paradigm involves using prompts, such as natural language sentences with blanks for the model to fill in, to enable zero-shot or few-shot learning without requiring additional training data. Models under this paradigm can be categorized into two types based on their development stages: base models and assistant models. In this scenario, LLMs undergo unsupervised pre-training from random initialization to create a base model. The base model can then be fine-tuned through instruction tuning and RLHF to produce the assistant model.

- Base Model: GPT-3, OPT, LLaMA-1, LLaMA-2, Falcon, etc.

- Assistant Model: GPT-3.5, GPT-4, Claude, LLaMA-2-Chat, Alpaca, Vicuna, etc.
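As a rough illustration of the prompting paradigm described above, the sketch below assembles a cloze-style prompt whose final label is left blank for the model to fill in. The helper name `build_prompt`, the template, and the sentiment examples are hypothetical and chosen only for illustration; no particular model or API is assumed.

```python
def build_prompt(query, demonstrations=(), template="Review: {text}\nSentiment: {label}"):
    """Build a zero-shot (no demonstrations) or few-shot cloze-style prompt.

    `template` is a hypothetical format, not one prescribed by the survey.
    """
    # Each demonstration is a fully filled-in (text, label) pair.
    blocks = [template.format(text=t, label=l) for t, l in demonstrations]
    # The final block leaves the label blank for the model to complete.
    blocks.append(template.format(text=query, label="").rstrip())
    return "\n\n".join(blocks)


# Zero-shot: only the query, no labeled examples.
zero_shot = build_prompt("The plot was dull.")

# Few-shot: a handful of labeled demonstrations precede the query.
few_shot = build_prompt(
    "The plot was dull.",
    demonstrations=[
        ("I loved every minute.", "positive"),
        ("A waste of time.", "negative"),
    ],
)
print(few_shot)
```

In both cases no model weights are updated; the only difference between zero-shot and few-shot use is whether demonstrations appear in the prompt text.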

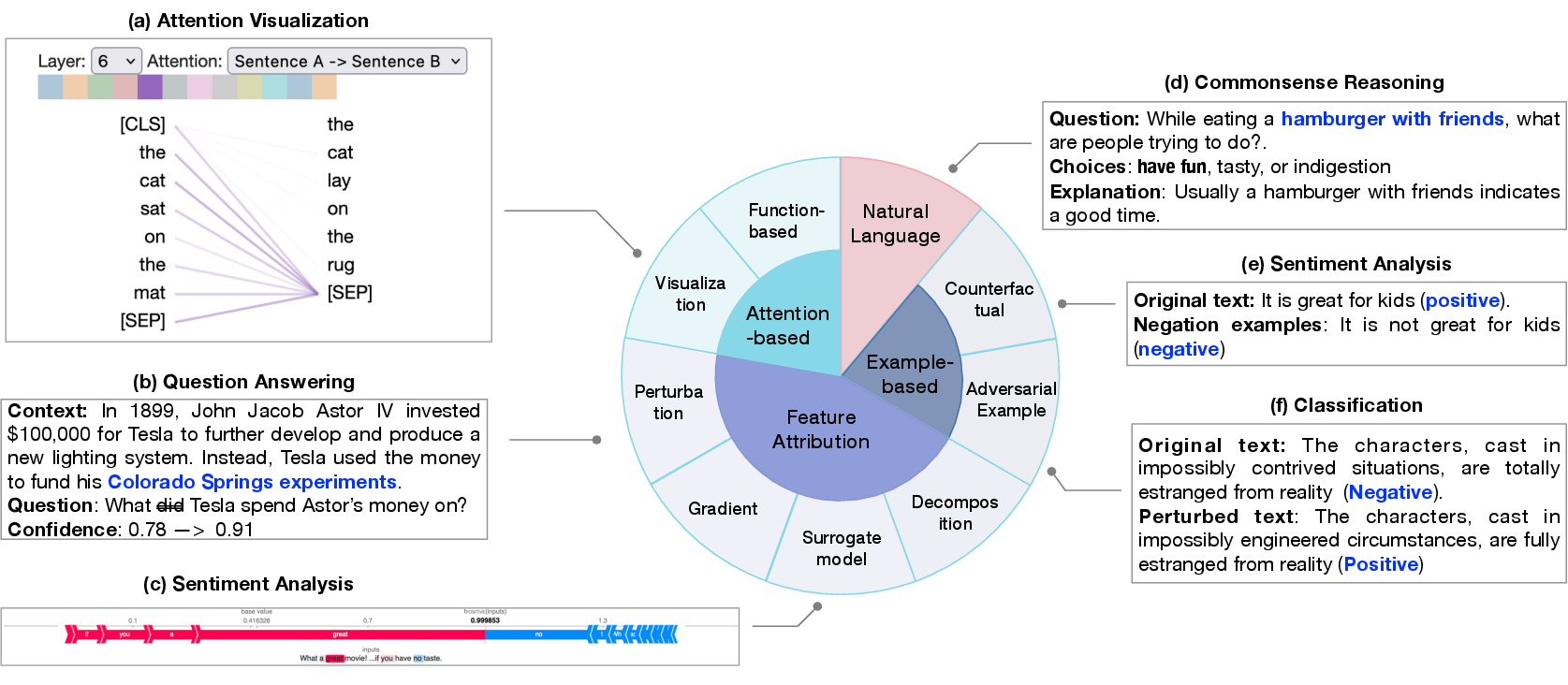

Local explanation focuses on understanding how a language model makes a prediction for a specific input instance.
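To make the idea of a local explanation concrete, here is a minimal sketch of one simple perturbation-based approach: leave-one-out attribution, which scores each token by how much the prediction changes when that token is removed. The lexicon-based `predict_positive` model and its weights are hypothetical stand-ins for a real language model, used only so the example is self-contained.

```python
import math

# Hypothetical toy sentiment model: a fixed word-weight lexicon plus a sigmoid.
WEIGHTS = {"great": 2.0, "good": 1.0, "boring": -1.5, "terrible": -2.5}


def predict_positive(tokens):
    """Return P(positive) for a list of tokens under the toy model."""
    score = sum(WEIGHTS.get(tok, 0.0) for tok in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def leave_one_out(tokens, predict):
    """Score each token by the drop in predicted probability when it is removed."""
    base = predict(tokens)
    attributions = []
    for i, tok in enumerate(tokens):
        perturbed = tokens[:i] + tokens[i + 1:]  # input with token i deleted
        attributions.append((tok, base - predict(perturbed)))
    return attributions


tokens = "the acting was great but the plot was boring".split()
attributions = leave_one_out(tokens, predict_positive)
for tok, score in attributions:
    print(f"{tok:>8s} {score:+.3f}")
```

A positive score means the token pushed the prediction toward the positive class, a negative score means it pushed against it, and tokens the toy model ignores receive a score of zero.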

- Visualizing and Understanding Neural Models in NLP. Jiwei Li et al. ACL 2016.

- Understanding Neural Networks through Representation Erasure. Jiwei Li et al. arXiv 2017.

- Pathologies of Neural Models Make Interpretations Difficult. Shi Feng et al. EMNLP 2018.

- Perturbed Masking: Parameter-free Probing for Analyzing and Interpreting BERT. Zhiyong Wu et al. ACL 2020.

- Resisting Out-of-Distribution Data Problem in Perturbation of XAI. Luyu Qiu et al. arXiv 2021.

- Towards a Deep and Unified Understanding of Deep Neural Models in NLP. Chaoyu Guan et al. PMLR 2019.

- The (Un)reliability of saliency methods. Pieter-Jan Kindermans et al. arXiv 2017.

- Axiomatic attribution for deep networks. Mukund Sundararajan et al. ICML 2017.

- Investigating Saturation Effects in Integrated Gradients. Vivek Miglani et al. ICML 2020.

- Exploring the Role of BERT Token Representations to Explain Sentence Probing Results. Hosein Mohebbi et al. EMNLP 2021.

- Integrated Directional Gradients: Feature Interaction Attribution for Neural NLP Models. Sandipan Sikdar et al. ACL 2021.

- Discretized Integrated Gradients for Explaining Language Models. Soumya Sanyal et al. EMNLP 2021.

- A Rigorous Study of Integrated Gradients Method and Extensions to Internal Neuron Attributions. Daniel D. Lundstrom et al. ICML 2022.

- Sequential Integrated Gradients: a simple but effective method for explaining language models. Joseph Enguehard. ACL 2023.

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier. Marco Tulio Ribeiro et al. arXiv 2016.

- A Unified Approach to Interpreting Model Predictions. Scott M Lundberg et al. NIPS 2017.

- BERT meets Shapley: Extending SHAP Explanations to Transformer-based Classifiers. Enja Kokalj et al. ACL 2021.

- Algorithms to estimate Shapley value feature attributions. Hugh Chen et al. Nature Machine Intelligence 2023.

- A Causality Inspired Framework for Model Interpretation. Chenwang Wu et al. KDD 2023.

- Explaining NonLinear Classification Decisions with Deep Taylor Decomposition. Grégoire Montavon et al. arXiv 2015.

- On Attribution of Recurrent Neural Network Predictions via Additive Decomposition. Mengnan Du et al. arXiv 2019.

- Layer-wise relevance propagation: an overview. Grégoire Montavon et al. 2019.

- Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy Lifting, the Rest Can Be Pruned. Elena Voita et al. ACL 2019.

- Analyzing the Source and Target Contributions to Predictions in Neural Machine Translation. Elena Voita et al. ACL 2021.

- On Explaining Your Explanations of BERT: An Empirical Study with Sequence Classification. Zhengxuan Wu et al. arXiv 2021.

- Transformer Interpretability Beyond Attention Visualization. Hila Chefer et al. CVPR 2021.

- BertViz: A Tool for Visualizing Multi-Head Self-Attention in the BERT Model. Jesse Vig. arXiv 2019.

- SANVis: Visual Analytics for Understanding Self-Attention Networks. Cheonbok Park et al. arXiv 2019.

- exBERT: A Visual Analysis Tool to Explore Learned Representations in Transformer Models. Benjamin Hoover et al. ACL 2020.

- VisQA: X-raying Vision and Language Reasoning in Transformers. Theo Jaunet et al. arXiv 2021.

- Attention Flows: Analyzing and Comparing Attention Mechanisms in Language Models. Joseph F. DeRose et al. arXiv 2020.

- VisQA: X-raying Vision and Language Reasoning in Transformers. Theo Jaunet et al. CVPR 2021.

- AttentionViz: A Global View of Transformer Attention. Catherine Yeh et al. arXiv 2023.

- Grad-SAM: Explaining Transformers via Gradient Self-Attention Maps. Oren Barkan et al. CIKM 2021.

- Self-Attention Attribution: Interpreting Information Interactions Inside Transformer. Yaru Hao et al. AAAI 2021.

- Is Attention Interpretable? Sofia Serrano et al. ACL 2019.

- Attention is not Explanation. Sarthak Jain et al. NAACL 2019.

- Generating token-level explanations for natural language inference. James Thorne et al. NAACL 2019.

- Are interpretations fairly evaluated? a definition driven pipeline for post-hoc interpretability. Ninghao Liu et al. arXiv 2020.

- Attention Flows are Shapley Value Explanations. Kawin Ethayarajh et al. ACL 2021.

- Towards Transparent and Explainable Attention Models. Akash Kumar Mohankumar et al. ACL 2020.

- Why attentions may not be interpretable? Bing Bai et al. KDD 2021.

- On identifiability in transformers. Gino Brunner et al. ICLR 2020.

- Order in the court: Explainable ai methods prone to disagreement. Michael Neely et al. ICML 2021.

- Attention is not not Explanation. Sarah Wiegreffe et al. arXiv 2019.

- What Does BERT Look at? An Analysis of BERT’s Attention. Kevin Clark et al. ACL 2019.

- Measuring and improving faithfulness of attention in neural machine translation. Pooya Moradi et al. ACL 2021.

- Marta: Leveraging human rationales for explainable text classification. Ines Arous et al. AAAI 2021.

- Is BERT really robust? natural language attack on text classification and entailment. Di Jin et al. AAAI 2020.

- BAE: BERT-based Adversarial Examples for Text Classification. Siddhant Garg et al. EMNLP 2020.

- Contextualized Perturbation for Textual Adversarial Attack. Dianqi Li et al. ACL 2021.

- SemAttack: Natural Textual Attacks via Different Semantic Spaces. Boxin Wang et al. ACL 2022.

- Learning the Difference that Makes a Difference with Counterfactually-Augmented Data. Divyansh Kaushik et al. ICLR 2020.

- Polyjuice: Generating Counterfactuals for Explaining, Evaluating, and Improving Models. Tongshuang Wu et al. ACL 2021.

- Explaining nlp models via minimal contrastive editing (mice). Alexis Ross et al. ACL 2021.

- Crest: A joint framework for rationalization and counterfactual text generation. Marcos Treviso et al. ACL 2023.

- Representer point selection for explaining deep neural networks. Chih-Kuan Yeh et al. NIPS 2018.

- Understanding black-box predictions via influence functions. Pang Wei Koh et al. PMLR 2017.

- Data shapley: Equitable valuation of data for machine learning. Amirata Ghorbani et al. PMLR 2019.

- Data cleansing for models trained with sgd. Satoshi Hara et al. NIPS 2019.

- Estimating training data influence by tracing gradient descent. Garima Pruthi et al. NIPS 2020.

- Fastif: Scalable influence functions for efficient model interpretation and debugging. Han Guo et al. ACL 2020.

- Studying large language model generalization with influence functions. Roger Grosse et al. arXiv 2023.

- Explain Yourself! Leveraging Language Models for Commonsense Reasoning. Nazneen Fatema Rajani et al. ACL 2019.

- Local Interpretations for Explainable Natural Language Processing: A Survey. Siwen Luo et al. arXiv 2022.

- Few-Shot Out-of-Domain Transfer Learning of Natural Language Explanations in a Label-Abundant Setup. Yordan Yordanov et al. EMNLP 2022.

Global explanation aims to provide a broad understanding of how LLMs work at the level of model components, such as neurons, hidden layers, and larger modules.

- Evaluating Layers of Representation in Neural Machine Translation on Part-of-Speech and Semantic Tagging Tasks. Yonatan Belinkov et al. ACL 2017.

- Deep Biaffine Attention for Neural Dependency Parsing. Timothy Dozat et al. ICLR 2017.

- Dissecting Contextual Word Embeddings: Architecture and Representation. Matthew E. Peters et al. ACL 2018.

- Deep RNNs Encode Soft Hierarchical Syntax. Terra Blevins et al. ACL 2018.

- What Does BERT Learn about the Structure of Language?. Ganesh Jawahar et al. ACL 2019.

- What do you learn from context? Probing for sentence structure in contextualized word representations. Ian Tenney et al. ICLR 2019.

- A Structural Probe for Finding Syntax in Word Representations. John Hewitt et al. ACL 2019.

- Open sesame: getting inside bert’s linguistic knowledge. Yongjie Lin et al. ACL 2019.

- BERT Rediscovers the Classical NLP Pipeline. Ian Tenney et al. ACL 2019.

- How Does BERT Answer Questions?: A Layer-Wise Analysis of Transformer Representations. Betty Van Aken et al. CIKM 2019.

- Revealing the Dark Secrets of BERT. Olga Kovaleva et al. EMNLP 2019.

- What Does BERT Look at? An Analysis of BERT’s Attention. Kevin Clark et al. ACL 2019.

- Designing and Interpreting Probes with Control Tasks. John Hewitt et al. EMNLP 2019.

- Classifier Probes May Just Learn from Linear Context Features. Jenny Kunz et al. ACL 2020.

- Probing for Referential Information in Language Models. Ionut-Teodor Sorodoc et al. ACL 2020.

- Structured Self-Attention Weights Encode Semantics in Sentiment Analysis. Zhengxuan Wu et al. ACL 2020.

- Probing BERT in Hyperbolic Spaces. Boli Chen et al. ICLR 2021.

- Do Syntactic Probes Probe Syntax? Experiments with Jabberwocky Probing. Rowan Hall Maudslay et al. arXiv 2021.

- Exploring the Role of BERT Token Representations to Explain Sentence Probing Results. Hosein Mohebbi et al. EMNLP 2021.

- How is BERT surprised? Layerwise detection of linguistic anomalies. Bai Li et al. ACL 2021.

- Probing Classifiers: Promises, Shortcomings, and Advances. Yonatan Belinkov. Computational Linguistics 2022.

- Probing GPT-3’s Linguistic Knowledge on Semantic Tasks. Lining Zhang et al. ACL 2022.

- Targeted Syntactic Evaluation of Language Models. Rebecca Marvin et al. ACL 2018.

- Language Models as Knowledge Bases?. Fabio Petroni et al. EMNLP 2019.

- On the Systematicity of Probing Contextualized Word Representations: The Case of Hypernymy in BERT. Abhilasha Ravichander et al. ACL 2020.

- ALL Dolphins Are Intelligent and SOME Are Friendly: Probing BERT for Nouns’ Semantic Properties and their Prototypicality. Marianna Apidianaki et al. BlackboxNLP 2021.

- Factual Probing Is [MASK]: Learning vs. Learning to Recall. Zexuan Zhong et al. NAACL 2021.

- Probing via Prompting. Jiaoda Li et al. NAACL 2022.

- Identifying and Controlling Important Neurons in Neural Machine Translation. Anthony Bau et al. arXiv 2018.

- What Is One Grain of Sand in the Desert? Analyzing Individual Neurons in Deep NLP Models. Fahim Dalvi et al. AAAI 2019.

- Intrinsic Probing through Dimension Selection. Lucas Torroba Hennigen et al. EMNLP 2020.

- On the Pitfalls of Analyzing Individual Neurons in Language Models. Omer Antverg et al. ICML 2022.

- Language models can explain neurons in language models. OpenAI. 2023.

- Explaining black box text modules in natural language with language models. Chandan Singh et al. arXiv 2023.

- Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV). Been Kim et al. ICML 2018.

- Compositional Explanations of Neurons. Jesse Mu et al. NIPS 2020.

- Decomposing language models into understandable components. Anthropic. 2023.

- Towards monosemanticity: Decomposing language models with dictionary learning. Trenton Bricken et al. 2023.

- Zoom In: An Introduction to Circuits. Chris Olah et al. 2020.

- Branch Specialization. Chelsea Voss et al. 2021.

- Weight Banding. Michael Petrov et al. 2021.

- Naturally Occurring Equivariance in Neural Networks. Chris Olah et al. 2020.

- A Mathematical Framework for Transformer Circuits. Nelson Elhage et al. 2021.

- In-context Learning and Induction Heads. Catherine Olsson et al. 2022.

- Transformer feed-forward layers are key-value memories. Mor Geva et al. arXiv 2020.

- Locating and editing factual associations in gpt. Kevin Meng et al. NIPS 2022.

- Transformer feed-forward layers build predictions by promoting concepts in the vocabulary space. Mor Geva et al. arXiv 2022.

- Residual networks behave like ensembles of relatively shallow networks. Andreas Veit et al. NIPS 2016.

- The hydra effect: Emergent self-repair in language model computations. Tom Lieberum et al. arXiv 2023.

- Does circuit analysis interpretability scale? evidence from multiple choice capabilities in chinchilla. Tom Lieberum et al. arXiv 2023.

- Towards interpreting and mitigating shortcut learning behavior of nlu models. Mengnan Du et al. NAACL 2021.

- Adversarial training for improving model robustness? look at both prediction and interpretation. Hanjie Chen et al. AAAI 2022.

- Shortcut learning of large language models in natural language understanding. Mengnan Du et al. CACM 2023.

- Er-test: Evaluating explanation regularization methods for language models. Brihi Joshi et al. EMNLP 2022.

- Supervising model attention with human explanations for robust natural language inference. Joe Stacey et al. AAAI 2022.

- Unirex: A unified learning framework for language model rationale extraction. Aaron Chan et al. ICML 2022.

- Xmd: An end-to-end framework for interactive explanation-based debugging of nlp models. Dong-Ho Lee et al. arXiv 2022.

- Post hoc explanations of language models can improve language models. Jiaqi Ma et al. arXiv 2023.

In the prompting paradigm, LLMs have shown impressive reasoning abilities, including few-shot learning and chain-of-thought prompting, as well as phenomena like hallucination, all of which are absent in the conventional fine-tuning paradigm. Given these emerging properties, explainability research is expected to investigate the underlying mechanisms. Explanation for the prompting paradigm can be divided into two categories following the model development stages: base model explanation and assistant model explanation.

- Chain-of-thought prompting elicits reasoning in large language models. Jason Wei et al. NIPS 2022.

- Towards understanding in-context learning with contrastive demonstrations and saliency maps. Zongxia Li et al. arXiv 2023.

- Larger language models do in-context learning differently. Jerry Wei et al. arXiv 2023.

- Analyzing chain-of-thought prompting in large language models via gradient-based feature attributions. Skyler Wu et al. ICML 2023.

- Text and patterns: For effective chain of thought, it takes two to tango. Aman Madaan et al. arXiv 2022.

- Towards understanding chain-of-thought prompting: An empirical study of what matters. Boshi Wang et al. ACL 2023.

- Representation engineering: A top-down approach to ai transparency. Andy Zou et al. arXiv 2023.

- The geometry of truth: Emergent linear structure in large language model representations of true/false datasets. Samuel Marks et al. arXiv 2023.

- Language models represent space and time. Wes Gurnee et al. arXiv 2023.

- Lima: Less is more for alignment. Chunting Zhou et al. arXiv 2023.

- The false promise of imitating proprietary llms. Arnav Gudibande et al. arXiv 2023.

- Llama-2: Open foundation and finetuned chat models. Hugo Touvron et al. 2023.

- From language modeling to instruction following: Understanding the behavior shift in llms after instruction tuning. Xuansheng Wu et al. arXiv 2023.

- Siren’s Song in the AI Ocean: A Survey on Hallucination in Large Language Models. Yue Zhang et al. arXiv 2023.

- Look before you leap: An exploratory study of uncertainty measurement for large language models. Yuheng Huang et al. arXiv 2023.

- On the Origin of Hallucinations in Conversational Models: Is it the Datasets or the Models? Nouha Dziri et al. ACL 2022.

- Large Language Models Struggle to Learn Long-Tail Knowledge. Nikhil Kandpal et al. arXiv 2023.

- Scaling Laws and Interpretability of Learning from Repeated Data. Danny Hernandez et al. arXiv 2022.

- Sources of Hallucination by Large Language Models on Inference Tasks. Nick McKenna et al. arXiv 2023.

- Do PLMs Know and Understand Ontological Knowledge?. Weiqi Wu et al. ACL 2023.

- The Reversal Curse: LLMs trained on "A is B" fail to learn "B is A". Lukas Berglund et al. arXiv 2023.

- Investigating the Factual Knowledge Boundary of Large Language Models with Retrieval Augmentation. Ruiyang Ren et al. arXiv 2023.

- Impact of Co-occurrence on Factual Knowledge of Large Language Models. Cheongwoong Kang et al. arXiv 2023.

- Simple synthetic data reduces sycophancy in large language models. Jerry Wei et al. arXiv 2023.

- PaLM: Scaling Language Modeling with Pathways. Aakanksha Chowdhery et al. arXiv 2022.

- When Do Pre-Training Biases Propagate to Downstream Tasks? A Case Study in Text Summarization. Faisal Ladhak et al. ACL 2023.

- Look before you leap: An exploratory study of uncertainty measurement for large language models. Yuheng Huang et al. arXiv 2023.

- Shifting attention to relevance: Towards the uncertainty estimation of large language models. Jinhao Duan et al. arXiv 2023.

- Orca: Progressive learning from complex explanation traces of gpt-4. Subhabrata Mukherjee et al. arXiv 2023.

- Did you read the instructions? rethinking the effectiveness of task definitions in instruction learning. Fan Yin et al. ACL 2023.

- Show your work: Scratchpads for intermediate computation with language models. Maxwell Nye et al. arXiv 2021.

- The unreliability of explanations in few-shot prompting for textual reasoning. Xi Ye et al. NIPS 2022.

- Explanation selection using unlabeled data for chain-of-thought prompting. Xi Ye et al. arXiv 2023.

- Learning transferable visual models from natural language supervision. Alec Radford et al. CVPR 2021.

- A Chatgpt aided explainable framework for zero-shot medical image diagnosis. Jiaxiang Liu et al. ICML 2023.

Explanations can be evaluated along multiple dimensions, such as plausibility, faithfulness, and stability, each with its own metrics. Within a given dimension, different metrics often fail to agree with each other, and constructing standard metrics remains an open challenge. In this part, we focus on two dimensions: plausibility and faithfulness. Quantitative properties and metrics, which are usually more reliable than qualitative ones, are presented in detail.
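As one concrete example of a quantitative faithfulness measure, the sketch below computes comprehensiveness and sufficiency in the spirit of the ERASER benchmark listed below: comprehensiveness is the drop in predicted probability when the rationale tokens are removed, and sufficiency is the drop when only the rationale tokens are kept. The toy `predict_positive` model, its weights, and the rationale index sets are hypothetical and serve only to make the example runnable.

```python
import math

# Hypothetical toy model: word-weight lexicon plus a sigmoid.
WEIGHTS = {"great": 2.0, "boring": -1.5}


def predict_positive(tokens):
    """Return P(positive) for a list of tokens under the toy model."""
    score = sum(WEIGHTS.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def comprehensiveness(tokens, rationale_idx, predict):
    """Probability drop when rationale tokens are removed (higher is more faithful)."""
    kept = [t for i, t in enumerate(tokens) if i not in rationale_idx]
    return predict(tokens) - predict(kept)


def sufficiency(tokens, rationale_idx, predict):
    """Probability drop when only rationale tokens are kept (lower is more faithful)."""
    only = [t for i, t in enumerate(tokens) if i in rationale_idx]
    return predict(tokens) - predict(only)


tokens = "the acting was great".split()
good_rationale = {3}  # selects "great", the token the toy model relies on
bad_rationale = {0}   # selects "the", which the toy model ignores

comp_good = comprehensiveness(tokens, good_rationale, predict_positive)
comp_bad = comprehensiveness(tokens, bad_rationale, predict_positive)
suff_good = sufficiency(tokens, good_rationale, predict_positive)
suff_bad = sufficiency(tokens, bad_rationale, predict_positive)
print(f"good rationale: comp={comp_good:.3f} suff={suff_good:.3f}")
print(f"bad rationale:  comp={comp_bad:.3f} suff={suff_bad:.3f}")
```

Under these assumptions, a rationale that captures the token the model actually uses scores high on comprehensiveness and near zero on sufficiency, while an irrelevant rationale shows the opposite pattern.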

- An Interpretability Evaluation Benchmark for Pre-trained Language Models. Yaozong Shen et al. arXiv 2022.

- Hatexplain: A benchmark dataset for explainable hate speech detection. Binny Mathew et al. AAAI 2021.

- ERASER: A Benchmark to Evaluate Rationalized NLP Models. Jay DeYoung et al. ACL 2020.

- Axiomatic attribution for deep networks. Mukund Sundararajan et al. ICML 2017.

- The (Un)reliability of saliency methods. Pieter-Jan Kindermans et al. arXiv 2017.

- Rethinking Attention-Model Explainability through Faithfulness Violation Test. Yibing Liu et al. ICML 2022.

- Framework for Evaluating Faithfulness of Local Explanations. Sanjoy Dasgupta et al. arXiv 2022.

- Improving the Faithfulness of Attention-based Explanations with Task-specific Information for Text Classification. George Chrysostomou et al. ACL 2021.

- "Will You Find These Shortcuts?" A Protocol for Evaluating the Faithfulness of Input Salience Methods for Text Classification. Jasmijn Bastings et al. arXiv 2022.

- A comparative study of faithfulness metrics for model interpretability methods. Chun Sik Chan et al. ACL 2022.

- Attention is not Explanation. Sarthak Jain et al. NAACL 2019.

- Faithfulness Tests for Natural Language Explanations. Pepa Atanasova et al. ACL 2023.

- Rev: information-theoretic evaluation of free-text rationales. Hanjie Chen et al. ACL 2023.

- Do models explain themselves? counterfactual simulatability of natural language explanations. Yanda Chen et al. arXiv 2023.

- Language models don’t always say what they think: Unfaithful explanations in chain-of-thought prompting. Miles Turpin et al. arXiv 2023.

- Measuring faithfulness in chain-of-thought reasoning. Tamera Lanham et al. arXiv 2023.

- Question decomposition improves the faithfulness of model-generated reasoning. Ansh Radhakrishnan et al. arXiv 2023.

@article{zhao2023explainability,
  title={Explainability for Large Language Models: A Survey},
  author={Zhao, Haiyan and Chen, Hanjie and Yang, Fan and Liu, Ninghao and Deng, Huiqi and Cai, Hengyi and Wang, Shuaiqiang and Yin, Dawei and Du, Mengnan},
  journal={arXiv preprint arXiv:2309.01029},
  year={2023}
}