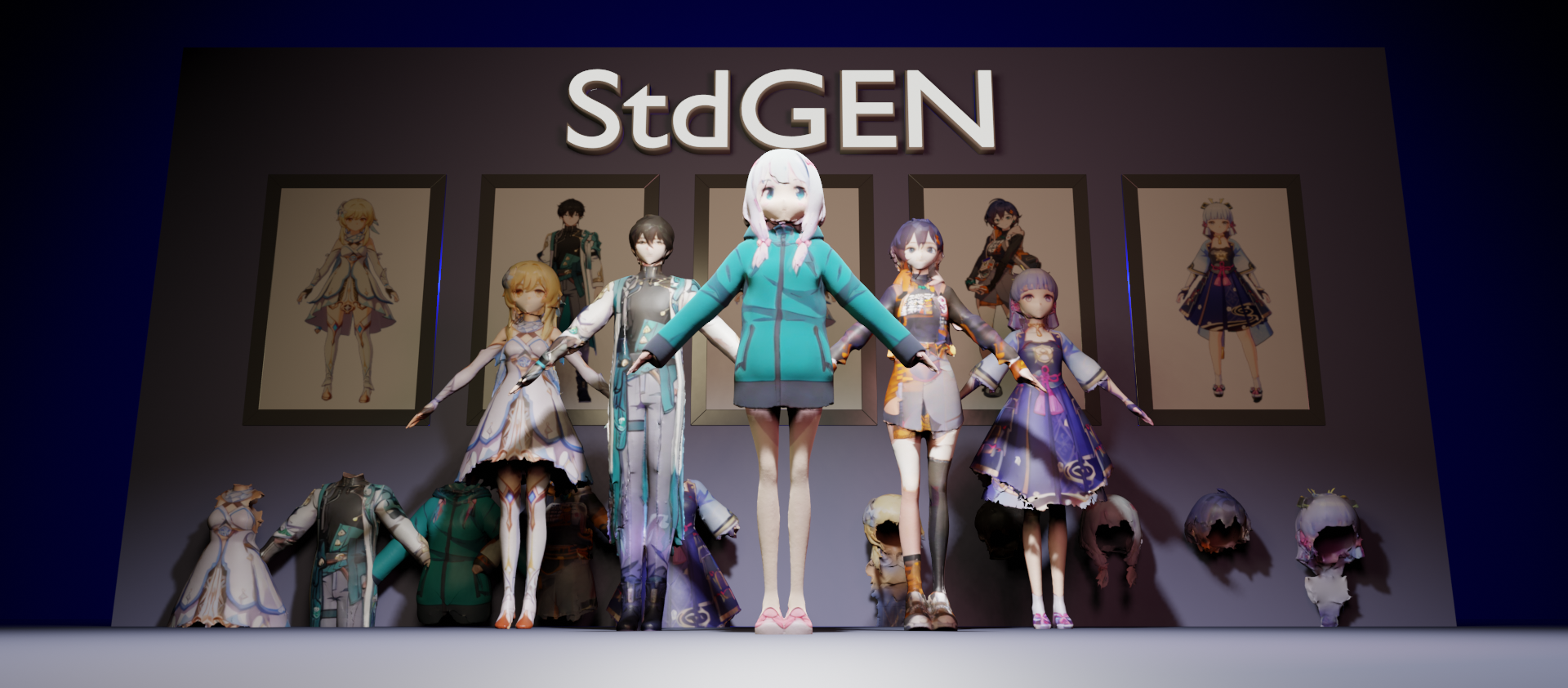

"Std" stands for [S]eman[t]ic-[D]ecomposed and is also inspired by the "std" namespace in C++, for standardization.


[2025/03/17] Online HuggingFace Gradio demo is released!

[2025/03/04] Inference code, dataset, pretrained checkpoints are released!

[2025/02/27] Our paper is accepted by CVPR 2025!

Set up a Python environment and install the required packages:

conda create -n stdgen python=3.9 -y

conda activate stdgen

# Install torch, torchvision, xformers, torch-scatter based on your machine configuration

pip install torch==2.1.0 torchvision==0.16.0 --index-url https://download.pytorch.org/whl/cu118

pip install xformers==0.0.22.post4 --index-url https://download.pytorch.org/whl/cu118

pip install torch-scatter -f https://data.pyg.org/whl/torch-2.1.0+cu118.html

# Install other dependencies

pip install -r requirements.txt

Download the pretrained weights from our 🤗 Hugging Face repo and the ViT-H SAM model, then place the files in the ./ckpt/ directory.
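Before running inference, it can help to confirm that the environment matches the pins above and that the checkpoint directory is populated. The following is a minimal sketch, not part of the repository: the helper names (`installed_version`, `check_versions`, `check_checkpoints`) are hypothetical, and the checkpoint check only tests that ./ckpt/ exists and is non-empty, since the exact file names are not listed here.

```python
from importlib import metadata
from pathlib import Path

# Version pins copied from the install commands above.
PINNED = {
    "torch": "2.1.0",
    "torchvision": "0.16.0",
    "xformers": "0.0.22.post4",
}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_versions(pinned=PINNED, lookup=installed_version):
    """Return a list of readable problems; an empty list means all pins match."""
    problems = []
    for package, expected in pinned.items():
        found = lookup(package)
        if found is None:
            problems.append(f"{package} is not installed (expected {expected})")
        # CUDA wheels may report a local suffix such as "2.1.0+cu118"; ignore it.
        elif found.split("+")[0] != expected:
            problems.append(f"{package} is {found} (expected {expected})")
    return problems


def check_checkpoints(ckpt_dir="./ckpt"):
    """Hypothetical check: the checkpoint directory exists and is not empty."""
    path = Path(ckpt_dir)
    if not path.is_dir():
        return [f"{ckpt_dir} does not exist"]
    if not any(path.iterdir()):
        return [f"{ckpt_dir} is empty"]
    return []


if __name__ == "__main__":
    for problem in check_versions() + check_checkpoints():
        print("WARNING:", problem)
```

If you installed different torch or CUDA builds for your machine, adjust `PINNED` accordingly; the pins here only mirror the example commands.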

# stage 1.1: reference image to A-pose image

python infer_canonicalize.py --input_dir ./input_cases --output_dir ./result/apose

# stage 1.2: A-pose image to multi-view image

# decomposed generation (by default)

python infer_multiview.py --input_dir ./result/apose --output_dir ./result/multiview

# If you only need non-decomposed generation, then run:

python infer_multiview.py --input_dir ./result/apose --output_dir ./result/multiview --num_levels 1

# stage 2: S-LRM reconstruction

python infer_slrm.py --input_dir ./result/multiview --output_dir ./result/slrm

# stage 3: multi-layer refinement

# decomposed generation (by default)

python infer_refine.py --input_mv_dir result/multiview --input_obj_dir ./result/slrm --output_dir ./result/refine

# If you only need non-decomposed generation, then run:

python infer_refine.py --input_mv_dir result/multiview --input_obj_dir ./result/slrm --output_dir ./result/refine --no_decompose

- The script infer_canonicalize.py automatically determines whether to use rm_anime_bg for background removal based on the alpha channel.

- After running infer_canonicalize.py, you can modify the A-pose character images in result/apose to achieve more desirable outcomes.

- The refinement of the hair section partially depends on the hair mask prediction. Check the results in result/refined/CASE_NAME/distract_mask.png to decide whether to adjust the --outside_ratio parameter (default is 0.20). If the mask includes unwanted information, decrease the value; otherwise, increase it.

- If the results are not satisfactory, try experimenting with different seeds.
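The four inference stages above can be chained from a single Python driver. This is a rough sketch rather than a script shipped with the repository: `build_pipeline` and `run_pipeline` are hypothetical helpers, the script names and flags are copied from the commands above, and attaching `--outside_ratio` to infer_refine.py is an assumption based on the hair-mask tip.

```python
import subprocess
import sys


def build_pipeline(input_dir="./input_cases", result_root="./result",
                   decompose=True, outside_ratio=None):
    """Return the inference stages above as a list of argument lists."""
    apose = f"{result_root}/apose"
    multiview = f"{result_root}/multiview"
    slrm = f"{result_root}/slrm"
    refine = f"{result_root}/refine"

    stage_1_1 = ["infer_canonicalize.py", "--input_dir", input_dir,
                 "--output_dir", apose]
    stage_1_2 = ["infer_multiview.py", "--input_dir", apose,
                 "--output_dir", multiview]
    stage_2 = ["infer_slrm.py", "--input_dir", multiview,
               "--output_dir", slrm]
    stage_3 = ["infer_refine.py", "--input_mv_dir", multiview,
               "--input_obj_dir", slrm, "--output_dir", refine]

    if not decompose:
        # Non-decomposed generation uses the two flags shown in the commands above.
        stage_1_2 += ["--num_levels", "1"]
        stage_3 += ["--no_decompose"]
    if outside_ratio is not None:
        # Assumption: --outside_ratio is an option of infer_refine.py.
        stage_3 += ["--outside_ratio", str(outside_ratio)]

    # Use the current interpreter so the active conda environment is reused.
    stages = (stage_1_1, stage_1_2, stage_2, stage_3)
    return [[sys.executable] + stage for stage in stages]


def run_pipeline(commands):
    """Run each stage in order and stop at the first failure."""
    for command in commands:
        print("Running:", " ".join(command))
        subprocess.run(command, check=True)
```

Calling `run_pipeline(build_pipeline())` from the repository root would run the default decomposed pipeline; `build_pipeline(decompose=False)` yields the non-decomposed variant. Running the stages separately remains useful when you want to edit the A-pose images in result/apose between stage 1.1 and stage 1.2.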

Due to policy restrictions, we are unable to redistribute the raw VRM-format 3D character data. However, you can download the Vroid dataset by following the instructions provided in PAniC-3D. In place of the raw data, we provide the train/test data lists and a rendering script for producing images and semantic maps.

First, install Blender and download the VRM Blender Add-on. Then, install the add-on using the following command:

blender --background --python blender/install_addon.py -- VRM_Addon_for_Blender-release.zip

Next, execute the Blender rendering script:

cd blender

python distributed_uniform.py --input_dir /PATH/TO/YOUR/VROIDDATA --save_dir /PATH/TO/YOUR/SAVEDIR --workers_per_gpu 4

The train/test data lists can be found at data/train_list.json and data/test_list.json.
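When preparing data, a quick sanity check on the two list files can catch accidental leakage between splits. The sketch below assumes each file contains a JSON array of entries (for example, model identifiers); that layout is an assumption, and `load_list` and `split_overlap` are hypothetical helpers rather than repository code.

```python
import json
from pathlib import Path


def load_list(path):
    """Load one split file. Assumption: the file holds a JSON array of entries."""
    with Path(path).open(encoding="utf-8") as handle:
        entries = json.load(handle)
    if not isinstance(entries, list):
        raise ValueError(
            f"{path}: expected a JSON array, got {type(entries).__name__}"
        )
    return entries


def split_overlap(train_path="data/train_list.json",
                  test_path="data/test_list.json"):
    """Return the sorted entries that appear in both splits (ideally none)."""
    train = {str(entry) for entry in load_list(train_path)}
    test = {str(entry) for entry in load_list(test_path)}
    return sorted(train & test)
```

If the files turn out to use a different structure, `load_list` raises a `ValueError` instead of silently returning something misleading.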

If you find our work useful, please kindly cite:

@article{he2024stdgen,

title={StdGEN: Semantic-Decomposed 3D Character Generation from Single Images},

author={He, Yuze and Zhou, Yanning and Zhao, Wang and Wu, Zhongkai and Xiao, Kaiwen and Yang, Wei and Liu, Yong-Jin and Han, Xiao},

journal={arXiv preprint arXiv:2411.05738},

year={2024}

}

Some of the code in this repo is borrowed from InstantMesh, Unique3D, Era3D and CharacterGen. We sincerely thank them all.

Our released checkpoints are also for research purposes only. Users are granted the freedom to create models using this tool, but they are obligated to comply with local laws and utilize it responsibly. The developers disclaim responsibility for user-generated content and will not assume any responsibility for potential misuse by users.