Automated README file generator, powered by large language model APIs

Objective

Readme-ai is a developer tool that auto-generates README.md files using a combination of data extraction and generative AI. Simply provide a repository URL or local path to your codebase, and a well-structured, detailed README file will be generated for you.

Motivation

Readme-ai streamlines documentation creation and maintenance, enhancing developer productivity. This project aims to enable developers of all skill levels, across all domains, to better understand, use, and contribute to open-source software.

CLI Usage

Demo video: readmeai-cli-demo.mov

Offline Mode

Demo video: readmeai-streamlit-demo.mov

Tip

Offline mode is useful for generating a boilerplate README at no cost. View the offline README.md example here!

- Flexible README Generation: Robust repository context extraction combined with generative AI.

- Multiple LLM Support: Compatible with OpenAI, Ollama, Google Gemini, and Offline Mode.

- Customizable Output: Dozens of CLI options for styling, badges, header designs, and more.

- Language Agnostic: Works with a wide range of programming languages and project types.

- Offline Mode: Generate a boilerplate README without calling an external API.

See a few examples of the README-AI customization options below:

See the Configuration section for a complete list of CLI options.

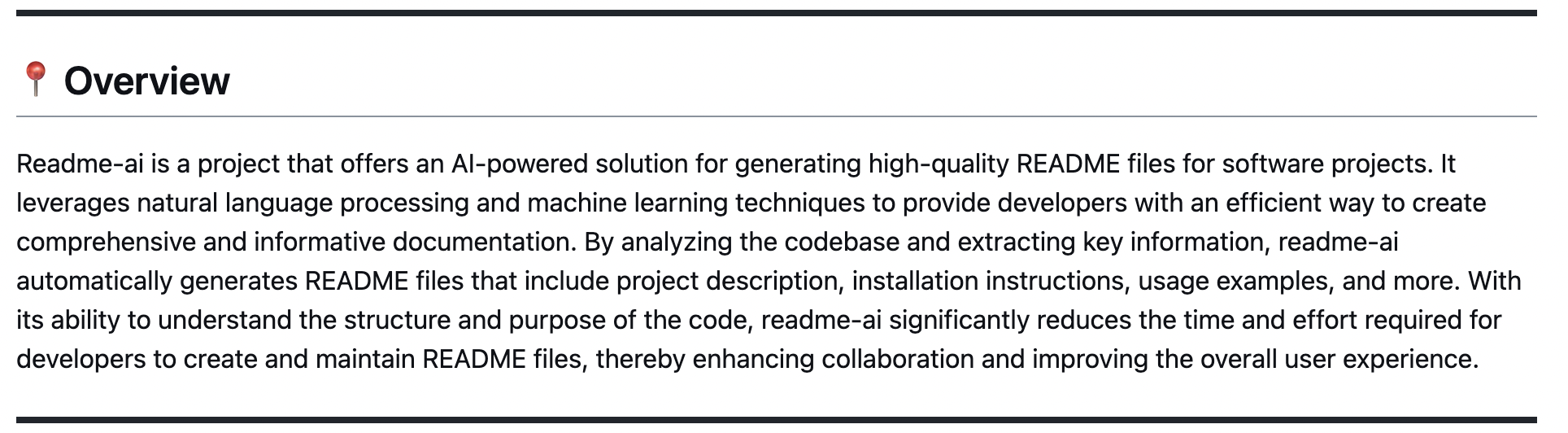

👋 Overview

| Overview

|

|

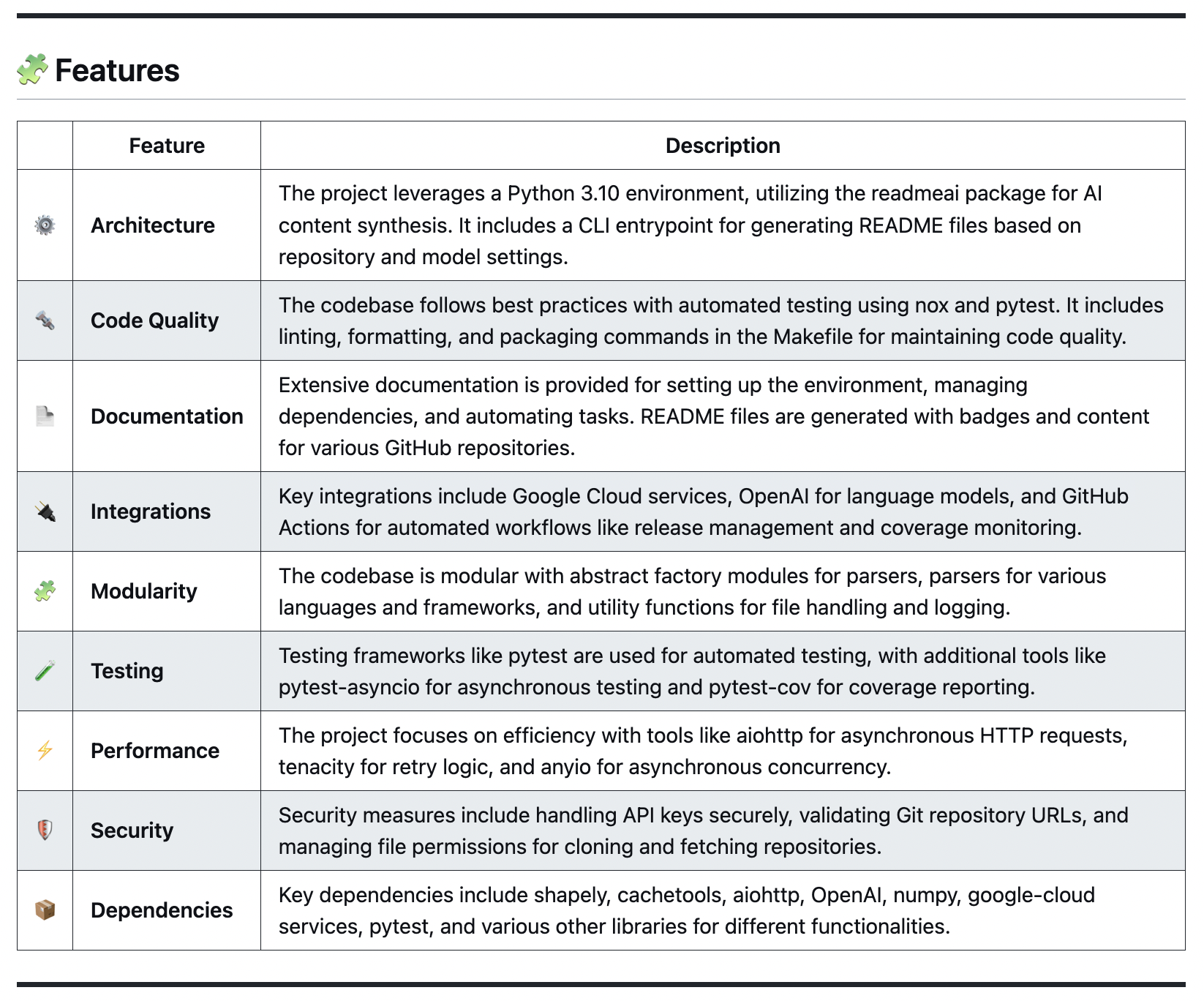

🧩 Features

| Features Table

|

|

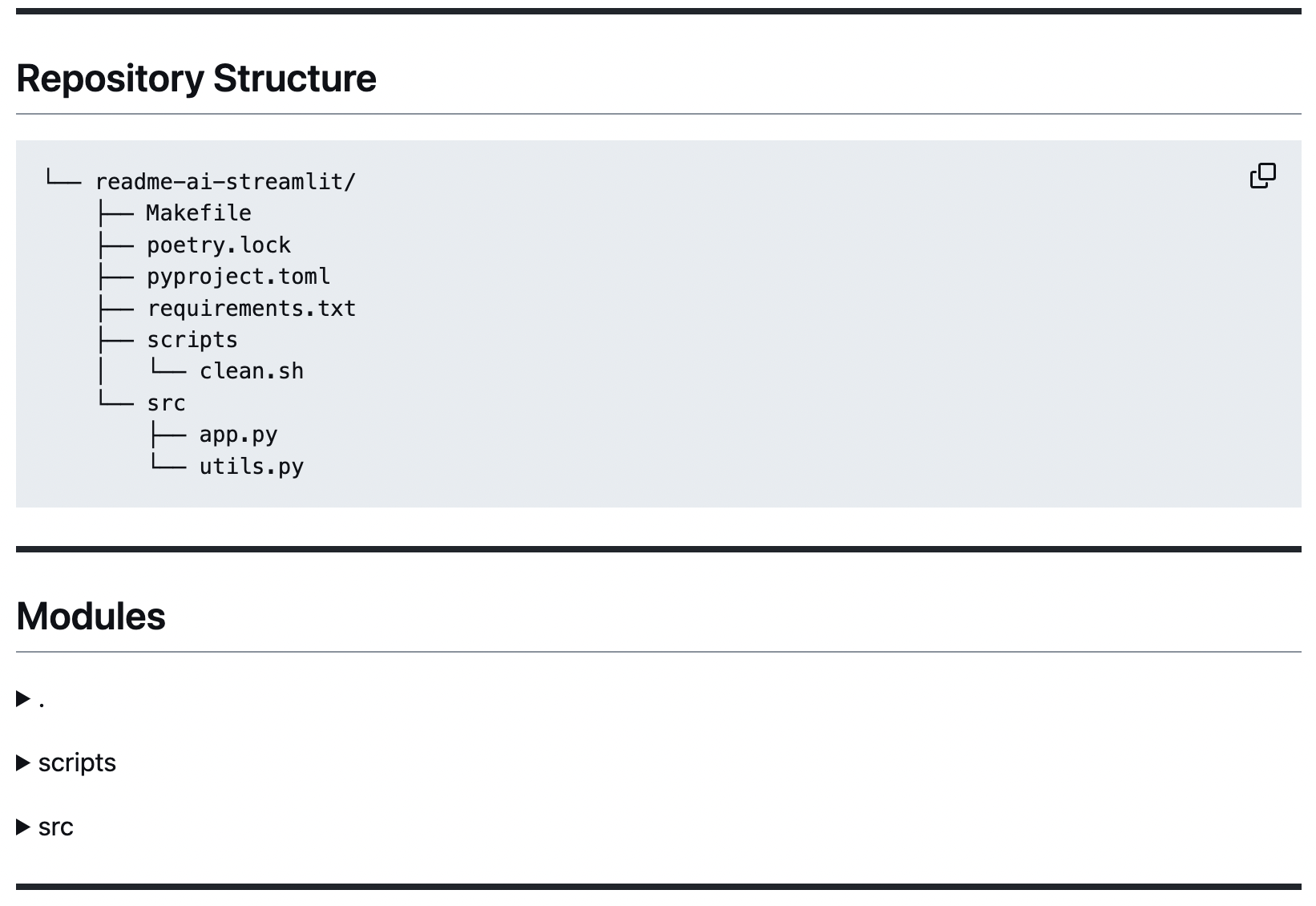

📄 Codebase Documentation

| Repository Structure

|

|

|

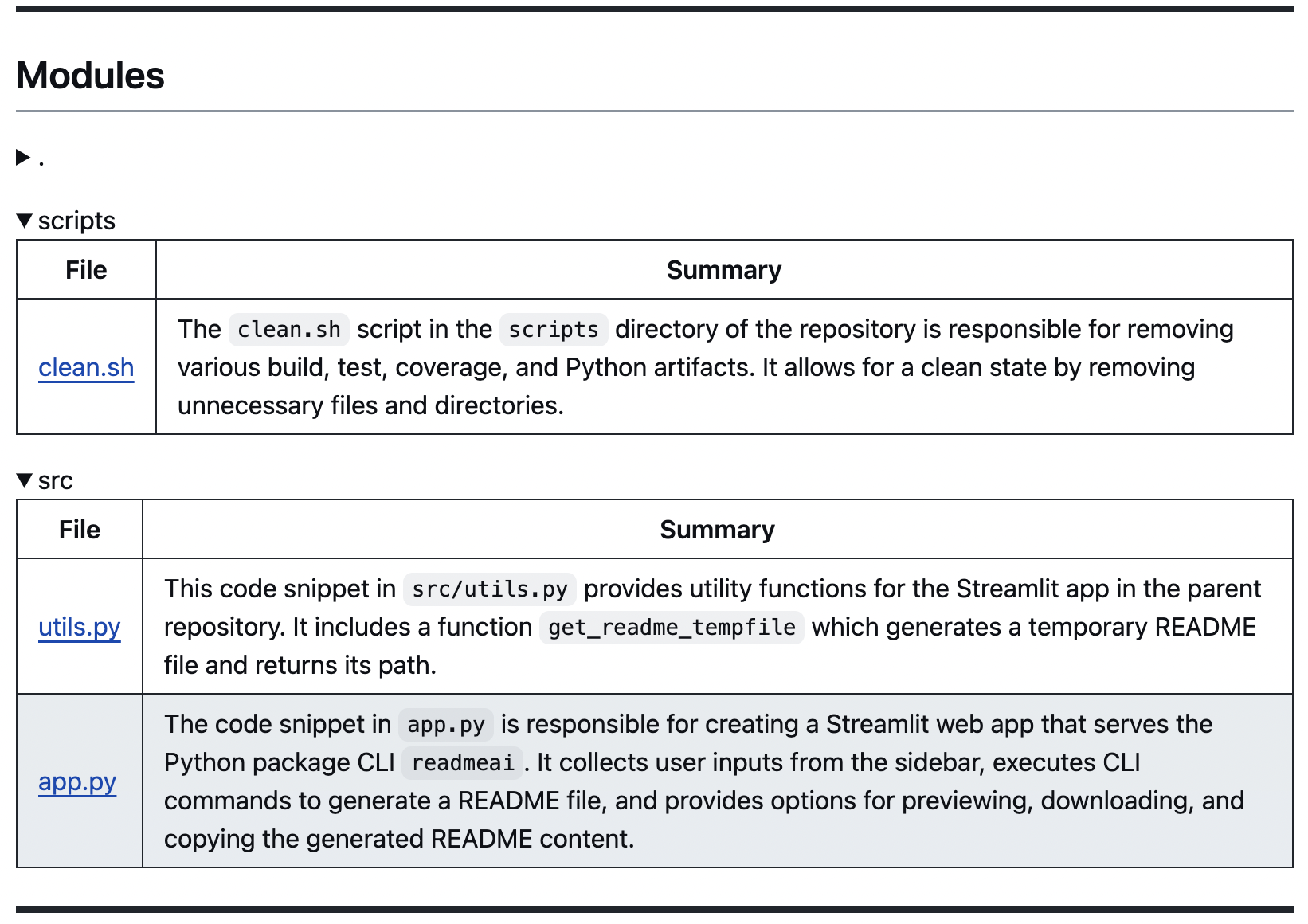

File Summaries

|

|

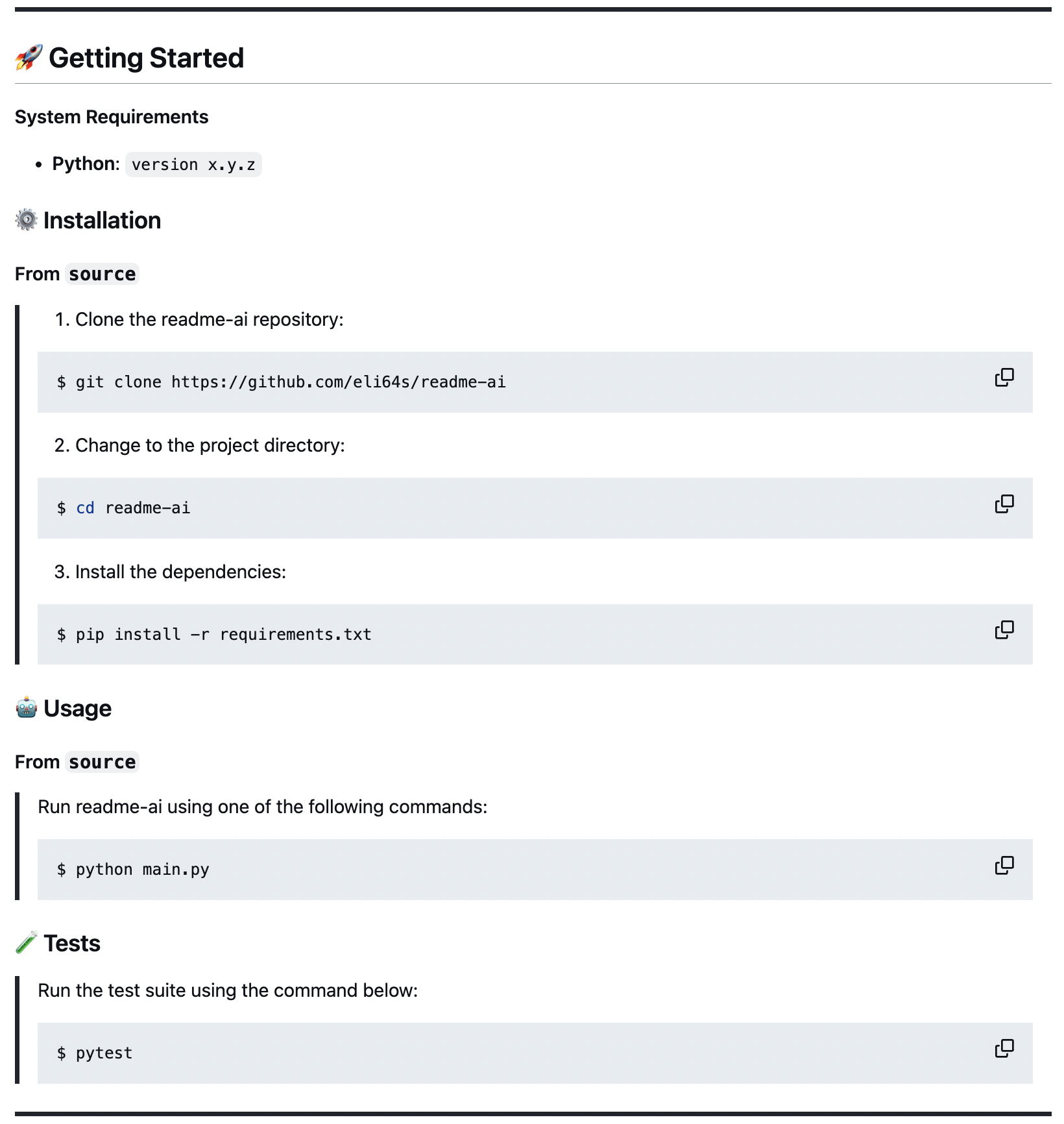

🚀 Quickstart Commands

| Getting Started

Install, Usage, and Test guides are supported for many languages.

|

|

🔰 Contributing Guidelines

System Requirements:

- Python 3.9+

- Package manager/Container: pip, pipx, docker

- LLM service: OpenAI, Ollama, Google Gemini, Offline Mode (Anthropic and LiteLLM coming soon!)

Repository URL or Local Path:

Make sure to have a repository URL or local directory path ready for the CLI.

Select an LLM API Service:

- OpenAI: Recommended, requires an account setup and API key.

- Ollama: Free and open-source, potentially slower and more resource-intensive.

- Google Gemini: Requires a Google Cloud account and API key.

- Offline Mode: Generates a boilerplate README without making API calls.
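If you script readme-ai runs, the choice among these services can be automated. The sketch below is a hypothetical wrapper helper, not part of readme-ai: it picks an `--api` value from the environment variables this guide uses (`OPENAI_API_KEY`, `GOOGLE_API_KEY`, `OLLAMA_HOST`) and falls back to `offline`. The helper name `choose_api` and the priority order are assumptions.

```python
import os
from typing import Mapping, Optional


def choose_api(env: Optional[Mapping[str, str]] = None) -> str:
    """Pick a value for readme-ai's --api option (hypothetical helper, not part of readme-ai).

    Assumed priority: OpenAI key, then Google key, then Ollama host, then offline.
    """
    env = os.environ if env is None else env
    if env.get("OPENAI_API_KEY"):
        return "openai"
    if env.get("GOOGLE_API_KEY"):
        return "gemini"
    # Assumption: a set OLLAMA_HOST means a local Ollama server is intended.
    if env.get("OLLAMA_HOST"):
        return "ollama"
    # No credentials or server configured: fall back to offline mode (no API calls).
    return "offline"
```

Passing a mapping explicitly, such as `choose_api({"GOOGLE_API_KEY": "..."})`, makes the helper easy to test without touching the real environment.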

❯ pip install readmeai

❯ pipx install readmeai

Tip

Use pipx to install and run Python command-line applications without causing dependency conflicts with other packages!

❯ docker pull zeroxeli/readme-ai:latest

Build readme-ai

Clone the repository and navigate to the project directory:

❯ git clone https://github.com/eli64s/readme-ai

❯ cd readme-ai

❯ bash setup/setup.sh

❯ poetry install

OpenAI

Generate an OpenAI API key and set it as the environment variable OPENAI_API_KEY.

# Using Linux or macOS

❯ export OPENAI_API_KEY=<your_api_key>

# Using Windows

❯ set OPENAI_API_KEY=<your_api_key>

Ollama

Pull a model of your choice from the Ollama registry as follows:

# e.g. mistral, llama3, gemma2, etc.

❯ ollama pull mistral:latest

Start the Ollama server:

❯ export OLLAMA_HOST=127.0.0.1 && ollama serve

For more details, check out the Ollama repository.

Google Gemini

Generate a Google API key and set it as the environment variable GOOGLE_API_KEY.

❯ export GOOGLE_API_KEY=<your_api_key>

With OpenAI API:

❯ readmeai --repository https://github.com/eli64s/readme-ai \
    --api openai \
    --model gpt-3.5-turbo

With Ollama:

❯ readmeai --repository https://github.com/eli64s/readme-ai \
    --api ollama \
    --model llama3

With Gemini:

❯ readmeai --repository https://github.com/eli64s/readme-ai \
    --api gemini \
    --model gemini-1.5-flash

Advanced Options:

❯ readmeai --repository https://github.com/eli64s/readme-ai \
    --api openai \
    --model gpt-4-turbo \
    --badge-color blueviolet \
    --badge-style flat-square \
    --header-style compact \
    --toc-style fold \
    --temperature 0.1 \
    --tree-depth 2 \
    --image LLM \
    --emojis

With Docker:

❯ docker run -it \
    -e OPENAI_API_KEY=$OPENAI_API_KEY \
    -v "$(pwd)":/app zeroxeli/readme-ai:latest \
    -r https://github.com/eli64s/readme-ai

Using readme-ai

Using conda:

❯ conda activate readmeai

❯ python3 -m readmeai.cli.main -r https://github.com/eli64s/readme-ai

Using Poetry:

❯ poetry shell

❯ poetry run python3 -m readmeai.cli.main -r https://github.com/eli64s/readme-ai

Testing:

❯ make pytest

❯ nox -f noxfile.py

Tip

Use nox to test the application against multiple Python environments and dependencies!

Customize your README generation using these CLI options:

| Option | Description | Default |
|---|---|---|
| --align | Text alignment in header | center |
| --api | LLM API service (openai, ollama, gemini, offline) | offline |
| --badge-color | Badge color name or hex code | 0080ff |
| --badge-style | Badge icon style type | flat |
| --base-url | Base URL of the LLM API | v1/chat/completions |
| --context-window | Maximum context window of the LLM API | 3999 |
| --emojis | Adds emojis to the README header sections | False |
| --header-style | Header template style | default |
| --image | Project logo image | blue |
| --model | Specific LLM model to use | gpt-3.5-turbo |
| --output | Output filename | readme-ai.md |
| --rate-limit | Maximum API requests per minute | 5 |
| --repository | Repository URL or local directory path | None |
| --temperature | Creativity level for content generation | 0.9 |
| --toc-style | Table of contents template style | bullets |
| --top-p | Top-p (nucleus) sampling probability | 0.9 |
| --tree-depth | Maximum depth of the directory tree structure | 2 |
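When calling readme-ai from another Python tool, it can help to build the argument list from a dictionary of the options above. The sketch below is a hypothetical wrapper, not a readme-ai API: `build_readmeai_command` and `run_readmeai` are illustrative names. It assumes that boolean options such as `--emojis` are bare switches, as in the Advanced Options example, and that every other option takes a single value.

```python
import shutil
import subprocess
from typing import List, Mapping, Optional, Union

OptionValue = Union[str, int, float, bool]


def build_readmeai_command(
    repository: str, options: Optional[Mapping[str, OptionValue]] = None
) -> List[str]:
    """Turn a dict of option names into a readmeai argument list (hypothetical helper)."""
    cmd = ["readmeai", "--repository", repository]
    for name, value in (options or {}).items():
        # Python-friendly keys such as "badge_style" map to CLI flags such as "--badge-style".
        flag = "--" + name.replace("_", "-")
        if isinstance(value, bool):
            # Switches like --emojis take no value; False simply omits the flag.
            if value:
                cmd.append(flag)
            continue
        cmd.extend([flag, str(value)])
    return cmd


def run_readmeai(repository: str, options: Optional[Mapping[str, OptionValue]] = None) -> None:
    """Run the CLI, raising CalledProcessError if it exits with a non-zero status."""
    if shutil.which("readmeai") is None:
        raise FileNotFoundError("readmeai is not on PATH; install it with pip or pipx first")
    subprocess.run(build_readmeai_command(repository, options), check=True)
```

For example, `run_readmeai("https://github.com/eli64s/readme-ai", {"api": "openai", "model": "gpt-4-turbo", "emojis": True})` mirrors part of the Advanced Options command. Passing a list instead of a shell string avoids quoting problems with local paths that contain spaces.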

Tip

For a full list of options, run readmeai --help in your terminal.

The --badge-style option lets you select the style of the default badge set.

| Style | Preview |
|---|---|
| default | |
| flat | |
| flat-square | |
| for-the-badge | |
| plastic | |
| skills | |
| skills-light | |
| social | |

When providing the --badge-style option, readme-ai does two things:

- Formats the default badge set to match the selection (e.g. flat, flat-square, etc.).

- Generates an additional badge set representing your project's dependencies and tech stack (e.g. Python, Docker, etc.).

❯ readmeai --badge-style flat-square --repository https://github.com/eli64s/readme-ai

{... project logo ...}

{... project name ...}

{...project slogan...}

Developed with the software and tools below.

{... end of header ...}

Select a project logo using the --image option.

| blue | gradient | black |
|---|---|---|
| cloud | purple | grey |

For custom images, see the following options:

- Use --image custom to invoke a prompt to upload a local image file path or URL.

- Use --image llm to generate a project logo using an LLM API (OpenAI only).
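Because `--image llm` only works with OpenAI, a wrapper script may want to catch a mismatched `--api` before starting a run. The sketch below is a hypothetical pre-flight check, not readme-ai's own validation. It accepts only the values named in this guide (the six presets, `custom`, and `llm`). Treating `LLM` and `llm` the same is an assumption, based on both spellings appearing in this README.

```python
from typing import Optional

# Preset logo names listed in the --image table above.
PRESET_IMAGES = {"blue", "gradient", "black", "cloud", "purple", "grey"}


def check_image_option(image: str, api: str) -> Optional[str]:
    """Hypothetical check: return an error message, or None if the combination looks valid."""
    value = image.lower()  # assumption: "LLM" and "llm" are equivalent
    if value in PRESET_IMAGES or value == "custom":
        return None
    if value == "llm":
        # Per this README, LLM-generated logos are supported for OpenAI only.
        if api.lower() != "openai":
            return "--image llm requires --api openai"
        return None
    # readme-ai may accept other values; this sketch only knows the documented ones.
    return f"{image!r} is not one of the --image values documented here"
```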

| Language/Framework | Output File | Input Repository | Description |
|---|---|---|---|
| Python | readme-python.md | readme-ai | Core readme-ai project |
| TypeScript & React | readme-typescript.md | ChatGPT App | React Native ChatGPT app |
| PostgreSQL & DuckDB | readme-postgres.md | Buenavista | Postgres proxy server |
| Kotlin & Android | readme-kotlin.md | file.io Client | Android file sharing app |
| Python & Streamlit | readme-streamlit.md | readme-ai-streamlit | Streamlit UI for readme-ai |
| Rust & C | readme-rust-c.md | CallMon | System call monitoring tool |
| Go | readme-go.md | docker-gs-ping | Dockerized Go app |
| Java | readme-java.md | Minimal-Todo | Minimalist todo app |
| FastAPI & Redis | readme-fastapi-redis.md | async-ml-inference | Async ML inference service |
| Python & Jupyter | readme-mlops.md | mlops-course | MLOps course materials |
| Flink & Python | readme-local.md | Local Directory | Example using local files |

Note

See additional README file examples here.

- v1.0 release with new features, bug fixes, and improved performance.

- Develop the readmeai-vscode extension to generate README files (WIP).

- Add new CLI options to enhance README file customization.

  - --audit to review existing README files and suggest improvements.

  - --template to select a README template style (e.g. ai, data, web, etc.)

  - --language to generate README files in any language (e.g. zh-CN, ES, FR, JA, KO, RU)

- Develop a robust documentation generator to build full project docs (e.g. Sphinx, MkDocs)

- Create community-driven templates for README files and gallery of readme-ai examples.

- GitHub Actions script to automatically update README file content on repository push.

To grow the project, we need your help! See the links below to get started.