This is a code implementation of the "High Fidelity Neural Audio Compression" paper by Meta AI.

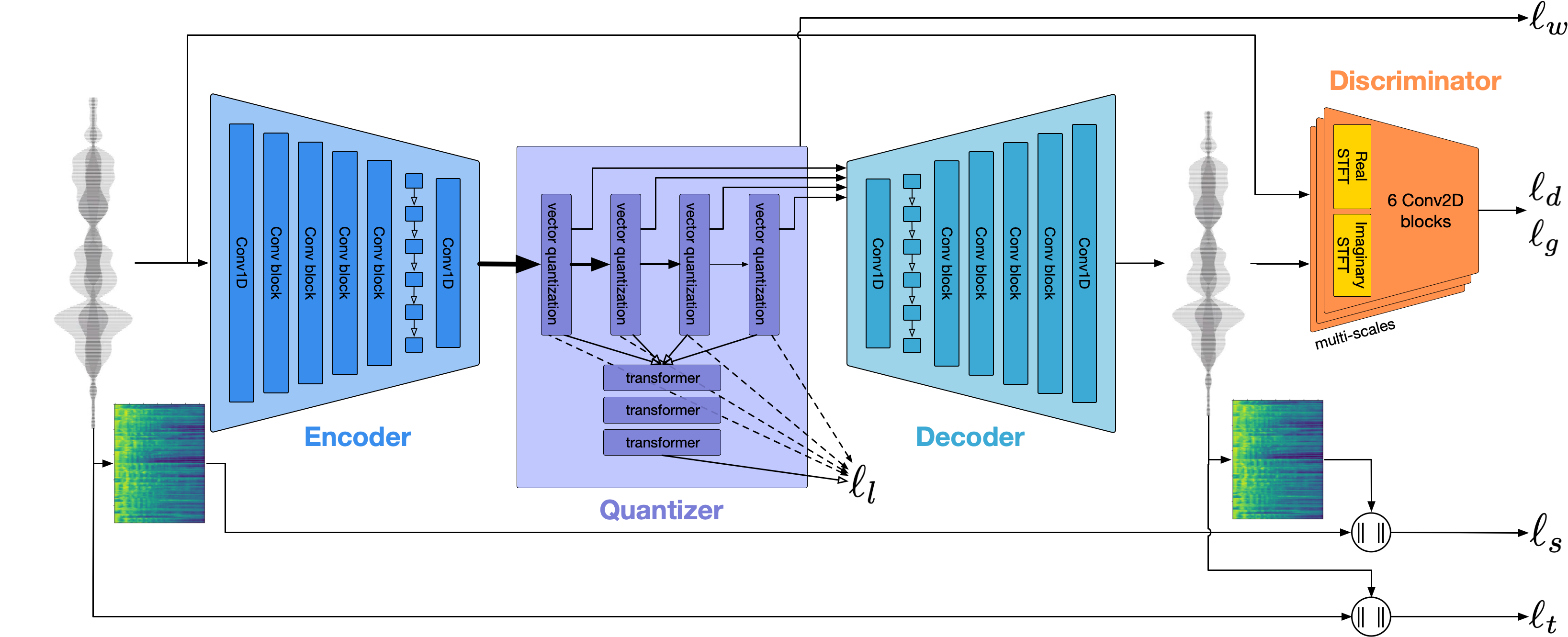

This project aims to reproduce the EnCodec model architecture as described in the paper. The core model consists of a convolution-based encoder-decoder network with a residual vector quantizer (RVQ) in between, which further compresses the latent embeddings into discrete codes.
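To make the role of the RVQ concrete, here is a minimal sketch of residual vector quantization in plain Python. It is not this project's implementation: the function names (`nearest`, `rvq_encode`, `rvq_decode`), the toy codebooks, and the use of plain lists instead of tensors are all assumptions made for illustration. Each stage quantizes whatever the previous stages left unexplained, and decoding sums the selected entries.

```python
def nearest(codebook, vec):
    """Return the index of the codebook entry closest to vec (squared L2)."""
    def dist(entry):
        return sum((e - v) ** 2 for e, v in zip(entry, vec))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))


def rvq_encode(codebooks, vec):
    """Quantize vec stage by stage; each stage encodes the previous residual."""
    residual = list(vec)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen entry so the next stage sees only the leftover error.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codebooks, codes):
    """Sum the selected entry from every stage to rebuild the latent vector."""
    out = [0.0] * len(codebooks[0][0])
    for codebook, idx in zip(codebooks, codes):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Toy example (hypothetical values): two stages, two entries each, 2-dim latents.
toy_codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],   # coarse stage
    [[0.0, 0.0], [0.5, 0.0]],   # refinement stage
]
toy_codes = rvq_encode(toy_codebooks, [1.4, 1.0])
toy_approx = rvq_decode(toy_codebooks, toy_codes)
```

Using fewer stages at decode time gives a coarser reconstruction at a lower bitrate, which is what makes a single RVQ usable across several target bandwidths.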

A multi-scale STFT (MS-STFT) discriminator is trained alongside the model, and its adversarial losses are used to further improve the output audio quality.
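The paper formulates the adversarial objective as a hinge loss averaged over the discriminators. The sketch below shows that formulation on plain lists of discriminator outputs (logits). The function names and the list-based inputs are assumptions for illustration, and this project's own loss code may be organized differently.

```python
def generator_hinge_loss(fake_logits_per_disc):
    """Generator term: mean over discriminators of mean(max(0, 1 - D_k(x_hat)))."""
    total = 0.0
    for logits in fake_logits_per_disc:
        total += sum(max(0.0, 1.0 - v) for v in logits) / len(logits)
    return total / len(fake_logits_per_disc)


def discriminator_hinge_loss(real_logits_per_disc, fake_logits_per_disc):
    """Discriminator term: push real logits above +1 and fake logits below -1."""
    total = 0.0
    for real, fake in zip(real_logits_per_disc, fake_logits_per_disc):
        real_term = sum(max(0.0, 1.0 - v) for v in real) / len(real)
        fake_term = sum(max(0.0, 1.0 + v) for v in fake) / len(fake)
        total += real_term + fake_term
    return total / len(real_logits_per_disc)
```

Each inner list stands for the outputs of one discriminator scale, so a model with several STFT scales passes one list per scale.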

The entire model is trained on multiple loss components, including reconstruction loss, perceptual loss and discriminator losses. Each loss term is scaled by a coefficient to balance its contribution to the total:

- `l_g`: adversarial loss for the generator
- `l_feat`: relative feature matching loss for the generator
- `l_w`: commitment loss for the RVQ
- `l_f`: linear combination of L1 and L2 losses across the frequency domain on a mel scale
- `l_t`: L1 loss across the time domain
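As a rough illustration of how these terms combine, the sketch below computes a time-domain L1 loss on two short waveforms and then forms the weighted sum of all five terms. The helper names and the coefficient values are placeholders chosen for the example; the coefficients actually used in this project are not stated here.

```python
def l1_time_loss(target, estimate):
    """Mean absolute error between two equal-length waveforms (the l_t term)."""
    return sum(abs(t - e) for t, e in zip(target, estimate)) / len(target)


def total_generator_loss(losses, weights):
    """Weighted sum of the individual loss terms, keyed by name."""
    return sum(weights[name] * value for name, value in losses.items())


# Placeholder coefficients for illustration only (assumption, not project values).
example_weights = {"l_t": 0.1, "l_f": 1.0, "l_g": 3.0, "l_feat": 3.0, "l_w": 1.0}

# Hypothetical per-term values, with l_t computed from two toy waveforms.
example_losses = {
    "l_t": l1_time_loss([0.0, 0.5, -0.5, 1.0], [0.1, 0.5, -0.25, 0.5]),
    "l_f": 1.5,
    "l_g": 0.8,
    "l_feat": 0.4,
    "l_w": 0.05,
}
example_total = total_generator_loss(example_losses, example_weights)
```

Keeping the weights in a single dictionary makes it easy to see which term dominates the total and to retune one coefficient without touching the others.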

The entire model was trained on the LibriSpeech ASR corpus development dataset with the following hyperparameters:

num_epochs = 50

batch_size = 2

sample_rate = 24000

learning_rate = 0.001

target_bandwidths=[1.5, 3, 6, 12, 24]

norm = 'weight_norm'

causal=False

Released under the MIT license as found in the LICENSE file.
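For reference, the `target_bandwidths` values in the hyperparameters above (in kbps) can be related to the number of RVQ codebooks used at each bitrate. The sketch below assumes the paper's 24 kHz configuration, a total encoder stride of 320 samples (75 latent frames per second) and 1024 entries per codebook (10 bits per code). Those two numbers are not stated in this README, and `num_codebooks` is a hypothetical helper.

```python
import math


def num_codebooks(bandwidth_kbps, sample_rate=24000, hop_length=320, codebook_size=1024):
    """Number of RVQ codebooks that fit in the given bandwidth.

    hop_length and codebook_size are assumed values, not read from this project.
    """
    frame_rate = sample_rate / hop_length            # latent frames per second
    bits_per_code = math.log2(codebook_size)         # bits carried by one code
    bps_per_codebook = frame_rate * bits_per_code    # bitrate of a single codebook
    return int(bandwidth_kbps * 1000 // bps_per_codebook)


codebooks_per_bandwidth = {bw: num_codebooks(bw) for bw in [1.5, 3, 6, 12, 24]}
# → {1.5: 2, 3: 4, 6: 8, 12: 16, 24: 32}
```

Under these assumptions each codebook contributes 750 bits per second, so doubling the target bandwidth doubles the number of codebooks in use.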