This is an OpenAI chat web application that uses Flask as its backend. The app is intended to be deployed to AWS.

- Accessing the web app over port 5000 using Gunicorn/Flask

- HTML, CSS and JavaScript frontend for sending chat messages

- Sending text to the backend, where it is wrapped in a HumanMessage object from the LangChain library

- Sending the HumanMessage object to the OpenAI API to get an AIMessage object with the answer

- Saving both the HumanMessage request and the AIMessage response to a SQLite database

- Continuously updating the frontend using the database content
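The request/response flow listed above can be sketched with the standard library alone. This is a minimal illustration, not the app's actual code: the `Message` dataclass stands in for LangChain's `HumanMessage` and `AIMessage`, `echo_model` stands in for the OpenAI call, and the table schema and function names are hypothetical.

```python
import sqlite3
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Message:
    """Stand-in for LangChain's HumanMessage / AIMessage (role and text only)."""
    role: str      # "human" or "ai"
    content: str


def init_db(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per message, ordered by insertion id.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "role TEXT NOT NULL, content TEXT NOT NULL)"
    )


def handle_chat(
    conn: sqlite3.Connection,
    text: str,
    ask_model: Callable[[Message], Message],
) -> Message:
    """Wrap the text, ask the model, and store both sides of the exchange."""
    request = Message("human", text)
    response = ask_model(request)
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO messages (role, content) VALUES (?, ?)",
            [(request.role, request.content), (response.role, response.content)],
        )
    return response


def load_history(conn: sqlite3.Connection) -> List[Tuple[str, str]]:
    """Return all stored messages in order, e.g. for refreshing the frontend."""
    return conn.execute(
        "SELECT role, content FROM messages ORDER BY id"
    ).fetchall()


def echo_model(message: Message) -> Message:
    # Placeholder for the real OpenAI call; it only echoes the input.
    return Message("ai", f"You said: {message.content}")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    init_db(conn)
    handle_chat(conn, "Hello", echo_model)
    print(load_history(conn))
```

In the real app, `ask_model` would presumably call the OpenAI API through LangChain, and the frontend would poll an endpoint backed by something like `load_history`.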

Create and activate a virtual environment

python3.9 -m venv venv

source venv/bin/activate

Install dependencies

pip install -r requirements.txt

(Optional) Create a virtual environment with DevOps-related dependencies

python3.9 -m venv venv-dev

source venv-dev/bin/activate

pip install -r requirements-dev.txt

Set the OpenAI API key to access the LLM

export OPENAI_API_KEY=...

Run the app locally

python3 run.py

Open the app in the browser at http://localhost:5000 or http://127.0.0.1:5000

Building the docker container

VERSION=...

docker build --build-arg OPENAI_API_KEY=${OPENAI_API_KEY} -t flaskgpt:${VERSION} .

Running the app locally using Docker

docker run -it -p 5000:5000 flaskgpt:${VERSION}

demo.mp4

The instructions for deploying to AWS can be found in the devops folder.

Requirements:

- AWS account

- OpenAI account with sufficient credits and API token

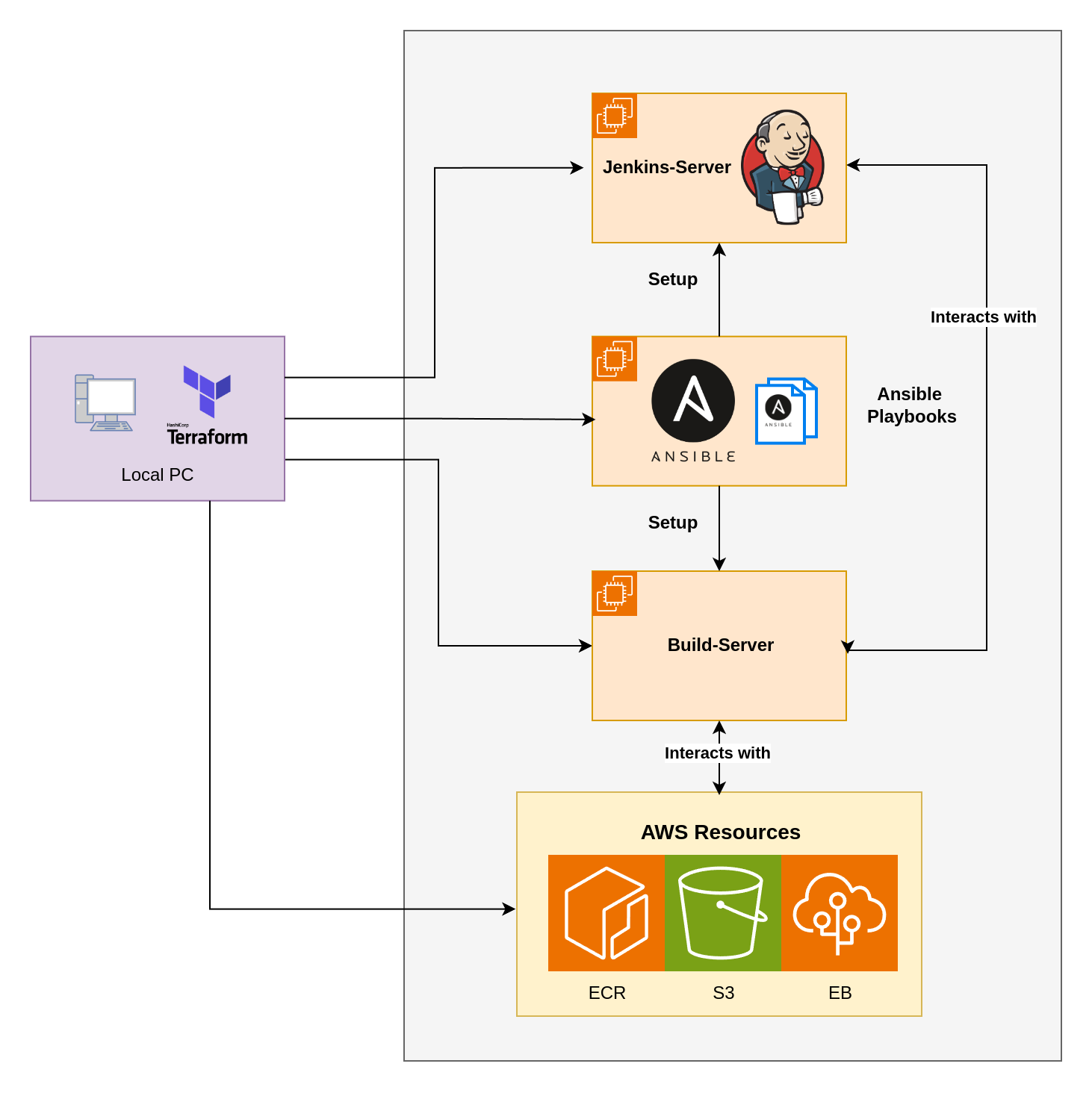

- Using Terraform to provision:
  - Ansible-Server (EC2): Server for setting up the programs and dependencies of the build server and the Jenkins server (using Ansible playbooks)
  - Jenkins-Server (EC2): For orchestrating the CI/CD process in conjunction with the build server
  - Build-Server (EC2): Server that handles everything related to building, executing and deploying code on behalf of the Jenkins server
  - Elastic Container Registry (ECR): Used as artifact storage for the dockerized code that was built in the Jenkins pipeline
  - S3 Bucket (S3): Storage for the deployment configuration files of AWS Elastic Beanstalk (EB)
  - Elastic Beanstalk (EB): Resource for deploying the code on AWS (requires S3 with the config and ECR with the dockerized program)

Steps of Pipeline:

- Cloning the Git repo on each git push (triggered by a webhook)

- Setting up a virtualenv with dependencies and obtaining version information from pyproject.toml

- Testing and linting the web app's Python code with Pytest and Pylint

- Dockerizing the code and pushing it to AWS Elastic Container Registry (ECR) to be retrieved later during deployment

- Dynamically creating the AWS Elastic Beanstalk (EB) config (Dockerrun.aws.json) and saving it to a dedicated S3 bucket

- Deploying Docker container from ECR to EB

- Access the OpenAI chatbot webapp with the URL that is provided by AWS EB
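Two of the pipeline steps above, reading the version from pyproject.toml and generating Dockerrun.aws.json, could look roughly like the following. This is a sketch under assumptions, not the pipeline's actual script: the function names are hypothetical, the version is found with a simple regex (the project targets Python 3.9, which has no built-in TOML parser), the config uses the single-container `AWSEBDockerrunVersion` 1 layout, and the account ID and region in the image URI are placeholders.

```python
import json
import re


def read_version(pyproject_text: str) -> str:
    """Return the first line-leading `version = "..."` value in pyproject.toml text."""
    match = re.search(
        r'^version\s*=\s*"([^"]+)"', pyproject_text, flags=re.MULTILINE
    )
    if match is None:
        raise ValueError("no version field found in pyproject.toml text")
    return match.group(1)


def build_dockerrun(image_uri: str, container_port: int = 5000) -> str:
    """Render a single-container Dockerrun.aws.json document as a JSON string."""
    config = {
        "AWSEBDockerrunVersion": "1",
        # "Update": "true" asks Elastic Beanstalk to pull the image on deploy.
        "Image": {"Name": image_uri, "Update": "true"},
        "Ports": [{"ContainerPort": container_port}],
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Sample pyproject.toml content; the real file is read from the repo.
    sample = '[project]\nname = "flaskgpt"\nversion = "0.1.0"\n'
    version = read_version(sample)
    # Placeholder ECR URI: account ID and region are made up for illustration.
    image = f"123456789012.dkr.ecr.eu-central-1.amazonaws.com/flaskgpt:{version}"
    print(build_dockerrun(image))
```

The resulting JSON string would then be uploaded to the S3 bucket before the Elastic Beanstalk deployment step; how the pipeline performs that upload is not shown here.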