This is the official implementation of Manydepth2.

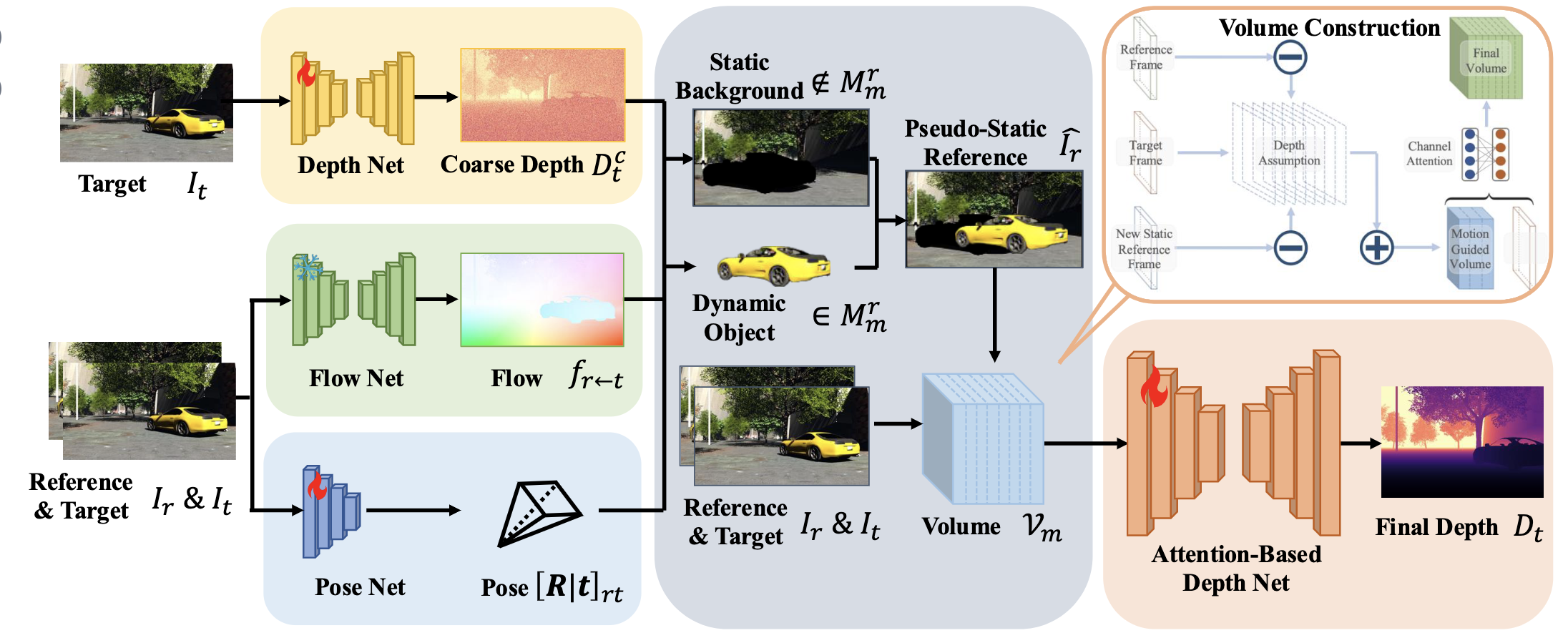

We introduce Manydepth2, a Motion-Guided Depth Estimation Network, to achieve precise monocular self-supervised depth estimation for both dynamic objects and static backgrounds.

- ✅ Self-supervised: Training from monocular video. No depths or poses are needed at training or test time.

- ✅ Accurate: Accurate depth estimation for both dynamic objects and static background.

- ✅ Efficient: Only one forward pass at test time. No test-time optimization needed.

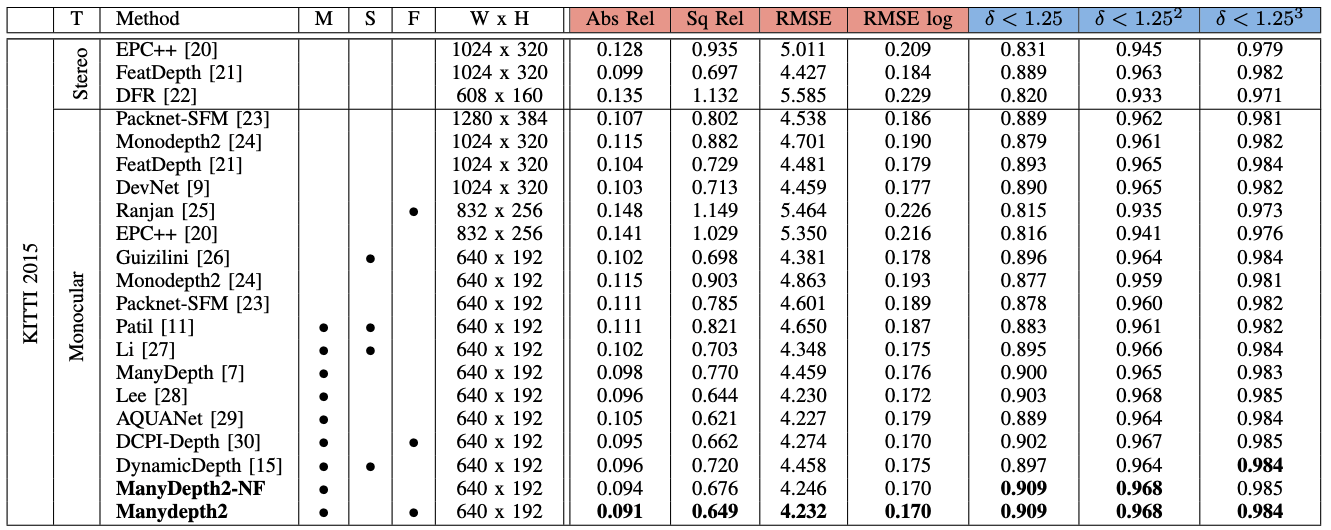

- ✅ State-of-the-art: Self-supervised monocular-trained depth estimation on KITTI.

- ✅ Easy to implement: No need to pre-compute any information.

- ✅ Multiple-Choice: Offers both motion-aware and standard versions, both of which perform effectively.

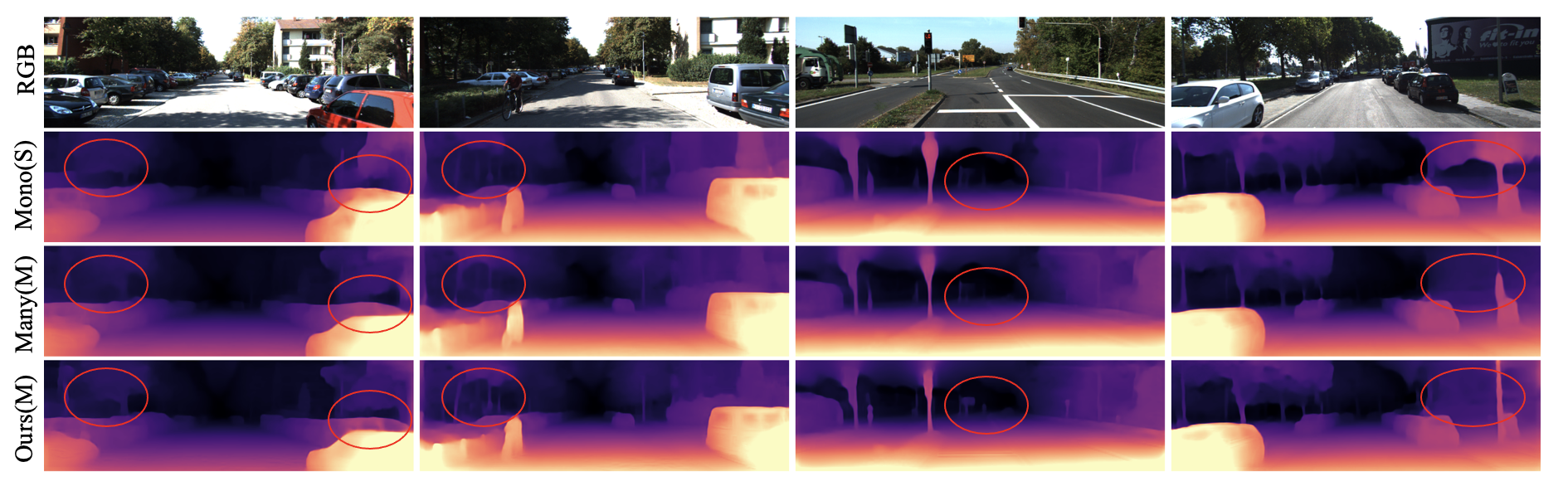

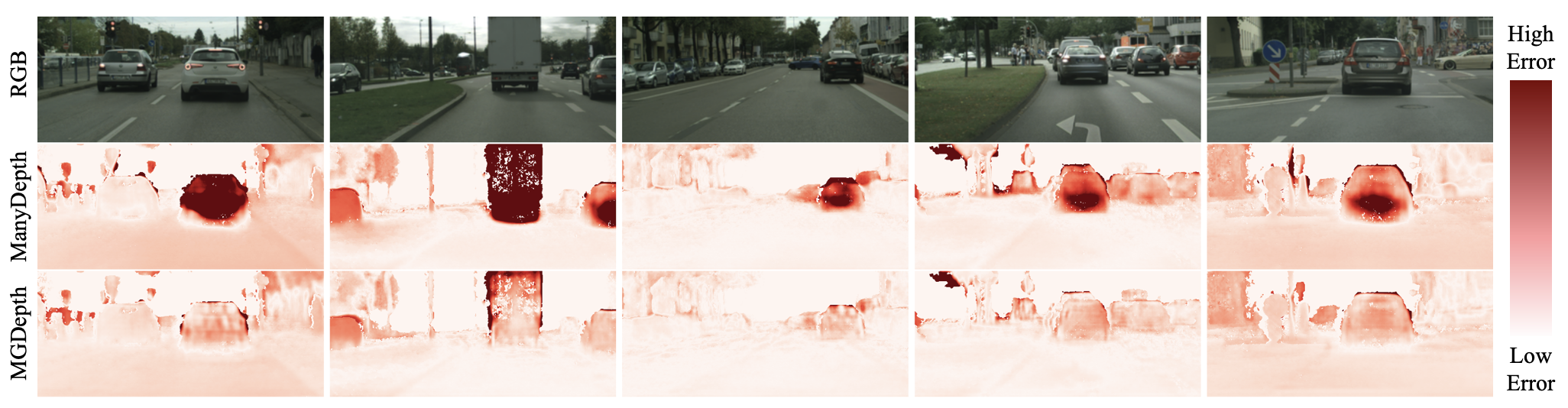

Manydepth constructs the cost volume using both the reference and target frames, but it overlooks the dynamic foreground, which can lead to significant errors when handling dynamic objects:

This phenomenon arises from the fact that real optical flow consists of both static and dynamic components. To construct an accurate cost volume for depth estimation, it is essential to extract the static flow. The entire pipeline of our approach can be summarized as follows:

In our paper, we:

- Propose a method to construct a static reference frame using optical flow to mitigate the impact of dynamic objects.

- Build a motion-aware cost volume leveraging the static reference frame.

- Integrate both channel attention and non-local attention into the framework to enhance performance further.
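The first two contributions can be illustrated with a toy sketch. It is not the repository's implementation: it assumes a pinhole camera, a pure camera translation with no rotation, and scalar features on a single scanline, and every function name below is hypothetical. `static_flow` computes the flow that camera motion alone induces at a pixel, `dynamic_flow` is what remains of the observed flow once that part is subtracted, and `cost_volume_1d` scores a set of depth hypotheses by comparing target features with correspondingly shifted reference features.

```python
def static_flow(u, v, depth, fx, fy, cx, cy, t):
    """Flow induced at pixel (u, v) by a pure camera translation t = (tx, ty, tz)."""
    # Back-project the pixel to a 3D point using its depth.
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    z = depth
    # Move the point into the other camera frame (rotation omitted: an assumption).
    x2, y2, z2 = x + t[0], y + t[1], z + t[2]
    # Re-project and take the pixel displacement.
    u2 = fx * x2 / z2 + cx
    v2 = fy * y2 / z2 + cy
    return (u2 - u, v2 - v)


def dynamic_flow(total, static):
    """Residual flow left after removing the camera-induced (static) part."""
    return (total[0] - static[0], total[1] - static[1])


def cost_volume_1d(target, reference, depths, fx, tx):
    """Return volume[d][u]: absolute feature difference per depth hypothesis."""
    width = len(target)
    volume = []
    for depth in depths:
        # Horizontal pixel shift implied by this depth under translation tx.
        shift = round(fx * tx / depth)
        row = []
        for u in range(width):
            src = u + shift
            if 0 <= src < width:
                row.append(abs(target[u] - reference[src]))
            else:
                row.append(float("inf"))  # hypothesis falls outside the image
        volume.append(row)
    return volume


def best_depth(volume, depths, u):
    """Pick the depth hypothesis with the lowest matching cost at pixel u."""
    costs = [row[u] for row in volume]
    return depths[costs.index(min(costs))]


if __name__ == "__main__":
    rigid = static_flow(50.0, 40.0, 10.0, 100.0, 100.0, 50.0, 40.0, (1.0, 0.0, 0.0))
    print("static flow:", rigid)
    print("dynamic residual:", dynamic_flow((13.0, -2.0), rigid))
```

In this toy setting a moving object leaves a non-zero dynamic residual. Matching against a reference frame that still contains that residual would bias the lowest-cost depth, which is the motivation for building the cost volume from a static reference frame.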

Our contributions enable accurate depth estimation on both the KITTI and Cityscapes datasets:

If you find our work useful or interesting, please cite our paper:

Manydepth2: Motion-Aware Self-Supervised Monocular Depth Estimation in Dynamic Scenes

Kaichen Zhou, Jia-Wang Bian, Qian Xie, Jian-Qing Zheng, Niki Trigoni, Andrew Markham

Our Manydepth2 method outperforms all previous methods in all subsections across most metrics with the same input size, whether or not the baselines use multiple frames at test time. See our paper for full details.

For instructions on downloading the KITTI dataset, see Monodepth2.

Make sure you have first run export_gt_depth.py to extract ground truth files.

You can also download the ground truth files from KITTI_GT and place them under splits/eigen/.

For instructions on downloading the Cityscapes dataset, see SfMLearner.

To replicate the results from our paper, please first create and activate the provided environment:

conda env create -f environment.yaml

Once all packages have been installed successfully, please execute the following command:

conda activate manydepth2

Next, please download the pretrained FlowNet weights from Weights For GMFLOW and place them under /pretrained.

After preparing the datasets and environment, you can train Manydepth2 by running:

sh train_many2.sh

To reproduce the results on Cityscapes, we froze the teacher model at the 5th epoch and set the height to 192 and the width to 512.

To train Manydepth2-NF, please run:

sh train_many2-NF.sh

To evaluate a model on KITTI, run:

sh eval_many2.sh

To evaluate a model without optical flow, run:

sh eval_many2-NF.sh

Running Manydepth:

sh train_many.sh

To evaluate Manydepth on KITTI, run:

sh eval_many.sh

You can download the weights for several pretrained models here and save them in the following directory:

    --logs
        --models_many
            --...
        --models_many2
            --...
        --models_many2-NF
            --...
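Before running the evaluation scripts, it can help to confirm that the weight folders are where they are expected. The following is a minimal sketch, not part of the repository: the folder names come from the listing above, while nesting them under `logs` and the helper name `missing_model_dirs` are assumptions.

```python
from pathlib import Path

# Folder names from the listing above; nesting them under `logs` is an assumption.
EXPECTED_MODEL_DIRS = ("models_many", "models_many2", "models_many2-NF")


def missing_model_dirs(root="logs"):
    """Return the expected model folders that do not exist under `root`."""
    root = Path(root)
    return [name for name in EXPECTED_MODEL_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_model_dirs()
    if missing:
        print("Missing weight folders:", ", ".join(missing))
    else:
        print("All pretrained weight folders found.")
```

Run it from the repository root. An empty result means all three folders exist, although it does not verify the checkpoint files inside them.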

Many thanks to GMFlow, SfMLearner, Monodepth2, and Manydepth.