Scripts, figures, and working notes from our participation in FungiCLEF 2022, part of the LifeCLEF lab at the 13th CLEF Conference (2022).

Implementation stack: Python, Keras/TensorFlow, XGBoost, scikit-learn.

The following references will help in reproducing this implementation and in extending the experiments for further analysis.

If you find our work useful in your research, don't forget to cite us!

@article{desingu2022classification,

url = {https://ceur-ws.org/Vol-3180/paper-162.pdf},

title={Classification of fungi species: A deep learning based image feature extraction and gradient boosting ensemble approach},

author={Desingu, Karthik and Bhaskar, Anirudh and Palaniappan, Mirunalini and Chodisetty, Eeswara Anvesh and Bharathi, Haricharan},

keywords={Ensemble Learning, Convolutional Neural Networks, Gradient Boosting Ensemble, Metadata-aided Classification, Image Classification, Transfer Learning},

journal={Conference and Labs of the Evaluation Forum},

publisher={Conference and Labs of the Evaluation Forum},

year={2022},

ISSN={1613-0073},

copyright = {Creative Commons Attribution 4.0 International}

}

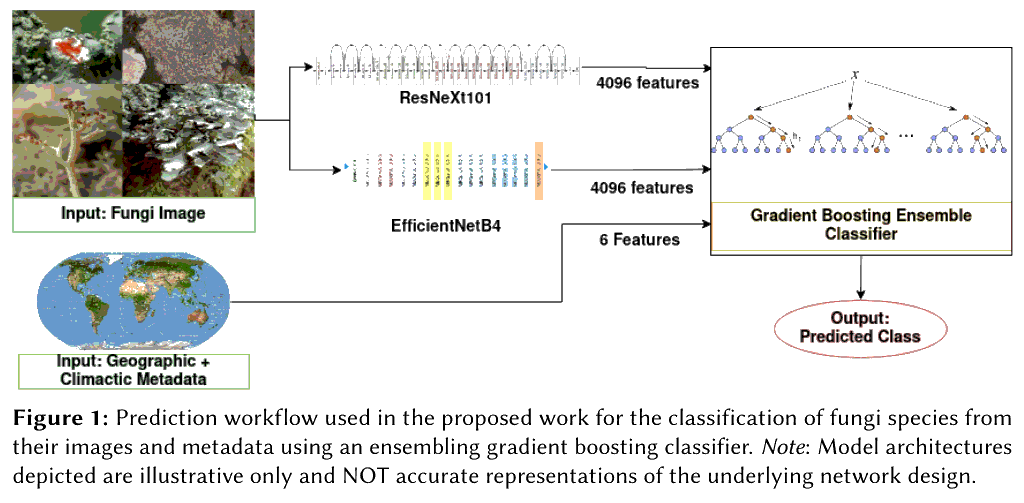

- A deep-learning-based feature extraction pipeline, followed by a boosting ensemble, is proposed for fungi species classification.

- Leverages state-of-the-art deep learning architectures such as ResNeXt and EfficientNet, among others, and fine-tunes them on a fungi image dataset via transfer learning so that they can serve as feature extractors.

- Finally, integrates the output representation vectors with geographic metadata to train a gradient boosting ensemble classifier that predicts the fungi species.

- The authors trained multiple deep learning architectures, assessed their individual performance, and selected effective feature extraction models.

- Each observation in the dataset consists of one or more fungus photographs together with contextual metadata: geographic information at four levels (the country, plus the area where the photograph was taken at three levels of precision), along with attributes such as substrate and habitat.

- Each image in an observation is preprocessed and passed through the two feature extraction networks, producing two 4096-element representation vectors.

- These vectors are concatenated with the numerically encoded country, location (at three levels of precision), substrate, and habitat metadata of the image to produce a final vector of 8198 elements (2 × 4096 + 6).

- The boosting ensemble classifier takes all 8198 features as input and outputs a probability distribution over all candidate fungi species classes.

- The class probability values collected for each image in an observation are averaged to obtain a single aggregate distribution of probabilities over all classes.
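The per-image feature assembly and per-observation averaging described above can be sketched in plain Python as follows. This is a minimal illustration, not the repository's code: it assumes the two 4096-element CNN embeddings have already been computed, and the metadata field names (`country`, `level0` to `level2`, `substrate`, `habitat`), the helper functions, and the first-seen integer label-encoding scheme are all hypothetical.

```python
from typing import Dict, List, Mapping, Sequence

EMBED_DIM = 4096  # length of each CNN representation vector (from the text)

# Hypothetical field names: country, location at three levels, substrate, habitat.
META_FIELDS = ("country", "level0", "level1", "level2", "substrate", "habitat")


def encode_metadata(record: Mapping[str, str],
                    vocab: Dict[str, Dict[str, int]]) -> List[int]:
    """Label-encode each categorical metadata value as an integer.

    Assumption: codes are assigned in order of first appearance; a real
    pipeline would fit the vocabulary on the training data only.
    """
    codes = []
    for field in META_FIELDS:
        mapping = vocab.setdefault(field, {})
        # len(mapping) is evaluated before insertion, so a new value gets the next code
        codes.append(mapping.setdefault(record[field], len(mapping)))
    return codes


def build_feature_vector(emb_a: Sequence[float], emb_b: Sequence[float],
                         meta_codes: Sequence[int]) -> List[float]:
    """Concatenate both embeddings and the encoded metadata: 2 * 4096 + 6 = 8198."""
    if len(emb_a) != EMBED_DIM or len(emb_b) != EMBED_DIM:
        raise ValueError("each embedding must have 4096 elements")
    if len(meta_codes) != len(META_FIELDS):
        raise ValueError("expected one code per metadata field")
    return [*emb_a, *emb_b, *(float(c) for c in meta_codes)]


def aggregate_observation(per_image_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average the class-probability vectors of all images in one observation."""
    if not per_image_probs:
        raise ValueError("an observation needs at least one image")
    n = len(per_image_probs)
    return [sum(col) / n for col in zip(*per_image_probs)]


# Usage with illustrative values
vocab: Dict[str, Dict[str, int]] = {}
record = {"country": "DK", "level0": "a", "level1": "b", "level2": "c",
          "substrate": "soil", "habitat": "forest"}
codes = encode_metadata(record, vocab)  # [0, 0, 0, 0, 0, 0]
features = build_feature_vector([0.0] * EMBED_DIM, [0.0] * EMBED_DIM, codes)
print(len(features))  # 8198
print(aggregate_observation([[0.2, 0.8], [0.6, 0.4]]))
```

Label encoding keeps each metadata attribute in a single column, which is what makes the total come to 8198; tree-based boosters such as XGBoost can split on such integer codes directly, whereas one-hot encoding would change the vector length.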

This workflow is depicted below,

- Some fungi classes in the dataset were exclusive to the test set, and were not exposed to the architicture during model training. These classes are herein referred to as out-of-scope classes.

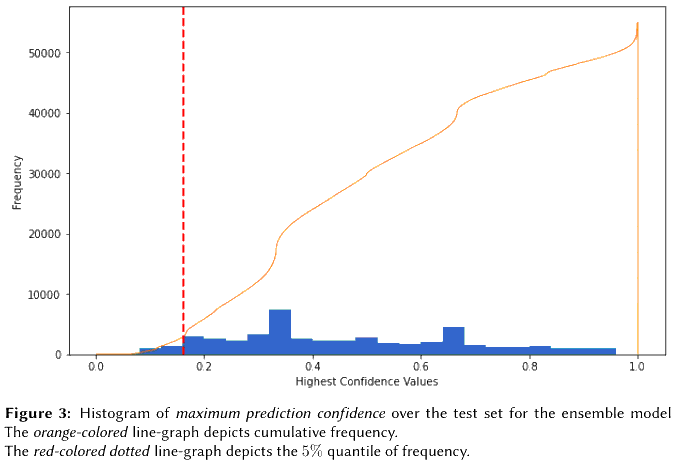

- A prediction confidence thresholding method was devised to handle out-of-scope classes.

- A threshold value is determined for each trained ensemble model using a qualitative, trial-based method.

- First, a histogram of the model's maximum prediction probability for each observation in the test set is plotted.

The histogram for the best-performing model instance is depicted below:

- The x-axis represents the maximum confidence values for predictions on the observations, while the y-axis tracks the frequency of these maximum confidence values.

- Next, the x-axis value at which the cumulative frequency reaches 5% (i.e., the 5% quantile of the maximum confidence values) is identified; it is marked by the red dotted line in the histogram.

- Several points to the left of this 5% quantile line (typically 2 to 4 in our experiments) are chosen as threshold values, and a separate set of predictions is made with each of them; an observation whose maximum confidence falls below the threshold is assigned to the out-of-scope class.
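The thresholding procedure can be sketched as follows. This is a minimal illustration under stated assumptions: the 5% quantile uses the nearest-rank definition, candidate thresholds are spaced evenly between the smallest maximum confidence and that quantile (the points were chosen qualitatively in our experiments, so this spacing rule is a hypothetical stand-in), and out-of-scope predictions receive a hypothetical label `UNKNOWN_CLASS = -1`.

```python
import math
from typing import List, Sequence

UNKNOWN_CLASS = -1  # hypothetical label for out-of-scope predictions


def max_confidences(prob_rows: Sequence[Sequence[float]]) -> List[float]:
    """Maximum class probability for each observation (the histogram's x-values)."""
    return [max(row) for row in prob_rows]


def quantile_nearest_rank(values: Sequence[float], q: float) -> float:
    """Smallest value whose cumulative frequency reaches q (nearest-rank method)."""
    if not values or not 0.0 < q <= 1.0:
        raise ValueError("need non-empty values and 0 < q <= 1")
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]


def candidate_thresholds(values: Sequence[float], q: float = 0.05,
                         k: int = 3) -> List[float]:
    """k evenly spaced thresholds between min(values) and the q-quantile (illustrative rule)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    upper = quantile_nearest_rank(values, q)
    lower = min(values)
    step = (upper - lower) / (k + 1)
    return [lower + step * i for i in range(1, k + 1)]


def predict_with_threshold(prob_rows: Sequence[Sequence[float]],
                           threshold: float) -> List[int]:
    """Predict the arg-max class, or UNKNOWN_CLASS when its confidence is below threshold."""
    preds = []
    for row in prob_rows:
        best = max(range(len(row)), key=row.__getitem__)
        preds.append(best if row[best] >= threshold else UNKNOWN_CLASS)
    return preds


# Usage with toy aggregated distributions (one row per observation)
rows = [[0.9, 0.1], [0.3, 0.7], [0.55, 0.45]]
print(max_confidences(rows))              # [0.9, 0.7, 0.55]
print(predict_with_threshold(rows, 0.6))  # [0, 1, -1]
```

`predict_with_threshold` uses a non-strict comparison, so a confidence exactly equal to the threshold keeps its arg-max class; whether the original pipeline compared strictly is not stated here.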

A more detailed and mathematical treatment of the proposed strategy can be found in our paper.

- The ensembling approach proved effective for the data-intensive, high-complexity image classification tasks commonly released at LifeCLEF.

- Including contextual metadata improved the classification results: the F1-scores of the best models rose from 61.72% and 41.95% to 63.88% and 42.74%, respectively.

- We further conjecture that training the individual models to convergence, and then applying the boosting ensemble with hyperparameter tuning, would yield better prediction performance and more fully realize the potential of the proposed architectures and methodology.

- In addition, varying the input image resolution, using alternative pre-trained weights (see A. Joly et al.), and adding custom trainable layers on top of the frozen base model during transfer learning (see M. Zhong et al.) could substantially improve the quality of feature extraction.