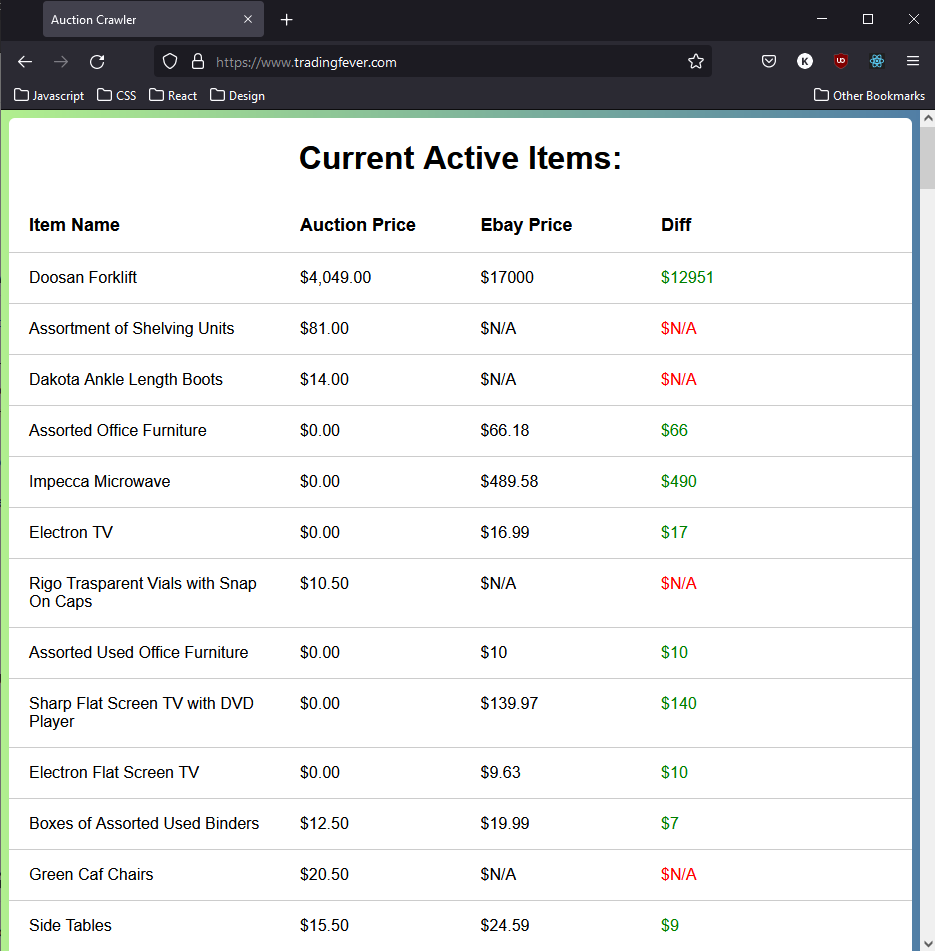

Backend CRUD and web crawler to populate and store auction data for price comparison


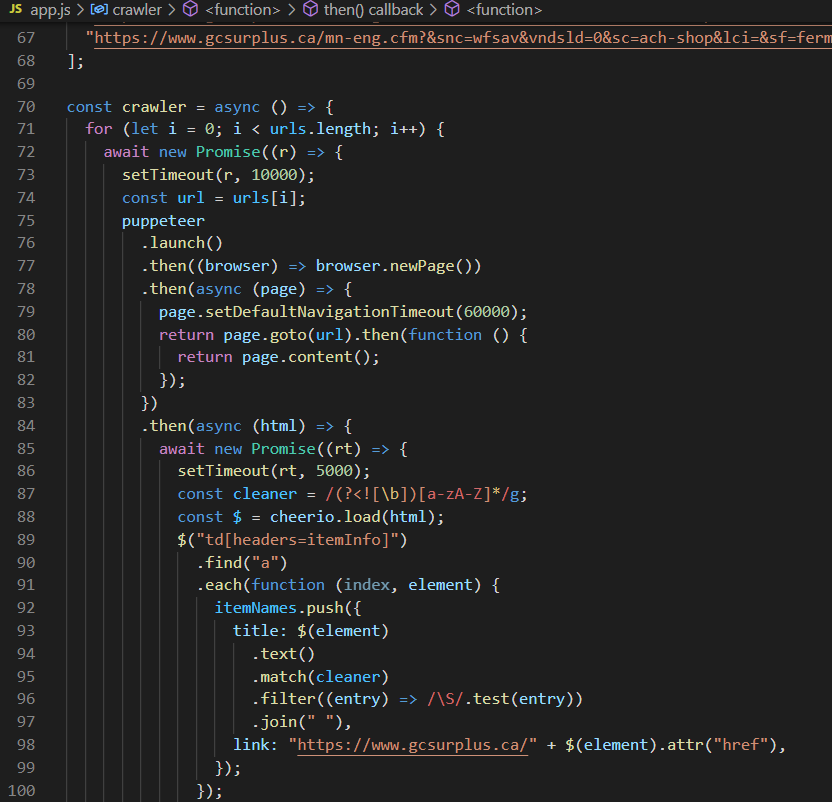

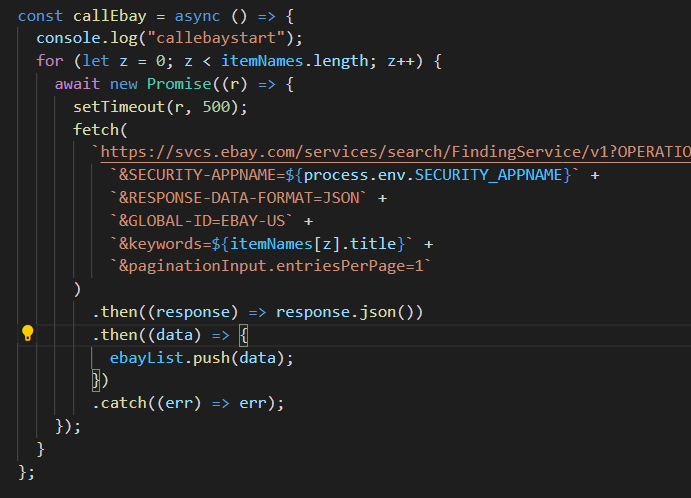

This is the backend portion of a full-stack web crawler for price comparison. It uses Puppeteer to launch instances of Chrome that navigate to government surplus auction listings and scrape keywords, prices, and URLs, with the filtering done by Cheerio. The scraped keywords are then sent to eBay's Finding API, which returns matching listings along with their URLs and prices. All of this data is then stored in a free hosted Redis instance, and Express routes fetch requests from the frontend to it.
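The eBay step described above can be sketched as follows. This is a minimal, dependency-free illustration, not the code in app.js. It assumes the Finding API's `findItemsByKeywords` operation with JSON responses. The endpoint, parameter names, and response shape follow eBay's Finding API documentation as we understand it. `buildFindingUrl` and `parseFindingResponse` are hypothetical helper names.

```javascript
// Sketch only: builds a Finding API request for one scraped keyword and
// extracts listing URLs and prices from the JSON reply. Endpoint, parameter
// names, and response shape follow eBay's Finding API docs as we understand
// them; the helper names are hypothetical, not taken from app.js.

const FINDING_ENDPOINT =
  "https://svcs.ebay.com/services/search/FindingService/v1";

function buildFindingUrl(keywords, appId, entriesPerPage = 5) {
  const params = {
    "OPERATION-NAME": "findItemsByKeywords",
    "SERVICE-VERSION": "1.0.0",
    "SECURITY-APPNAME": appId, // your eBay App ID
    "RESPONSE-DATA-FORMAT": "JSON",
    keywords,
    "paginationInput.entriesPerPage": String(entriesPerPage),
  };
  // encodeURIComponent turns spaces into %20 rather than "+".
  const query = Object.entries(params)
    .map(([k, v]) => `${encodeURIComponent(k)}=${encodeURIComponent(v)}`)
    .join("&");
  return `${FINDING_ENDPOINT}?${query}`;
}

// In the JSON format nearly every field is wrapped in a one-element array,
// hence the [0] at each level.
function parseFindingResponse(json) {
  const response = json?.findItemsByKeywordsResponse?.[0];
  const items = response?.searchResult?.[0]?.item ?? [];
  return items.map((item) => ({
    title: item.title?.[0],
    url: item.viewItemURL?.[0],
    // __value__ holds the price, e.g. "199.99"; Number() converts it.
    price: Number(item.sellingStatus?.[0]?.currentPrice?.[0]?.__value__),
  }));
}
```

On Node 18 or later, the built-in `fetch` can join the two: `parseFindingResponse(await (await fetch(buildFindingUrl(keyword, appId))).json())`.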

Here's why I built this:

- Comparing over 250 items at once saves a huge amount of time.

- The backend can update its own listings as auctions expire or prices change.

- Express is a great boilerplate solution for handling routing and fetch requests.

- Cost of hosting is zero.
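Once both sides are priced, comparing many items at once can be sketched as below. This is a hedged illustration, not the project's code. The README does not say how items are matched or ranked, so the following are all assumptions:

- the field names;
- the `$1,250.00` price-text format;
- using the cheapest eBay match as the reference price.

```javascript
// Hypothetical shapes, for illustration only:
//   auctionItems: [{ keyword, priceText, url }]   (scraped auction listings)
//   ebayMatches:  { [keyword]: [{ url, price }] } (e.g. parsed Finding API results)

// Scraped prices arrive as text such as "$1,250.00"; keep digits and the dot.
function parsePrice(text) {
  const cleaned = String(text).replace(/[^0-9.]/g, "");
  return cleaned === "" ? NaN : Number(cleaned);
}

function compareItems(auctionItems, ebayMatches) {
  const rows = [];
  for (const item of auctionItems) {
    const auctionPrice = parsePrice(item.priceText);
    const matches = (ebayMatches[item.keyword] ?? []).filter((m) =>
      Number.isFinite(m.price)
    );
    // Skip listings that cannot be priced on either side.
    if (!Number.isFinite(auctionPrice) || matches.length === 0) continue;
    // Use the cheapest eBay match as a conservative reference price.
    const cheapest = matches.reduce((a, b) => (b.price < a.price ? b : a));
    rows.push({
      keyword: item.keyword,
      auctionUrl: item.url,
      auctionPrice,
      ebayUrl: cheapest.url,
      ebayPrice: cheapest.price,
      difference: cheapest.price - auctionPrice,
    });
  }
  // Largest gap between eBay price and auction price first.
  return rows.sort((a, b) => b.difference - a.difference);
}
```

Ranking by the cheapest match keeps the estimated gap on the low side. A median of several matches would be less sensitive to a single underpriced listing.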

To get a local copy up and running, follow these steps.

- npm (update to the latest version)

  `npm install npm@latest -g`

- Clone the repo

  `git clone https://github.com/kitoshi/auctioncrawl-api.git`

- Install NPM packages

  `npm install`

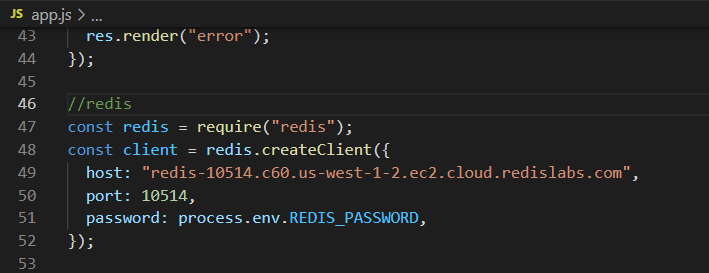

- You will need a Redis host. Set up `client` in app.js and keep the password in a `.env` file.

- You will also need an auction site to crawl (see the urls and link in app.js). The one in the release is GCSurplus (the Canadian federal government's surplus auction site).

- Finally, you need an eBay Developer account for access to the API. Replace `SECURITY_APPNAME` with your own App ID.

- Run with `npm start`. The console prints progress messages while the crawler runs; it is done once Redis has replied `OK` twice.

- By default the server runs on http://localhost:9000/. The data is available through GET requests to http://localhost:9000/crawlerAPI and http://localhost:9000/ebayAPI.

- For production, the backend can be hosted on Google Cloud Platform using App Engine. I deployed it with the Google Cloud SDK from the command line in my code editor.
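The setup and read path described in these steps can be sketched without any dependencies. This is not the project's implementation. The project uses Express and a hosted Redis instance; here, Node's built-in `http` module and a `Map` stand in for them so the example runs on its own.

The following names are hypothetical and should be matched to what app.js actually uses:

- the environment variable names (`REDIS_PASSWORD`, `REDIS_HOST`, `REDIS_PORT`, `PORT`, `EBAY_APP_ID`);
- the store keys (`crawler`, `ebay`);
- the 503 "not finished" response.

The variables could be loaded from `.env` beforehand, for example with the dotenv package's `require("dotenv").config()`.

```javascript
// Dependency-free sketch of the setup and read path described above.
// Express and Redis are replaced by Node's http module and a Map.
// Env variable names and store keys ("crawler", "ebay") are hypothetical.
const http = require("http");

// Settings the README keeps in .env (load them into process.env first).
function loadConfig(env = process.env) {
  if (!env.REDIS_PASSWORD) {
    throw new Error("REDIS_PASSWORD is not set; check your .env file");
  }
  return {
    port: Number(env.PORT) || 9000, // README default: http://localhost:9000/
    redis: {
      host: env.REDIS_HOST,
      port: Number(env.REDIS_PORT) || 6379, // Redis's default port
      password: env.REDIS_PASSWORD,
    },
    ebayAppId: env.EBAY_APP_ID, // the value that replaces SECURITY_APPNAME
  };
}

// Map each documented GET route to the stored JSON blob it serves.
const ROUTES = { "/crawlerAPI": "crawler", "/ebayAPI": "ebay" };

// Pure request handler, testable without opening a socket.
function handleRequest(method, pathname, store) {
  if (method !== "GET") {
    return { status: 405, body: { error: "Method not allowed" } };
  }
  const key = ROUTES[pathname];
  if (!key) return { status: 404, body: { error: "Not found" } };
  const raw = store.get(key); // with Redis this would be an async GET
  if (raw === undefined) {
    return { status: 503, body: { error: "Crawl has not finished yet" } };
  }
  return { status: 200, body: JSON.parse(raw) };
}

// Wraps the handler in a plain Node HTTP server (Express plays this role in the project).
function createServer(store) {
  return http.createServer((req, res) => {
    const { pathname } = new URL(req.url, "http://localhost");
    const { status, body } = handleRequest(req.method, pathname, store);
    res.writeHead(status, { "Content-Type": "application/json" });
    res.end(JSON.stringify(body));
  });
}
```

To try it, call `createServer(new Map([["crawler", "[]"], ["ebay", "[]"]])).listen(9000)` and then request http://localhost:9000/crawlerAPI. Keeping `handleRequest` separate from the server makes the routing logic easy to test without a network.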

See the open issues for a list of proposed features (and known issues).

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch (`git checkout -b feature/AmazingFeature`)

- Commit your Changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the Branch (`git push origin feature/AmazingFeature`)

- Open a Pull Request

Distributed under the MIT License. See LICENSE for more information.

Robert K. Charlton - [email protected]

Project Link: https://github.com/kitoshi/auctioncrawl-api