This repository gathers my finished code for the four Sensor Fusion Engineer projects. The projects cover detecting obstacles in lidar point clouds through clustering and segmentation, applying thresholds and filters to radar data to track objects accurately, and augmenting perception by projecting camera images into three dimensions and fusing these projections with other sensor data. The sensor data is then combined with Kalman filters to perceive the world around a vehicle and track objects over time.

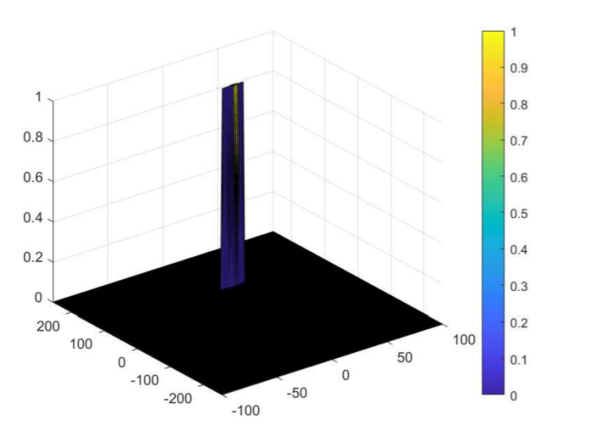

Summary: Process raw lidar data with the PCL library to detect other vehicles on the road. First, downsample the raw data with a VoxelGrid filter to speed up computation, and crop the cloud to the region of interest. Second, run RANSAC with a plane model to segment the point cloud into points that belong to the road and points that do not. Finally, cluster the obstacle points using KD-trees.

Keywords: PCL, VoxelGrid, RANSAC, KD-trees
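
The road/obstacle split described above can be illustrated with a minimal RANSAC plane fit. This is a sketch in plain Python rather than the PCL-based project code: the function name `ransac_plane`, the parameters `max_iterations` and `distance_tol`, and their default values are assumptions made for illustration.

```python
import random


def ransac_plane(points, max_iterations=100, distance_tol=0.2, seed=0):
    """Return the indices of points within distance_tol of the best plane found.

    points is a list of (x, y, z) tuples. All parameter names and defaults
    are illustrative assumptions, not values taken from the project.
    """
    rng = random.Random(seed)
    best_inliers = set()
    for _ in range(max_iterations):
        # Pick three distinct points to define a candidate plane.
        i1, i2, i3 = rng.sample(range(len(points)), 3)
        (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = points[i1], points[i2], points[i3]

        # Plane normal (a, b, c) = (p2 - p1) x (p3 - p1).
        ux, uy, uz = x2 - x1, y2 - y1, z2 - z1
        vx, vy, vz = x3 - x1, y3 - y1, z3 - z1
        a = uy * vz - uz * vy
        b = uz * vx - ux * vz
        c = ux * vy - uy * vx
        norm = (a * a + b * b + c * c) ** 0.5
        if norm == 0.0:
            continue  # the three samples are collinear, no unique plane
        d = -(a * x1 + b * y1 + c * z1)

        # Collect every point whose distance to the plane is within tolerance.
        inliers = {
            i
            for i, (x, y, z) in enumerate(points)
            if abs(a * x + b * y + c * z + d) / norm <= distance_tol
        }
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return best_inliers


if __name__ == "__main__":
    # Synthetic scene: a flat 10 x 10 road patch plus a few raised points.
    road = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
    obstacle = [(4.0 + 0.1 * k, 4.5, 1.0 + 0.1 * k) for k in range(10)]
    cloud = road + obstacle
    road_idx = ransac_plane(cloud)
    obstacle_idx = set(range(len(cloud))) - road_idx
    print(len(road_idx), "road points,", len(obstacle_idx), "obstacle points")
```

The points left outside the plane are the ones that would be passed on to the clustering step.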

Summary: Analyze FMCW radar signatures to detect and track objects. Apply the range and Doppler FFTs to create the Range Doppler Map (RDM), then run CFAR on it to separate targets from noise. Estimate the range and velocity of the target from the FMCW radar signal.

Keywords: FMCW, FFT, Doppler, CFAR
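
As an illustration of the CFAR idea, here is a simplified one-dimensional cell-averaging CFAR in plain Python. It is a sketch, not the project implementation: it works on a single row of linear power values rather than a full Range Doppler Map, and the names `ca_cfar_1d`, `num_train`, `num_guard`, and `scale`, along with their defaults, are assumptions.

```python
def ca_cfar_1d(power, num_train=4, num_guard=2, scale=5.0):
    """Return indices of cells whose power exceeds an adaptive noise threshold.

    power     : list of linear power values along one dimension
    num_train : training cells on EACH side of the cell under test (assumed)
    num_guard : guard cells on EACH side of the cell under test (assumed)
    scale     : multiplicative threshold factor in linear units (assumed)
    """
    half = num_train + num_guard
    detections = []
    # Skip the edges, where a full training window does not fit.
    for cut in range(half, len(power) - half):
        # Training cells before and after the cell under test (CUT),
        # separated from it by the guard cells.
        lead = power[cut - half : cut - num_guard]
        lag = power[cut + num_guard + 1 : cut + half + 1]
        noise = (sum(lead) + sum(lag)) / (2 * num_train)
        if power[cut] > scale * noise:
            detections.append(cut)
    return detections


if __name__ == "__main__":
    signal = [1.0] * 30
    signal[15] = 50.0  # one strong target on a flat noise floor
    print(ca_cfar_1d(signal))
```

The guard cells keep the energy of the target itself from leaking into the noise estimate, which is why the threshold adapts to the local noise level instead of being a fixed value.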

Summary: Find the best keypoint detector/descriptor combination for the camera images, and project the lidar data into two dimensions to fuse it with the camera image. Use the YOLOv3 model to group the keypoints and lidar points that belong to the preceding car, then compute the TTC (time-to-collision).

Keywords: YOLOv3, homogeneous coordinate, Camera intrinsic and extrinsic matrix
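
The two geometric steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the lidar point is taken to be already in the camera frame (the extrinsic transform is skipped), the intrinsic matrix `K` in the usage example is made up, the TTC uses a constant-velocity model, and the median is used here only as one simple way to suppress outliers. The function names are hypothetical.

```python
import statistics


def project_to_image(point_cam, K):
    """Project a 3D point (X, Y, Z) in the camera frame to pixel (u, v).

    K is a 3x3 intrinsic matrix given as nested lists. The result of K * p
    is a homogeneous 2D point, so it is divided by its last component.
    """
    X, Y, Z = point_cam
    x = [row[0] * X + row[1] * Y + row[2] * Z for row in K]
    return x[0] / x[2], x[1] / x[2]


def ttc_lidar(prev_distances, curr_distances, frame_rate):
    """Estimate time-to-collision from forward distances in two frames.

    Constant-velocity model: TTC = d1 * dt / (d0 - d1), where d0 and d1 are
    the previous and current distances to the preceding car.
    """
    dt = 1.0 / frame_rate
    d0 = statistics.median(prev_distances)
    d1 = statistics.median(curr_distances)
    if d0 - d1 <= 0.0:
        return float("inf")  # the gap is not closing
    return d1 * dt / (d0 - d1)


if __name__ == "__main__":
    # Made-up intrinsics: focal length 700 px, principal point (640, 360).
    K = [[700.0, 0.0, 640.0], [0.0, 700.0, 360.0], [0.0, 0.0, 1.0]]
    print(project_to_image((1.0, 0.5, 10.0), K))
    print(ttc_lidar([8.0, 8.1, 7.9], [7.5, 7.6, 7.4], frame_rate=10.0))
```

Once lidar points are projected into the image, the ones falling inside a YOLOv3 bounding box can be associated with that detected object.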

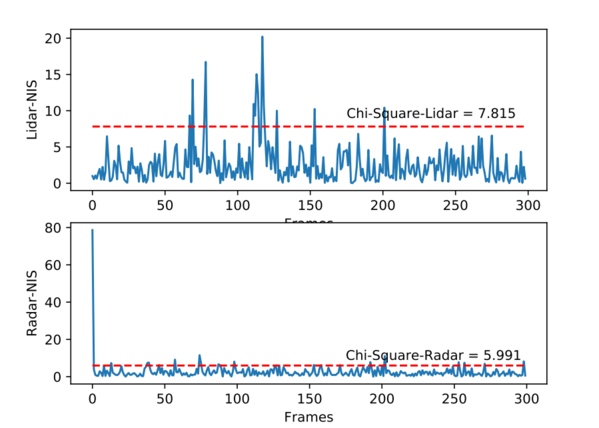

Summary: Fuse data from multiple sources using Kalman filters, and build Extended and Unscented Kalman Filters (UKF) for tracking nonlinear movement. The UKF combines sigma point generation, sigma point prediction, state mean and covariance prediction, and measurement prediction to track objects. The NIS (normalized innovation squared) is used to check the consistency of the filter.

Keywords: Kalman Filter, UKF, Sigma points, CTRV model, NIS consistency
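
Two of the pieces named above are small enough to sketch in plain Python: pushing one state (or sigma point) through the CTRV process model, and computing the NIS for a two-dimensional measurement. This is an illustrative sketch, not the project code; the function names, the state ordering `(px, py, v, yaw, yaw_rate)`, and the restriction to a 2x2 innovation covariance are assumptions.

```python
import math


def ctrv_predict(state, dt, nu_a=0.0, nu_yawdd=0.0):
    """Propagate a CTRV state (px, py, v, yaw, yaw_rate) over dt seconds.

    nu_a and nu_yawdd are the longitudinal and yaw acceleration noise
    samples carried by an augmented sigma point (zero for the mean).
    """
    px, py, v, yaw, yawd = state
    if abs(yawd) > 1e-6:
        # Turning: integrate along a circular arc.
        px_p = px + v / yawd * (math.sin(yaw + yawd * dt) - math.sin(yaw))
        py_p = py + v / yawd * (math.cos(yaw) - math.cos(yaw + yawd * dt))
    else:
        # Driving straight: avoid dividing by a near-zero yaw rate.
        px_p = px + v * dt * math.cos(yaw)
        py_p = py + v * dt * math.sin(yaw)
    # Add the effect of the process noise.
    px_p += 0.5 * nu_a * dt * dt * math.cos(yaw)
    py_p += 0.5 * nu_a * dt * dt * math.sin(yaw)
    v_p = v + nu_a * dt
    yaw_p = yaw + yawd * dt + 0.5 * nu_yawdd * dt * dt
    yawd_p = yawd + nu_yawdd * dt
    return px_p, py_p, v_p, yaw_p, yawd_p


def nis_2d(z, z_pred, S):
    """Return (z - z_pred)^T S^-1 (z - z_pred) for a 2D measurement.

    S is the 2x2 innovation covariance as nested lists.
    """
    d0, d1 = z[0] - z_pred[0], z[1] - z_pred[1]
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    # Closed-form inverse of a 2x2 matrix applied to the innovation.
    w0 = (S[1][1] * d0 - S[0][1] * d1) / det
    w1 = (-S[1][0] * d0 + S[0][0] * d1) / det
    return d0 * w0 + d1 * w1


if __name__ == "__main__":
    print(ctrv_predict((0.0, 0.0, 5.0, 0.0, 0.0), dt=1.0))
    print(nis_2d((1.0, 2.0), (0.0, 0.0), [[2.0, 0.0], [0.0, 4.0]]))
```

For a consistent filter the NIS follows a chi-square distribution, so for a two-dimensional measurement roughly 5% of the values should exceed 5.991; far more or far fewer suggests the noise parameters are set too low or too high.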