Zuyan Liu<sup>*,1,2</sup> Yuhao Dong<sup>*,2,3</sup> Ziwei Liu<sup>3</sup> Winston Hu<sup>2</sup> Jiwen Lu<sup>1,✉</sup> Yongming Rao<sup>2,1,✉</sup>

<sup>1</sup>Tsinghua University <sup>2</sup>Tencent <sup>3</sup>S-Lab, NTU

* Equal Contribution ✉ Corresponding Author

Oryx SFT Data: collected from open-source datasets; the prepared data is coming soon.

- [20/09/2024] 🔥 🚀 Introducing Oryx! The Oryx models (7B/34B) support on-demand visual perception and achieve new state-of-the-art performance across image, video, and 3D benchmarks, even surpassing advanced commercial models on some of them.

- [Paper]: A detailed introduction to on-demand visual perception, including native-resolution perception and the dynamic compressor.

- [Checkpoints]: Try our advanced model on your own.

- [Scripts]: Start training models with customized data.

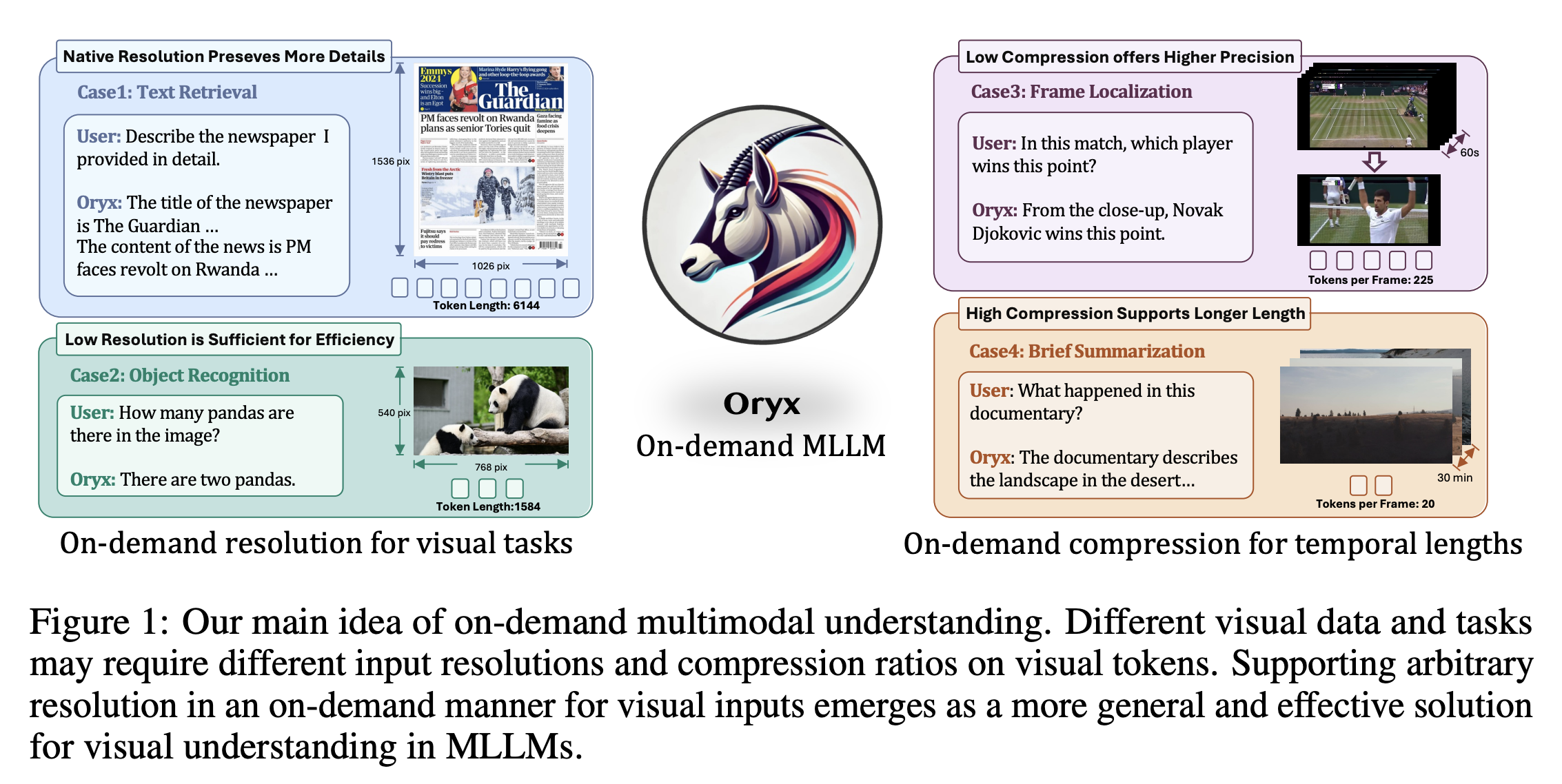

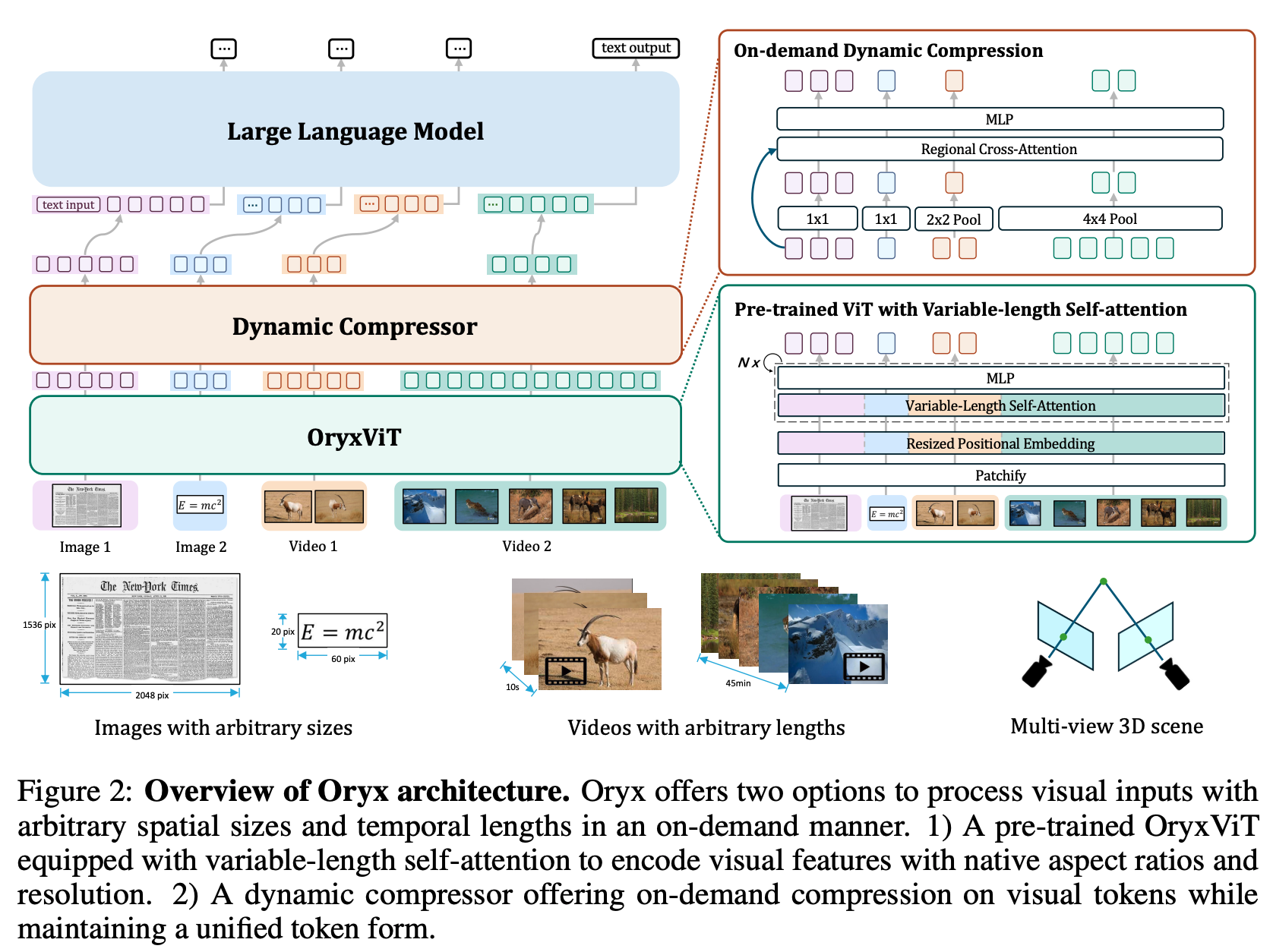

Oryx is a unified multimodal architecture for spatial-temporal understanding of images, videos, and multi-view 3D scenes. It offers an on-demand solution for seamlessly and efficiently processing visual inputs of arbitrary spatial size and temporal length. The model achieves strong image, video, and 3D multimodal understanding simultaneously.
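To make "arbitrary spatial sizes and temporal lengths" concrete, the Python sketch below estimates how many visual tokens an input produces when it is kept at its native resolution, and how a per-side downsampling factor (a simple stand-in for what a compressor does) bounds that count for long videos. The function name `visual_token_count`, the patch size of 14, and the block-pooling rule are illustrative assumptions; this is not the Oryx or OryxViT implementation, and the real dynamic compressor may work differently.

```python
import math


def visual_token_count(height: int, width: int, num_frames: int = 1,
                       patch_size: int = 14, downsample: int = 1) -> int:
    """Estimate visual tokens for an input kept at native resolution.

    Illustrative assumptions (not taken from the Oryx code base):
    * each frame is padded up to a multiple of `patch_size` on both sides and
      split into non-overlapping patches, one token per patch;
    * a compressor then merges every `downsample` x `downsample` block of patch
      tokens into one token (partial blocks at the border still count as one);
    * every video frame is encoded the same way, so tokens scale with frames.
    """
    if min(height, width, num_frames, patch_size, downsample) < 1:
        raise ValueError("all arguments must be positive integers")
    # Patch grid at native resolution: no resizing to a fixed square input.
    grid_h = math.ceil(height / patch_size)
    grid_w = math.ceil(width / patch_size)
    # Grid size after per-side downsampling.
    pooled_h = math.ceil(grid_h / downsample)
    pooled_w = math.ceil(grid_w / downsample)
    return pooled_h * pooled_w * num_frames


# A single 448x448 image keeps every patch token (32 * 32).
image_tokens = visual_token_count(448, 448)
# A wide 336x1008 image keeps its aspect ratio instead of being squashed (24 * 72).
wide_tokens = visual_token_count(336, 1008)
# 64 frames of 448x448, merged 4x4 per frame to bound sequence length (8 * 8 * 64).
video_tokens = visual_token_count(448, 448, num_frames=64, downsample=4)
print(image_tokens, wide_tokens, video_tokens)  # prints: 1024 1728 4096
```

The point of the sketch is that cost grows with resolution times frame count, so an on-demand scheme can keep every token for a single image while compressing more aggressively as videos get longer.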

- Release all the model weights.

- Release OryxViT model.

- Demo code for generation.

- All the training and inference code.

- Evaluation code for image, video and 3D multi-modal benchmark.

- Oryx SFT Data.

- Oryx chatbox.

- Enhanced Oryx model with the latest base LLMs and better SFT data.

- Introduce our explorations of OryxViT.

To try generation with our Oryx model, follow these steps:

1. Download the Oryx model from our Hugging Face collection.

2. Download the Oryx-ViT vision encoder.

3. Replace the "mm_vision_tower" path in config.json with your local path to Oryx-ViT. (We will automate steps 1, 2, and 3 soon.)
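Step 3 can also be scripted. The Python sketch below rewrites the "mm_vision_tower" entry of a downloaded config.json. The function name `set_vision_tower` is hypothetical, and keeping an optional `oryx_vit:` prefix is an assumption borrowed from the `VISION_TOWER='oryx_vit:PATH/TO/oryx_vit_new.pth'` format used in the training scripts; check your config.json to see which form it actually uses.

```python
import json
from pathlib import Path

# Assumption: the value may use the same "oryx_vit:<path>" form as the
# VISION_TOWER variable in the training scripts. If it does, the prefix is
# kept and only the path part is swapped; otherwise the whole value is replaced.
PREFIX = "oryx_vit:"


def set_vision_tower(config_path: str, vit_path: str) -> tuple:
    """Point "mm_vision_tower" in a downloaded config.json at a local Oryx-ViT file.

    Returns (old_value, new_value) so the change can be checked or reverted.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    if "mm_vision_tower" not in config:
        raise KeyError(f'"mm_vision_tower" not found in {path}')
    old = config["mm_vision_tower"]
    keep_prefix = isinstance(old, str) and old.startswith(PREFIX)
    new = PREFIX + vit_path if keep_prefix else vit_path
    config["mm_vision_tower"] = new
    # Rewrite the file in place; other keys keep their values (whitespace may change).
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return old, new
```

For example, `set_vision_tower("PATH/TO/7B_MODEL/config.json", "PATH/TO/oryx_vit_new.pth")` updates the file in place and returns the old and new values; both paths are placeholders.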

Then run inference:

```bash
python inference.py
```

To install, clone the repository and set up the environment:

```bash
git clone https://github.com/Oryx-mllm/oryx
cd oryx
conda create -n oryx python=3.10 -y
conda activate oryx
pip install --upgrade pip
pip install -e .
```

🚧 We will release instructions for collecting the training data soon, and we will release our prepared data in batches.

Modify the following lines in the training scripts to match your environment:

```bash
export PYTHONPATH=/PATH/TO/oryx:$PYTHONPATH
VISION_TOWER='oryx_vit:PATH/TO/oryx_vit_new.pth'
DATA="PATH/TO/DATA.json"
MODEL_NAME_OR_PATH="PATH/TO/7B_MODEL"
```

Scripts for training Oryx-7B:

```bash
bash scripts/train_oryx_7b.sh
```

Scripts for training Oryx-34B:

```bash
bash scripts/train_oryx_34b.sh
```

If you find Oryx useful for your research and applications, please cite our paper using this BibTeX:

```bibtex
@article{liu2024oryx,
  title={Oryx MLLM: On-Demand Spatial-Temporal Understanding at Arbitrary Resolution},
  author={Liu, Zuyan and Dong, Yuhao and Liu, Ziwei and Hu, Winston and Lu, Jiwen and Rao, Yongming},
  journal={arXiv preprint arXiv:2409.12961},
  year={2024}
}
```