A neural network library written in Python and built on top of JAX, an open-source, high-performance automatic differentiation library.

- JAX for automatic differentiation.

- Mypy for static type checking of Python 3 code.

- Matplotlib for plotting.

- Pandas for data analysis and manipulation.

- tqdm for displaying progress bars.

- NumPy for random number generation.

- Enables high-performance machine learning research.

- Supports feed-forward (FFNN) and convolutional (CNN) neural networks.

- Built-in support for popular optimization algorithms and activation functions.

- Easy to use, with a high-level, Keras-like API.

- Runs seamlessly on CPU, GPU, and even TPU, with no configuration required.
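As a rough illustration of the JAX machinery the list above relies on, the sketch below uses `jax.grad` to differentiate a toy loss and applies a classical momentum update, one of the common optimization algorithms. It is a hypothetical, stand-alone example, not dnet's optimizer code: the toy linear model and the names `loss_fn` and `momentum_step` are assumptions for illustration. `jax.default_backend()` reports which backend JAX selected, which is why the same code can run on CPU, GPU, or TPU unchanged.

```python
import jax
import jax.numpy as jnp

# Hypothetical toy objective: mean squared error of a linear model y = w * x.
# This is an illustrative sketch, not dnet's internal optimizer code.
def loss_fn(w, x, y):
    return jnp.mean((w * x - y) ** 2)

# jax.grad differentiates loss_fn with respect to its first argument (w);
# jax.jit compiles the gradient function for whichever backend is available.
grad_fn = jax.jit(jax.grad(loss_fn))

def momentum_step(w, velocity, x, y, lr=1e-2, beta=0.9):
    """One classical-momentum update: v <- beta * v - lr * g; w <- w + v."""
    g = grad_fn(w, x, y)
    velocity = beta * velocity - lr * g
    return w + velocity, velocity

x = jnp.arange(1.0, 6.0)  # inputs [1, 2, 3, 4, 5]
y = 3.0 * x               # targets generated with a true weight of 3.0
w, v = jnp.array(0.0), jnp.array(0.0)
for _ in range(200):
    w, v = momentum_step(w, v, x, y)

print("backend:", jax.default_backend())  # e.g. 'cpu' when no accelerator is found
print("learned w:", float(w))
```

The velocity term accumulates past gradients, so consecutive steps in a consistent direction speed up while oscillating components partly cancel.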

from dnet import datasets

from dnet.layers import FC

from dnet.models import Sequential

(x_train, y_train), (x_val, y_val) = datasets.tiny_mnist(flatten=True, one_hot_encoding=True)

model = Sequential()

model.add(FC(units=500, activation="relu"))

model.add(FC(units=50, activation="relu"))

model.add(FC(units=1, activation="sigmoid"))

model.compile(loss="binary_crossentropy", optimizer="momentum", lr=1e-03, bs=128)

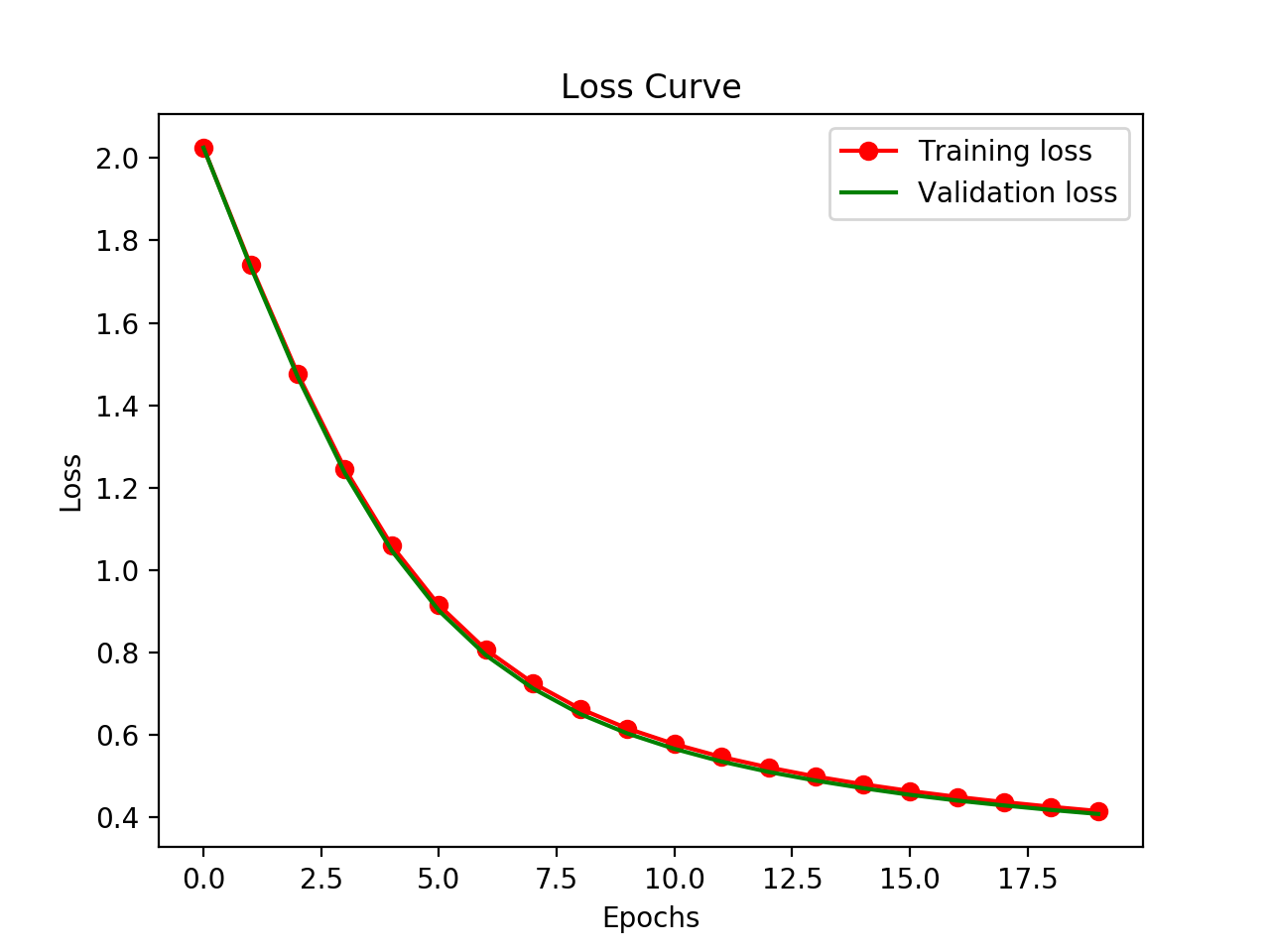

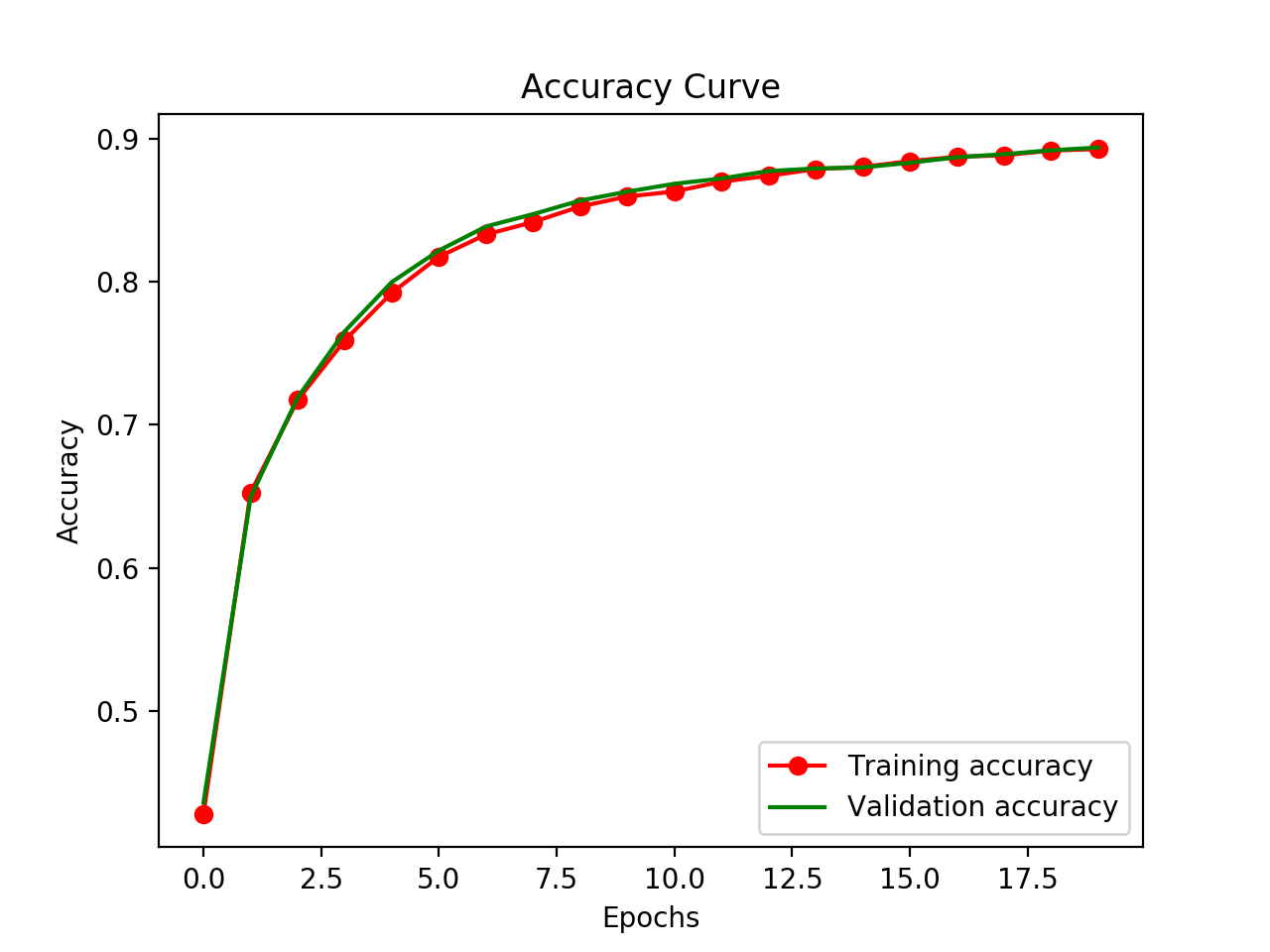

model.fit(inputs=x_train, targets=y_train, epochs=20, validation_data=(x_val, y_val))

model.plot_losses()

model.plot_accuracy()/Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/site-packages/jax/lib/xla_bridge.py:119: UserWarning: No GPU/TPU found, falling back to CPU.

warnings.warn('No GPU/TPU found, falling back to CPU.')

Epoch 20, Batch 40: 100%|██████████| 20/20 [00:15<00:00, 1.30it/s, Validation accuracy => 0.8935999870300293]

Process finished with exit code 0
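To make concrete what the feed-forward example computes, here is a minimal sketch in plain JAX of a 784-500-50-1 ReLU/sigmoid stack with a binary cross-entropy loss and its gradients. It is not dnet's implementation: the input size of 784 (flattened 28×28 images), the He-style initialization, the random stand-in batch, and the names `init_fc`, `forward`, and `binary_crossentropy` are all assumptions for illustration.

```python
import jax
import jax.numpy as jnp

def init_fc(key, in_dim, out_dim):
    """Hypothetical He-style initialization for one fully connected layer."""
    w = jax.random.normal(key, (in_dim, out_dim)) * jnp.sqrt(2.0 / in_dim)
    b = jnp.zeros(out_dim)
    return w, b

def forward(params, x):
    # Hidden layers use ReLU; the last layer uses a sigmoid, mirroring FC(500) -> FC(50) -> FC(1).
    for w, b in params[:-1]:
        x = jax.nn.relu(x @ w + b)
    w, b = params[-1]
    return jax.nn.sigmoid(x @ w + b)

def binary_crossentropy(probs, targets, eps=1e-7):
    # Clip probabilities so log() never sees exactly 0 or 1.
    probs = jnp.clip(probs, eps, 1.0 - eps)
    return -jnp.mean(targets * jnp.log(probs) + (1.0 - targets) * jnp.log(1.0 - probs))

sizes = [784, 500, 50, 1]  # assumes flattened 28x28 inputs
keys = jax.random.split(jax.random.PRNGKey(0), len(sizes) - 1)
params = [init_fc(k, m, n) for k, m, n in zip(keys, sizes[:-1], sizes[1:])]

x = jax.random.uniform(jax.random.PRNGKey(1), (8, 784))  # random stand-in batch
t = jnp.array([0, 1, 0, 1, 1, 0, 0, 1], dtype=jnp.float32).reshape(8, 1)

probs = forward(params, x)
loss = binary_crossentropy(probs, t)

# Gradients of the loss with respect to every weight and bias (same nested structure as params).
grads = jax.grad(lambda p: binary_crossentropy(forward(p, x), t))(params)
print(probs.shape, float(loss))
```

Because `params` is an ordinary list of `(w, b)` tuples, `jax.grad` returns gradients in the same nested structure, which an optimizer can then apply layer by layer.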

from dnet import datasets

from dnet.layers import Conv2D, MaxPool2D, Flatten, FC

from dnet.models import Sequential

(x_train, y_train), (x_val, y_val) = datasets.tiny_mnist(flatten=False, one_hot_encoding=True)

model = Sequential()

model.add(Conv2D(filters=6, kernel_size=(5, 5), activation="relu"))

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Conv2D(filters=16, kernel_size=(5, 5), activation="relu"))

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Flatten())

model.add(FC(units=120, activation="relu"))

model.add(FC(units=84, activation="relu"))

model.add(FC(units=1, activation="sigmoid"))

model.compile(loss="binary_crossentropy", optimizer="sgd", lr=1e-03, bs=32)

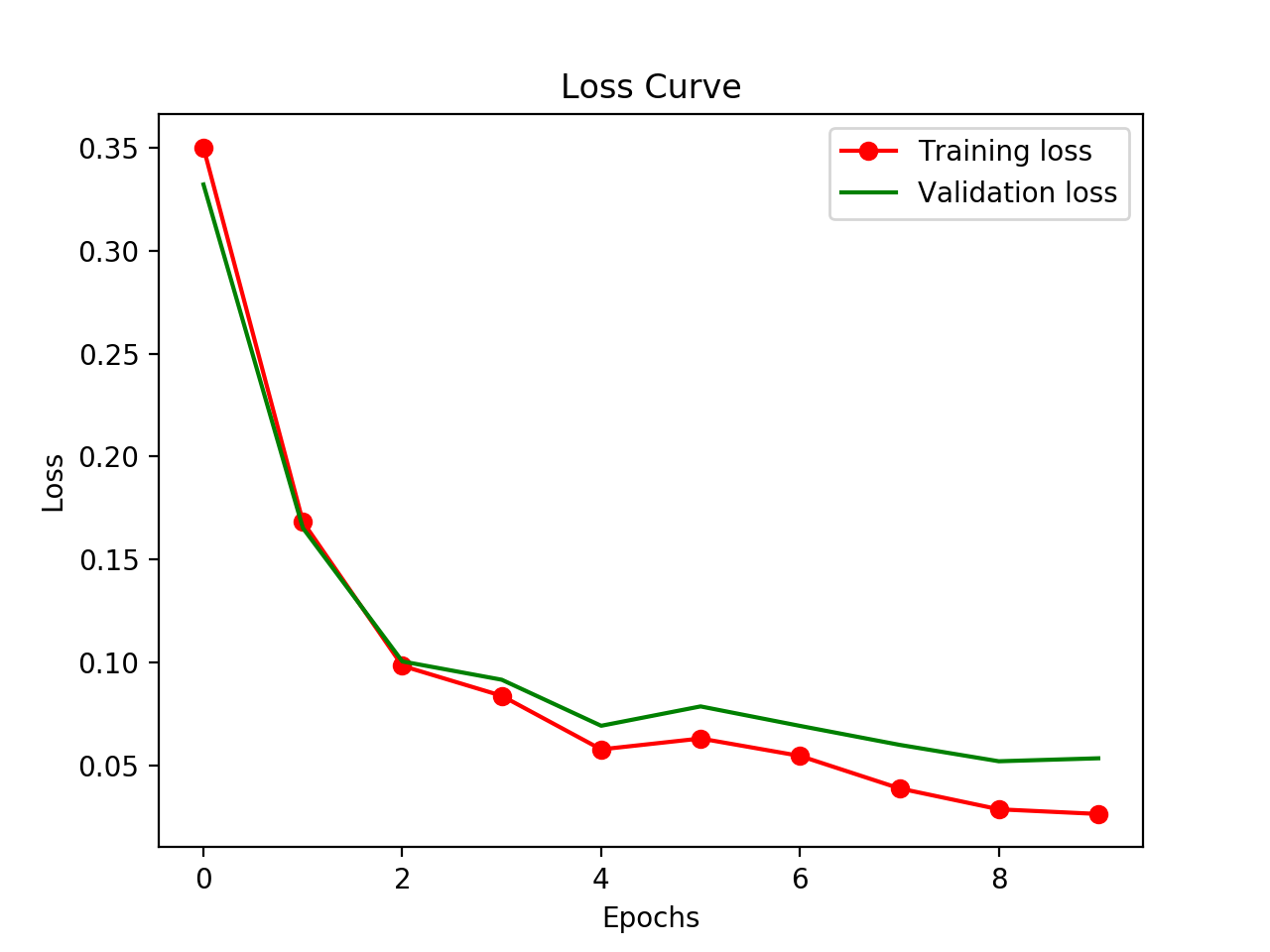

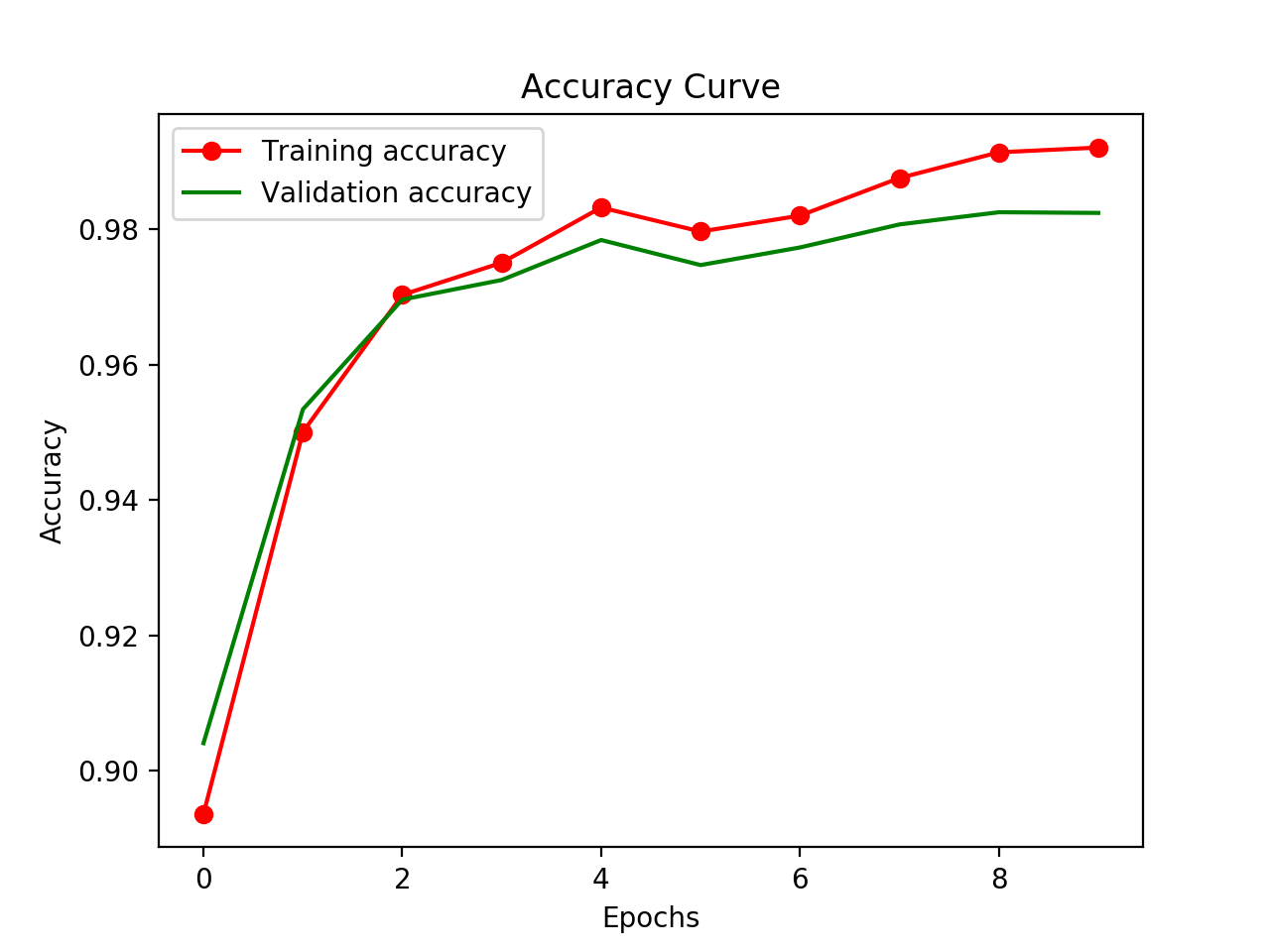

model.fit(inputs=x_train, targets=y_train, epochs=10, validation_data=(x_val, y_val))

model.plot_losses()

model.plot_accuracy()/Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/site-packages/jax/lib/xla_bridge.py:119: UserWarning: No GPU/TPU found, falling back to CPU.

warnings.warn('No GPU/TPU found, falling back to CPU.')

Epoch 10, Batch 40: 100%|██████████| 10/10 [01:41<00:00, 10.17s/it, Validation accuracy => 0.9824000000953674]

Process finished with exit code 0
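The CNN example follows the classic LeNet-5 layout (6 and 16 filters of size 5×5, each followed by 2×2 pooling, then 120 and 84 hidden units). The sketch below traces tensor shapes through the two convolution and pooling stages with `jax.lax`, assuming 28×28 single-channel inputs in NCHW layout, stride-1 "valid" convolutions, and no biases. These are assumptions, not dnet's documented behavior; its padding and layout conventions may differ, and `conv2d_valid` and `max_pool_2x2` are hypothetical helper names.

```python
import jax
import jax.numpy as jnp
from jax import lax

def conv2d_valid(x, w):
    # x: (N, C, H, W), w: (O, I, KH, KW); stride 1, no padding ("VALID").
    return lax.conv_general_dilated(x, w, window_strides=(1, 1), padding="VALID")

def max_pool_2x2(x):
    # Non-overlapping 2x2 max pooling over the two spatial axes of an NCHW tensor.
    return lax.reduce_window(x, -jnp.inf, lax.max, (1, 1, 2, 2), (1, 1, 2, 2), "VALID")

key1, key2 = jax.random.split(jax.random.PRNGKey(0))
w1 = jax.random.normal(key1, (6, 1, 5, 5)) * 0.1   # 6 filters of size 5x5 on 1 input channel
w2 = jax.random.normal(key2, (16, 6, 5, 5)) * 0.1  # 16 filters of size 5x5 on 6 input channels

x = jnp.ones((2, 1, 28, 28))  # assumed batch of two 28x28 grayscale images

h1 = max_pool_2x2(jax.nn.relu(conv2d_valid(x, w1)))   # (2, 6, 12, 12)
h2 = max_pool_2x2(jax.nn.relu(conv2d_valid(h1, w2)))  # (2, 16, 4, 4)
flat = h2.reshape(h2.shape[0], -1)                    # (2, 256)
print(h1.shape, h2.shape, flat.shape)
```

Under these assumptions each 5×5 valid convolution shrinks the spatial size by 4 and each 2×2 pooling halves it (28 → 24 → 12 → 8 → 4), so `Flatten()` yields 16 × 4 × 4 = 256 features for the first `FC(units=120)` layer.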

Check the project roadmap to follow the library's development progress.