💛 Costs of RAG-based applications

💙 Follow Joanna on LinkedIn ➕ Follow Magdalena on LinkedIn

🤍 Sign up to DataTalksClub LLM Zoomcamp

⭐ Give this repository a star to support the initiative!

👉 Let’s make sure that your LLM application doesn’t burn a hole in your pocket.

👉 Let’s instead make sure your LLM application generates a positive ROI for you, your company and your users.

👉 A nice side effect of choosing cheaper models over expensive ones: the response time is usually shorter, too!

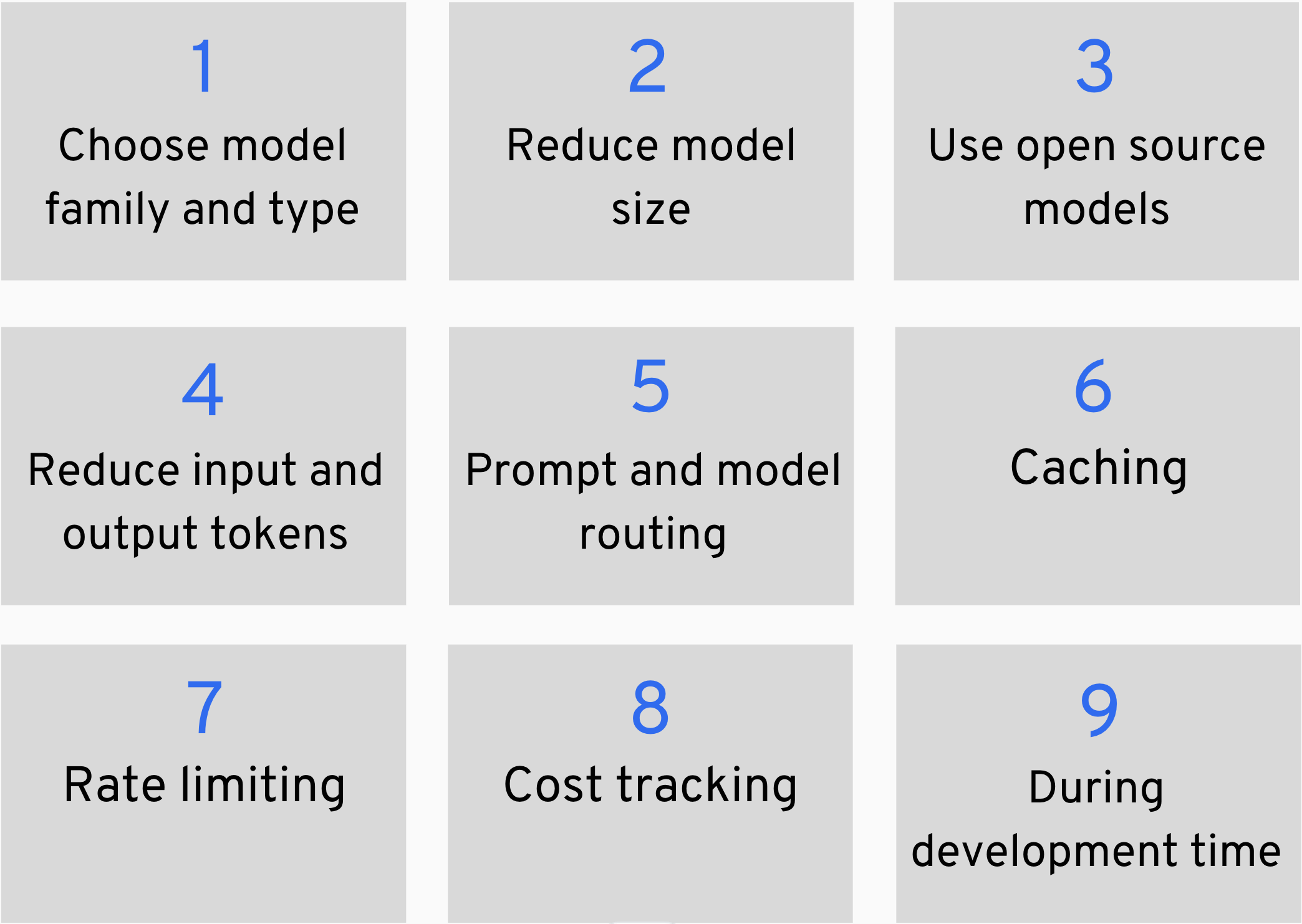

Selecting a suitable model or combination of models based on factors such as speciality, size and benchmark results lays the foundation for developing cost-sensible LLM applications. The aim is to choose a model that fits the complexity of the task. Just as you wouldn't take your BMW 8 Series M to the grocery store, you don't need a high-end LLM for simple tasks.

- Naveed, Humza, et al. "A comprehensive overview of large language models." arXiv preprint arXiv:2307.06435 (2023).

- Minaee, Shervin, et al. "Large Language Models: A Survey." arXiv preprint arXiv:2402.06196 (2024).

- MTEB (Massive Text Embedding Benchmark) Leaderboard by Hugging Face

- Models by Hugging Face
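To make "fits the complexity of the task" concrete, here is a minimal sketch that picks the cheapest candidate whose benchmark score clears a task-specific threshold. The model names, prices and scores are hypothetical placeholders, not real benchmark results; in practice you would fill them in from the resources listed above and, ideally, from evaluations on your own data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str                 # hypothetical model identifier
    usd_per_1m_tokens: float  # hypothetical blended price per 1M tokens
    benchmark_score: float    # hypothetical score on your own eval set (0-100)


def pick_cheapest_sufficient(candidates: List[Candidate], min_score: float) -> Optional[Candidate]:
    """Return the cheapest candidate whose score is at least min_score, or None."""
    sufficient = [c for c in candidates if c.benchmark_score >= min_score]
    if not sufficient:
        return None
    return min(sufficient, key=lambda c: c.usd_per_1m_tokens)


# Hypothetical catalog: placeholders, not real prices or scores.
CATALOG = [
    Candidate("small-7b", 0.20, 62.0),
    Candidate("medium-70b", 0.90, 78.0),
    Candidate("frontier-xl", 10.00, 88.0),
]

if __name__ == "__main__":
    # A simple classification task may only need a modest score...
    print(pick_cheapest_sufficient(CATALOG, min_score=60).name)  # small-7b
    # ...while a complex reasoning task needs a stronger (pricier) model.
    print(pick_cheapest_sufficient(CATALOG, min_score=85).name)  # frontier-xl
```

The threshold encodes "good enough for this task"; everything above it is paying for quality you don't need.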

After choosing a suitable model family, consider models with fewer parameters as well as other techniques that reduce model size.

- Model parameter count (e.g. 7B, 13B ... 175B)

- Quantization (= reducing the precision of the model's parameters)

- Pruning (= removing unnecessary weights, neurons, channels or layers)

- Knowledge Distillation (= training a smaller model that mimics a larger model)

- Frantar, Elias, and Dan Alistarh. "SparseGPT: Massive Language Models Can Be Accurately Pruned in One-Shot." arXiv preprint arXiv:2301.00774 (2023).

- Hinton, Geoffrey, Oriol Vinyals, and Jeff Dean. "Distilling the Knowledge in a Neural Network." arXiv preprint arXiv:1503.02531 (2015).

- PyTorch Prune

- PyTorch Quantization

- LoRA and QLoRA make training large models more efficient

- Basics of quantization in ML

- How LLM quantization impacts model quality

- mlabonne/llm-course#quantization
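A back-of-the-envelope calculation shows why parameter count and precision matter: weight memory is roughly parameters × bytes per parameter, so a 7B model needs about 14 GB in 16-bit precision, about 7 GB in 8-bit and about 3.5 GB in 4-bit (ignoring activations, KV cache and runtime overhead). The sketch below reproduces that arithmetic and then illustrates two of the listed techniques on a toy weight vector in plain Python: symmetric absmax int8 quantization and unstructured magnitude pruning. These are simplified textbook variants for illustration only; the PyTorch quantization and pruning utilities listed above implement far more careful schemes.

```python
from typing import List, Tuple


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in decimal GB."""
    return num_params * bits_per_param / 8 / 1e9


def quantize_absmax_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values and the scale."""
    return [v * scale for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    # Indices of the k smallest-magnitude weights.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"7B params @ {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
    w = [0.42, -1.27, 0.03, 0.88, -0.05, 0.61]
    q, scale = quantize_absmax_int8(w)
    print(q, [round(x, 3) for x in dequantize(q, scale)])
    print(magnitude_prune(w, sparsity=0.5))  # [0.0, -1.27, 0.0, 0.88, 0.0, 0.61]
```

Quantization keeps every weight but stores it with fewer bits; pruning keeps full precision but removes weights. Both trade some quality for lower memory and compute, which is why the resources above stress measuring the impact on model quality.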

Consider self-hosting models instead of using proprietary models if you have capable developers in-house. Still, keep an eye on the Total Cost of Ownership (TCO) when benchmarking managed LLMs against running everything yourself.

- 🗣️ call-for-contributions 🗣️
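A simple break-even calculation can help frame the TCO discussion. The sketch below compares a pay-per-token API with a self-hosted deployment that has a fixed monthly cost (GPU rental around the clock plus an allowance for engineering and operations time). All prices are hypothetical placeholders; plug in your own quotes, and keep in mind that a real TCO also includes items such as monitoring, security reviews and idle capacity.

```python
def api_monthly_cost(tokens_per_month: float, usd_per_1m_tokens: float) -> float:
    """Pay-per-use cost of a managed LLM API."""
    return tokens_per_month / 1_000_000 * usd_per_1m_tokens


def self_hosted_monthly_cost(gpu_usd_per_hour: float, gpus: int, hours: float = 730, ops_usd: float = 0.0) -> float:
    """Fixed monthly cost of running your own GPUs 24/7 (~730 h/month) plus ops effort."""
    return gpu_usd_per_hour * gpus * hours + ops_usd


def break_even_tokens(usd_per_1m_tokens: float, fixed_monthly_usd: float) -> float:
    """Monthly token volume above which self-hosting becomes cheaper than the API."""
    return fixed_monthly_usd / usd_per_1m_tokens * 1_000_000


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    api_price = 5.0  # USD per 1M tokens
    fixed = self_hosted_monthly_cost(gpu_usd_per_hour=2.0, gpus=2, ops_usd=4_000)
    print(f"Self-hosted fixed cost: ${fixed:,.0f}/month")  # $6,920/month
    print(f"Break-even: {break_even_tokens(api_price, fixed) / 1e6:,.0f}M tokens/month")  # 1,384M
    for volume in (100e6, 2_000e6):
        print(f"{volume / 1e6:,.0f}M tokens via API: ${api_monthly_cost(volume, api_price):,.0f}")
```

Below the break-even volume the managed API is cheaper; above it, self-hosting starts to pay off, provided your team can actually run it reliably.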

A key cost driver is the number of input tokens (user prompt + context) and output tokens that you allow your LLM to process. The following techniques reduce the number of tokens and thus save costs.
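Per request, cost is roughly input tokens × input price + output tokens × output price, and many providers charge more per output token than per input token. The minimal sketch below turns that into code and compares a verbose request (long few-shot prompt, chatty answer) with a concise one. Prices and token counts are hypothetical; a real setup would count tokens with the tokenizer of the model you use (e.g. `tiktoken` for OpenAI models).

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     usd_per_1m_input: float, usd_per_1m_output: float) -> float:
    """Cost of one LLM call given token counts and per-million-token prices."""
    return (input_tokens * usd_per_1m_input + output_tokens * usd_per_1m_output) / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices (USD per 1M tokens) and token counts.
    price_in, price_out = 2.50, 10.00
    verbose = request_cost_usd(3_000, 600, price_in, price_out)  # few-shot prompt, chatty answer
    concise = request_cost_usd(800, 150, price_in, price_out)    # zero-shot prompt, short answer
    requests_per_month = 1_000_000
    print(f"verbose: ${verbose * requests_per_month:,.0f}/month")  # $13,500/month
    print(f"concise: ${concise * requests_per_month:,.0f}/month")  # $3,500/month
```

Because the price is linear in tokens, trimming both the prompt and the answer multiplies directly into savings at scale.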

Input tokens:

- Chunking of input documents

- Compression of input tokens

- Summarization of input tokens

- Test whether zero-shot prompting is good enough before adding few-shot examples

- Experiment with simple, concise prompts before adding verbose explanations and details
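Chunking lets you send only the relevant parts of a document instead of the whole thing. Below is a minimal sketch, assuming whitespace-separated words as a stand-in for tokens: it splits text into overlapping fixed-size chunks and keeps only the top-k chunks by naive word overlap with the question. The overlap scoring is a deliberately simple placeholder for a real retriever (e.g. embedding similarity), and the chunk size, overlap and k are hypothetical defaults to tune.

```python
import re
from typing import List, Set


def chunk_words(text: str, chunk_size: int = 100, overlap: int = 20) -> List[str]:
    """Split text into chunks of `chunk_size` words; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def _terms(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_chunks(question: str, chunks: List[str], k: int = 3) -> List[str]:
    """Keep the k chunks sharing the most distinct terms with the question."""
    q = _terms(question)
    return sorted(chunks, key=lambda c: len(q & _terms(c)), reverse=True)[:k]


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(250))
    chunks = chunk_words(doc, chunk_size=100, overlap=20)
    print(len(chunks), [len(c.split()) for c in chunks])  # 3 [100, 100, 90]
```

Sending two or three relevant chunks instead of a full document can cut input tokens dramatically; the overlap reduces the risk of splitting a relevant passage across a chunk boundary.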

Output tokens:

- Instruct the LLM in the prompt how many output tokens are desired

- Instruct the LLM in the prompt to answer concisely, without adding explanatory text to the expected answer

- 🗣️ call-for-contributions 🗣️

- LLMLingua by Microsoft to compress input prompts

- 🗣️ call-for-contributions 🗣️

- General Tips for Designing Prompts | Prompt Engineering Guide

- 🗣️ call-for-contributions 🗣️

Send incoming user prompts to a model router (= Python logic + SLM) that automatically chooses a suitable model to actually answer the question. Follow the Least-Model-Principle: by default, use the simplest possible logic or LM to answer a user's question, and route to more complex LMs only when necessary (a.k.a. "LLM Cascading").

- LlamaIndex Routers and LLMSingleSelector to select the best-fitting model from a range of candidate models

- NeMo Guardrails to detect intent and route accordingly

- Dynamically route logic based on input with LangChain, prompting and output parsing
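The Least-Model-Principle can be sketched as a cascade: answer with plain Python when possible, try a small model next, and escalate to a large model only if the small model's answer is judged insufficient. In this minimal sketch, `small_model`, `large_model`, the FAQ table and the confidence values are hypothetical stubs standing in for real API calls and a real quality signal (for example a verifier prompt, log-probabilities or a classifier).

```python
from typing import Callable, List, Optional, Tuple

# A model takes a prompt and returns (answer, confidence in [0, 1]).
ModelFn = Callable[[str], Tuple[str, float]]


def small_model(prompt: str) -> Tuple[str, float]:
    """Hypothetical stub for a cheap model: only 'confident' on short prompts."""
    confidence = 0.9 if len(prompt.split()) < 12 else 0.4
    return f"[small] answer to: {prompt}", confidence


def large_model(prompt: str) -> Tuple[str, float]:
    """Hypothetical stub for an expensive, more capable model."""
    return f"[large] answer to: {prompt}", 0.95


# Hypothetical FAQ table answered without any LLM call.
FAQ = {"what are your opening hours?": "We are open 9:00-17:00, Monday to Friday."}


def route(prompt: str, cascade: List[Tuple[str, ModelFn]], min_confidence: float = 0.7) -> Tuple[str, str]:
    """Return (answer, handled_by), using the cheapest option that is good enough."""
    # 1) Simplest possible logic: exact FAQ lookup, no LLM call at all.
    faq_answer: Optional[str] = FAQ.get(prompt.strip().lower())
    if faq_answer is not None:
        return faq_answer, "rules"
    # 2) Try models from cheapest to most expensive; stop at the first confident one.
    answer, handled_by = "", "none"
    for handled_by, model in cascade:
        answer, confidence = model(prompt)
        if confidence >= min_confidence:
            return answer, handled_by
    # 3) No model was confident enough: return the last (strongest) model's answer.
    return answer, handled_by


CASCADE = [("small", small_model), ("large", large_model)]

if __name__ == "__main__":
    print(route("What are your opening hours?", CASCADE)[1])  # rules
    print(route("Summarize this ticket", CASCADE)[1])  # small
    long_prompt = "Compare the tax implications of these three investment options for a freelancer in detail"
    print(route(long_prompt, CASCADE)[1])  # large
```

The savings depend on how often the cheap stages are good enough, so it is worth logging which stage handled each request.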

If your users tend to send semantically similar or repetitive prompts to your LLM system, you can reduce costs with caching. The key is a caching strategy that looks not only for exact matches but also for semantic overlap, so that you reach a decent cache hit ratio.

- 🗣️ call-for-contributions 🗣️

- GPTCache for semantic caching

- 🗣️ call-for-contributions 🗣️
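The idea behind semantic caching can be sketched in a few lines: store a representation of each prompt with its answer, and reuse the answer when a new prompt is similar enough. In this minimal sketch, a bag-of-words cosine similarity stands in for real embeddings (a production setup, e.g. with GPTCache, would use an embedding model and a vector store), and the 0.8 threshold is a hypothetical value you would tune on your own traffic to balance hit ratio against wrong answers.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def _vector(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []
        self.hits = 0
        self.misses = 0

    def lookup(self, prompt: str) -> Optional[str]:
        """Return the answer of the most similar cached prompt if it clears the threshold."""
        vec = _vector(prompt)
        best_score, best_answer = 0.0, None
        for cached_vec, answer in self.entries:
            score = _cosine(vec, cached_vec)
            if score > best_score:
                best_score, best_answer = score, answer
        if best_answer is not None and best_score >= self.threshold:
            self.hits += 1
            return best_answer
        self.misses += 1
        return None

    def get_or_call(self, prompt: str, llm: Callable[[str], str]) -> str:
        """Serve from the cache when possible; otherwise call the LLM and store the result."""
        cached = self.lookup(prompt)
        if cached is not None:
            return cached
        answer = llm(prompt)
        self.entries.append((_vector(prompt), answer))
        return answer


if __name__ == "__main__":
    calls = []

    def fake_llm(prompt: str) -> str:  # hypothetical stub standing in for a real API call
        calls.append(prompt)
        return f"answer #{len(calls)}"

    cache = SemanticCache(threshold=0.8)
    print(cache.get_or_call("How do I reset my password?", fake_llm))  # answer #1 (miss)
    print(cache.get_or_call("how do i reset my password", fake_llm))   # answer #1 (hit)
    print(cache.get_or_call("What is your refund policy?", fake_llm))  # answer #2 (miss)
    print(f"LLM calls: {len(calls)}, hits: {cache.hits}, misses: {cache.misses}")
```

The linear scan is fine for a sketch; with many entries you would switch to an approximate nearest-neighbour index.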

Make sure a single customer cannot flood your LLM with requests and skyrocket your bill. Track the number of prompts per user per month and either enforce a hard limit on the number of prompts or throttle (i.e. slow down) responses when a user hits the limit. In addition, detect unnatural or sudden spikes in user requests (similar to DDoS attacks, users or competitors can harm your business by sending tons of requests to your model).

- Simple tracking and rate limiting logic can be implemented in native Python

- 🗣️ call-for-contributions 🗣️
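Here is a minimal, in-memory sketch of both ideas in native Python: a monthly prompt quota per user and a sliding-window check that flags sudden request spikes. The quota, window length and spike limit are hypothetical values, and the class name `UsageGuard` is illustrative; a production system would persist the counters (e.g. in a database or Redis) and decide whether to reject, throttle or alert.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple


class UsageGuard:
    def __init__(self, monthly_quota: int = 1000, window_seconds: float = 60.0, max_per_window: int = 20) -> None:
        self.monthly_quota = monthly_quota
        self.window_seconds = window_seconds
        self.max_per_window = max_per_window
        # (user, "YYYY-MM") -> number of accepted prompts in that month
        self.monthly_counts: Dict[Tuple[str, str], int] = defaultdict(int)
        # user -> timestamps of accepted prompts inside the sliding window
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, user_id: str, now: Optional[float] = None) -> str:
        """Return 'allow', 'spike' or 'quota_exceeded' for one incoming prompt."""
        now = time.time() if now is None else now
        month = time.strftime("%Y-%m", time.gmtime(now))
        # Sliding window: drop timestamps older than window_seconds.
        window = self.recent[user_id]
        while window and now - window[0] > self.window_seconds:
            window.popleft()
        if len(window) >= self.max_per_window:
            return "spike"  # unnaturally many requests in a short time
        if self.monthly_counts[(user_id, month)] >= self.monthly_quota:
            return "quota_exceeded"  # hard limit (or throttle instead)
        window.append(now)
        self.monthly_counts[(user_id, month)] += 1
        return "allow"


if __name__ == "__main__":
    guard = UsageGuard(monthly_quota=5, window_seconds=60, max_per_window=3)
    t0 = 1_700_000_000.0
    print([guard.check("alice", now=t0 + i) for i in range(4)])  # 3x allow, then spike
    print(guard.check("alice", now=t0 + 120))  # allow: window has passed (4th counted prompt)
    print(guard.check("alice", now=t0 + 240), guard.check("alice", now=t0 + 360))  # allow quota_exceeded
```

Rejected requests are not counted against the quota here; whether they should be is a policy decision.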

"You can't improve what you don't measure": make sure you know where your costs come from. Is it a few super-active users? Is it a premium model? Something else?
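A minimal cost-attribution sketch in native Python: log every LLM call with user, model and token counts, then aggregate spend along any dimension. The price table and model names are hypothetical; in practice you would take token counts from the usage metadata that many LLM APIs return with each response, and persist the log instead of keeping it in memory.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical prices in USD per 1M tokens: (input, output).
PRICES: Dict[str, Tuple[float, float]] = {
    "small-model": (0.15, 0.60),
    "premium-model": (5.00, 15.00),
}


@dataclass
class CallRecord:
    user_id: str
    model: str
    input_tokens: int
    output_tokens: int

    @property
    def cost_usd(self) -> float:
        price_in, price_out = PRICES[self.model]
        return (self.input_tokens * price_in + self.output_tokens * price_out) / 1_000_000


class CostTracker:
    def __init__(self) -> None:
        self.records: List[CallRecord] = []

    def log(self, user_id: str, model: str, input_tokens: int, output_tokens: int) -> None:
        self.records.append(CallRecord(user_id, model, input_tokens, output_tokens))

    def total_by(self, field: str) -> Dict[str, float]:
        """Sum costs grouped by a CallRecord field ('user_id' or 'model'), largest first."""
        totals: Dict[str, float] = defaultdict(float)
        for record in self.records:
            totals[getattr(record, field)] += record.cost_usd
        return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    tracker = CostTracker()
    tracker.log("alice", "premium-model", 2_000, 500)
    tracker.log("bob", "small-model", 1_000, 200)
    tracker.log("alice", "small-model", 1_500, 300)
    for field in ("user_id", "model"):
        print(field, {k: round(v, 6) for k, v in tracker.total_by(field).items()})
```

Sorting by spend puts the biggest cost drivers, whether a heavy user or a premium model, at the top of the report.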

- Simple tracking and cost attribution logic can be implemented in native Python

- 🗣️ call-for-contributions 🗣️

- 🗣️ call-for-contributions 🗣️

- Avoid sending endless API calls to your LLM during development and manual testing.

- Avoid sending automated API calls to your LLM from CI/CD workflows, integration tests, etc.

- We’re happy to review and accept your Pull Request on LLM cost reduction techniques and tools.

- We plan to divide the content into subpages to further structure all chapters.