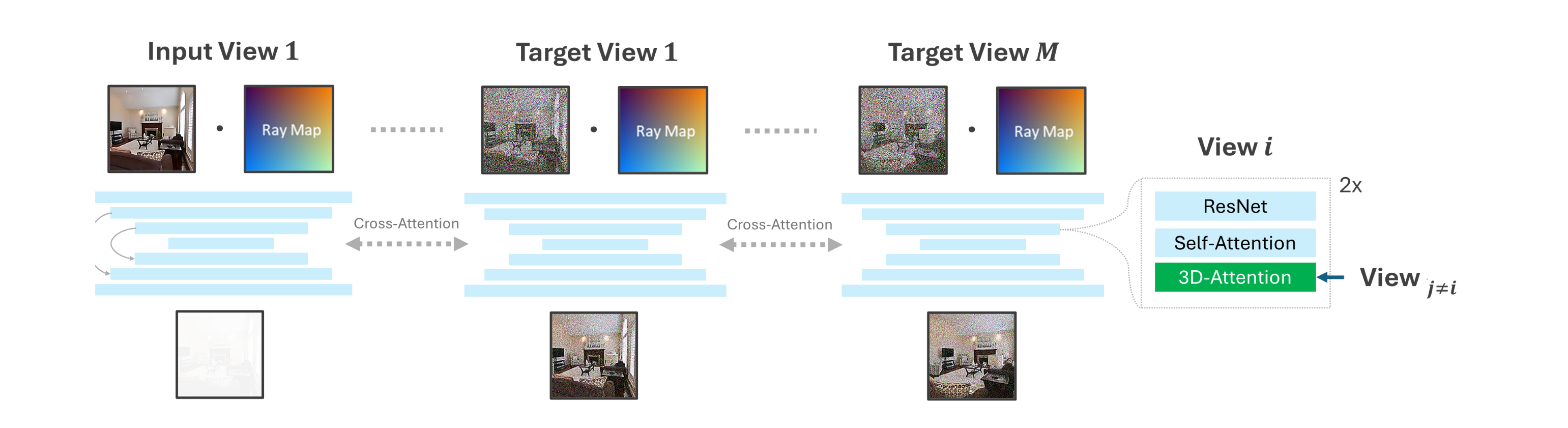

We propose an open-source multi-view diffusion model trained on the RealEstate10K dataset. Its architecture follows a structure similar to that of CAT3D.

This is an extension of the codebase for

MET3R: Measuring Multi-View Consistency in Generated Images

Mohammad Asim, Christopher Wewer, Thomas Wimmer, Bernt Schiele, and Jan Eric Lenssen

Check out the project website here.

To get started, create a conda environment:

conda create -n diffsplat python=3.10

conda activate diffsplat

pip install torch==2.4.1 torchvision==0.19.1 torchaudio==2.4.1 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

Please move all dataset directories into a newly created datasets folder in the project root directory or modify the root path as part of the dataset config files in config/dataset.

For experiments on RealEstate10k, we use the same dataset version and preprocessing into chunks as pixelSplat. Please refer to their codebase here for information about how to obtain the data.

Trained MV-LDM checkpoints for RealEstate10k are available on Hugging Face at asimbluemoon/mvldm-1.0.
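One possible way to fetch the checkpoint repository is the `huggingface_hub` Python package. The sketch below is an assumption rather than part of this codebase: the helper name `download_mvldm_checkpoint` and the target folder `checkpoints/mvldm-1.0` are hypothetical, and the package must be installed separately (`pip install huggingface_hub`).

```python
REPO_ID = "asimbluemoon/mvldm-1.0"  # repository named in this README


def download_mvldm_checkpoint(local_dir: str = "checkpoints/mvldm-1.0") -> str:
    """Download all files of the checkpoint repository and return the local folder.

    Hypothetical helper: neither the function name nor the default folder
    comes from the MV-LDM codebase.
    """
    # Imported lazily so that merely defining the helper does not require the package.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)


if __name__ == "__main__":
    # Requires network access to Hugging Face.
    print(download_mvldm_checkpoint())
```

The file names inside the repository are not listed here, so inspect the downloaded folder and pass the path of the checkpoint file to `checkpointing.load`.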

The main entry point is src/scripts/generate_mvldm.py. Call it via:

Important

Sampling requires a GPU with at least 16 GB of VRAM.

python -m src.scripts.generate_mvldm +experiment=baseline mode=test dataset.root="<root-path-to-re10k-dataset>" scene_id="<scene-id>" checkpointing.load="<path-to-checkpoint>" dataset/view_sampler=evaluation dataset.view_sampler.index_path=assets/evaluation_index/re10k_video.json test.sampling_mode=anchored test.num_anchors_views=4 test.output_dir=./outputs/mvldm

Note

scene_id="<scene-id>" accepts either an integer index, which selects a scene by its position in assets/evaluation_index/re10k_video.json, or the sequence ID as a string, e.g. "2d3f982ada31489c".
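To illustrate the two accepted forms, here is a minimal sketch of how such a lookup could work. It is not the repository's implementation. It assumes the index file is a JSON object keyed by sequence ID and that integer indices are zero-based positions in file order; the function `resolve_scene_id` and the toy index (apart from the sequence ID quoted in this README) are hypothetical.

```python
from typing import Mapping, Union


def resolve_scene_id(scene_id: Union[int, str], index: Mapping[str, object]) -> str:
    """Map an integer position or a string sequence ID to a sequence ID.

    Assumption: `index` is keyed by sequence ID, in the same order as the
    evaluation index file.
    """
    keys = list(index)  # Python dicts preserve insertion order
    if isinstance(scene_id, int):
        if not 0 <= scene_id < len(keys):
            raise IndexError(f"scene index {scene_id} out of range (0..{len(keys) - 1})")
        return keys[scene_id]
    if scene_id not in index:
        raise KeyError(f"unknown sequence ID: {scene_id!r}")
    return scene_id


# Toy stand-in for json.load(open("assets/evaluation_index/re10k_video.json")).
toy_index = {"aaaaaaaaaaaaaaaa": None, "2d3f982ada31489c": None}

by_position = resolve_scene_id(1, toy_index)
by_name = resolve_scene_id("2d3f982ada31489c", toy_index)
```

Under these assumptions both calls select the same scene.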

scene_id=25

scene_id="2d3f982ada31489c"

To limit the number of frames in a given sequence, add the test.limit_frames argument to the above command as an integer, e.g.,

test.limit_frames=80

To set the number of DDIM sampling steps, use the argument model.scheduler.num_inference_steps, e.g.,

model.scheduler.num_inference_steps=25

Our code supports multi-GPU training. The configured batch size (data_loader.train.batch_size) is the per-GPU batch size.

Important

Training requires a GPU with at least 40 GB of VRAM.

python -m src.main +experiment=baseline mode=train dataset.root="<root-path-to-re10k-dataset>" hydra.run.dir="<runtime-dir>" hydra.job.name=train

Warning

In case of memory issues during training, we recommend lowering the batch size by appending data_loader.train.batch_size="<batch-size>" to the above command.
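Because the batch size is set per GPU, lowering it also lowers the total number of samples per optimization step. The sketch below shows the arithmetic, assuming plain data-parallel training in which every GPU processes its own batch. The function name and the example numbers are hypothetical and are not taken from the training configuration.

```python
def effective_batch_size(per_gpu_batch_size: int, num_gpus: int) -> int:
    """Total samples per optimization step under data-parallel training."""
    if per_gpu_batch_size < 1 or num_gpus < 1:
        raise ValueError("both arguments must be positive integers")
    return per_gpu_batch_size * num_gpus


# Hypothetical example: halving the per-GPU batch size on 4 GPUs.
before = effective_batch_size(per_gpu_batch_size=8, num_gpus=4)
after = effective_batch_size(per_gpu_batch_size=4, num_gpus=4)
print(before, after)
```

If the effective batch size changes noticeably, hyperparameters such as the learning rate may need to be revisited.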

When running training as a job chain on Slurm or resuming training, always set hydra.run.dir="<runtime-dir>" to the correct path for each task.

If you are planning to use MV-LDM in your work, consider citing it as follows.

@misc{asim24met3r,

title = {MET3R: Measuring Multi-View Consistency in Generated Images},

author = {Asim, Mohammad and Wewer, Christopher and Wimmer, Thomas and Schiele, Bernt and Lenssen, Jan Eric},

booktitle = {arXiv},

year = {2024},

}