Demonstrates the body tracking features of the Azure Kinect DK camera. Watch this YouTube video to see the features in action.

Read the accompanying Medium article for a deeper understanding of the APIs and geometry involved.

Note: This project has been developed on Ubuntu 20.04.

Step 1: Get the Azure Kinect DK camera

The general steps for installing the SDKs are as outlined in the Microsoft documentation, with a couple of hacks to make things work on Ubuntu 20.04:

- Use the 18.04 package repository, even though the OS is 20.04.

- Install older versions of the tools and libraries, as the latest versions of the sensor and body tracking SDKs do not seem to be compatible with 20.04.

$ curl -sSL https://packages.microsoft.com/keys/microsoft.asc | sudo apt-key add -

$ sudo apt-add-repository https://packages.microsoft.com/ubuntu/18.04/prod

$ curl -sSL https://packages.microsoft.com/config/ubuntu/18.04/prod.list | sudo tee /etc/apt/sources.list.d/microsoft-prod.list

$ sudo apt-get update

$ sudo apt install libk4a1.3-dev

$ sudo apt install libk4abt1.0-dev

$ sudo apt install k4a-tools=1.3.0

- Verify the sensor library by launching the camera viewer:

$ k4aviewer

- GNU C Compiler (gcc 9.3.0+)

- cmake

$ sudo apt-get install cmake

- ninja-build

$ sudo apt-get install ninja-build

- Eigen3

$ sudo apt-get install libeigen3-dev

- Obtain an Azure Vision subscription and store endpoint and key in AZURE_VISION_ENDPOINT and AZURE_VISION_KEY environment variables respectively.
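A quick way to confirm that the two environment variables are visible to a C++ program is to read them with `std::getenv`. The sketch below is only an illustration: `VisionConfig`, `read_env` and `load_vision_config` are hypothetical names and are not taken from the bones sources.

```cpp
#include <cstdlib>
#include <string>

// Hypothetical container for the two settings (not part of bones).
struct VisionConfig {
    std::string endpoint;
    std::string key;
};

// Copies the named environment variable into `out`.
// Returns false if the variable is unset or empty.
bool read_env(const char* name, std::string& out) {
    const char* value = std::getenv(name);
    if (value == nullptr || *value == '\0') {
        return false;
    }
    out = value;
    return true;
}

// Fills `config` from AZURE_VISION_ENDPOINT and AZURE_VISION_KEY.
// Returns false if either variable is missing, so the caller can
// report the problem before making any request.
bool load_vision_config(VisionConfig& config) {
    return read_env("AZURE_VISION_ENDPOINT", config.endpoint) &&
           read_env("AZURE_VISION_KEY", config.key);
}
```

Failing early when either variable is missing tends to be easier to diagnose than an authentication error returned later by the service.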

$ git clone --recursive https://github.com/mpdroid/bones

cilantro will also be cloned as a submodule in products/extern/cilantro.

$ cd bones

$ mkdir build

$ cd build

$ cmake .. -GNinja

$ ninja

$ ./bin/bones

If all has gone well, you should see the instructions below flash by before the camera display is rendered on your monitor:

- Press 'L' for light sabers...

- Press 'O' for object detection; Point with right hand to trigger detection...

- Press 'W' for air-writing; raise left hand above your head and start writing with your right...

- Press 'J' to display joint information...
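The air-writing trigger described above (left hand raised above the head) can be approximated by comparing joint heights. The following is a minimal sketch and not the check used in bones: `Position` and `is_hand_above_head` are hypothetical names, and it assumes joint positions are given in millimetres in the Azure Kinect depth camera frame, where the Y axis points down, so "above" means a smaller Y value.

```cpp
// Hypothetical joint position in the depth camera frame, in millimetres.
// Assumption: +X is right, +Y is down, +Z is away from the camera.
struct Position {
    float x;
    float y;
    float z;
};

// Returns true when the hand is higher than the head by at least
// `margin_mm`. Because +Y points down, "higher" means a smaller y.
// The margin (an arbitrary illustrative default) avoids flicker when
// the hand hovers at head height.
bool is_hand_above_head(const Position& hand, const Position& head,
                        float margin_mm = 50.0f) {
    return hand.y < head.y - margin_mm;
}
```

In practice the result would probably need to hold for several consecutive frames before being treated as a gesture, since individual joint estimates can be noisy.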

The Azure Kinect DK is a camera device with four major hardware components:

- 12MP RGB camera

- 1MP Depth camera

- Microphone Array

- Motion sensor that includes gyroscope and accelerometer.

In this project, we make use of the RGB camera, the Depth camera, the Sensor and Body Tracking SDKs and Azure Cognitive Services to enhance how a person can interact with objects around them in 3-Dimensional space.

- Sensor API methods are wrapped inside

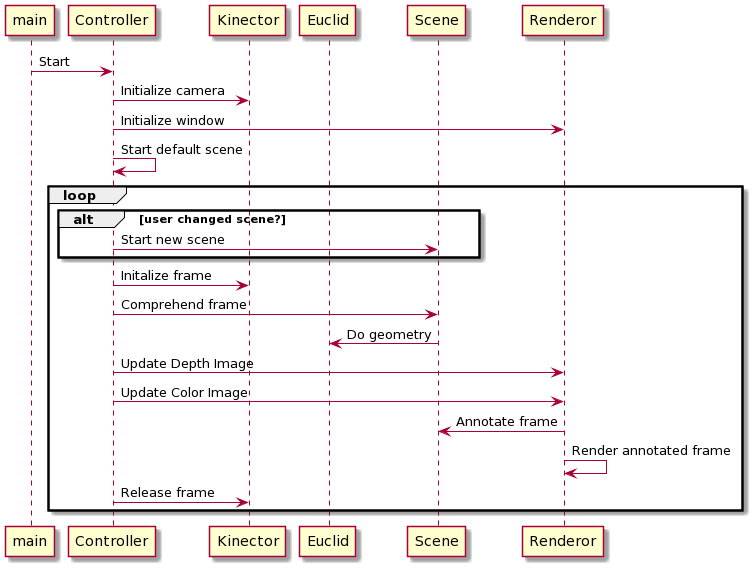

Kinector. Euclidwraps body tracking API and implements the geometry.Renderorhandles presenting camera frames with annotations on application window.*Sceneclasses implement scene comprehension and annotation.

The project applies this basic framework to implement a few body tracking applications:

- Displaying Joint Information: Visualizes the body tracking information by showing the depth position and orientation of a few key joints, directly on the color camera video feed.

- Light Sabers: Attaches light sabers to the hand of each body in the video, by making use of elbow, hand and thumb position and orientation. Demonstrates usage in augmented reality games without the need for expensive controllers physically attached to the human body.

- Air Writing: Lets the subject create letters or other artwork in the space around them by simply moving their hands. Demonstrates how to recognize gestures and use them to direct virtual or real-world actions.

- Thing Finder: Recognizes objects being pointed at by the subject. This demonstrates how to combine body tracking with point cloud geometry and Azure cognitive services to create powerful 3-D vision AI applications.

- and others...
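Applications such as Thing Finder need to work out where a pointing arm is aimed. One common building block is ray-plane intersection: cast a ray from one joint through another and find where it meets a plane. The sketch below uses plain structs rather than Eigen so that it stands alone; `Vec3` and `intersect_ray_plane` are hypothetical names, and this is not necessarily how bones implements pointing.

```cpp
#include <cmath>

// Minimal 3-D vector for illustration (the project itself uses Eigen3).
struct Vec3 {
    double x;
    double y;
    double z;
};

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(const Vec3& a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Intersects the ray origin + t * direction (t >= 0) with the plane that
// passes through `plane_point` and has normal `plane_normal`.
// Writes the intersection to `hit` and returns true on success.
// Returns false if the ray is parallel to the plane or the plane lies
// behind the ray origin.
bool intersect_ray_plane(const Vec3& origin, const Vec3& direction,
                         const Vec3& plane_point, const Vec3& plane_normal,
                         Vec3& hit) {
    const double denom = dot(plane_normal, direction);
    if (std::fabs(denom) < 1e-9) {
        return false;  // ray runs parallel to the plane
    }
    const double t = dot(plane_normal, plane_point - origin) / denom;
    if (t < 0.0) {
        return false;  // intersection is behind the origin
    }
    hit = origin + direction * t;
    return true;
}
```

For a pointing gesture, `origin` could be the elbow position and `direction` the vector from elbow to hand; the plane could be a wall or table surface estimated from the point cloud.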

The project is designed to evolve as a platform. The goal is to make it easy to create new body tracking applications by simply implementing the AbstractScene interface.
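To give a feel for what a pluggable scene design can look like, here is a small stand-alone sketch. It is purely illustrative: `ExampleSceneInterface`, `Frame`, `JointCountScene` and `run_scenes` are hypothetical names invented for this example, and the actual AbstractScene interface in bones may declare entirely different methods.

```cpp
#include <memory>
#include <string>
#include <vector>

// Hypothetical per-frame data handed to each scene.
struct Frame {
    int joint_count;
};

// Hypothetical stand-in for a scene interface: each scene inspects a
// frame and returns text annotations to draw over the camera image.
class ExampleSceneInterface {
public:
    virtual ~ExampleSceneInterface() = default;
    virtual std::string name() const = 0;
    virtual std::vector<std::string> annotate(const Frame& frame) = 0;
};

// A trivial scene that reports how many joints were tracked.
class JointCountScene : public ExampleSceneInterface {
public:
    std::string name() const override { return "joint-count"; }
    std::vector<std::string> annotate(const Frame& frame) override {
        return {"joints tracked: " + std::to_string(frame.joint_count)};
    }
};

// Runs every registered scene on one frame and collects the labels,
// prefixing each with the name of the scene that produced it.
std::vector<std::string> run_scenes(
    const std::vector<std::unique_ptr<ExampleSceneInterface>>& scenes,
    const Frame& frame) {
    std::vector<std::string> labels;
    for (const auto& scene : scenes) {
        for (const auto& label : scene->annotate(frame)) {
            labels.push_back(scene->name() + ": " + label);
        }
    }
    return labels;
}
```

With a design along these lines, adding a new application means writing one more subclass and registering it, while capture, tracking and rendering stay unchanged.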

- Azure-Kinect-Sensor-SDK - Basics of camera capture and rendering in 2D and 3D

- Azure-Kinect-Samples - Advanced examples including body tracking

- kzampog/cilantro - Point cloud manipulation including clustering

- ocornut/imgui - Rendering depth and camera images with drawing overlays

- deercoder/cpprestsdk-example - Using cpprestsdk to consume Azure vision services

- Note on Ray-Plane intersection - by Sam Symons

- Zampogiannis, Konstantinos and Fermuller, Cornelia and Aloimonos, Yiannis (2018). cilantro, A Lean and Efficient Library for Point Cloud Data Processing. kzampog/cilantro

- Symons, Sam. Note on Ray-Plane intersection