Search in Docs is a web-based interface for searching local documents for answers using Llama 2 or other models supported by LangChain. It can run on the CPU, the GPU, or a mix of both.

It is based on the Llama-2-Open-Source-LLM-CPU-Inference repo and this article.

This repo adds the following updates:

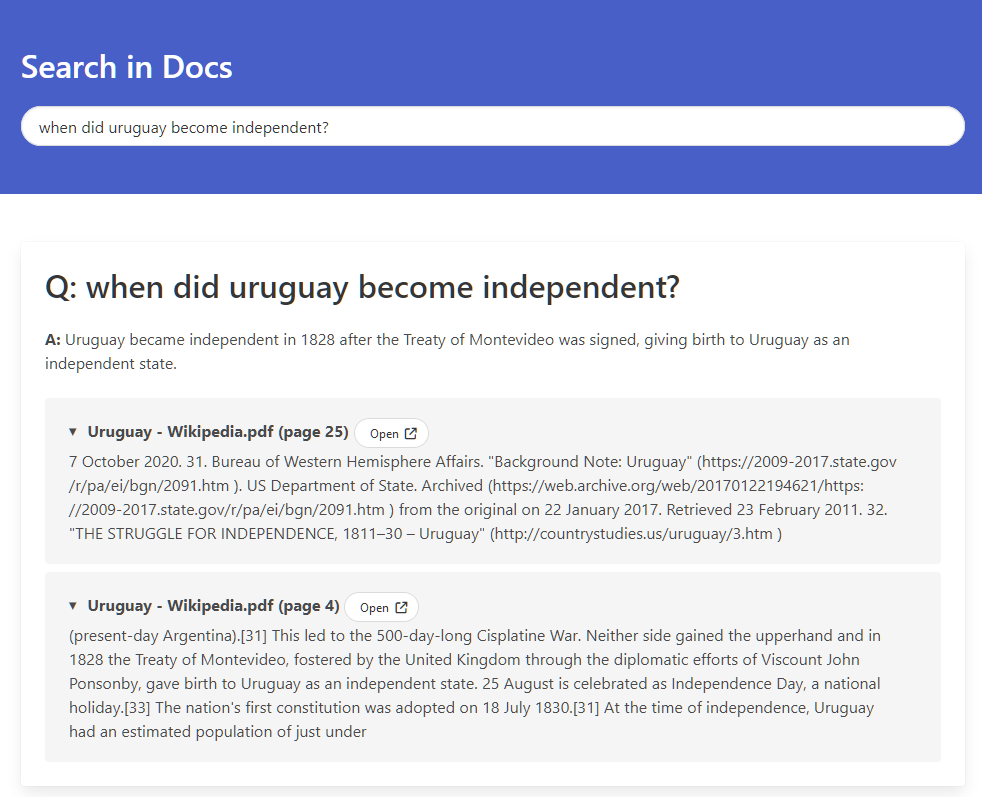

- A new web UI that lets you search your documents endlessly, without reloading the entire model every time.

- The ability to expand the references and open the source file at the specific page (works with PDFs by appending `#page=<page>` to the link).
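The page jump described above boils down to turning a source path and a page number into a link. The following is a minimal sketch, not the repo's code: `source_link` is a hypothetical helper, and it assumes the page number comes from the retrieved chunk's metadata. Some PDF loaders number pages from 0, while the `#page=` fragment understood by browser PDF viewers is 1-based, so the helper shifts the number by default.

```python
from pathlib import Path
from typing import Optional


def source_link(path: str, page: Optional[int] = None, zero_based: bool = True) -> str:
    """Build a file:// URL for a source document, jumping to a page for PDFs.

    Hypothetical helper: `page` is assumed to come from the chunk metadata.
    Set zero_based=False if your loader already stores 1-based page numbers.
    """
    # as_uri() needs an absolute path; it also percent-encodes spaces.
    url = Path(path).resolve().as_uri()
    # Only PDFs understand the #page= fragment; other files get a plain link.
    if page is not None and Path(path).suffix.lower() == ".pdf":
        viewer_page = page + 1 if zero_based else page
        url += f"#page={viewer_page}"
    return url


if __name__ == "__main__":
    # A chunk from the third page (0-based page 2) of the sample document.
    print(source_link("data/Uruguay - Wikipedia.pdf", page=2))
```

The printed link ends in `Uruguay%20-%20Wikipedia.pdf#page=3`; the directory prefix depends on where you run it.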

- Install the required packages (`pip install -r requirements.txt`). If you want to use CUDA (GPUs), make sure to install PyTorch with CUDA support.

- The `data` directory needs to hold all the files used for indexing and searching. Replace the file `Uruguay - Wikipedia.pdf` with your own `.txt` or `.pdf` files.

- Download the LLM into `models/`. The original repo suggests downloading the models from this Hugging Face page to run them on the CPU. If you don't know which one to use, start with this one, which worked for me.

- Tweak a few parameters in `config.yml`:
  - `MODEL_BIN_PATH`: The path to the model.
  - `DEVICE`: Set to `cuda` to use the GPU; otherwise use `cpu`.
  - `GPU_LAYERS`: The number of model layers offloaded to the GPU. Try a few different values; higher is better, since more of the model then runs on the GPU. How many layers fit depends on the GPU's VRAM. If the GPU runs out of VRAM, the setup fails with an error, and you can lower the number. My setup has a 3060 Ti and runs 30 layers without problems.
  - `MODEL_TYPE`: If you are running a non-Llama model, change the name here.

- Generate the indexed database of your documents by running `python db_build.py`.

- Run the command-line mode with `python endless.py`, or the web UI with `flask run`.

- If using the web UI, go to `localhost:5000`.
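Most setup mistakes in the steps above (an empty `data` folder, a wrong model path, a mistyped `DEVICE`) only surface once loading fails, so a quick check beforehand can save a slow round trip. This is a hypothetical sketch, not part of the repo: `preflight` takes a plain dict standing in for the parsed `config.yml` (reading the YAML itself would need a parser such as PyYAML), and it assumes documents sit at the top level of `data/`.

```python
from pathlib import Path

SUPPORTED_SUFFIXES = {".txt", ".pdf"}  # file types the README says can be indexed
VALID_DEVICES = {"cpu", "cuda"}        # values the README lists for DEVICE


def preflight(config: dict, data_dir: str = "data") -> list[str]:
    """Return a list of setup problems; an empty list means it looks ready.

    Hypothetical check: `config` stands in for the contents of config.yml.
    """
    problems = []
    # Only the top level of data_dir is scanned (an assumption of this sketch).
    docs = [p for p in Path(data_dir).glob("*") if p.suffix.lower() in SUPPORTED_SUFFIXES]
    if not docs:
        problems.append(f"no .txt or .pdf files found in {data_dir}/")
    if not Path(config.get("MODEL_BIN_PATH", "")).is_file():
        problems.append("MODEL_BIN_PATH does not point to an existing file")
    if config.get("DEVICE") not in VALID_DEVICES:
        problems.append("DEVICE must be 'cpu' or 'cuda'")
    layers = config.get("GPU_LAYERS", 0)
    if not isinstance(layers, int) or layers < 0:
        problems.append("GPU_LAYERS must be a non-negative integer")
    return problems


if __name__ == "__main__":
    # Placeholder values; in practice these come from config.yml.
    config = {"MODEL_BIN_PATH": "models/your-model.bin", "DEVICE": "cuda", "GPU_LAYERS": 30}
    for problem in preflight(config):
        print("setup problem:", problem)
```

The check deliberately stops short of loading the model; the command-line app remains the real test that the model fits on the GPU.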

Note: Run the command-line app first to test the initial setup. Once it works (the model loads properly on the GPU), you can try the web UI.
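The `GPU_LAYERS` advice (raise the value until the GPU runs out of VRAM, then back off) amounts to searching for the largest layer count that still loads. Since each trial load can be slow, a binary search needs far fewer attempts than stepping down one layer at a time. A minimal sketch, assuming that if n layers fit then any smaller count fits too; `max_gpu_layers` and the `fits` callback are hypothetical, and wiring `fits` to an actual model load is left out.

```python
from typing import Callable


def max_gpu_layers(fits: Callable[[int], bool], upper: int) -> int:
    """Return the largest layer count in [0, upper] for which fits(n) is True.

    Assumes monotonicity: if n layers fit in VRAM, fewer layers fit as well.
    `fits` is a hypothetical callback, e.g. one that loads the model with
    GPU_LAYERS=n and reports whether it loaded without running out of VRAM.
    """
    lo, hi = 0, upper  # 0 layers (CPU only) is assumed to always work
    while lo < hi:
        mid = (lo + hi + 1) // 2  # round up so the loop always makes progress
        if fits(mid):
            lo = mid       # mid layers fit: the answer is mid or higher
        else:
            hi = mid - 1   # mid layers do not fit: the answer is below mid
    return lo


if __name__ == "__main__":
    # Simulated stand-in for a real load attempt, with made-up numbers:
    # each layer needs 0.25 GB and 8 GB of VRAM are free.
    def fake_fits(n: int) -> bool:
        return n * 0.25 <= 8.0

    print(max_gpu_layers(fake_fits, upper=40))  # → 32
```

With an upper bound of 40, this takes at most six trial loads instead of up to forty.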

Read more in the original repo, which contains the single-use command-line interface.