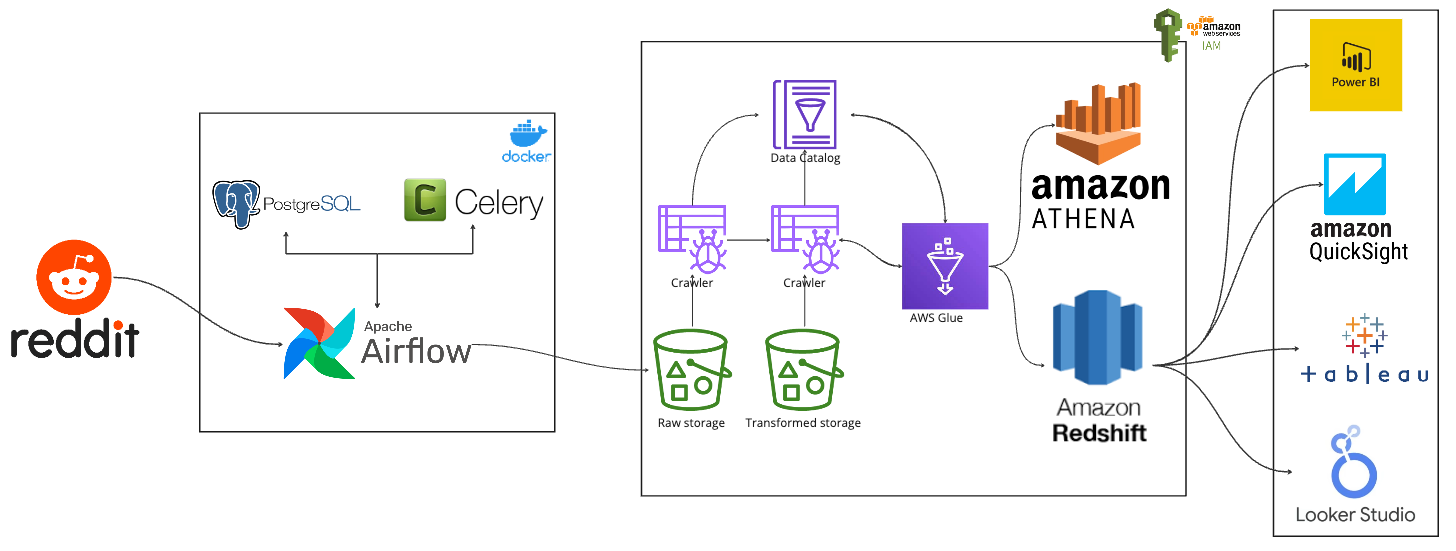

This project establishes an end-to-end data engineering solution to orchestrate the extraction, transformation, and loading (ETL) of Reddit data into a Redshift data warehouse. Leveraging Apache Airflow, Celery, PostgreSQL, Amazon S3, AWS Glue, Amazon Athena, and Amazon Redshift, the pipeline seamlessly processes data from Reddit's API through various stages for analytical purposes.

- Reddit API: Serves as the primary data source.

- Apache Airflow & Celery: Orchestrates the ETL process and efficiently manages task distribution.

- PostgreSQL: Temporary storage for intermediate data and metadata management.

- Amazon S3: Functions as the repository for raw data.

- AWS Glue: Performs data cataloging and ETL jobs.

- Amazon Athena: Facilitates SQL-based data transformation.

- Amazon Redshift: Serves as the data warehousing solution for analytics and querying.

## 🛠️ Prerequisites

Ensure the following prerequisites are met before setting up the system:

- AWS Account with appropriate permissions for S3, Glue, Athena, and Redshift.

- Reddit API credentials.

- Docker installed.

- Python 3.9 or higher.

- Clone the repository: `git clone https://github.com/airscholar/RedditDataEngineering.git`

- Create a virtual environment: `python3 -m venv venv`

- Activate the virtual environment: `source venv/bin/activate`

- Install the dependencies: `pip install -r requirements.txt`

- Rename the example configuration file, then fill in your credentials: `mv config/config.conf.example config/config.conf`

- Start the containers: `docker-compose up -d`

- Launch the Airflow web UI: `open http://localhost:8080` (or visit that URL in a browser)

Reddit ETL:

- Install the required dependencies by running `pip install -r requirements.txt`.

- Execute the `etl_reddit_pipeline` DAG in Apache Airflow using the provided DAG definition.

- The `reddit_extraction` task within the DAG extracts Reddit posts from the specified subreddit (`dataengineering` by default) for the specified time period and limit.

- The extracted data is then transformed by the `transform_data` function, which applies the necessary data type conversions.

- The transformed data is written to a CSV file, named after the execution timestamp, and stored in the specified output path.

AWS ETL:

- Ensure you have valid AWS credentials with appropriate permissions.

- Install the required dependencies by running `pip install -r requirements.txt`.

- Execute the `etl_reddit_pipeline` DAG in Apache Airflow to run the `reddit_extraction` task.

- The `upload_s3` task within the DAG uploads the CSV file containing Reddit data to an Amazon S3 bucket.

- Ensure that the `AWS_ACCESS_KEY_ID` and `AWS_ACCESS_KEY` constants in the `utils.constants` module are set with your AWS credentials.

- Run the `upload_s3_pipeline` script, which connects to the specified S3 bucket and uploads the CSV file to the 'raw' folder.

- The Reddit data is now stored in the Amazon S3 bucket, ready for further processing and analytics.
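One way the credential constants mentioned above could be populated is by parsing `config/config.conf` with the standard-library `configparser`. The sketch below is only an illustration: the `[aws]` section, its option names, the `AWS_BUCKET_NAME` key, and the `load_aws_constants` helper are all assumptions, not the repository's actual layout.

```python
import configparser

# Hypothetical contents of config/config.conf. The section and option names
# are assumptions made for this sketch, not taken from the repository.
EXAMPLE_CONFIG = """
[aws]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
aws_bucket_name = your-bucket-name
"""


def load_aws_constants(config_text: str) -> dict:
    """Parse configuration text and return the AWS settings as a dict."""
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    return {
        "AWS_ACCESS_KEY_ID": parser.get("aws", "aws_access_key_id"),
        "AWS_ACCESS_KEY": parser.get("aws", "aws_secret_access_key"),
        "AWS_BUCKET_NAME": parser.get("aws", "aws_bucket_name"),
    }


constants = load_aws_constants(EXAMPLE_CONFIG)
print(sorted(constants))
```

Keeping credentials in a configuration file that is excluded from version control, rather than hard-coding them in a module, avoids committing secrets by accident.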

Reddit ETL:

- The data source for this ETL process is Reddit, accessed through the Reddit API.

- The `connect_reddit` function establishes a connection to Reddit using the provided client ID, client secret, and user agent.

- The `extract_posts` function fetches Reddit posts from a specified subreddit using the established Reddit connection.
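The extraction step described above can be sketched roughly as follows. This is a minimal illustration, not the repository's code: it assumes the connection object behaves like a `praw.Reddit` instance, and the `POST_FIELDS` selection is an assumption based on the columns named elsewhere in this README.

```python
from typing import Any, Dict, Iterable, List

# Fields kept from each post. This selection is an assumption based on the
# columns this README mentions, not the repository's actual field list.
POST_FIELDS = ("title", "score", "num_comments", "created_utc", "over_18", "edited")


def extract_posts(
    reddit_instance: Any, subreddit: str, time_filter: str, limit: int
) -> List[Dict[str, Any]]:
    """Fetch top posts from a subreddit and keep only the fields of interest.

    `reddit_instance` is expected to behave like a praw.Reddit object, so that
    reddit_instance.subreddit(name).top(time_filter=..., limit=...) yields posts.
    """
    posts: Iterable[Any] = reddit_instance.subreddit(subreddit).top(
        time_filter=time_filter, limit=limit
    )
    return [{field: getattr(post, field) for field in POST_FIELDS} for post in posts]
```

With PRAW installed, the connection itself would typically be created with `praw.Reddit(client_id=..., client_secret=..., user_agent=...)` and passed in as `reddit_instance`.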

AWS ETL:

- The data source for the AWS ETL process is the output of the Reddit ETL stored in a CSV file.

- The `upload_s3_pipeline` function uploads the extracted Reddit data to an Amazon S3 bucket for further processing.

Reddit ETL:

- The `transform_data` function performs data transformation on the extracted Reddit posts:

  - Converts the 'created_utc' field to a datetime format.

  - Converts the 'over_18' field to a boolean.

  - Converts the 'edited' field to a boolean.

  - Converts 'num_comments' and 'score' to integer types.

  - Converts 'title' to a string.

AWS ETL:

- No specific data processing is performed in the AWS ETL pipeline; it focuses on uploading data to S3 for storage.
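The type conversions listed for the Reddit ETL `transform_data` step can be illustrated with a plain-Python sketch. It operates on a list of dicts and uses only the standard library; the repository may well work on a pandas DataFrame instead, so treat the `transform_post` helper and the sample record as assumptions.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List


def transform_post(post: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of one post with the type conversions applied."""
    return {
        **post,
        # Creation time is treated as seconds since the Unix epoch (UTC).
        "created_utc": datetime.fromtimestamp(
            float(post["created_utc"]), tz=timezone.utc
        ),
        "over_18": bool(post["over_18"]),
        # `edited` may be False or an edit timestamp; both collapse to a bool.
        "edited": bool(post["edited"]),
        "num_comments": int(post["num_comments"]),
        "score": int(post["score"]),
        "title": str(post["title"]),
    }


def transform_data(posts: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Apply transform_post to every extracted post."""
    return [transform_post(post) for post in posts]


# Made-up sample record with deliberately loose types.
sample = [
    {
        "title": 42,
        "score": "17",
        "num_comments": 3.0,
        "created_utc": 1697932800,
        "over_18": 0,
        "edited": 1697936400.0,
    }
]
result = transform_data(sample)[0]
print(result)
```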

Reddit ETL:

- The transformed Reddit data is stored in a CSV file using the `load_data_to_csv` function.

- The output CSV file is named based on the provided `file_name` parameter and is saved in the specified output path.
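A minimal sketch of the CSV load step, using the standard-library `csv` module, might look like this. The signature shown here (rows as dicts, an output directory, and a `file_name` without extension) is an assumption for illustration and may differ from the repository's `load_data_to_csv`.

```python
import csv
import os
import tempfile
from typing import Any, Dict, List


def load_data_to_csv(rows: List[Dict[str, Any]], output_dir: str, file_name: str) -> str:
    """Write rows to <output_dir>/<file_name>.csv and return the file path."""
    path = os.path.join(output_dir, f"{file_name}.csv")
    # Column order follows the keys of the first row.
    fieldnames = list(rows[0].keys()) if rows else []
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return path


# Made-up rows written to a temporary directory for demonstration.
demo_rows = [{"title": "Hello", "score": 10}, {"title": "World", "score": 7}]
demo_path = load_data_to_csv(demo_rows, tempfile.mkdtemp(), "reddit_20231022")
print(demo_path)
```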

AWS ETL:

- The Reddit data is stored in an Amazon S3 bucket.

- The `connect_to_s3` function establishes a connection to the S3 bucket using the provided AWS access key ID and secret.

- The `create_bucket_if_not_exist` function checks if the specified bucket exists and creates it if not.

- The `upload_to_s3` function uploads the Reddit data CSV file to the 'raw' folder within the specified S3 bucket.
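The bucket check and upload described above can be sketched against any fsspec-style filesystem object that offers `exists`, `mkdir`, and `put`. This is an assumption about the interface, made so the sketch stays library-agnostic; the signatures below are illustrative rather than the repository's own.

```python
import os
import posixpath
from typing import Any


def create_bucket_if_not_exist(fs: Any, bucket: str) -> bool:
    """Create the bucket when it is missing; return True if it was created."""
    if fs.exists(bucket):
        return False
    fs.mkdir(bucket)
    return True


def upload_to_s3(fs: Any, local_path: str, bucket: str) -> str:
    """Copy a local CSV into the bucket's raw/ folder and return the remote path."""
    file_name = os.path.basename(local_path)
    # S3 keys always use forward slashes, hence posixpath rather than os.path.
    remote_path = posixpath.join(bucket, "raw", file_name)
    fs.put(local_path, remote_path)
    return remote_path
```

With the `s3fs` package installed, an object such as `s3fs.S3FileSystem(anon=False, key=..., secret=...)` could be passed as `fs`.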

This data pipeline ensures a structured flow from Reddit data extraction to its transformation and storage in both a local CSV file and an Amazon S3 bucket for further processing and analytics.

The ETL workflow is orchestrated using Apache Airflow with the following tasks:

- Reddit Extraction Task (`reddit_extraction`):

  - Description: Initiates the extraction of Reddit data using a custom Python function (`reddit_pipeline`).

  - Parameters:

    - `file_name`: The name of the output file for the extracted data (e.g., `reddit_20231022`).

    - `subreddit`: The specific subreddit from which data is extracted (e.g., `dataengineering`).

    - `time_filter`: The time filter for Reddit data extraction (e.g., `day`).

    - `limit`: The limit on the number of posts to extract (e.g., `100`).

  - Functionality: Uses the `reddit_pipeline` function to fetch data from the specified subreddit based on the given parameters. The extracted data is stored in a file with a timestamped postfix.

- S3 Upload Task (`s3_upload`):

  - Description: Uploads the extracted Reddit data to an Amazon S3 bucket.

  - Functionality: Invokes the `upload_s3_pipeline` Python function, which transfers the extracted data to an S3 bucket, where it is available for further processing.

The workflow ensures that the extraction from Reddit is followed by the seamless upload of the extracted data to Amazon S3. The schedule interval is set to daily (@daily), ensuring the workflow runs periodically.
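To make the extraction parameters concrete, the sketch below builds the keyword arguments for one daily run, including the timestamped file name. The `build_extraction_kwargs` helper is hypothetical; in an Airflow DAG a dict like this would typically be handed to a `PythonOperator` through its `op_kwargs` argument.

```python
from datetime import datetime
from typing import Any, Dict, Optional


def build_extraction_kwargs(
    run_date: Optional[datetime] = None,
    subreddit: str = "dataengineering",
    time_filter: str = "day",
    limit: int = 100,
) -> Dict[str, Any]:
    """Assemble the parameters for one extraction run."""
    run_date = run_date or datetime.now()
    # The date postfix makes each daily output file name unique.
    file_postfix = run_date.strftime("%Y%m%d")
    return {
        "file_name": f"reddit_{file_postfix}",
        "subreddit": subreddit,
        "time_filter": time_filter,
        "limit": limit,
    }


kwargs = build_extraction_kwargs(datetime(2023, 10, 22))
print(kwargs)
```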

This orchestrated workflow provides a structured and automated approach to the extraction and initial storage of Reddit data, facilitating subsequent processing stages.

Monitoring is facilitated through the Airflow web UI, providing insights into task execution, logs, and overall pipeline health.

Contribute to the project by submitting bug reports, feature requests, or code contributions. Follow the guidelines outlined in the CONTRIBUTING.md file.

This project is licensed under the MIT License.