ComfyUI reference implementation for IPAdapter models.

The code is mostly taken from the original IPAdapter repository and laksjdjf's implementation; all credit goes to them. I just made the extension closer to the ComfyUI philosophy.

Example workflow

Download or git clone this repository inside ComfyUI/custom_nodes/ directory.

The pre-trained models are available on Hugging Face. Download them and place them in the ComfyUI/custom_nodes/ComfyUI_IPAdapter_plus/models directory.

For SD1.5 you need:

For SDXL you need:

Additionally you need the clip vision models:

- SD 1.5: pytorch_model.bin

- SDXL: pytorch_model.bin

You can rename them to something easier to remember (e.g. ip-adapter_sd15-image-encoder.bin) and place them under ComfyUI/models/clip_vision/.
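If you prefer to script the install, the sketch below copies a downloaded file into one of the two folders named above and optionally renames it. It assumes you pass the path of your ComfyUI root directory; `install_model` and the `"ipadapter"` / `"clip_vision"` keys are hypothetical names for illustration, not part of this extension.

```python
from pathlib import Path
import shutil
from typing import Optional

# Hypothetical mapping of model kinds to the folders described above,
# relative to the ComfyUI root directory.
MODEL_DIRS = {
    "ipadapter": Path("custom_nodes") / "ComfyUI_IPAdapter_plus" / "models",
    "clip_vision": Path("models") / "clip_vision",
}


def install_model(downloaded: Path, comfy_root: Path, kind: str,
                  new_name: Optional[str] = None) -> Path:
    """Copy a downloaded model file into the folder for `kind`.

    If `new_name` is given, the copy is renamed (useful because both
    CLIP Vision encoders are downloaded as pytorch_model.bin).
    """
    if kind not in MODEL_DIRS:
        raise ValueError(f"unknown model kind: {kind!r}")
    target_dir = comfy_root / MODEL_DIRS[kind]
    target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if missing
    target = target_dir / (new_name or downloaded.name)
    shutil.copy2(downloaded, target)  # copy, keeping the original download
    return target
```

For example, `install_model(Path("pytorch_model.bin"), Path("ComfyUI"), "clip_vision", "ip-adapter_sd15-image-encoder.bin")` would place the SD 1.5 encoder under ComfyUI/models/clip_vision/ with the easier name. Copying rather than moving keeps the original download in place in case you need it for the SDXL encoder too.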

There's a basic workflow included in this repo and a few examples in the examples directory.

IMPORTANT: To use the IPAdapter Plus models (base and face) you must use the new CLIP Vision Encode (IPAdapter) node. The non-plus version works with both the standard CLIP Vision Encode and the new one.
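The compatibility rule above can be written as a small lookup. This is only an illustrative sketch: it assumes a Plus model can be recognised by "plus" appearing in its filename, and the function names and example filenames are hypothetical.

```python
# Node names as they appear in the ComfyUI node list.
STANDARD_ENCODER = "CLIP Vision Encode"
IPADAPTER_ENCODER = "CLIP Vision Encode (IPAdapter)"


def is_plus_model(model_filename: str) -> bool:
    # Assumption: Plus models (base and face) carry "plus" in their filename.
    return "plus" in model_filename.lower()


def compatible_encoders(model_filename: str) -> set:
    """Return the encode nodes that can feed the given IPAdapter model."""
    if is_plus_model(model_filename):
        # Plus models require the new IPAdapter-specific encode node.
        return {IPADAPTER_ENCODER}
    # The non-plus model works with either node.
    return {STANDARD_ENCODER, IPADAPTER_ENCODER}
```

In short, the CLIP Vision Encode (IPAdapter) node is the safe choice for every model, while the standard node only works with the non-plus version.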

The model is very effective when paired with a ControlNet. In the example below I experimented with Canny. The workflow is in the examples directory.

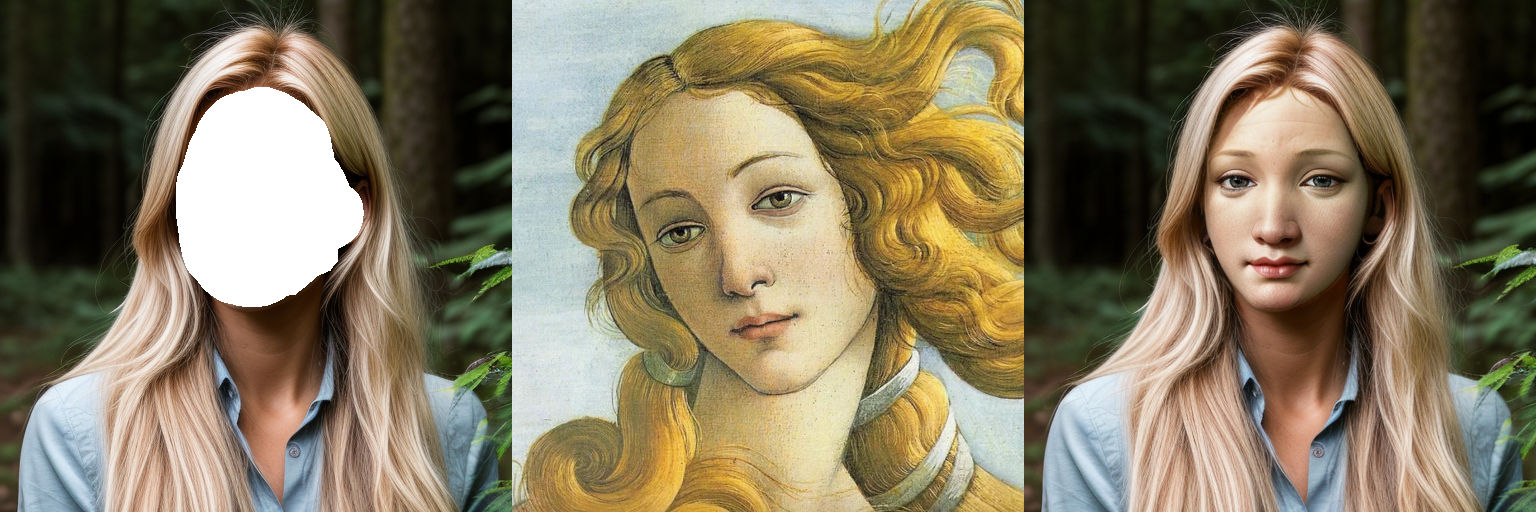

IPAdapter offers an interesting model for a kind of "face swap" effect. The workflow is provided.

Masking in img2img generally works, but I find inpainting to be far more effective. The inpainting workflow uses the face model together with an inpainting checkpoint.

Important: when masking the IPAdapter Apply node, make sure that the mask is the same size as the latent.
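To illustrate the size requirement, here is a minimal sketch that checks a mask against a target width and height and, if needed, resizes it with nearest-neighbour sampling. It assumes the mask is a plain 2-D grid of floats (rows of values) and that you supply the latent's width and height as used in your workflow; inside ComfyUI masks are tensors, so treat these helper names as hypothetical and the code as an explanation rather than part of the extension.

```python
from typing import List

Mask = List[List[float]]  # rows of mask values, 0.0 to 1.0


def mask_matches(mask: Mask, width: int, height: int) -> bool:
    """True if the mask has exactly `height` rows of `width` values."""
    return len(mask) == height and all(len(row) == width for row in mask)


def resize_mask_nearest(mask: Mask, width: int, height: int) -> Mask:
    """Nearest-neighbour resize of a 2-D mask to width x height."""
    src_h = len(mask)
    src_w = len(mask[0]) if src_h else 0
    if src_h == 0 or src_w == 0 or width <= 0 or height <= 0:
        raise ValueError("mask and target size must be non-empty")
    # Each output pixel samples the source pixel that covers the same
    # relative position; hard mask edges are preserved (no blending).
    return [
        [mask[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]
```

Nearest-neighbour keeps a binary mask binary; a smoother interpolation would soften the mask edges, which may or may not be what you want.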