
sig.entropy

Computes the relative Shannon (1948) entropy of the input, a measure used in information theory. To obtain a measure of entropy that is independent of the sequence length, sig.entropy returns the relative entropy rather than the raw entropy.

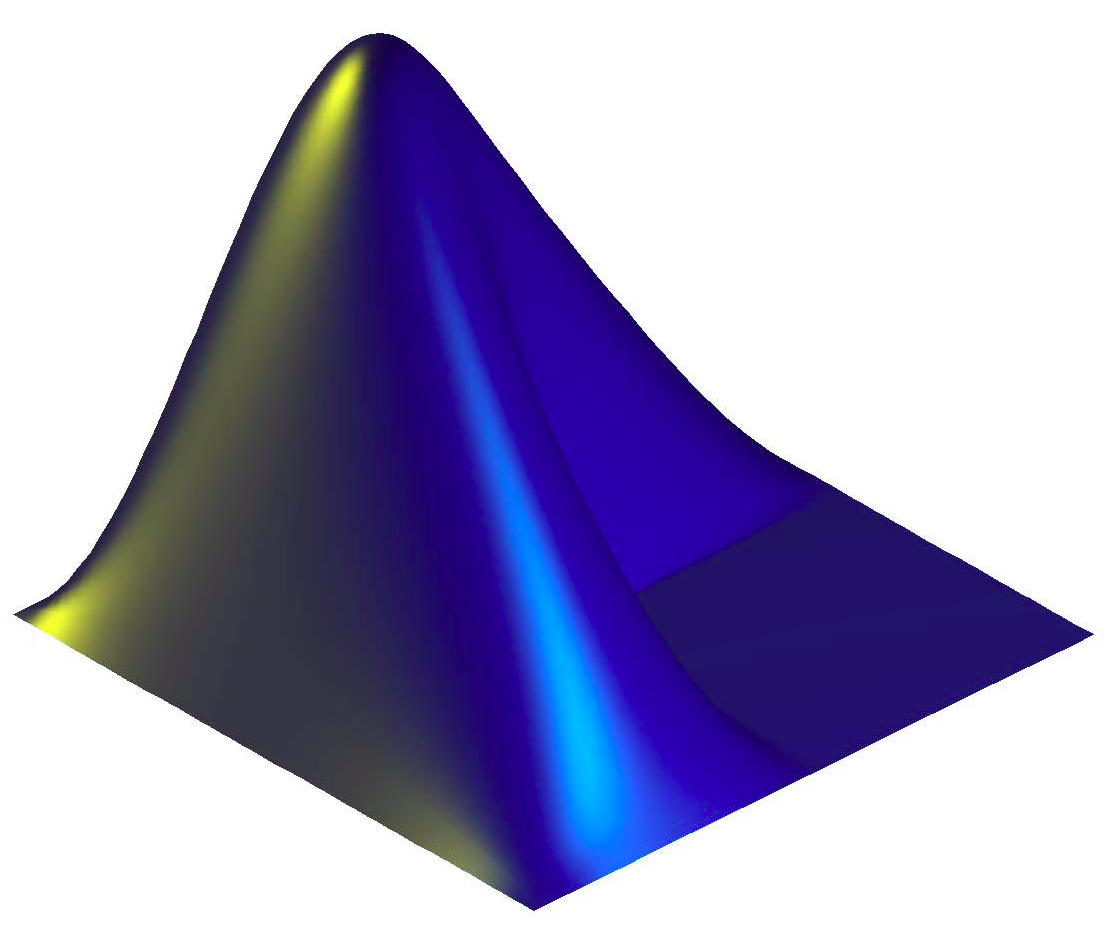
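The following Python sketch shows why a length-independent measure is useful. It assumes that the relative entropy is the Shannon entropy (natural logarithm) divided by log N, the maximum value reachable for N values. The function names are hypothetical, and this is an illustration, not the MiningSuite (MATLAB) implementation.

```python
import math

def shannon_entropy(p):
    """Shannon entropy (natural log) of a probability mass function p."""
    # Terms with p_i = 0 are skipped, since p*log(p) -> 0 as p -> 0.
    return sum(-pi * math.log(pi) for pi in p if pi > 0)

def relative_entropy(p):
    """Shannon entropy divided by log(N) (assumed normalization), in [0, 1]."""
    n = len(p)
    if n < 2:
        return 0.0  # log(1) = 0: a single value carries no uncertainty
    return shannon_entropy(p) / math.log(n)

# Uniform distributions of different lengths: the raw entropy grows with N,
# while the relative entropy stays at (approximately) 1.
for n in (4, 16, 256):
    uniform = [1.0 / n] * n
    print(n, shannon_entropy(uniform), relative_entropy(uniform))
```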

Shannon entropy offers a general description of the input curve p and indicates, in particular, whether or not it contains predominant peaks. If the curve is extremely flat, corresponding to maximum uncertainty about the output of the random variable X with probability mass function p(xi), the entropy is maximal. Conversely, if the curve displays only one very sharp peak above a flat, low background, the entropy is minimal, indicating minimum uncertainty, since the output is then essentially governed by that peak.
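A small Python sketch of this flat-versus-peaked behaviour, under the same assumed normalization by log N (the function names and test curves are hypothetical):

```python
import math

def relative_entropy(p):
    """Shannon entropy of p divided by log(N) (assumed normalization), in [0, 1]."""
    n = len(p)
    h = sum(-pi * math.log(pi) for pi in p if pi > 0)
    return h / math.log(n) if n > 1 else 0.0

def normalize(curve):
    """Scale a non-negative curve so that it sums to 1."""
    total = sum(curve)
    return [c / total for c in curve]

n = 100
flat = normalize([1.0] * n)        # maximal uncertainty

background = [0.01] * n            # flat, low background...
background[n // 2] = 10.0          # ...with one very sharp peak
peaked = normalize(background)

print(relative_entropy(flat))      # close to 1 (maximal)
print(relative_entropy(peaked))    # much lower
```

With 100 bins, the flat curve reaches the maximum, while the single peak over a low background yields a value well below it; removing the background entirely would drive the value to 0.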

The equation of Shannon entropy can only be applied to functions p(xi) that satisfy the properties of a probability mass function: all values must be non-negative and sum to 1. Inputs to sig.entropy are therefore transformed to respect these constraints:

- The negative values are replaced by zeros (i.e., half-wave rectification).

- The remaining data is scaled such that it sums up to 1.
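The two preprocessing steps can be sketched in Python as follows. The helper name `to_pmf` is hypothetical, and raising an error on input with no positive value is an assumption of this sketch, since the behaviour of sig.entropy in that case is not described here.

```python
def to_pmf(values):
    """Turn arbitrary data into a probability mass function.

    Step 1: negative values are set to zero (half-wave rectification).
    Step 2: the result is scaled so that it sums to 1.
    """
    rectified = [max(v, 0.0) for v in values]
    total = sum(rectified)
    if total == 0:
        # Assumption of this sketch: no positive value means no valid distribution.
        raise ValueError("no positive values: entropy is undefined")
    return [v / total for v in rectified]

print(to_pmf([-2.0, 1.0, 3.0, -0.5]))  # [0.0, 0.25, 0.75, 0.0]
```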

Any data can be used as input. If the input is an audio waveform, a file name, or the ‘Folder’ keyword, the entropy is computed on the spectrum (spectral entropy).
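For audio, the entropy is taken over the spectrum rather than over the waveform itself. This stdlib-only Python sketch uses a naive DFT magnitude spectrum as a stand-in. It assumes a magnitude (not power) spectrum and ignores any windowing, framing, or other spectrum settings that MiningSuite may apply; the sampling rate and test signals are hypothetical.

```python
import cmath
import math
import random

def magnitude_spectrum(x):
    """Magnitude of the DFT of x for bins 0..N/2 (naive O(N^2) DFT)."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]

def relative_entropy(values):
    """Normalize non-negative values to sum to 1, then divide entropy by log(N)."""
    total = sum(values)
    p = [v / total for v in values]
    h = sum(-pi * math.log(pi) for pi in p if pi > 0)
    return h / math.log(len(p))

sr = 64  # hypothetical sampling rate; 64 samples = 1 second of signal
tone = [math.sin(2 * math.pi * 8 * t / sr) for t in range(sr)]  # pure 8 Hz tone
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(sr)]  # deterministic noise-like signal

print(relative_entropy(magnitude_spectrum(tone)))   # near 0: one dominant bin
print(relative_entropy(magnitude_spectrum(noise)))  # much higher: energy spread out
```

A pure tone concentrates its energy in a single frequency bin, giving a spectral entropy near 0, whereas a noise-like signal spreads its energy across all bins and scores much higher.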

- sig.entropy(..., ‘Center’) centers the input data before half-wave rectification.
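A Python sketch of the effect of centering, assuming that ‘Center’ means subtracting the mean of the input before rectification. The `center` flag is a hypothetical stand-in for the option, not its actual implementation.

```python
import math

def relative_entropy(values, center=False):
    """Relative entropy of arbitrary data, with an optional centering step.

    center=True subtracts the mean before half-wave rectification
    (assumed meaning of the 'Center' option).
    """
    if center:
        mean = sum(values) / len(values)
        values = [v - mean for v in values]
    rectified = [max(v, 0.0) for v in values]  # half-wave rectification
    total = sum(rectified)
    p = [v / total for v in rectified]         # scale to sum to 1
    h = sum(-pi * math.log(pi) for pi in p if pi > 0)
    return h / math.log(len(p))

# One peak on top of a large constant offset.
curve = [10.0] * 20
curve[5] = 15.0
print(relative_entropy(curve))               # offset dominates: close to 1
print(relative_entropy(curve, center=True))  # only the peak remains above the mean: 0.0
```

When a peak sits on a large constant offset, the offset dominates the distribution and the uncentered entropy stays close to 1; after centering, only the part of the curve above the mean contributes.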