[arXiv] [Website] [AcoustiX Code] [BibTex]

This repo contains the official implementation of AVR. For our simulator, AcoustiX, please use its separate repository.

Realistic audio synthesis that captures accurate acoustic phenomena is essential for creating immersive experiences in virtual and augmented reality. Synthesizing the sound received at any position relies on estimating the impulse response (IR), which characterizes how sound propagates through a scene along different paths before arriving at the listener's position. In this paper, we present Acoustic Volume Rendering (AVR), a novel approach that adapts volume rendering techniques to model acoustic impulse responses. While volume rendering has been successful in modeling radiance fields for images and neural scene representations, IRs present unique challenges as time-series signals. To address these challenges, we introduce frequency-domain volume rendering and use spherical integration to fit the IR measurements. Our method constructs an impulse response field that inherently encodes wave propagation principles and achieves state-of-the-art performance in synthesizing impulse responses for novel poses. Experiments show that AVR surpasses current leading methods by a substantial margin. Additionally, we develop an acoustic simulation platform, AcoustiX, which provides more accurate and realistic IR simulations than existing simulators.
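The abstract names two ingredients, frequency-domain volume rendering and spherical integration. The NumPy sketch below illustrates them under simplifying assumptions; it is not the code in `renderer.py`. The learned network is replaced by a hypothetical `toy_field` that returns a density and a complex spectrum for each sample along a ray, distance attenuation is omitted, and the speed of sound (343 m/s), the Fibonacci direction set, and all function names are illustrative choices. Rays are cast from the listener, sample weights follow standard NeRF-style alpha compositing, each sample's spectrum is delayed by a phase shift for its distance to the listener, and per-ray spectra are summed over directions with equal solid-angle weights before an inverse FFT yields the IR. The last lines convolve a dry signal with the rendered IR, which is how an IR is used to synthesize the received sound.

```python
import numpy as np

# Illustrative sketch only: all names below are hypothetical, not the AVR API.
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def fibonacci_sphere(n_dirs):
    """Roughly uniform unit directions on the sphere (equal solid-angle weights)."""
    i = np.arange(n_dirs) + 0.5
    z = 1.0 - 2.0 * i / n_dirs
    r = np.sqrt(1.0 - z ** 2)
    phi = i * np.pi * (3.0 - np.sqrt(5.0))  # golden-angle increments
    return np.stack([r * np.cos(phi), r * np.sin(phi), z], axis=1)


def render_ray_spectrum(sigma, signal_f, dists, freqs, c=SPEED_OF_SOUND):
    """Frequency-domain volume rendering along one ray cast from the listener.

    sigma:    (N,) densities at N samples, ordered near -> far
    signal_f: (N, F) complex spectra each sample sends toward the listener
    dists:    (N,) listener-to-sample distances in meters
    freqs:    (F,) frequency of each bin in Hz
    """
    deltas = np.diff(dists, append=2 * dists[-1] - dists[-2])  # segment lengths
    alpha = 1.0 - np.exp(-sigma * deltas)
    # Exclusive cumulative product: transmittance T_i = prod_{j<i} (1 - alpha_j)
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = trans * alpha
    # A propagation delay of d / c seconds is an exact phase shift per frequency,
    # so no fractional-sample interpolation is needed in the time domain.
    phase = np.exp(-2j * np.pi * freqs[None, :] * dists[:, None] / c)
    return np.sum(weights[:, None] * signal_f * phase, axis=0)


def render_ir(field_fn, dists, n_time, fs, n_dirs=256):
    """Integrate ray spectra over the sphere of directions; return a time-domain IR."""
    freqs = np.fft.rfftfreq(n_time, d=1.0 / fs)
    total = np.zeros(freqs.shape, dtype=complex)
    for direction in fibonacci_sphere(n_dirs):
        sigma, signal_f = field_fn(direction, dists, freqs)
        # Each direction covers about 4*pi / n_dirs steradians.
        total += (4.0 * np.pi / n_dirs) * render_ray_spectrum(sigma, signal_f, dists, freqs)
    return np.fft.irfft(total, n=n_time)


# Toy scene (hypothetical): an opaque, isotropic shell at the distance that
# sound covers in exactly 100 samples at 16 kHz.
fs, n_time, delay = 16000, 1024, 100
d_shell = delay / fs * SPEED_OF_SOUND
dists = d_shell + 0.05 * (np.arange(64) - 32)  # sample 32 sits on the shell


def toy_field(direction, dists, freqs):
    sigma = np.zeros(len(dists))
    sigma[32] = 1e4  # effectively opaque at the shell
    return sigma, np.ones((len(dists), len(freqs)), dtype=complex)


ir = render_ir(toy_field, dists, n_time, fs)

# Synthesize the received sound by convolving a dry source signal with the IR.
dry = np.random.default_rng(0).standard_normal(2000)
received = np.convolve(dry, ir)
print(int(np.argmax(np.abs(ir))))  # 100
```

Because every direction sees the same opaque shell, the rendered IR is a single spike of height 4π at sample 100, and `received` is the dry signal delayed by 100 samples and scaled by 4π.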

Below are the instructions for installing and setting up the project.

- pytorch

- numpy

- scipy

- matplotlib

- librosa

- auraloss

In addition to the common Python packages above, we use tinycudann (the tiny-cuda-nn PyTorch extension) to speed up ray sampling. Installation of this repo and the Python extension is shown below.

```bash
# install tiny-cuda-nn PyTorch extension
pip install git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch
```

```
AVR/
├── config_files/           # training and testing config files
│   ├── avr_raf_furnished.yml
│   ├── avr_raf_empty.yml
│   ├── avr_meshrir.yml
│   └── avr_simu.yml
├── logs/                   # Log files
│   ├── meshrir             # MeshRIR logs
│   ├── RAF                 # RAF logs
│   └── simu                # Simulation logs
├── tensorboard_logs/       # TensorBoard log files
├── data/                   # Dataset
├── utils/                  # Utility scripts
│   ├── criterion.py        # Loss functions
│   ├── logger.py
│   ├── spatialization.py   # Audio spatialization
│   └── metric.py           # Metrics calculation
├── tools/                  # Tools to create datasets and more
│   └── meshrir_split.py    # Create MeshRIR dataset split
├── avr_runner.py           # AVR runner
├── datasets_loader.py      # Dataloader for different datasets
├── model.py                # Network
├── renderer.py             # Acoustic rendering file
├── README.md               # Project documentation
└── .gitignore              # Git ignore file
```

- Train AVR on the RAF-Furnished dataset:

```bash
python avr_runner.py --config ./config_files/avr_raf_furnished.yml --dataset_dir ./data/RAF/FurnishedRoomSplit
```

- Train AVR on the RAF-Empty dataset:

```bash
python avr_runner.py --config ./config_files/avr_raf_empty.yml --dataset_dir ./data/RAF/EmptyRoomSplit
```

- Train AVR on the MeshRIR dataset:

```bash
python avr_runner.py --config ./config_files/avr_meshrir.yml --dataset_dir ./data/MeshRIR
```

MeshRIR dataset: refer to the Create MeshRIR Dataset instructions.

We show some visualization results from our paper:

We show the spatial distribution of the impulse response in the frequency domain for the ground truth and different baseline methods. Each map is a bird's-eye view of the signal distribution.

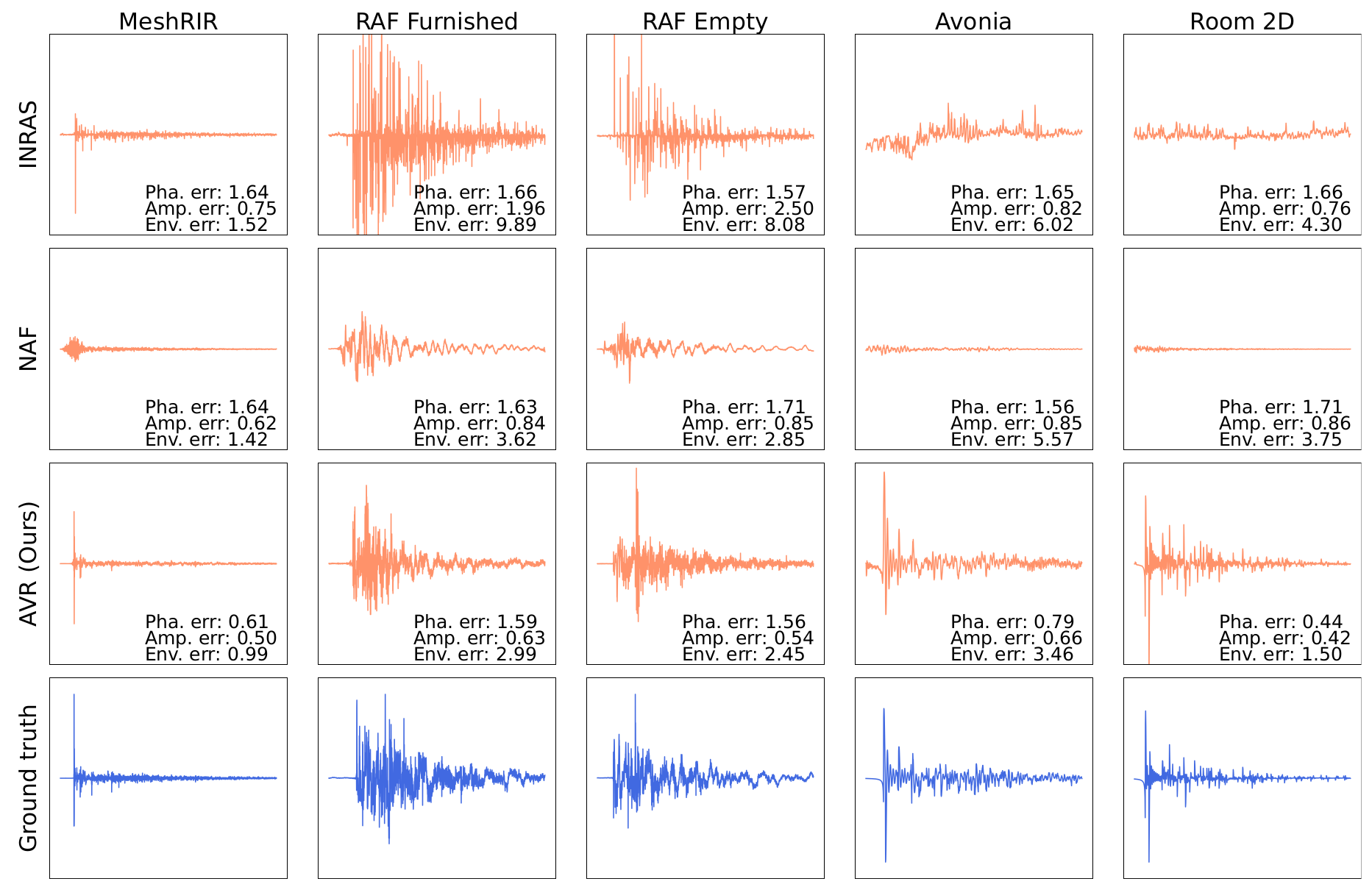
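To make the bird's-eye maps concrete, here is a minimal sketch assuming each map shows, for every listener position on a floor grid, the magnitude of that position's IR spectrum at one chosen frequency. The grid layout, the function name `spectral_magnitude_map`, and the nearest-bin lookup are illustrative assumptions, not the plotting code used for the paper.

```python
import numpy as np


def spectral_magnitude_map(irs, fs, freq_hz):
    """Per-position spectral magnitude at the FFT bin nearest freq_hz.

    irs: (H, W, T) impulse responses for listeners on an H x W grid (assumed layout)
    fs:  sample rate in Hz
    returns an (H, W) array that can be shown as a bird's-eye image
    """
    spectra = np.fft.rfft(irs, axis=-1)
    freqs = np.fft.rfftfreq(irs.shape[-1], d=1.0 / fs)
    bin_idx = int(np.argmin(np.abs(freqs - freq_hz)))
    return np.abs(spectra[..., bin_idx])


# Toy example (hypothetical data): each IR is a single spike whose height varies
# across the grid, so its spectral magnitude equals that height at every frequency.
heights = np.linspace(0.1, 1.0, 20).reshape(4, 5)
irs = np.zeros((4, 5, 512))
irs[..., 10] = heights
mag = spectral_magnitude_map(irs, fs=16000, freq_hz=1000.0)
```

Passing `mag` to `matplotlib.pyplot.imshow` would draw one such map; repeating this for the ground truth and each method gives a per-frequency spatial comparison.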

We show the estimated impulse response from different datasets and methods.

If you find this project useful for your research, please consider citing our paper.

```bibtex
@inproceedings{lanacoustic,
  title={Acoustic Volume Rendering for Neural Impulse Response Fields},
  author={Lan, Zitong and Zheng, Chenhao and Zheng, Zhiwei and Zhao, Mingmin},
  booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems}
}
```