Audiobook Creator is an open-source project designed to convert books in various text formats (e.g., EPUB, PDF, etc.) into fully voiced audiobooks with intelligent character voice attribution. It leverages modern Natural Language Processing (NLP), Large Language Models (LLMs), and Text-to-Speech (TTS) technologies to create an engaging and dynamic audiobook experience. The project is licensed under the GNU General Public License v3.0 (GPL-3.0), ensuring that it remains free and open for everyone to use, modify, and distribute.

Sample multi voice audio for a short story : https://audio.com/prakhar-sharma/audio/generated-sample-multi-voice-audiobook

Watch the demo video:

The project consists of three main components:

-

Text Cleaning and Formatting (

book_to_txt.py):- Extracts and cleans text from a book file (e.g.,

book.epub). - Normalizes special characters, fixes line breaks, and corrects formatting issues such as unterminated quotes or incomplete lines.

- Extracts the main content between specified markers (e.g., "PROLOGUE" and "ABOUT THE AUTHOR").

- Outputs the cleaned text to

converted_book.txt.

- Extracts and cleans text from a book file (e.g.,

-

Character Identification and Metadata Generation (

identify_characters_and_output_book_to_jsonl.py):- Identifies characters in the text using Named Entity Recognition (NER) with the GLiNER model.

- Assigns gender and age scores to characters using an LLM via an OpenAI-compatible API.

- Outputs two files:

speaker_attributed_book.jsonl: Each line of text annotated with the identified speaker.character_gender_map.json: Metadata about characters, including name, age, gender, and gender score.

-

Audiobook Generation (

generate_audiobook.py):- Converts the cleaned text (

converted_book.txt) or speaker-attributed text (speaker_attributed_book.jsonl) into an audiobook using the Kokoro TTS model (Hexgrad/Kokoro-82M). - Offers two narration modes:

- Single-Voice: Uses a single voice for narration and another voice for dialogues for the entire book.

- Multi-Voice: Assigns different voices to characters based on their gender scores.

- Saves the audiobook in the selected output format to

generated_audiobooks/audiobook.{output_format}.

- Converts the cleaned text (

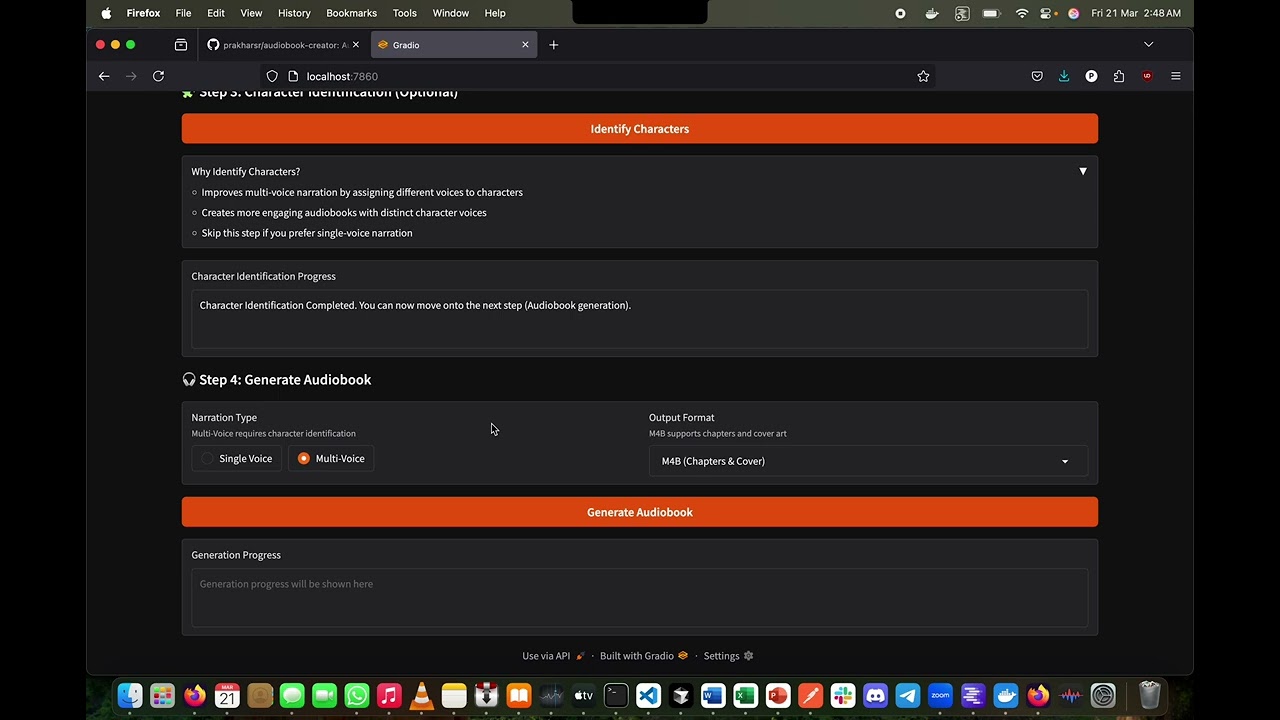

- Gradio UI App: Create audiobooks easily with an easy to use, intuitive UI made with Gradio.

- M4B Audiobook Creation: Creates compatible audiobooks with covers, metadata, chapter timestamps etc. in M4B format.

- Multi-Format Input Support: Converts books from various formats (EPUB, PDF, etc.) into plain text.

- Multi-Format Output Support: Supports various output formats: AAC, M4A, MP3, WAV, OPUS, FLAC, PCM, M4B.

- Docker Support: Use pre-built docker images/ build using docker compose to save time and for a smooth user experience.

- Text Cleaning: Ensures the book text is well-formatted and readable.

- Character Identification: Identifies characters and infers their attributes (gender, age) using advanced NLP techniques.

- Customizable Audiobook Narration: Supports single-voice or multi-voice narration for enhanced listening experiences.

- Progress Tracking: Includes progress bars and execution time measurements for efficient monitoring.

- Open Source: Licensed under GPL v3.

Expand

sample_book_and_audio/The Adventure of the Lost Treasure - Prakhar Sharma.epub: A sample short story in epub format as a starting point.sample_book_and_audio/The Adventure of the Lost Treasure - Prakhar Sharma.pdf: A sample short story in pdf format as a starting point.sample_book_and_audio/The Adventure of the Lost Treasure - Prakhar Sharma.txt: A sample short story in txt format as a starting point.sample_book_and_audio/converted_book.txt: The cleaned output after text processing.sample_book_and_audio/speaker_attributed_book.jsonl: The generated speaker-attributed JSONL file.sample_book_and_audio/character_gender_map.json: The generated character metadata.sample_book_and_audio/sample_multi_voice_audiobook.m4b: The generated sample multi-voice audiobook in M4B format with cover and chapters from the story.sample_book_and_audio/sample_multi_voice_audio.mp3: The generated sample multi-voice MP3 audio file from the story.sample_book_and_audio/sample_single_voice_audio.mp3: The generated sample single-voice MP3 audio file from the story.

-

Install Docker

-

Make sure host networking is enabled in your docker setup : https://docs.docker.com/engine/network/drivers/host/. Host networking is currently supported in Linux and in docker desktop. To use with docker desktop, follow these steps

-

Set up your LLM and expose an OpenAI-compatible endpoint (e.g., using LM Studio with

phi-4). -

Set up the Kokoro TTS model via Kokoro-FastAPI. To get started, run the docker image using the following command:

For CUDA based GPU inference (Apple Silicon GPUs currently not supported, use CPU based inference instead)

docker run \ --name kokoro_service \ --restart always \ --network host \ --gpus all \ ghcr.io/remsky/kokoro-fastapi-gpu:v0.2.2

For CPU based inference

docker run \ --name kokoro_service \ --restart always \ --network host \ ghcr.io/remsky/kokoro-fastapi-cpu:v0.2.2

-

Create a .env file from .env_sample and configure it with the correct values. Make sure you follow the instructions mentioned at the top of .env_sample to avoid errors.

cp .env_sample .env

-

After this, choose between the below options for the next step to run the audiobook creator app:

Quickest Start (docker run)

-

Make sure your .env is configured correctly and your LLM and Kokoro FastAPI are running. In the same folder where .env is present, run the below command

-

Choose between the types of inference:

For CUDA based GPU inference (Apple Silicon GPUs currently not supported, use CPU based inference instead)

docker run \ --name audiobook_creator \ --restart always \ --network host \ --gpus all \ --env-file .env \ -v model_cache:/app/model_cache \ ghcr.io/prakharsr/audiobook_creator_gpu:v1.0

For CPU based inference

docker run \ --name audiobook_creator \ --restart always \ --network host \ --env-file .env \ -v model_cache:/app/model_cache \ ghcr.io/prakharsr/audiobook_creator_cpu:v1.0

-

Wait for the models to download and then navigate to http://localhost:7860 for the Gradio UI

Quick Start (docker compose)

-

Clone the repository

git clone https://github.com/prakharsr/audiobook-creator.git cd audiobook-creator -

Make sure your .env is configured correctly and your LLM is running

-

If Kokoro docker container is already running, you can either stop and remove it or comment the kokoro_fastapi service in docker compose. If its not running then it will automatically start when you run docker compose up command

-

Copy the .env file into the audiobook-creator folder

-

Choose between the types of inference:

For CUDA based GPU inference (Apple Silicon GPUs currently not supported, use CPU based inference instead)

cd docker/gpu docker compose up --buildFor CPU based inference

cd docker/cpu docker compose up --build -

Wait for the models to download and then navigate to http://localhost:7860 for the Gradio UI

Direct run (via uv)

- Clone the repository

git clone https://github.com/prakharsr/audiobook-creator.git cd audiobook-creator - Make sure your .env is configured correctly and your LLM and Kokoro FastAPI are running

- Copy the .env file into the audiobook-creator folder

- Install uv

curl -LsSf https://astral.sh/uv/install.sh | sh - Create a virtual environment with Python 3.12:

uv venv --python 3.12

- Activate the virtual environment:

source .venv/bin/activate - Install Pip 24.0:

uv pip install pip==24.0

- Install dependencies (choose CPU or GPU version):

uv pip install -r requirements_cpu.txt

uv pip install -r requirements_gpu.txt

- Upgrade version of six to avoid errors:

uv pip install --upgrade six==1.17.0

- Install calibre (Optional dependency, needed if you need better text decoding capabilities, wider compatibility and want to create M4B audiobook). Also make sure that calibre is present in your PATH. For MacOS, do the following to add it to the PATH:

echo 'export PATH="/Applications/calibre.app/Contents/MacOS:$PATH"' >> ~/.zshrc source ~/.zshrc

- Install ffmpeg (Needed for audio output format conversion and if you want to create M4B audiobook)

- In the activated virtual environment, run

uvicorn app:app --host 0.0.0.0 --port 7860to run the Gradio app. After the app has started, navigate tohttp://127.0.0.1:7860in the browser.

-

Planned future enhancements:

- ⏳ Add support for choosing between various languages which are currently supported by Kokoro.

- ⏳ Add support for Zonos, Models: https://huggingface.co/Zyphra/Zonos-v0.1-hybrid, https://huggingface.co/Zyphra/Zonos-v0.1-transformer. Zonos supports voices with a wide range of emotions so adding that as a feature will greatly enhance the listening experience.

- ✅ Add support for running the app through docker.

- ✅ Create UI using Gradio.

- ✅ Try different voice combinations using

generate_audio_samples.pyand update thekokoro_voice_map.jsonto use better voices. - ✅ Add support for the these output formats: AAC, M4A, MP3, WAV, OPUS, FLAC, PCM, M4B.

- ✅ Add support for using calibre to extract the text and metadata for better formatting and wider compatibility.

- ✅ Add artwork and chapters, and convert audiobooks to M4B format for better compatibility.

- ✅ Give option to the user for selecting the audio generation format.

- ✅ Add extended pause when chapters end once chapter recognition is in place.

- ✅ Improve single-voice narration with a different dialogue voice from the narrator's voice.

- ✅ Read out only the dialogue in a different voice instead of the entire line in that voice.

For issues or questions, open an issue on the GitHub repository.

This project is licensed under the GNU General Public License v3.0 (GPL-3.0). See the LICENSE file for more details.

Contributions are welcome! Please open an issue or pull request to fix a bug or add features.

If you find this project useful and would like to support my work, consider donating:

PayPal

Enjoy creating audiobooks with this project! If you find it helpful, consider giving it a ⭐ on GitHub.