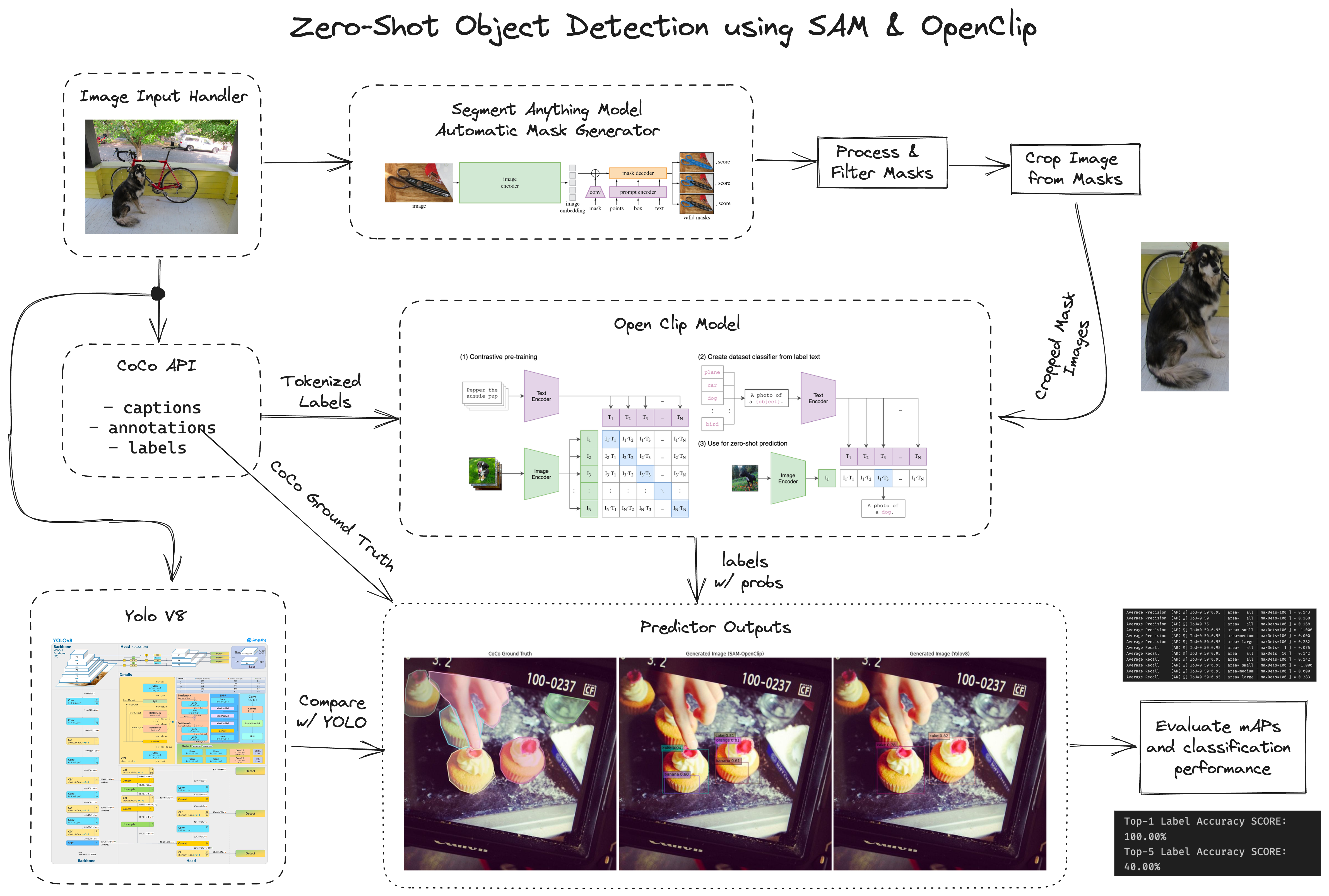

Use SAM and OpenCLIP to perform zero-shot object detection on the COCO 2017 val split.

Author: Sean Red Mendoza | 2020-01751 | [email protected]

- SegmentAnything

- OpenCLIP

- COCO 2017 Validation Dataset

- roatienza/mlops

- roatienza/Deep-Learning-Experiments

- Google Cloud G2 GPU VM (2x Nvidia L4)

- Integrate SAM with OpenCLIP

- Compare COCO ground truth vs model prediction vs YOLOv8 prediction

- Support a random image pick from the COCO 2017 validation split OR a manual image upload (link)

- Show Summary Statistics
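One summary statistic that fits this project is top-k label accuracy (the Top-1 vs Top-5 comparison). The snippet below is a minimal sketch, not the notebook's actual code: the function name `top_k_accuracy` and the input layout (one ranked label list per detected object) are assumptions made for illustration.

```python
def top_k_accuracy(ranked_predictions, true_labels, k):
    """Fraction of objects whose true label appears in the top-k predictions.

    ranked_predictions: list of label lists, each sorted from most to least
        probable (hypothetical layout, one list per object).
    true_labels: list of ground-truth labels, aligned with ranked_predictions.
    """
    if not true_labels:
        return 0.0
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)


# Toy example with three objects.
ranked = [
    ["dog", "cat", "car"],
    ["car", "bus", "truck"],
    ["cat", "dog", "bird"],
]
truths = ["dog", "bus", "bird"]
top1 = top_k_accuracy(ranked, truths, k=1)
top3 = top_k_accuracy(ranked, truths, k=3)
```

Top-1 counts only the single most probable label, so it is always less than or equal to Top-5 on the same predictions.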

- Automatically generate object masks for source image using SAM AutomaticMaskGenerator

- Filter masks using `predicted_iou`, `stability_score`, and mask area to obtain better masking results

- Crop source image based on generated masks

- Run each crop through OpenCLIP (with the COCO paper labels)

- Keep the most probable labels for each crop based on the predicted text probabilities

- Evaluate mask bounding boxes using mAP and label accuracy (Top-1 vs Top-5) against the ground-truth labels

- Tune SAM model parameters to achieve better mAP performance

- Select an appropriate OpenCLIP pretrained model to achieve better mAP and label accuracy
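The filtering, labeling, and evaluation steps above can be sketched in plain Python. This is an illustrative sketch rather than the notebook's implementation: the function names and all threshold values are hypothetical. It relies only on the record layout that SAM's `SamAutomaticMaskGenerator.generate()` returns (dicts with `predicted_iou`, `stability_score`, `area`, and an XYWH `bbox`). The `box_iou` helper is just the building block of mAP, not a full mAP computation.

```python
import math


def filter_masks(masks, image_area, min_iou=0.9, min_stability=0.9,
                 min_area_frac=0.01):
    """Keep SAM mask records that pass quality and size thresholds.

    Thresholds are illustrative assumptions, not tuned values.
    """
    return [
        m for m in masks
        if m["predicted_iou"] >= min_iou
        and m["stability_score"] >= min_stability
        and m["area"] >= min_area_frac * image_area
    ]


def xywh_to_xyxy(bbox):
    """Convert a SAM-style XYWH box to (x1, y1, x2, y2) corners for cropping."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


def top_k_labels(logits, labels, k=5):
    """Softmax over per-label similarity logits; return the k most probable."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    ranked = sorted(
        zip(labels, (e / total for e in exps)),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:k]


def box_iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


# Toy data standing in for SAM output on a 640x480 image.
masks = [
    {"predicted_iou": 0.95, "stability_score": 0.97, "area": 5000,
     "bbox": [10, 20, 100, 50]},
    {"predicted_iou": 0.50, "stability_score": 0.97, "area": 5000,
     "bbox": [0, 0, 100, 50]},
    {"predicted_iou": 0.95, "stability_score": 0.97, "area": 100,
     "bbox": [5, 5, 10, 10]},
]
kept = filter_masks(masks, image_area=640 * 480)
crop_box = xywh_to_xyxy(kept[0]["bbox"])
best = top_k_labels([2.0, 0.5, 1.0], ["dog", "car", "bicycle"], k=2)
overlap = box_iou((0, 0, 10, 10), (5, 5, 15, 15))
```

A predicted box is usually counted as a match when its IoU with a ground-truth box exceeds a chosen threshold; mAP then averages precision over recall levels (and, in the COCO convention, over several IoU thresholds).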

- It is recommended to use a CUDA-powered GPU with at least 40 GB VRAM, such as 2x L4s (current implementation), A100 40GB, or anything similar

- If hardware resources are limited, it is recommended to use a lower `points_per_batch` setting in SAM as well as a lighter pretrained model in OpenCLIP

- It is recommended to clone the repository for easier use, so you don't have to manually download any required files

- Due to hardware limitations, I am running the repo on a Google Cloud VM instance. You may also consider using cloud credits to make high-performance computing accessible

- Ultimately, the performance of the system is limited because it relies on pretrained models rather than training on the COCO 2017 training dataset. This includes how finely the SAM AutomaticMaskGenerator can be calibrated relative to YOLOv8. Similarly, the performance of OpenCLIP is bottlenecked by the quality of the chosen labels and the pretrained model used.
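For the limited-hardware note above, a lower-memory configuration might look like the following sketch. The values are illustrative assumptions, not the settings used in this repository. The keyword names are real `SamAutomaticMaskGenerator` parameters, and `ViT-B-32` with `laion2b_s34b_b79k` is one of the smaller OpenCLIP pretrained pairs, picked here only as an example.

```python
# Hypothetical low-memory settings for SAM's automatic mask generator.
# points_per_batch defaults to 64; lower values use less GPU memory
# at the cost of speed.
LOW_MEMORY_SAM_KWARGS = {
    "points_per_side": 16,          # coarser point grid (default 32)
    "points_per_batch": 16,         # fewer points processed at once
    "pred_iou_thresh": 0.88,        # library default
    "stability_score_thresh": 0.95, # library default
}

# Example of a lighter OpenCLIP (model_name, pretrained) pair.
LIGHT_OPENCLIP_MODEL = ("ViT-B-32", "laion2b_s34b_b79k")

# Usage, assuming `sam` is an already loaded SAM model:
#   from segment_anything import SamAutomaticMaskGenerator
#   import open_clip
#   mask_generator = SamAutomaticMaskGenerator(sam, **LOW_MEMORY_SAM_KWARGS)
#   model, _, preprocess = open_clip.create_model_and_transforms(
#       LIGHT_OPENCLIP_MODEL[0], pretrained=LIGHT_OPENCLIP_MODEL[1])
```

A coarser grid and a smaller CLIP model will likely reduce mask quality and label accuracy, so these settings trade performance for memory.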

- Clone this repository into a working directory

git clone https://github.com/reddiedev/197z-zshot-objdetection

cd 197z-zshot-objdetection

- Install the necessary packages

pip install -r requirements.txt

or alternatively, install them manually

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install matplotlib segment_anything opencv-contrib-python-headless

pip install open_clip_torch scikit-image validators

- Run the Jupyter notebook. You may use the test images:

../images/dog_car.jpg

https://djl.ai/examples/src/test/resources/dog_bike_car.jpg

- To view complete logs and information, set `VERBOSE_LOGGING` to `TRUE`