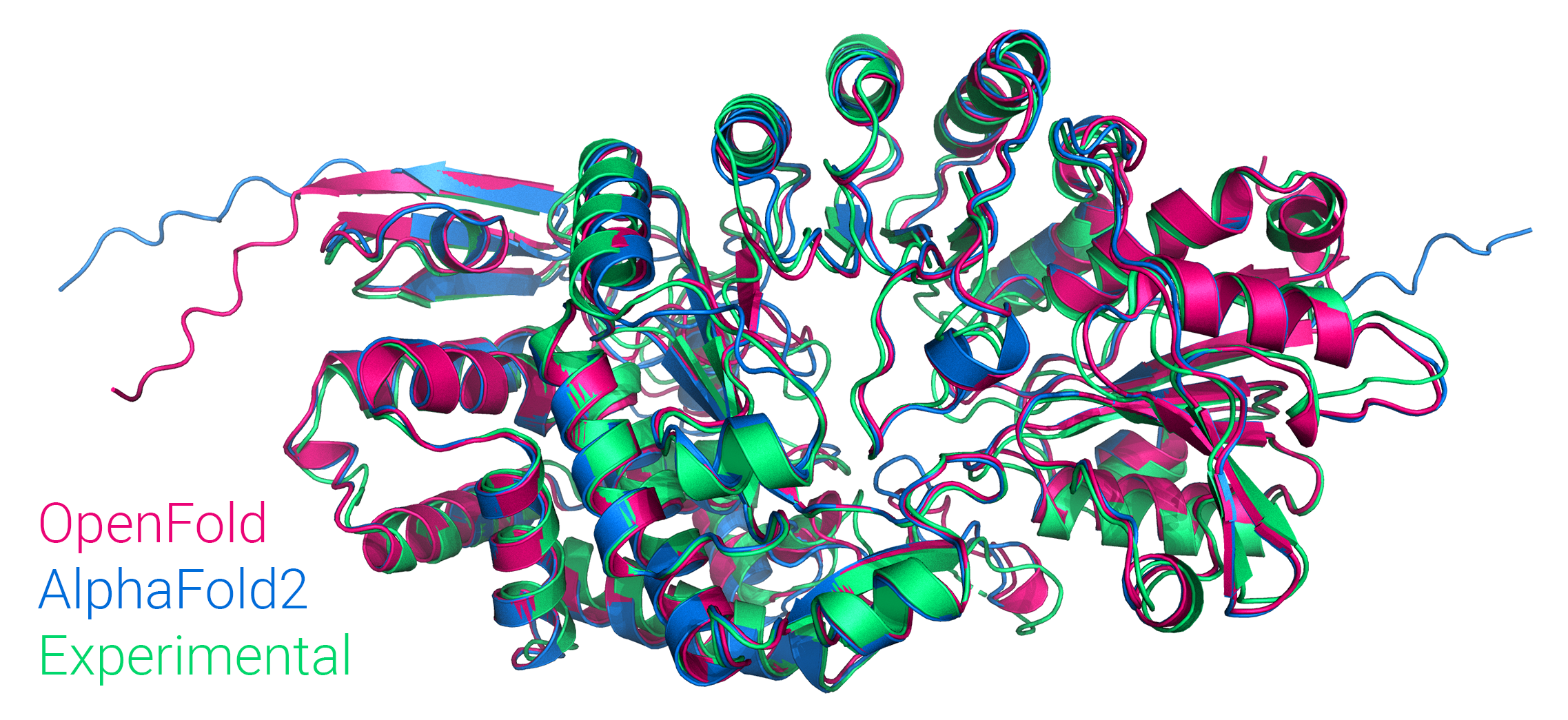

Figure: Comparison of OpenFold and AlphaFold2 predictions to the experimental structure of PDB 7KDX, chain B.

A faithful but trainable PyTorch reproduction of DeepMind's AlphaFold 2.

See our new home for docs at openfold.readthedocs.io, with instructions for installation and model inference/training.

Much of the content from this page can also be found in those docs.

While the source code of AlphaFold and, by extension, OpenFold is licensed under the permissive Apache License, Version 2.0, DeepMind's pretrained parameters fall under the CC BY 4.0 license, a copy of which is downloaded to openfold/resources/params by the installation script. Note that the latter replaces the original, more restrictive CC BY-NC 4.0 license as of January 2022.

If you encounter problems using OpenFold, feel free to create an issue! We also welcome pull requests from the community.

Please cite our paper:

@article {Ahdritz2022.11.20.517210,
  author = {Ahdritz, Gustaf and Bouatta, Nazim and Floristean, Christina and Kadyan, Sachin and Xia, Qinghui and Gerecke, William and O{\textquoteright}Donnell, Timothy J and Berenberg, Daniel and Fisk, Ian and Zanichelli, Niccolò and Zhang, Bo and Nowaczynski, Arkadiusz and Wang, Bei and Stepniewska-Dziubinska, Marta M and Zhang, Shang and Ojewole, Adegoke and Guney, Murat Efe and Biderman, Stella and Watkins, Andrew M and Ra, Stephen and Lorenzo, Pablo Ribalta and Nivon, Lucas and Weitzner, Brian and Ban, Yih-En Andrew and Sorger, Peter K and Mostaque, Emad and Zhang, Zhao and Bonneau, Richard and AlQuraishi, Mohammed},
  title = {{O}pen{F}old: {R}etraining {A}lpha{F}old2 yields new insights into its learning mechanisms and capacity for generalization},
  elocation-id = {2022.11.20.517210},
  year = {2022},
  doi = {10.1101/2022.11.20.517210},
  publisher = {Cold Spring Harbor Laboratory},
  URL = {https://www.biorxiv.org/content/10.1101/2022.11.20.517210},
  eprint = {https://www.biorxiv.org/content/early/2022/11/22/2022.11.20.517210.full.pdf},
  journal = {bioRxiv}
}

If you use OpenProteinSet, please also cite:

@misc{ahdritz2023openproteinset,
  title={{O}pen{P}rotein{S}et: {T}raining data for structural biology at scale},
  author={Gustaf Ahdritz and Nazim Bouatta and Sachin Kadyan and Lukas Jarosch and Daniel Berenberg and Ian Fisk and Andrew M. Watkins and Stephen Ra and Richard Bonneau and Mohammed AlQuraishi},
  year={2023},
  eprint={2308.05326},
  archivePrefix={arXiv},
  primaryClass={q-bio.BM}
}

Any work that cites OpenFold should also cite AlphaFold and, if applicable, AlphaFold-Multimer.