This repository reproduces representative methods within the Generalized Out-of-Distribution Detection Framework, aiming to make a fair comparison across methods that were initially developed for anomaly detection, novelty detection, open set recognition, and out-of-distribution detection.

This codebase is still under construction.

Comments, issues, contributions, and collaborations are all welcome!

*Figure: Timeline of the methods that OpenOOD supports.*

`APS_mode` means Automatic (hyper)Parameter Searching mode, which lets the model validate every hyperparameter in the sweep list on the validation ID/OOD set. The default value is False. Check here for an example.
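The idea behind such a sweep can be sketched in a few lines. The snippet below is a hypothetical, dependency-free illustration and not OpenOOD's implementation: `auroc`, `temperature_msp`, `aps_sweep`, and the `temperature` hyperparameter are names assumed for this example. It scores a validation ID set and a validation OOD set under every configuration in the sweep list and keeps the configuration with the highest AUROC.

```python
import math
from itertools import product


def auroc(id_scores, ood_scores):
    """Fraction of (ID, OOD) pairs where the ID score is higher; ties count half."""
    wins = 0.0
    for s_id in id_scores:
        for s_ood in ood_scores:
            if s_id > s_ood:
                wins += 1.0
            elif s_id == s_ood:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))


def temperature_msp(logits, temperature=1.0):
    """Hypothetical scorer: maximum softmax probability after temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    return max(exps) / sum(exps)


def aps_sweep(scorer, sweep_list, val_id, val_ood):
    """Try every combination in sweep_list; return (best_config, best_auroc)."""
    names = list(sweep_list)
    best_config, best_auroc = None, -1.0
    for values in product(*(sweep_list[n] for n in names)):
        config = dict(zip(names, values))
        id_scores = [scorer(x, **config) for x in val_id]
        ood_scores = [scorer(x, **config) for x in val_ood]
        score = auroc(id_scores, ood_scores)
        if score > best_auroc:  # the first configuration wins ties
            best_config, best_auroc = config, score
    return best_config, best_auroc


# Toy validation logits, made up for illustration.
val_id = [[4.0, 0.5, 0.1], [3.5, 0.2, 0.3], [0.1, 5.0, 0.4]]
val_ood = [[1.0, 0.9, 1.1], [0.8, 1.2, 1.0]]
best_config, best_auroc = aps_sweep(
    temperature_msp, {"temperature": [0.5, 1.0, 10.0]}, val_id, val_ood
)
print(best_config, best_auroc)
```

An exhaustive grid like this grows multiplicatively with the number of swept hyperparameters, so the sweep list is best kept short.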

- 14 October, 2022: OpenOOD is accepted to NeurIPS 2022. Check the report here.

- 14 June, 2022: We release v0.5.

- 12 April, 2022: Primary release to support Full-Spectrum OOD Detection.

To set up the environment, we use conda to manage our dependencies.

Our developers use CUDA 10.1 for their experiments.

You can specify the appropriate cudatoolkit version to install on your machine in the environment.yml file, and then run the following to create the conda environment:

    conda env create -f environment.yml
    conda activate openood
    pip install libmr==0.1.9 # if necessary

Datasets and pretrained models are provided here. Please unzip the files if necessary. We also provide an automatic data download script here.

Our codebase accesses the datasets from ./data/ and pretrained models from ./results/checkpoints/ by default.

    ├── ...
    ├── data
    │   ├── benchmark_imglist
    │   ├── images_classic
    │   ├── images_medical
    │   └── images_largescale
    ├── openood
    ├── results
    │   ├── checkpoints
    │   └── ...
    ├── scripts
    ├── main.py
    ├── ...
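Before launching a script, it can help to confirm that the layout above is in place. The following is a small sketch, not part of OpenOOD: `EXPECTED_DIRS` and `missing_dirs` are hypothetical names, and the directory list is simply transcribed from the tree above. Whether every image folder is needed depends on which benchmarks you plan to run.

```python
from pathlib import Path

# Sub-directories transcribed from the layout shown above.
EXPECTED_DIRS = (
    "data/benchmark_imglist",
    "data/images_classic",
    "data/images_medical",
    "data/images_largescale",
    "results/checkpoints",
)


def missing_dirs(repo_root="."):
    """Return the expected sub-directories that do not exist under repo_root."""
    root = Path(repo_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Data and checkpoint layout looks complete.")
```

Run it from the repository root, or pass the root explicitly, for example `missing_dirs("/path/to/repo")`.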

| OOD Benchmark | MNIST | CIFAR-10 | CIFAR-100 | ImageNet-1K |
|---|---|---|---|---|
| Accuracy | 98.50 | 95.24 | 77.10 | 76.17 |
| Checkpoint | link | link | link | link |

| OSR Benchmark | MNIST-6 | CIFAR-6 | CIFAR-50 | TIN-20 |
|---|---|---|---|---|
| Checkpoint | links | links | links | links |

The easiest hands-on example is to train LeNet-5 on MNIST and evaluate its OOD or FS-OOD performance with the MSP baseline.

sh scripts/basics/mnist/train_mnist.sh

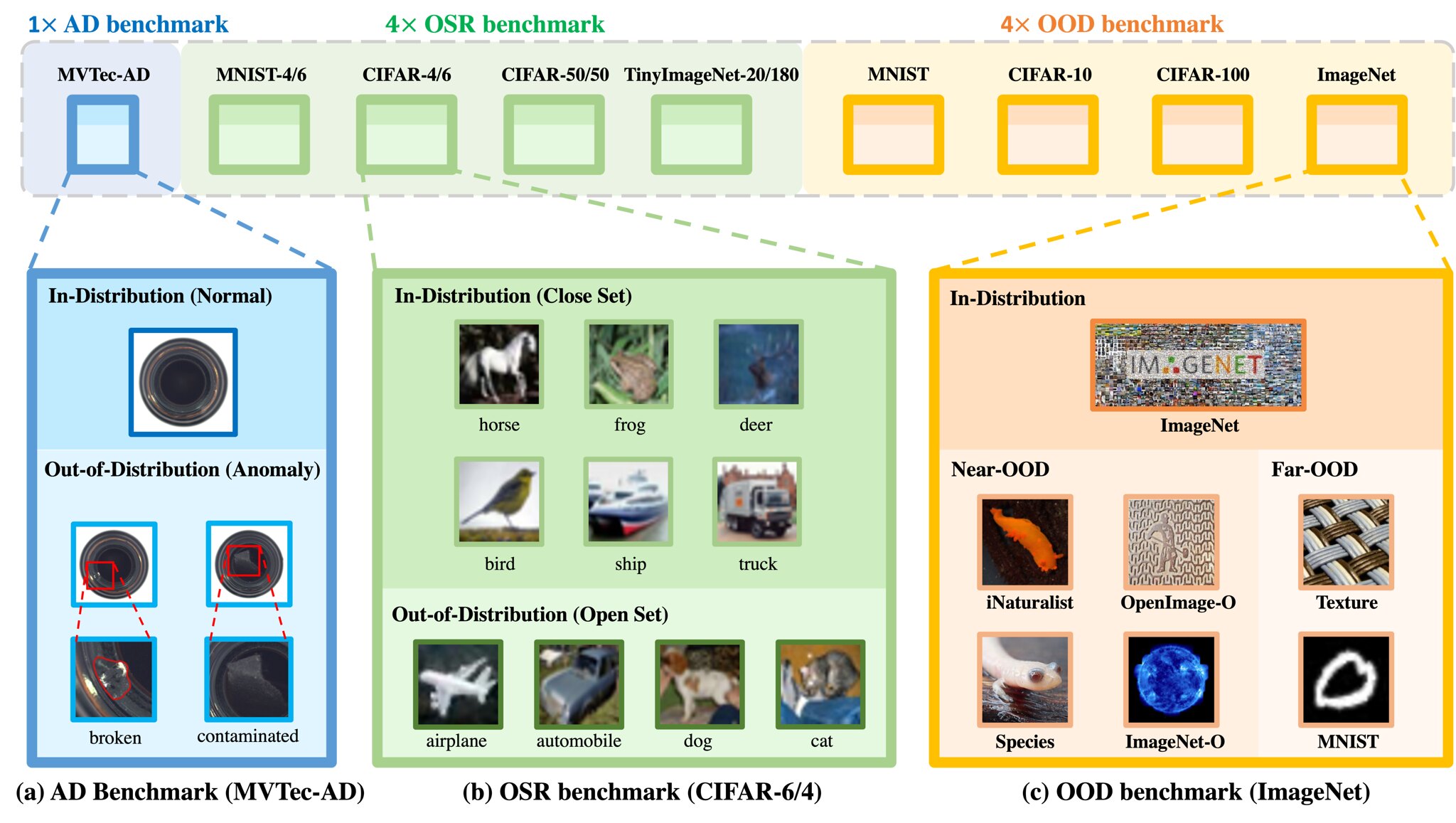

sh scripts/ood/msp/mnist_test_ood_msp.shThis part lists all the benchmarks we support. Feel free to include more.

Anomaly Detection (1)

Open Set Recognition (4)

Out-of-Distribution Detection (5)

- BIMCV (A COVID X-Ray Dataset)
  - Near-OOD: CT-SCAN, X-Ray-Bone
  - Far-OOD: MNIST, CIFAR-10, Texture, Tiny-ImageNet
  - Robust-ID: ActMed
- MNIST
  - Near-OOD: NotMNIST, FashionMNIST
  - Far-OOD: Texture, CIFAR-10, TinyImageNet, Places365
  - Robust-ID: SVHN
- CIFAR-10
  - Near-OOD: CIFAR-100, TinyImageNet
  - Far-OOD: MNIST, SVHN, Texture, Places365
  - Robust-ID: CINIC-10
- CIFAR-100
  - Near-OOD: CIFAR-10, TinyImageNet
  - Far-OOD: MNIST, SVHN, Texture, Places365
  - Robust-ID: CIFAR-100-C
- ImageNet-1K
  - Near-OOD: Species, iNaturalist, ImageNet-O, OpenImage-O
  - Far-OOD: Texture, MNIST
  - Robust-ID: ImageNet-v2
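Each entry in the list above has the same shape: one in-distribution dataset plus Near-OOD, Far-OOD, and Robust-ID groups. As a sketch of how such an entry could be represented in code, the snippet below uses a hypothetical `OODBenchmark` dataclass (not an OpenOOD class) and fills it with the CIFAR-10 entry exactly as listed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OODBenchmark:
    """Hypothetical container for one benchmark entry from the list above."""

    in_distribution: str
    near_ood: tuple
    far_ood: tuple
    robust_id: tuple

    def ood_datasets(self):
        """All OOD test sets, Near-OOD first, then Far-OOD."""
        return self.near_ood + self.far_ood


# The CIFAR-10 entry, transcribed from the list above.
CIFAR10 = OODBenchmark(
    in_distribution="CIFAR-10",
    near_ood=("CIFAR-100", "TinyImageNet"),
    far_ood=("MNIST", "SVHN", "Texture", "Places365"),
    robust_id=("CINIC-10",),
)

for name in CIFAR10.ood_datasets():
    split = "near" if name in CIFAR10.near_ood else "far"
    print(f"{CIFAR10.in_distribution} vs {name} ({split}-OOD)")
```

Keeping the near and far groups separate makes it straightforward to report results per group instead of pooling all OOD sets together.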

This part lists all the backbones we will support in our codebase, including CNN-based and Transformer-based models. Backbones like ResNet-50 and Transformer have ImageNet-1K/22K pretrained models.

CNN-based Backbones (4)

Transformer-based Architectures (2)

This part lists all the methods we include in this codebase. In v0.5, we support more than 32 popular methods for generalized OOD detection in total.

All the supported methodologies can be placed in the following four categories.

We also mark our supported methodologies with the following tags if they have special designs in the corresponding steps, compared to the standard classifier training process.

Out-of-Distribution Detection (22)

Post-Hoc Methods (13):

Training Methods (6):

Training With Extra Data (3):
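To make the post-hoc category concrete, here is a minimal sketch of the MSP (maximum softmax probability) baseline mentioned in the quick start. It is an illustration under stated assumptions and not OpenOOD's implementation: the function names, the toy logits, the threshold of 0.5, and the convention of returning `-1` for samples flagged as OOD are all chosen for this example. A post-hoc method of this kind only needs the logits of an already trained classifier.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def msp_score(logits):
    """MSP confidence: the largest softmax probability (higher = more ID-like)."""
    return max(softmax(logits))


def predict_with_rejection(logits, threshold):
    """Return (predicted_class, score), or (-1, score) when flagged as OOD."""
    score = msp_score(logits)
    if score < threshold:
        return -1, score
    return logits.index(max(logits)), score


# Toy logits and threshold, made up for illustration.
confident = [6.0, 0.5, 0.2]
uncertain = [1.0, 1.1, 0.9]
print(predict_with_rejection(confident, threshold=0.5))
print(predict_with_rejection(uncertain, threshold=0.5))
```

In practice the threshold is usually picked on held-out data, and threshold-free metrics such as AUROC are reported alongside it.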

We appreciate all contributions that improve OpenOOD, and we sincerely welcome community users to participate in the project. Please refer to CONTRIBUTING.md for the contribution guidelines.

If you find our repository useful for your research, please consider citing our paper:

    @article{yang2022openood,
      author = {Yang, Jingkang and Wang, Pengyun and Zou, Dejian and Zhou, Zitang and Ding, Kunyuan and Peng, Wenxuan and Wang, Haoqi and Chen, Guangyao and Li, Bo and Sun, Yiyou and Du, Xuefeng and Zhou, Kaiyang and Zhang, Wayne and Hendrycks, Dan and Li, Yixuan and Liu, Ziwei},
      title = {OpenOOD: Benchmarking Generalized Out-of-Distribution Detection},
      year = {2022}
    }

    @article{yang2022fsood,
      title = {Full-Spectrum Out-of-Distribution Detection},
      author = {Yang, Jingkang and Zhou, Kaiyang and Liu, Ziwei},
      journal = {arXiv preprint arXiv:2204.05306},
      year = {2022}
    }

    @article{yang2021oodsurvey,
      title = {Generalized Out-of-Distribution Detection: A Survey},
      author = {Yang, Jingkang and Zhou, Kaiyang and Li, Yixuan and Liu, Ziwei},
      journal = {arXiv preprint arXiv:2110.11334},
      year = {2021}
    }