- Prerequisites

- Image Acquisition

- Image Labeling

- Label Conversion

- Ultralytics YOLO (You Only Look Once)

- Training on Nvidia GPU

- YOLO Training Process

- Training Results

- Interpretation of Training Results

Python 3.12 or higher.

Set up and activate Python environment

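python -m venv .venv

.\.venv\Scripts\activate

The Python environment needs to be active for most of this project. The activation command above is for Windows; on Linux or macOS, use source .venv/bin/activate instead.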

Install dependencies

pip install -r requirements.txt

You will need Nvidia's CUDA Toolkit in a version compatible with your GPU: download. For more details about selecting the correct CUDA SDK toolkit version and training on an Nvidia GPU, see the chapter Training on Nvidia GPU.

This section deals with the acquisition of images and is located in the download_images directory. You need to navigate to this directory to run the commands in this section.

cd ./download_images

The easiest way to acquire a dataset for object detection is to download one from one of the many available providers:

- Roboflow: https://public.roboflow.com/object-detection/

- Kaggle: https://www.kaggle.com/datasets

- Papers with Code: https://paperswithcode.com/datasets?task=object-detection

Most of the time, you can even download the dataset in the appropriate annotations format for the model you intend to use.

For this project, I collected the images myself and provide the Python scripts used (google and unsplash). Both scripts use the BeautifulSoup Python package to scrape a specific website for images.

The Google version uses Google Image Search and downloads the preview images for all search results.

The Unsplash version uses the Unsplash Image Search and downloads the full images for all search results.

| | Google | Unsplash |
|---|---|---|
| Advantage | Many images | High resolution |
| Disadvantage | Low resolution | Few images |

Run the Google scraper:

python ./image_scraper_google.py

Run the Unsplash scraper:

python ./image_scraper_unsplash.py

The images will be saved to the appropriate subdirectory in birds_dataset. Naturally, some images won't show the correct motif. Because you will review every picture again while labeling, there's no need to sort them out now.

This section deals with labeling the previously downloaded images and is located in the model_training directory. You need to navigate to this directory to run the commands in this section.

cd ./model_training

There are many different tools and websites available for annotating images:

- LabelImg: https://github.com/HumanSignal/labelImg

- VGG Image Annotator (VIA): https://www.robots.ox.ac.uk/~vgg/software/via/

- Labelbox: https://labelbox.com/

- Computer Vision Annotation Tool (CVAT): https://github.com/cvat-ai/cvat

Many of these tools have automatic annotation options available.

LabelImg has a user-friendly GUI that allows you to open files and folders, has a straightforward labeling process, and enables you to export labels in various formats.

The following commands are run from the root of this repository.

Install LabelImg

pip install labelImg

Run LabelImg:

labelImg

Currently, the pip version of LabelImg is incompatible with Python 3.10 and later, as described in GitHub issue #811: Unable to draw annotations on Windows. Fortunately, the issue is fixed in the GitHub version. If you run into it, simply use the latest GitHub repository instead of the pip version.

git clone https://github.com/tzutalin/labelImg.git

cd labelImg

Compile the resources:

pyrcc5 -o libs/resources.py resources.qrc

Run LabelImg:

python labelImg.py

This part is dedicated to Labelbox, an online tool that makes adding labels easy.

After labeling all images, you can export the data, which lets you download a labelbox_annotation.ndjson file. This file must be processed before it can be used for the YOLO Training Process in this project.

Once the images are annotated, the annotations are sometimes not in the correct format right away. For training with YOLO, you need to convert them to the format specified below.

The conversion script can be found in the model_training directory.

cd model_training

Required file structure in YOLO:

dataset/

├── images/

│ ├── train/

│ │ ├── img1.jpg

│ │ └── ...

│ ├── val/

│ │ └── ...

│ └── test/

│ └── ...

└── labels/

├── train/

│ ├── img1.txt

│ └── ...

├── val/

│ └── ...

└── test/

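└── ...

Required annotation format in YOLO (one text file per image, one line per object):

`<object-class> <x_center> <y_center> <width> <height>`

The class is the zero-based class index. The coordinates are normalized to the range 0 to 1: x_center and width are divided by the image width, and y_center and height by the image height. There is no need to convert the labels from LabelImg to YOLO format, because LabelImg can export the annotations in the correct format directly.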
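The following sketch illustrates the format. It converts a pixel-space bounding box, given as corner coordinates, into one YOLO label line. The function name to_yolo_line and the corner-based input are assumptions for this example.

```python
def to_yolo_line(class_id: int, box: tuple[float, float, float, float],
                 img_width: int, img_height: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # Box center and size, normalized by the image dimensions.
    x_center = (x_min + x_max) / 2 / img_width
    y_center = (y_min + y_max) / 2 / img_height
    width = (x_max - x_min) / img_width
    height = (y_max - y_min) / img_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A 200 x 200 px box in a 400 x 500 px image, labeled with class 2.
print(to_yolo_line(2, (100, 50, 300, 250), 400, 500))
```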

The script uses the images from download_images/birds_dataset (Image Acquisition) and the annotation file downloaded from Labelbox (.ndjson). By default, the final results (images and annotations) are stored in a folder called annotations. Since all information about each image is stored in the .ndjson file, we can parse it to fit the required annotation format for YOLO.

python convert_label_labelbox.py

The script loads the Labelbox annotation file and extracts the necessary information: the name of each image and the positions of its bounding boxes.

It uses this data to create a new text file with the same name as the image and writes the bounding box information into it according to the YOLO requirements.

It creates a new subdirectory in output_dir for each subdirectory found in images_base_dir, then copies the images and text files into their respective subdirectories.
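The parsing step can be sketched as follows. This sketch assumes the Labelbox export v2 layout. In that layout, each .ndjson line holds the image file name in data_row.external_id and the image size in media_attributes (width, height). The boxes sit under projects, labels, annotations, objects, each with a name and a bounding_box given as top, left, height, and width in pixels. Verify these field names against your own export. The function names are hypothetical, and convert_label_labelbox.py may be structured differently.

```python
import json
from pathlib import Path


def labelbox_record_to_yolo(record: dict, class_ids: dict[str, int]) -> tuple[str, list[str]]:
    """Return (label file name, YOLO label lines) for one parsed .ndjson record (assumed schema)."""
    image_name = record["data_row"]["external_id"]
    img_w = record["media_attributes"]["width"]
    img_h = record["media_attributes"]["height"]
    lines = []
    for project in record["projects"].values():
        for label in project["labels"]:
            for obj in label["annotations"]["objects"]:
                box = obj["bounding_box"]  # pixel values: top, left, height, width
                x_center = (box["left"] + box["width"] / 2) / img_w
                y_center = (box["top"] + box["height"] / 2) / img_h
                lines.append(
                    f"{class_ids[obj['name']]} {x_center:.6f} {y_center:.6f} "
                    f"{box['width'] / img_w:.6f} {box['height'] / img_h:.6f}"
                )
    # The label file gets the same name as the image, with a .txt extension.
    return Path(image_name).with_suffix(".txt").name, lines


def convert_ndjson(ndjson_path: Path, output_dir: Path, class_ids: dict[str, int]) -> None:
    """Write one YOLO .txt file per record of a Labelbox .ndjson export."""
    output_dir.mkdir(parents=True, exist_ok=True)
    with ndjson_path.open(encoding="utf-8") as f:
        for raw in f:
            if raw.strip():  # skip blank lines
                txt_name, lines = labelbox_record_to_yolo(json.loads(raw), class_ids)
                content = "".join(line + "\n" for line in lines)
                (output_dir / txt_name).write_text(content, encoding="utf-8")
```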

To create a dataset according to the YOLO requirements, we need to split it into three distinct subsets: train, val, and test. Additionally, we must organize the images and labels into different subdirectories following the required file structure in YOLO. The script dataset_split.py will perform this split as follows:

- train: 70%

- val: 20%

- test: 10%
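The 70/20/10 split can be sketched as follows. This is an illustration under stated assumptions, not the contents of dataset_split.py. It assumes .jpg images with same-named .txt label files in one flat folder. It uses a fixed seed so the split is reproducible.

```python
import random
import shutil
from pathlib import Path

# Split ratios in percent (train 70%, val 20%, test 10%).
SPLIT_PERCENT = {"train": 70, "val": 20, "test": 10}


def split_stems(stems: list[str], seed: int = 42) -> dict[str, list[str]]:
    """Shuffle file stems reproducibly and cut them into train/val/test lists."""
    shuffled = sorted(stems)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * SPLIT_PERCENT["train"] // 100
    n_val = len(shuffled) * SPLIT_PERCENT["val"] // 100
    return {
        "train": shuffled[:n_train],
        "val": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],  # the remainder goes to test
    }


def copy_split(dataset_dir: Path, output_dir: Path, seed: int = 42) -> None:
    """Copy image/label pairs into output_dir/images/<split>/ and output_dir/labels/<split>/."""
    images = {p.stem: p for p in dataset_dir.glob("*.jpg")}
    labels = {p.stem: p for p in dataset_dir.glob("*.txt")}
    paired = [stem for stem in images if stem in labels]  # skip images without a label file
    for split, stems in split_stems(paired, seed).items():
        for kind in ("images", "labels"):
            (output_dir / kind / split).mkdir(parents=True, exist_ok=True)
        for stem in stems:
            shutil.copy2(images[stem], output_dir / "images" / split)
            shutil.copy2(labels[stem], output_dir / "labels" / split)
```

Splitting on file stems keeps each image and its label file in the same subset.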

Adjust the dataset_dir variable in the script to the location where you have stored the labeled images (image and text files).

Split the dataset:

python dataset_split.py

Ultralytics YOLO (You Only Look Once) models are a family of convolutional neural networks designed for real-time object detection. They are widely used in various applications due to their speed and accuracy. The primary purpose of YOLO models is to detect objects within an image or video stream and classify them into predefined categories. This involves identifying the location (bounding box) and the category (class label) of each object present in the scene. [1]

There are multiple versions of YOLO models, and newer versions are typically improvements over previous ones. In this project, YOLOv5, YOLOv8, and YOLOv9 are highlighted. Each version offers five different variants to support a wide range of applications. The smallest variant is designed for small, battery-powered, non-critical applications. The largest variant is intended for high-precision applications where power consumption is not a constraint.

- Single Shot Detection

YOLO models perform object detection in a single shot. Unlike traditional methods that use a sliding window approach to scan an image at multiple scales and locations, YOLO divides the image into a grid and predicts bounding boxes and class probabilities directly.

- Grid-Based Approach

- Image Division: The input image is divided into an SxS grid.

- Bounding Box Prediction: Each grid cell predicts a fixed number of bounding boxes.

- Confidence Score: Each bounding box comes with a confidence score that indicates the likelihood of an object being present and the accuracy of the bounding box coordinates.

- Class Probability: Each grid cell also predicts class probabilities for the object within it.

- Intersection Over Union (IoU)

YOLO uses IoU (the area of overlap between two boxes divided by the area of their union) to evaluate the overlap between predicted bounding boxes and ground truth boxes. This helps in determining the accuracy of the predictions and in eliminating redundant boxes through non-max suppression.

- Neural Network Architecture

YOLO models use a convolutional neural network (CNN) to process the input image and produce the predictions. The architecture typically involves:

- Feature Extraction: Initial layers of the network extract features from the input image.

- Detection Head: Later layers predict bounding boxes, confidence scores, and class probabilities.

Limitations:

- Small Object Detection: YOLO models may struggle with detecting very small objects due to the grid-based approach.

- Localization Accuracy: The speed of YOLO can sometimes come at the cost of localization accuracy, especially in complex scenes with multiple overlapping objects.

- Training Data: The performance of YOLO heavily depends on the quality and diversity of the training data. Insufficient or biased training data can lead to poor generalization.
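The IoU computation and non-max suppression described above can be sketched in plain Python. Boxes are assumed to be (x_min, y_min, x_max, y_max) tuples. This is a simplified, class-agnostic greedy NMS for illustration only. Ultralytics uses a vectorized implementation that, by default, suppresses boxes separately per class.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: list[Box], scores: list[float],
                        iou_threshold: float = 0.5) -> list[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that overlap the kept box above the threshold.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

For example, two heavily overlapping detections of the same bird collapse into the higher-scoring one, while a distant box survives.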

YOLOv5

- Release Date: 2020

- Framework: Built on the PyTorch framework.

- Key Features:

- Ease of Use: Simplified training and deployment with user-friendly configuration files.

- Auto-Anchor Optimization: Automatically calculates optimal anchor boxes during training.

- Advanced Data Augmentation: Uses mosaic augmentation to enhance model robustness.

- Export Options: Supports exporting models to formats like ONNX, CoreML, and TensorRT for diverse deployment scenarios.

- Performance: Known for its speed and ease of use, making it a popular choice. [2] [7]

YOLOv8

- Release Date: 2023

- Framework: Builds on YOLOv5's framework with improvements.

- Key Features:

- Instance Segmentation: Adds the capability to segment objects at the pixel level, useful for tasks like semantic segmentation.

- Pose Estimation: Can estimate the orientation or pose of detected objects, beneficial for applications in sports analysis and augmented reality.

- YOLO-World Model: Introduces open-vocabulary detection, allowing identification of objects using descriptive texts.

- Unified Framework: Combines object detection, instance segmentation, and image classification in a single, unified framework.

- Performance: Faster and more accurate than YOLOv5, with added functionalities for broader use cases. [3] [7] [8] [9]

YOLOv9

- Release Date: 2024

- Framework: Incorporates significant architectural advancements over YOLOv8.

- Key Features:

- Architectural Enhancements: Utilizes Programmable Gradient Information (PGI) and Generalized Efficient Layer Aggregation Network (GELAN) to improve learning capabilities and efficiency.

- Improved Accuracy: Focuses on minimizing information loss and enhancing accuracy through advanced neural network principles.

- Performance: Generally more accurate than YOLOv8, with lower false positive rates but potentially higher false negatives. It is designed to be a conservative and precise model for critical detection tasks. [4] [8] [10]

Comparison between the different YOLO versions and variants. [5] [6]

| Variant | Parameters (v5) | Parameters (v8) | Parameters (v9) | Model Size (v5) | Model Size (v8) | Model Size (v9) | Inference Speed (v5) | Inference Speed (v8) | Inference Speed (v9) | mAP@50 (v5) | mAP@50 (v8) | mAP@50 (v9) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| nano | 1.9M | 3.2M | 2.9M | 4.0 MB | 7.1 MB | 6.0 MB | 934 FPS | 1163 FPS | 1250 FPS | 45.7% | 52.5% | 54.2% |
| small | 7.2M | 7.2M | 6.8M | 14.4 MB | 14.4 MB | 12.8 MB | 877 FPS | 925 FPS | 1010 FPS | 56.8% | 61.8% | 63.0% |
| medium | 21.2M | 21.2M | 19.0M | 41.2 MB | 41.2 MB | 37.5 MB | 586 FPS | 540 FPS | 590 FPS | 64.1% | 67.2% | 69.5% |
| large | 46.5M | 46.5M | 42.0M | 91.5 MB | 91.5 MB | 85.0 MB | 446 FPS | 391 FPS | 420 FPS | 67.3% | 69.8% | 71.5% |
| xlarge | 86.7M | 87.7M | 78.0M | 166.6 MB | 172.1 MB | 160.0 MB | 252 FPS | 236 FPS | 260 FPS | 68.9% | 71.0% | 72.5% |

Variant

Indicates the specific variant of the YOLO model. For version 9 (and later), the naming scheme has changed slightly, but it still offers five variants.

Parameters

The total number of learnable parameters in the model (in millions: M). More parameters typically mean a more complex model that can capture more details but requires more computation.

Model Size

The size of the model file on disk, measured in megabytes (MB). Larger models occupy more storage and generally have more parameters.

Inference Speed

The speed at which the model can process images on an NVIDIA RTX 4090 GPU, measured in frames per second (FPS). Higher FPS means faster inference.

mAP@50 (COCO)

The mean Average Precision at 50% Intersection over Union (IoU) on the COCO dataset. It is a standard metric for evaluating the accuracy of object detection models. Higher values indicate better performance.

YOLOv5 is ideal for ease of use and quick deployment. Note: it is now outdated and no longer recommended.

YOLOv8 offers enhanced capabilities like instance segmentation and pose estimation, making it suitable for applications requiring detailed analysis.

YOLOv9 provides the highest accuracy with advanced architectural improvements, aimed at precision-critical tasks.

For training on your own PC, it is recommended to use the GPU, as training with the CPU will take significantly longer.

Your GPU must support CUDA!

CUDA is a parallel computing platform and programming model developed by NVIDIA for general computing on its GPUs.

You can find out which version of CUDA is supported by your GPU here.

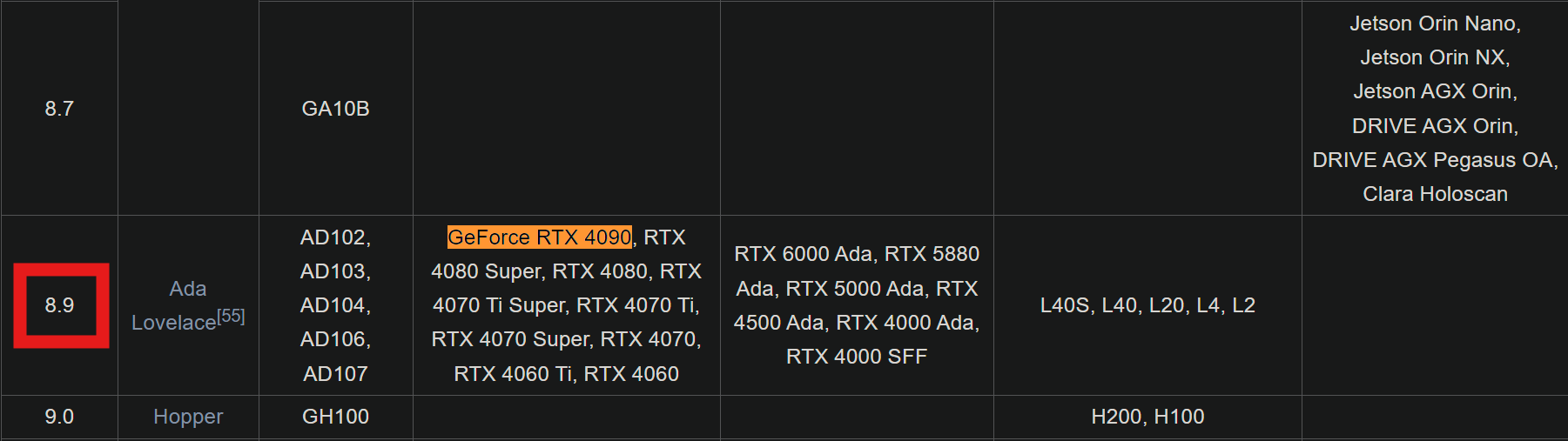

Example with the RTX 4090:

- Navigate to the "GPUs supported" section of the Wikipedia page for CUDA.

- There are two tables there. The second one lists the available CUDA GPUs: search for your GPU (marked orange) and note its "Compute capability (version)" value (marked red).

- Then use the first table to see which CUDA SDK versions support that compute capability.

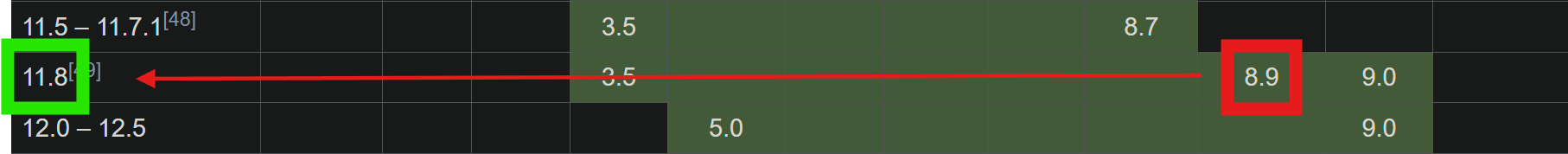

To leverage the GPU for computations, PyTorch must be compiled with support for the specific version of the CUDA SDK toolkit installed on your system.

For example, torch cu118 indicates PyTorch compiled with CUDA 11.8 support.

After finding out which CUDA version your GPU supports, adjust the version suffix in the URL below accordingly: https://download.pytorch.org/whl/cu118. Note that PyTorch only publishes builds for selected CUDA versions. The PyTorch documentation also offers a command builder you can use to get the correct version (make sure to uninstall torch first).

pip uninstall torch torchvision torchaudio

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu118

This section deals with the training process based on the previously created dataset and is located in the model_training directory. You need to navigate to this directory to run the commands in this section.

cd ./model_training

The training process itself is quite simple once the images have been acquired, labeled, and divided into train, val, and test subsets.

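Running GPU training requires the training script to support multiprocessing (see documentation). In practice, the training code must run inside an `if __name__ == "__main__":` block, because on Windows each worker process re-imports the main script.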

The freeze_support() function is used in Python's multiprocessing module to ensure that the script works correctly when converted to a standalone executable, especially on Windows platforms.

The training process in YOLO uses multiprocessing internally for tasks like data loading, augmentation, and training.

Adjust the dataset configuration file. You need to specify the location of your dataset with the path key.

The locations of your training and validation images (train and val) are given relative to path and should stay the same.

If you want to use a relative path to your dataset (./dataset), you need to adjust your Ultralytics settings. On Windows, the file settings.yaml is usually located at C:\Users\username\AppData\Roaming\Ultralytics. There, set the datasets_dir variable to your base directory for training (model_training).
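A dataset configuration file for the folder layout above can be generated as follows. This sketch writes the YAML text with plain string formatting. The keys path, train, val, test, and names follow the layout of Ultralytics dataset files. The class names and the function name dataset_yaml are hypothetical placeholders.

```python
def dataset_yaml(dataset_path: str, class_names: list[str]) -> str:
    """Return the text of a YOLO dataset configuration file (hypothetical helper)."""
    lines = [
        f"path: {dataset_path}  # dataset root directory",
        "train: images/train  # relative to path",
        "val: images/val  # relative to path",
        "test: images/test  # optional",
        "names:",
    ]
    # Class indices must match the class numbers used in the label files.
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical class names; replace them with your own.
    print(dataset_yaml("./dataset", ["blackbird", "blue_tit", "magpie", "robin", "sparrow"]))
```

With a relative path such as ./dataset, Ultralytics resolves it against datasets_dir, as described above.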

Adjust the training script to your needs. There are many different configurations you can make here, which are explained under Training Parameters.

Choose a model:

For your model (variable model) you can choose which version (e.g. yolov8, yolov9) and variant (e.g. yolov8n, yolov9m) you want to use (see Ultralytics YOLO).

Here, you can also decide whether you want to use a pretrained model (almost always recommended) or to train a model from scratch.

To use a pretrained model, you need to specify a .pt file (e.g. yolov8n.pt, yolov9m.pt). To train from scratch, you need to use a .yaml file (e.g. yolov8n.yaml, yolov9m.yaml).

Basic Configuration:

The most basic configuration for model.train() requires only the data parameter to be set (dataset configuration file).

More details about the remaining parameters can be found under Training Parameters or in the official Documentation.

Finally, run the training script in the python environment.

python ./model_training.py

| Category | Parameter | Type | Range | Default | Description |
|---|---|---|---|---|---|
| Data | data | str | Path string | None | Path to the dataset configuration file. Adjust when you switch datasets. |
| Training | epochs | int | >=1 | 100 | Number of training epochs. Increase for more training iterations. |
| | batch | int | >=1 | 16 | Batch size for training. Higher values require more GPU memory. |
| | imgsz | int | Any multiple of 32 | 640 | Image size for training. Larger images can improve accuracy but use more memory. |
| | name | str | Any string | None | Name of the training run or model. Useful for distinguishing experiments. |
| | patience | int | >=0 | 100 | Number of epochs to wait without improvement before stopping training early. |
| Learning | lr0 | float | 0 to 1 | 0.01 | Initial learning rate. Controls how quickly the model learns. |
| | lrf | float | 0 to 1 | 0.01 | Final learning rate as a fraction of lr0 (final rate = lr0 × lrf). The learning rate is reduced gradually towards this value. |
| | momentum | float | 0 to 1 | 0.937 | Momentum for the optimizer. Helps accelerate gradient vectors. |
| | weight_decay | float | 0 to 1 | 0.0005 | Weight decay (L2 regularization). Prevents overfitting by penalizing large weights. |
| | warmup_epochs | float | 0 to 10 | 3.0 | Number of warmup epochs. Stabilizes early training. |
| | warmup_momentum | float | 0 to 1 | 0.8 | Initial momentum during warmup. Helps in building up the velocity of weight updates. |
| | warmup_bias_lr | float | 0 to 1 | 0.1 | Initial learning rate for biases during warmup. Converges biases faster initially. |
| | cos_lr | bool | True or False | False | Use a cosine learning rate scheduler. |
| Loss | box | float | >=0 | 7.5 | Box regression loss gain. |
| | cls | float | >=0 | 0.5 | Classification loss gain. |
| Augmentation | hsv_h | float | 0 to 1 | 0.015 | Hue augmentation (HSV color space). Adjusts degree of hue augmentation. |
| | hsv_s | float | 0 to 1 | 0.7 | Saturation augmentation (HSV color space). Enhances model robustness. |
| | hsv_v | float | 0 to 1 | 0.4 | Value augmentation (HSV color space). |
| | degrees | float | -180 to 180 | 0.0 | Image rotation degrees. |
| | translate | float | 0 to 1 | 0.1 | Image translation. |
| | scale | float | >=0 | 0.5 | Image scaling. |
| | shear | float | -180 to 180 | 0.0 | Image shear. |
| | perspective | float | 0 to 1 | 0.0 | Image perspective transformation. |
| | flipud | float | 0 to 1 | 0.0 | Vertical flip probability. |
| | fliplr | float | 0 to 1 | 0.5 | Horizontal flip probability. |
| | mosaic | float | 0 to 1 | 1.0 | Mosaic augmentation probability. Combines four images into one. |
| | mixup | float | 0 to 1 | 0.2 | Mixup augmentation probability. Blends two images for each training example. |
| | copy_paste | float | 0 to 1 | 0.1 | Copy-paste augmentation probability. Copies objects from one image to another. |
| Other | close_mosaic | int | Any positive integer | 10 | Number of final epochs during which mosaic augmentation is disabled. |

This section compares the training results of different YOLO versions and variants. The parameters remained the same across all training sessions (except for version, variant, and name) and are the ones set in model_training.py. Before each training process, previously created dataset *.cache files were deleted to ensure a fair comparison. Each training run uses 533 training images and 152 validation images across 5 classes.

| Variant | Training Time (v5) | Training Time (v8) | Training Time (v9) | Model Size (v5) | Model Size (v8) | Model Size (v9) |
|---|---|---|---|---|---|---|
| nano | 7 min 28 s | 7 min 10 s | 13 min 51 s | 5.04 MB | 5.97 MB | 4.44 MB |
| medium | 16 min 18 s | 17 min 12 s | 19 min 49 s | 48.1 MB | 49.6 MB | 38.9 MB |
| xlarge | 40 min 20 s | 36 min 22 s | 82 min 27 s | 185 MB | 130 MB | 111 MB |

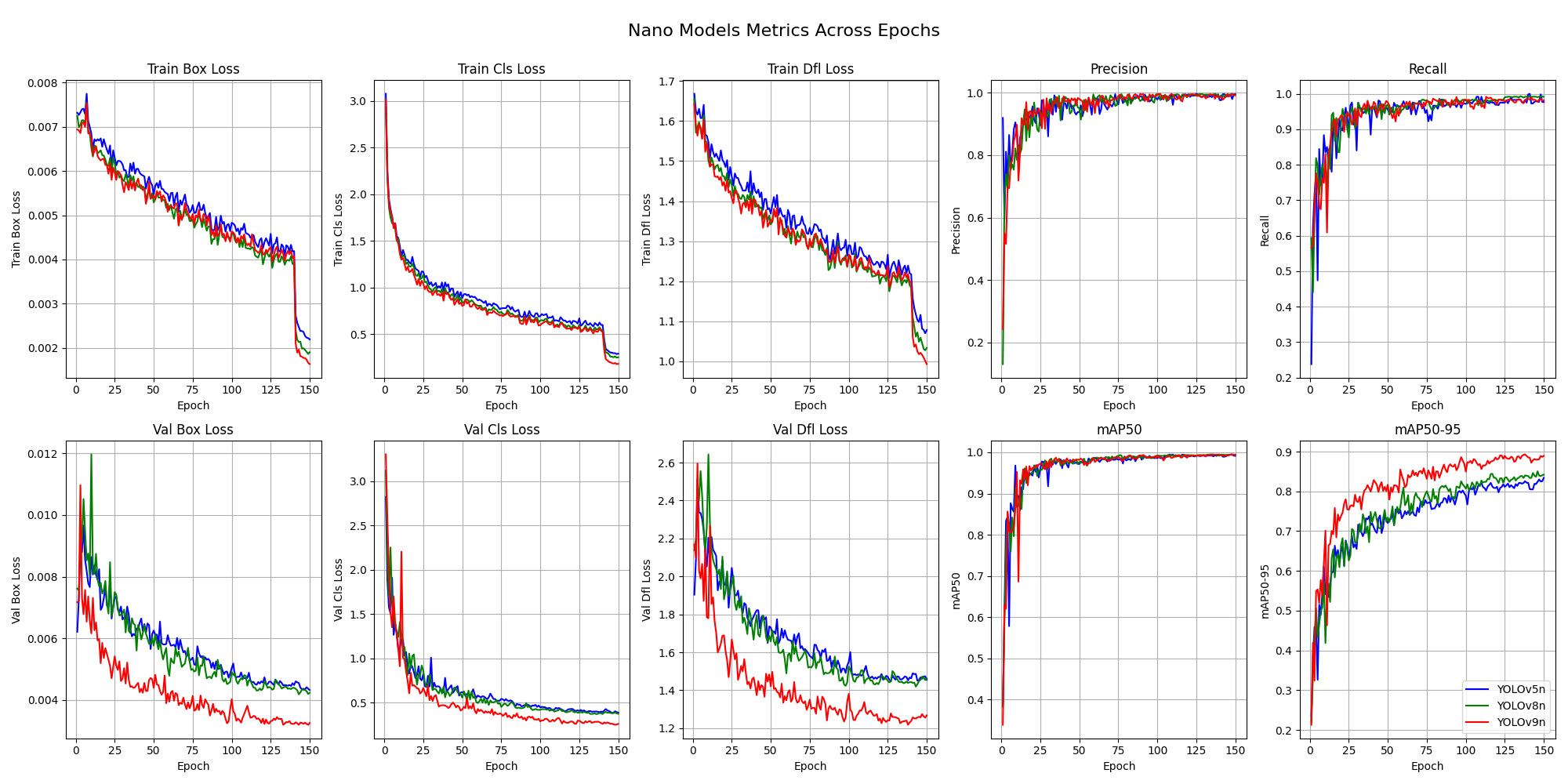

The following results are based on a single training run of the smallest variant of each YOLO version. They should therefore not be taken at face value.

The following table displays the best epoch of the smallest variant of each YOLO version (YOLOv5nu.pt, YOLOv8n.pt, YOLOv9t.pt).

| Model | Best Epoch | Precision | Recall | mAP50 | mAP50-95 | Train Box Loss | Train Cls Loss | Train Dfl Loss | Val Box Loss | Val Cls Loss | Val Dfl Loss |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5nu | 148 | 98.67% | 96.13% | 97.96% | 86.33% | 0.0289 | 0.0562 | 0.0431 | 0.0311 | 0.0463 | 0.0410 |
| YOLOv8n | 140 | 99.24% | 97.53% | 98.45% | 87.45% | 0.0245 | 0.0513 | 0.0389 | 0.0273 | 0.0421 | 0.0367 |
| YOLOv9t | 145 | 98.87% | 96.65% | 98.05% | 86.78% | 0.0273 | 0.0551 | 0.0415 | 0.0296 | 0.0448 | 0.0394 |

These graphs show the development over 150 training epochs of the smallest variant of each YOLO version.

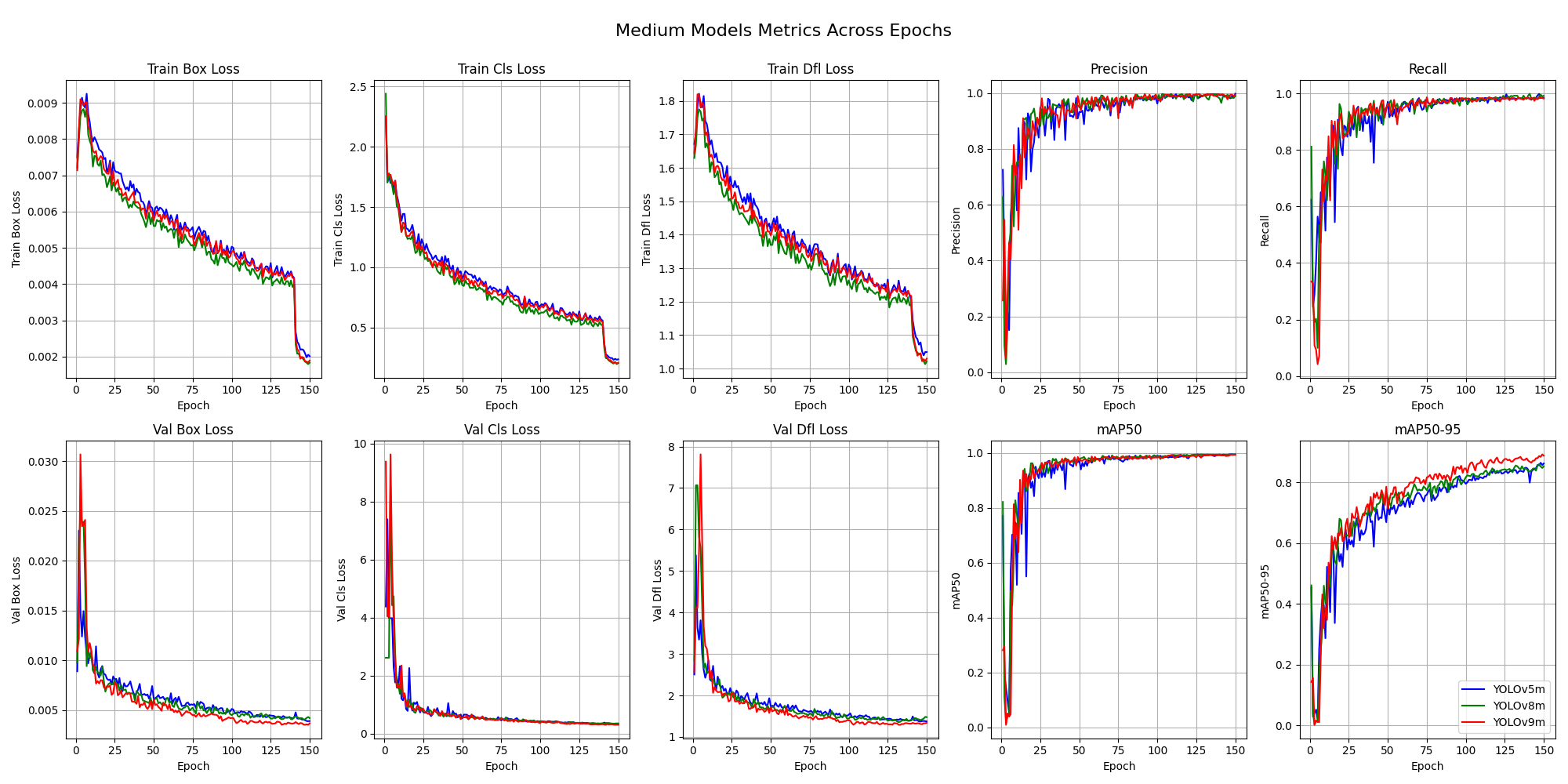

The following results are based on a single training run of the medium-sized variant of each YOLO version. They should therefore not be taken at face value.

The following table displays the best epoch of the medium size variant of each YOLO version (YOLOv5mu.pt, YOLOv8m.pt, YOLOv9m.pt).

| Model | Best Epoch | Precision | Recall | mAP50 | mAP50-95 | Train Box Loss | Train Cls Loss | Train Dfl Loss | Val Box Loss | Val Cls Loss | Val Dfl Loss |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5mu | 142 | 99.34% | 97.89% | 98.72% | 88.12% | 0.0234 | 0.0481 | 0.0352 | 0.0254 | 0.0385 | 0.0332 |
| YOLOv8m | 141 | 99.53% | 97.65% | 98.85% | 87.94% | 0.0221 | 0.0467 | 0.0341 | 0.0241 | 0.0369 | 0.0318 |
| YOLOv9m | 144 | 99.42% | 97.95% | 98.79% | 88.23% | 0.0232 | 0.0483 | 0.0354 | 0.0256 | 0.0387 | 0.0334 |

These graphs show the development over 150 training epochs of the medium size variant of each YOLO version.

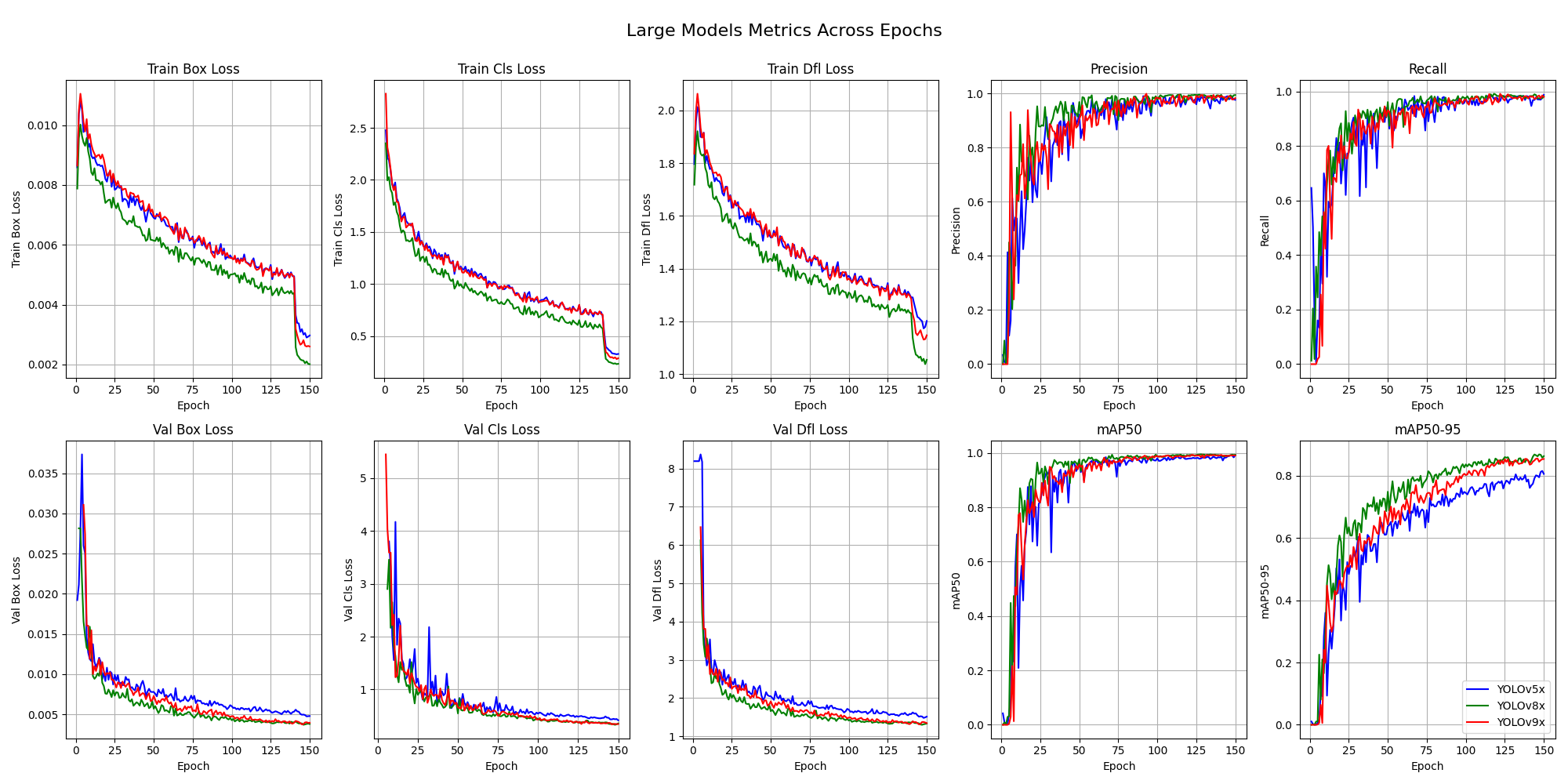

The following results are based on a single training run of the largest variant of each YOLO version. They should therefore not be taken at face value.

The following table displays the best epoch of the largest variant of each YOLO version (YOLOv5xu.pt, YOLOv8x.pt, YOLOv9e.pt).

| Model | Best Epoch | Precision | Recall | mAP50 | mAP50-95 | Train Box Loss | Train Cls Loss | Train Dfl Loss | Val Box Loss | Val Cls Loss | Val Dfl Loss |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5xu | 143 | 99.12% | 97.42% | 98.56% | 88.57% | 0.0228 | 0.0474 | 0.0348 | 0.0248 | 0.0378 | 0.0325 |
| YOLOv8x | 139 | 99.27% | 97.68% | 98.71% | 88.31% | 0.0219 | 0.0456 | 0.0335 | 0.0239 | 0.0364 | 0.0312 |
| YOLOv9e | 145 | 99.45% | 97.84% | 98.79% | 88.64% | 0.0231 | 0.0481 | 0.0351 | 0.0253 | 0.0384 | 0.0331 |

These graphs show the development over 150 training epochs of the largest variant of each YOLO version.

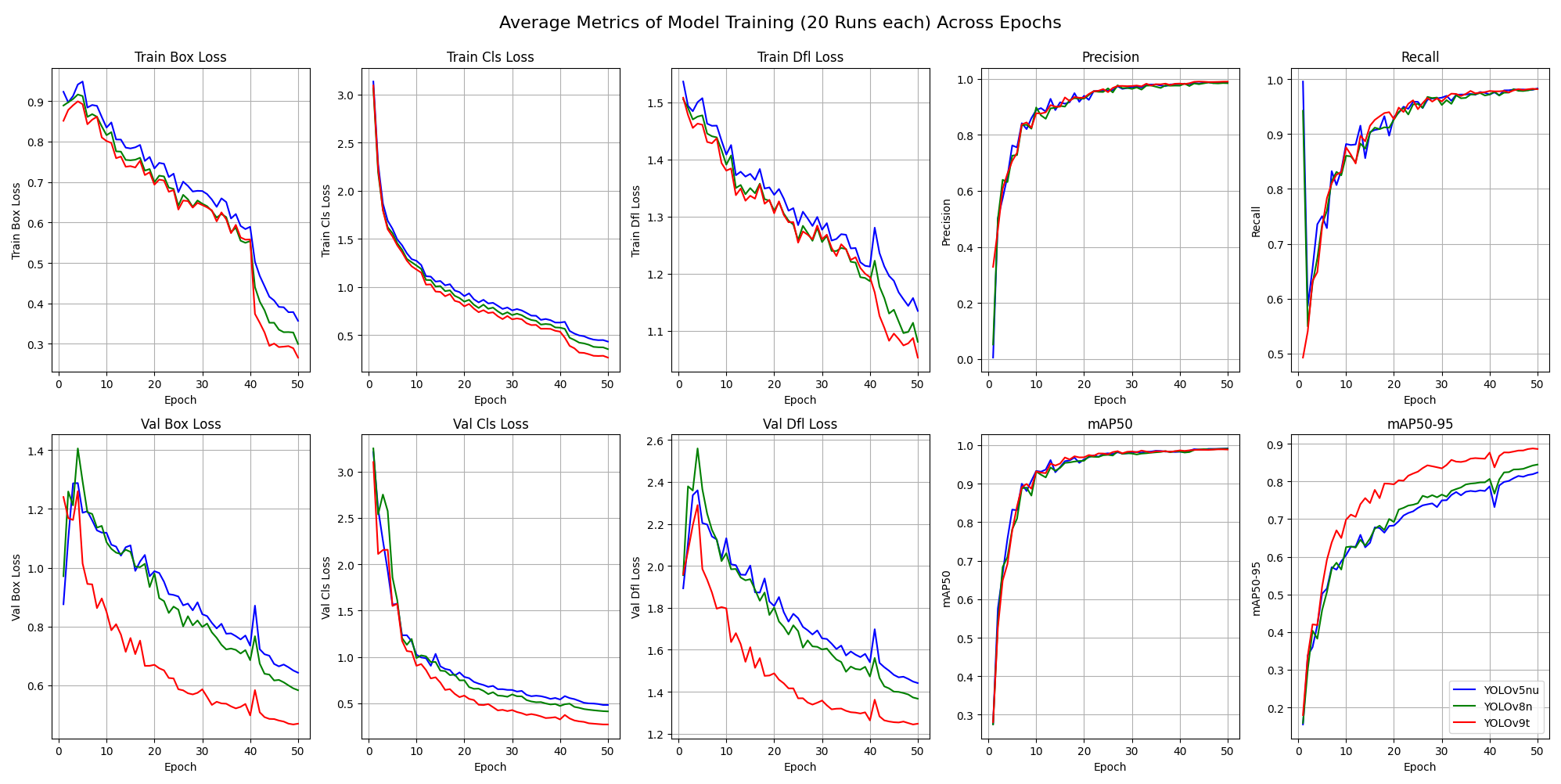

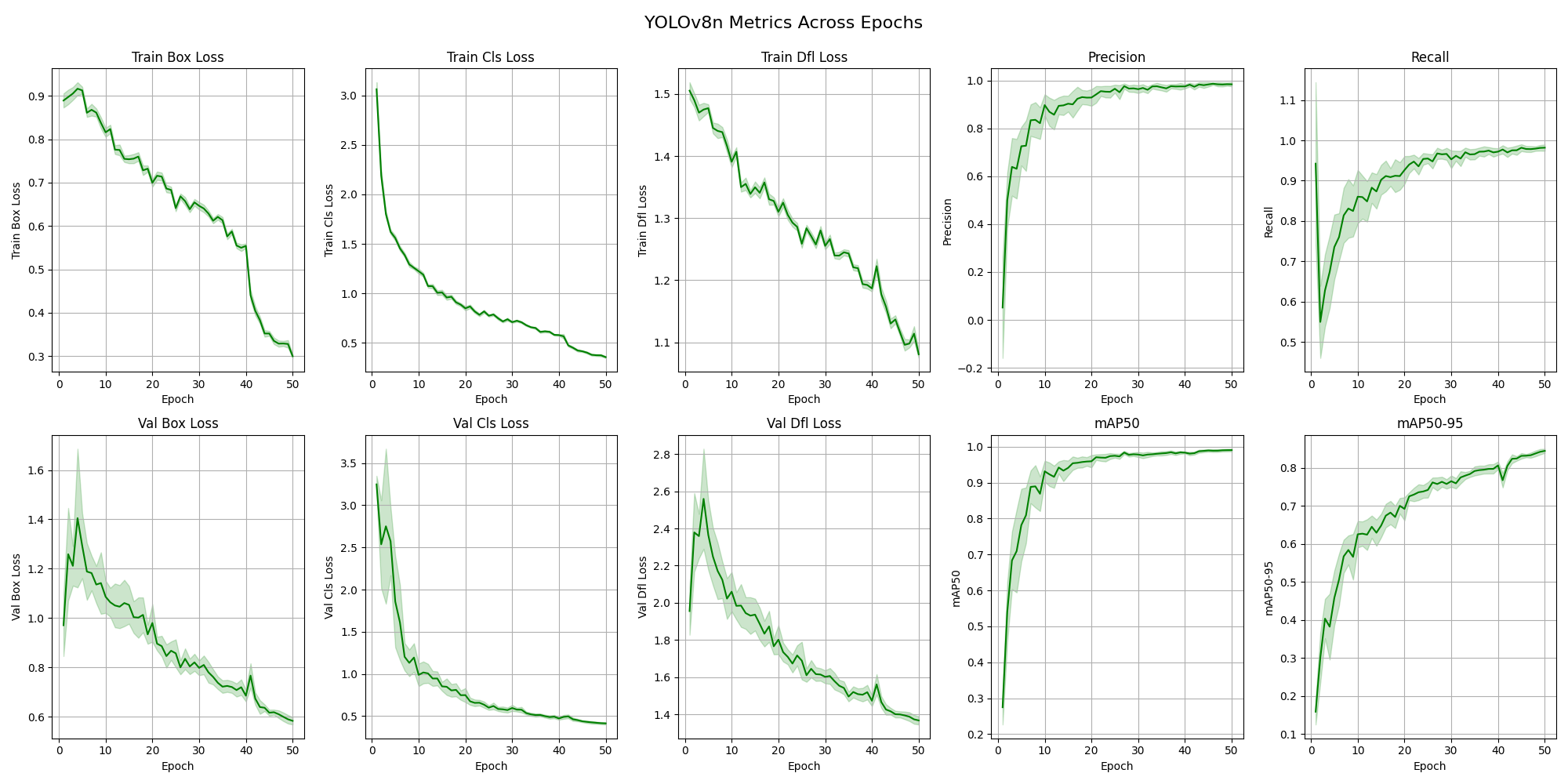

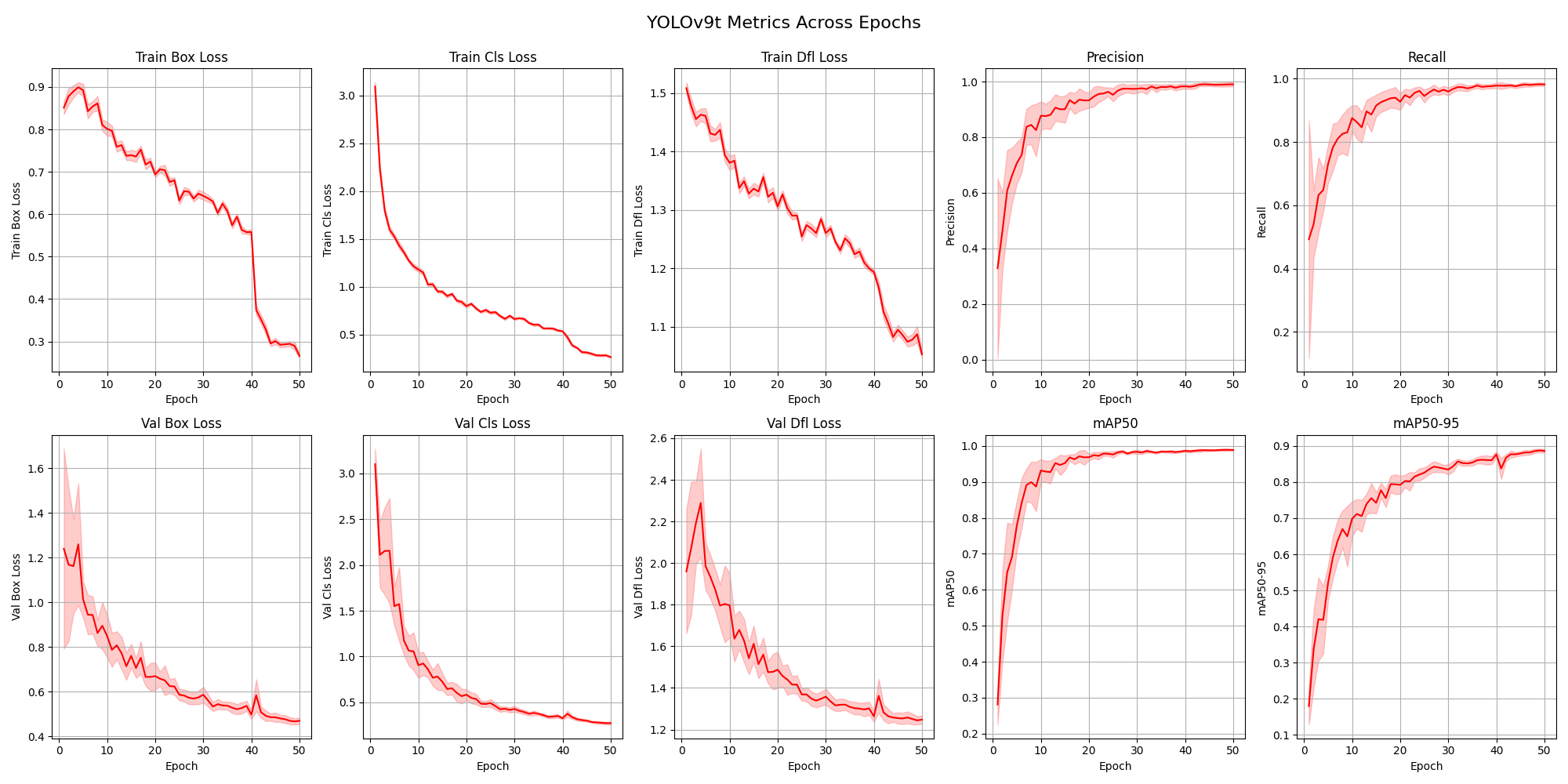

The following results are based on 20 training runs of the smallest variant of YOLO versions 5, 8, and 9. They are independent of the previous results. The basic configuration remains the same, except that the number of epochs per run was reduced to 50. This analysis provides values across multiple runs, allowing a more reliable comparison of the different YOLO versions.

Because YOLO training is deterministic when the seed and all settings are fixed, training the same model 20 times would produce the same result each time. There are various ways to introduce variability between training runs, such as slightly changing the parameters, altering the dataset, or using a different random seed. This project uses the last option. The seed used for each run is listed in the table below.

| Run / Model | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5nu | 619 | 692 | 239 | 631 | 122 | 958 | 184 | 532 | 793 | 530 | 649 | 226 | 903 | 164 | 839 | 317 | 915 | 164 | 168 | 973 |
| YOLOv8n | 670 | 097 | 507 | 880 | 197 | 140 | 239 | 623 | 464 | 022 | 678 | 483 | 866 | 730 | 982 | 357 | 212 | 148 | 584 | 758 |
| YOLOv9t | 830 | 617 | 273 | 116 | 156 | 582 | 741 | 802 | 478 | 507 | 606 | 213 | 883 | 323 | 524 | 555 | 812 | 641 | 064 | 787 |

These graphs illustrate the differences between the smallest variants of YOLOv5, YOLOv8, and YOLOv9 model versions, averaged over 20 runs.
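Averaging a metric over the runs comes down to a per-epoch mean and standard deviation. The sketch below assumes each run's metric values have already been read into a list. Ultralytics writes them per epoch to a results.csv file in each run directory; reading that file is not shown. The function name aggregate_runs is hypothetical.

```python
from statistics import mean, stdev


def aggregate_runs(runs: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-epoch mean and sample standard deviation of one metric across runs.

    runs[r][e] is the metric value of run r at epoch e; all runs must cover the same epochs.
    """
    if len({len(run) for run in runs}) != 1:
        raise ValueError("all runs must cover the same number of epochs")
    per_epoch = list(zip(*runs))  # regroup the values by epoch
    means = [mean(values) for values in per_epoch]
    stds = [stdev(values) if len(values) > 1 else 0.0 for values in per_epoch]
    return means, stds
```

A small standard deviation at a given epoch means the random seed had little influence on that metric at that point in training.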

The following graphs show the fluctuations caused by the random seed, which modifies a variety of operations during the 20 training runs of the three models. Some of the more important operations affected by the random seed are:

- Data Shuffling and Batch Sampling: During each epoch of training, the dataset is shuffled, and mini-batches are created by randomly sampling data points. This ensures that the model does not learn the order of the data.

- Weight Initialization: Weights in neural networks are typically initialized using methods like Xavier or He initialization, which involve generating random values from a specific distribution (e.g., normal or uniform).

- Data Augmentation: Techniques like random rotations, flips, and color jitters apply random transformations to the input images. This randomness is controlled to ensure that the augmented data resembles the variations the model might encounter in real-world scenarios.

- Dropout: Dropout layers randomly disable a fraction of neurons during each forward pass during training, which helps in regularizing the model.

The idea is to vary these random operations by using different seed values. This shows how much the results depend on randomness alone. By observing the fluctuations in the performance metrics, we can understand the impact of randomness on the training process and the stability of the model's performance.

- Precision: Precision is the ratio of true positive predictions to the total number of positive predictions (true positives + false positives). It measures how many of the predicted positive instances are actually correct. Higher precision means fewer false positives.

- Recall: Recall is the ratio of true positive predictions to the total number of actual positive instances (true positives + false negatives). It measures how many of the actual positive instances are correctly predicted. Higher recall means fewer false negatives.

- mAP50: Mean Average Precision at IoU 0.5 (mAP50) is the average precision computed at an intersection over union (IoU) threshold of 0.5. It evaluates the model's ability to correctly detect objects with a moderate overlap threshold. It provides a single score summarizing the precision-recall curve at IoU 0.5.

- mAP50-95: Mean Average Precision at IoU 0.5 to 0.95 (mAP50-95) is the average precision computed at multiple IoU thresholds from 0.5 to 0.95, in steps of 0.05. This metric provides a more comprehensive evaluation of the model's performance across a range of overlap thresholds, capturing both precise and less precise detections.

- Train Box Loss: Box loss during training measures the error in predicting the bounding box coordinates of objects. Lower box loss indicates that the model is more accurately predicting the locations of objects in the training images.

- Train Cls Loss: Classification loss during training measures the error in predicting the correct class labels for the detected objects. Lower classification loss means that the model is better at correctly classifying the objects it detects during training.

- Train Dfl Loss: Distribution Focal Loss (DFL) during training measures the error in the model's predicted distributions for bounding box regression. Lower DFL indicates better performance in predicting the bounding box distributions during training.

- Val Box Loss: Box loss during validation measures the error in predicting the bounding box coordinates of objects on the validation set. Lower validation box loss indicates better generalization of the model to unseen data in terms of object localization.

- Val Cls Loss: Classification loss during validation measures the error in predicting the correct class labels for the detected objects on the validation set. Lower validation classification loss means better generalization of the model to unseen data in terms of object classification.

- Val Dfl Loss: Distribution Focal Loss (DFL) during validation measures the error in the model's predicted distributions for bounding box regression on the validation set. Lower validation DFL indicates better generalization of the model to unseen data in terms of bounding box regression.
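The relationship between precision, recall, and average precision can be sketched for a single class at a single IoU threshold. This sketch uses all-point interpolation over the precision-recall curve. Ultralytics' own implementation interpolates at 101 recall points, so its values can differ slightly. mAP50 is the mean of this AP over all classes at IoU 0.5. mAP50-95 additionally averages over the ten thresholds 0.50, 0.55, ..., 0.95.

```python
# IoU thresholds averaged for mAP50-95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(is_true_positive: list[bool], n_ground_truth: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    is_true_positive holds one flag per detection, sorted by descending confidence:
    True if the detection matched a previously unmatched ground-truth box.
    """
    if n_ground_truth == 0:
        return 0.0
    tp = fp = 0
    recall, precision = [], []
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_ground_truth)
        precision.append(tp / (tp + fp))
    # Add sentinels, then make precision non-increasing from right to left (the envelope).
    mrec = [0.0, *recall, 1.0]
    mpre = [1.0, *precision, 0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the step curve: recall increments times the enveloped precision.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
```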

This is a short interpretation of the training results.

The following is a comparison and interpretation of the results above, specifically the training results of the smallest, medium-sized, and largest variants of YOLO versions 5, 8, and 9.

YOLOv5n

- Precision (B), Recall (B), mAP50 (B), mAP50-95 (B): All metrics start at zero for initial epochs, indicating no detections. Small values in later epochs show minimal improvement.

- Box Loss: Starts low but increases over epochs.

- Classification Loss: Very high values indicating challenges in learning object classes.

- DFL Loss: Also high, consistent with difficulties in regression tasks.

YOLOv5m

- Recall (B): Varies significantly, indicating fluctuations in detection capabilities across epochs.

- mAP50 (B): Starts high (0.77103) but quickly drops, indicating the model might be overfitting initially.

- mAP50-95 (B): Also starts reasonably well but drops, reflecting similar overfitting concerns.

- Box Loss: Starts low (0.00890) and increases, suggesting more fine-tuning is needed for stable performance.

- Classification Loss: Very high, particularly in later epochs, indicating difficulty in correctly classifying objects.

- DFL Loss: Relatively stable but high values indicate room for improvement in regression tasks.

YOLOv5x

- Recall (B), mAP50 (B), mAP50-95 (B): Better initial performance than the 'n' variant, but the metrics still decrease significantly.

- Box Loss: Initial value is low but increases over epochs, a similar trend to the 'm' variant.

- Classification Loss: High but more stable than in the 'm' variant.

- DFL Loss: Moderate but stable.

YOLOv8n

- Improved performance compared to v5n, but still shows challenges in initial epochs with low metric values.

- Box Loss: Initially low but increases, requiring more tuning.

- Classification Loss, DFL Loss: Lower compared to v5n but still significant.

YOLOv8m

- Better overall metrics compared to v5 variants, indicating improved performance.

- Box Loss, Classification Loss, DFL Loss: More stable and lower values compared to v5m.

YOLOv8x

- Shows better stability in recall and mAP metrics compared to v8m and v5x.

- Box Loss, Classification Loss, DFL Loss: More stable and lower, indicating better model learning.

YOLOv9t

- Shows significant improvements in early epochs compared to v8n.

- Box Loss, Classification Loss, DFL Loss: Lower values indicating better learning and model performance.

YOLOv9m

- Improved stability and performance across metrics compared to v8m.

- Box Loss: Lower and more stable, showing effective training.

- Classification Loss, DFL Loss: Lower and more stable than previous versions.

YOLOv9e

- Highest stability and performance across all metrics.

- Box Loss, Classification Loss, DFL Loss: Lowest values, showing the most effective training and performance.

Summary:

- YOLOv9e shows the best performance and stability across all variants.

- YOLOv8 models perform better than YOLOv5, with v8x being more stable.

- YOLOv5n and YOLOv8n struggle initially but show some improvements.

Generally, all models need more fine-tuning to reduce the classification and DFL losses for optimal performance. The most obvious problem with the models trained in this project is the lack of training images: approximately 100 images per class are not sufficient to train a conclusive model. Within the scope of this project, this is acceptable because the focus is on comparing the models rather than evaluating the performance of each model individually.

The average metrics of the 20 runs indicate that the differences between the models are marginal. Only YOLOv9 shows an advantage in some metrics. This is most visible in the mean average precision averaged over IoU thresholds from 50% to 95% (mAP50-95), where the YOLOv9 models reach higher values earlier in the training process. This is likely due to the improved training process.

Examining the standard deviation of each model across the 20 runs reveals a stable training process overall: each YOLO version shows very small deviations across the 20 training runs. This is desirable, as a model should consistently reach a comparable result regardless of random influences such as the seed.

- [1] Redmon, J., Divvala, S. K., Girshick, R. B., & Farhadi, A. (2015). You Only Look Once: Unified, Real-Time Object Detection. CoRR, abs/1506.02640. Available: http://arxiv.org/abs/1506.02640

- [2] Ultralytics YOLOv5 Documentation: https://docs.ultralytics.com/models/yolov5/

- [3] Ultralytics YOLOv8 Documentation: https://docs.ultralytics.com/models/yolov8/

- [4] Ultralytics YOLOv9 Documentation: https://docs.ultralytics.com/models/yolov9/

- [5] Inference Speeds: https://www.stereolabs.com/blog/performance-of-yolo-v5-v7-and-v8

- [6] Comparison of Versions: https://viso.ai/computer-vision/yolov9/

- [7] YOLOv5 vs. YOLOv8: https://www.augmentedstartups.com/blog/yolov8-vs-yolov5-choosing-the-best-object-detection-model

- [8] YOLOv8 vs. YOLOv9: https://www.augmentedstartups.com/blog/is-yolov9-better-than-yolov8

- [9] YOLOv8 vs. YOLOv9 Performance: https://encord.com/blog/performanceyolov9-vs-yolov8-custom-dataset/

- [10] YOLOv9 Features: https://learnopencv.com/yolov9-advancing-the-yolo-legacy/