Sanjoy Chowdhury¹*, Sayan Nag²*, Subhrajyoti Dasgupta³*, Jun Chen⁴, Mohamed Elhoseiny⁴, Ruohan Gao¹, Dinesh Manocha¹

¹ University of Maryland, ² University of Toronto, ³ Mila and Université de Montréal, ⁴ KAUST

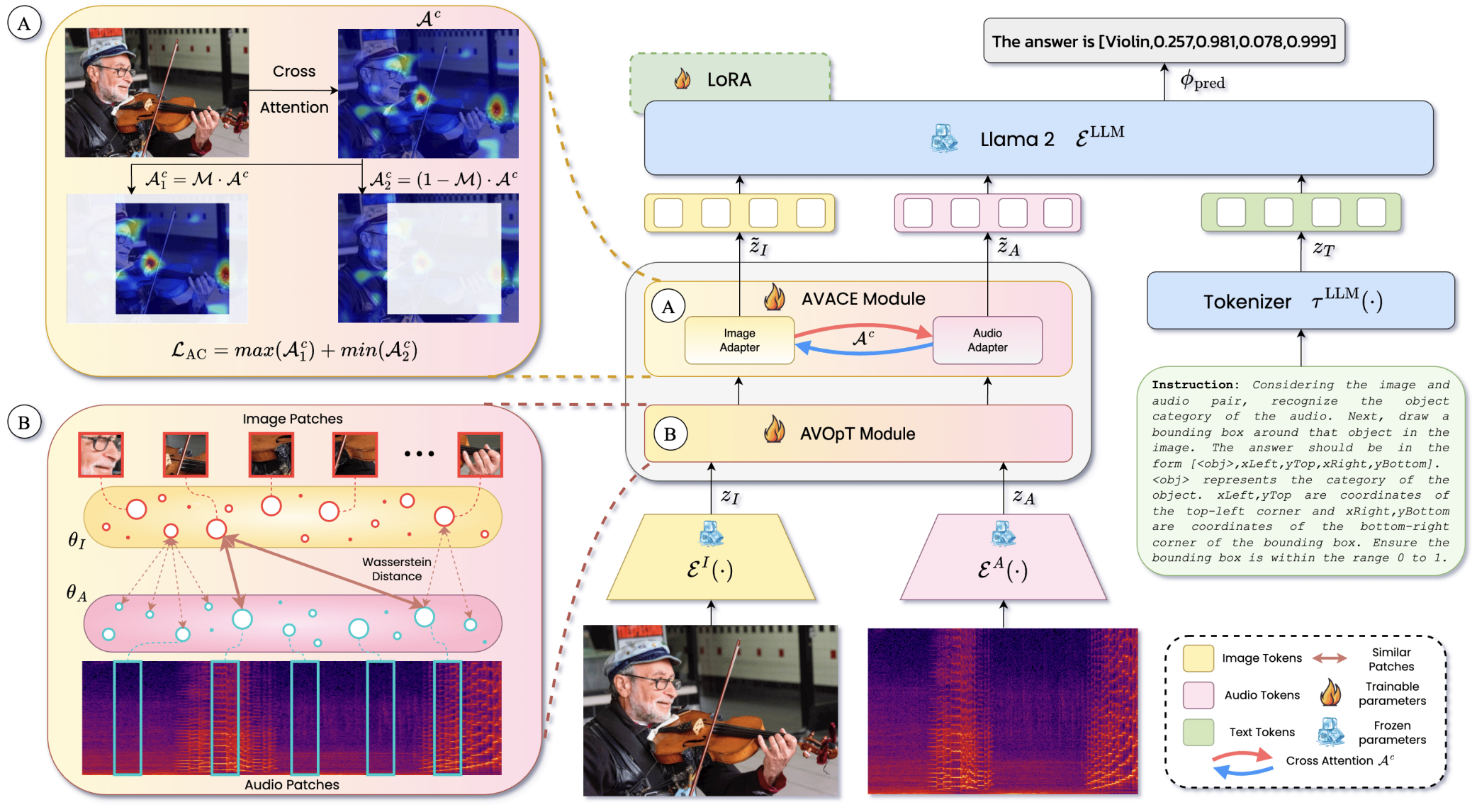

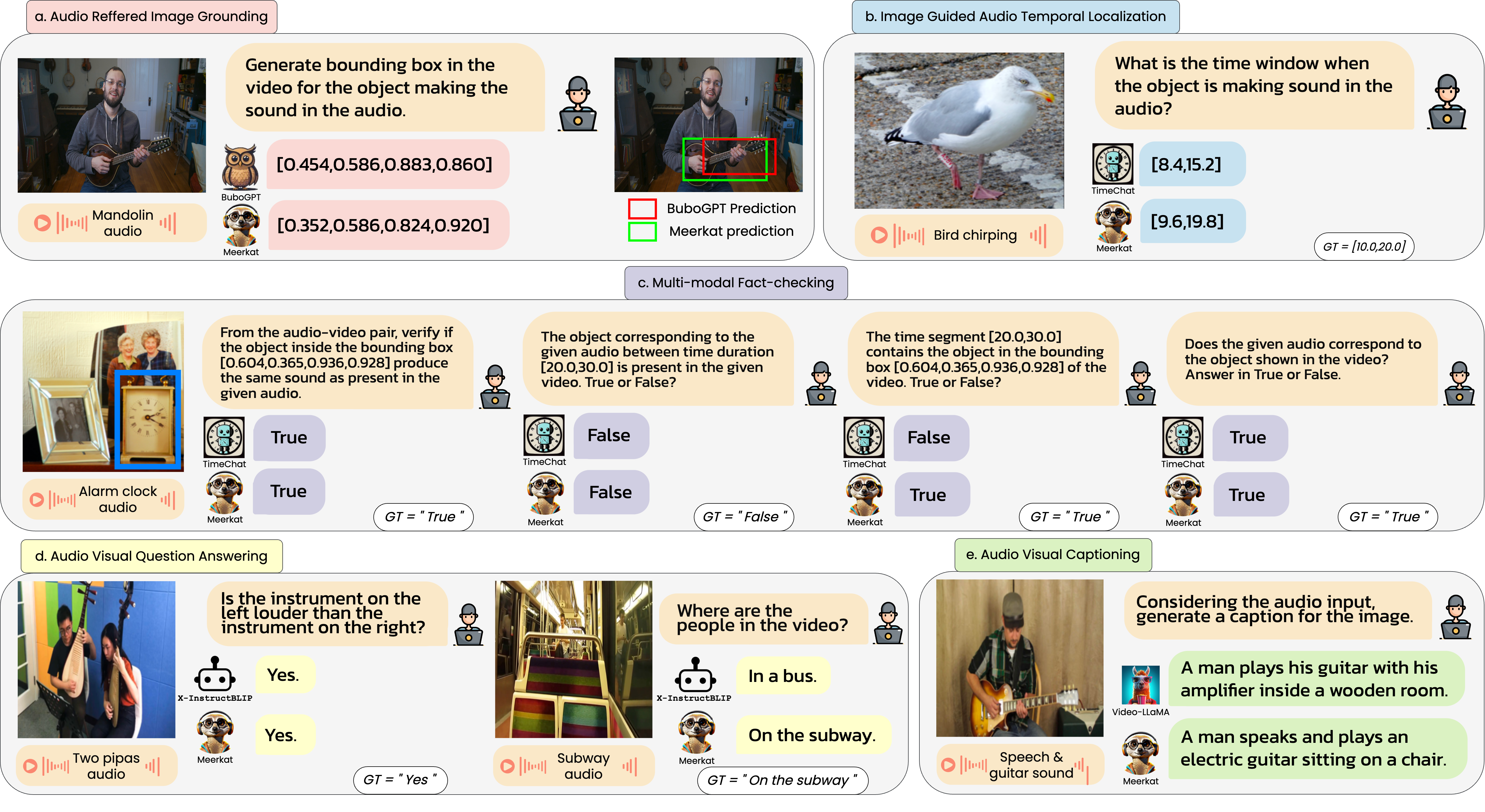

Meerkat is an audio-visual LLM equipped with a fine-grained understanding of images 🖼️ and audio 🎵, both spatially 🪐 and temporally 🕒.

📰 Paper 🗃️ Dataset 🌐 Project Page 🧱 Code

To install Meerkat, follow these steps:

```shell
# Clone the repository
git clone https://github.com/schowdhury671/meerkat.git

# Change to the meerkat directory
cd meerkat

# Install required packages
pip install -r requirements.txt

# Install ffmpeg
yum install ffmpeg -y

# Install apex
git clone https://github.com/NVIDIA/apex.git
cd apex
python setup.py install
cd ..
```
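After installation, a quick sanity check can confirm that the tools installed above are visible to the shell and to Python. This is a minimal sketch that is not part of the Meerkat repository: the helper name `check_environment` is hypothetical, and it only checks that an `ffmpeg` executable is on `PATH` and that the `apex` module can be located, not that either was built correctly.

```python
import importlib.util
import shutil


def check_environment(executables=("ffmpeg",), modules=("apex",)):
    """Hypothetical helper: report which required tools are available.

    Returns a dict mapping each executable/module name to True if it was
    found (executables on PATH, modules importable), False otherwise.
    """
    report = {}
    for exe in executables:
        # shutil.which returns None when the executable is not on PATH
        report[exe] = shutil.which(exe) is not None
    for mod in modules:
        # find_spec returns None when a top-level module cannot be found
        report[mod] = importlib.util.find_spec(mod) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

If either entry prints `MISSING`, revisit the corresponding installation step before moving on to data preparation.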

Downloading the dataset:

- Please download the dataset JSONs from here: Sharepoint Link


Dataset preprocessing:

- Extract frames and audio from the videos.

- Place the JSON and data files according to the following directory structure:

```
data
|--<dataset>
   |--<dataset>_train.json
   |--<dataset>_test.json
   |--frames
   |  |--<image>.jpg
   |  |-- ...
   |--audios
      |--<audio>.wav
      |-- ...
```

- Transform the supervised data into a dataset:

```shell
python preprocess_data_supervised.py
```
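The README does not say which tool or settings are used to extract frames and audio. The following is a minimal sketch under stated assumptions, not the repository's actual preprocessing: it builds `ffmpeg` command lines that sample frames at a hypothetical 1 frame per second and write mono 16 kHz WAV audio named after the video file, and it checks that a `data/<dataset>` folder matches the layout described above (with `frames` and `audios` inside the dataset folder). The frame rate, sample rate, file naming, and the helper names `ffmpeg_commands`, `extract`, and `missing_layout_entries` are all assumptions.

```python
import subprocess
from pathlib import Path


def ffmpeg_commands(video, dataset_dir, fps=1, sample_rate=16000):
    """Build hypothetical ffmpeg argv lists for one video.

    Frames go to <dataset_dir>/frames/<stem>_%04d.jpg and audio to
    <dataset_dir>/audios/<stem>.wav (mono, `sample_rate` Hz).
    """
    video = Path(video)
    dataset_dir = Path(dataset_dir)
    stem = video.stem
    frames_cmd = [
        "ffmpeg", "-y", "-i", str(video),
        "-vf", f"fps={fps}",  # keep `fps` frames per second of video
        str(dataset_dir / "frames" / f"{stem}_%04d.jpg"),
    ]
    audio_cmd = [
        "ffmpeg", "-y", "-i", str(video),
        "-vn",  # drop the video stream
        "-ac", "1", "-ar", str(sample_rate),  # downmix to mono and resample
        str(dataset_dir / "audios" / f"{stem}.wav"),
    ]
    return frames_cmd, audio_cmd


def extract(video, dataset_dir, **kwargs):
    """Run both ffmpeg commands; requires ffmpeg on PATH."""
    for sub in ("frames", "audios"):
        (Path(dataset_dir) / sub).mkdir(parents=True, exist_ok=True)
    for cmd in ffmpeg_commands(video, dataset_dir, **kwargs):
        subprocess.run(cmd, check=True)  # raise if ffmpeg fails


def missing_layout_entries(data_root, dataset):
    """Return the expected paths under data/<dataset> that do not exist."""
    base = Path(data_root) / dataset
    expected = [
        base / f"{dataset}_train.json",
        base / f"{dataset}_test.json",
        base / "frames",
        base / "audios",
    ]
    return [p for p in expected if not p.exists()]
```

For example, `extract("clip01.mp4", "data/mydataset")` followed by `missing_layout_entries("data", "mydataset")` would report any files still missing before running `preprocess_data_supervised.py`; `mydataset` is only a placeholder name.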

Training:

- Run the training script (training parameters can be set inside it):

```shell
./train.sh
```


Inference:

- Run the inference script (custom inputs can be specified inside it):

```shell
./inference.sh
```


We would like to express our gratitude to the Macaw-LLM and GOT repositories for their valuable contributions to Meerkat.

```bibtex
@inproceedings{chowdhury2024meerkat,
  title={Meerkat: Audio-Visual Large Language Model for Grounding in Space and Time},
  author={Chowdhury, Sanjoy and Nag, Sayan and Dasgupta, Subhrajyoti and Chen, Jun and Elhoseiny, Mohamed and Gao, Ruohan and Manocha, Dinesh},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2024}
}
```