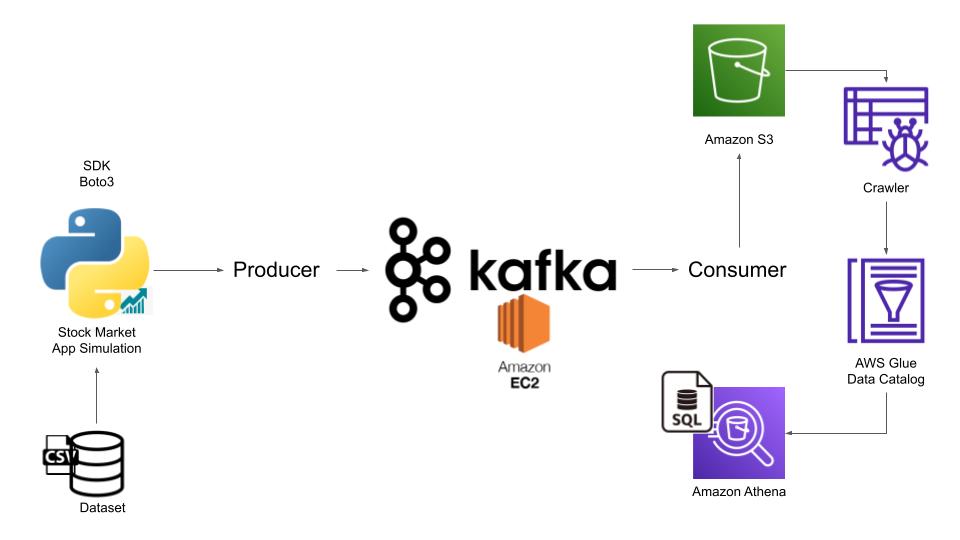

This project simulates a stream of stock market data, processes it in real time with Kafka, and analyzes it with AWS Glue and Athena. Below, you'll find an overview of the architecture, setup instructions, and usage details.

- System Architecture

- Deployment Steps

- Implementation Steps

- Technology Stack

- Best Practices and Tips

- Resources

- Launch EC2 Instance:

  - Select an Amazon Linux 2023 AMI.

  - Configure instance details (e.g., a security group with port 9092 open).

- Install Java Runtime:

      sudo yum update -y
      sudo yum install java-11-amazon-corretto -y

- Install and Configure Kafka:

  - Download Kafka:

        wget https://downloads.apache.org/kafka/3.5.1/kafka_2.12-3.5.1.tgz

  - Extract and set up:

        tar -xvf kafka_2.12-3.5.1.tgz
        cd kafka_2.12-3.5.1

- Start Kafka Services:

  - Start Zookeeper:

        bin/zookeeper-server-start.sh config/zookeeper.properties

  - Start Kafka Broker (in a second terminal session, since Zookeeper runs in the foreground):

        bin/kafka-server-start.sh config/server.properties

- Create a Kafka Topic:

      bin/kafka-topics.sh --create --topic stock-market-data --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1
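For scripted setups, the topic command can also be driven from Python. The following is a minimal sketch, assuming it runs from the extracted Kafka directory on the broker host. The helper names `build_topic_command` and `run_topic_command` are hypothetical and not part of this repository.

```python
import subprocess

KAFKA_TOPICS = "bin/kafka-topics.sh"  # relative to the extracted Kafka directory


def build_topic_command(action, topic, bootstrap="localhost:9092",
                        partitions=1, replication=1):
    """Return the kafka-topics.sh argument list for 'create' or 'describe'."""
    if action not in ("create", "describe"):
        raise ValueError(f"unsupported action: {action}")
    cmd = [KAFKA_TOPICS, f"--{action}", "--topic", topic,
           "--bootstrap-server", bootstrap]
    if action == "create":
        # Partition and replication settings only apply when creating a topic.
        cmd += ["--partitions", str(partitions),
                "--replication-factor", str(replication)]
    return cmd


def run_topic_command(action, topic, **kwargs):
    """Run the command and return its stdout; raises if the CLI exits non-zero."""
    result = subprocess.run(build_topic_command(action, topic, **kwargs),
                            check=True, capture_output=True, text=True)
    return result.stdout
```

Calling `run_topic_command("create", "stock-market-data")` and then `run_topic_command("describe", "stock-market-data")` would create the topic and print its partition layout, which is one way to verify that it exists.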

- The producer script streams simulated stock market data to Kafka.

- The consumer script processes the data in real time and stores it in an S3 bucket.

- Use the producer Python script to read from a sample CSV dataset containing stock prices and trades.

- Configure the producer to publish records to the Kafka topic `stock-market-data` in real time.
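The producer steps above could look roughly like the sketch below. It assumes the `kafka-python` package and a CSV file with a header row; the function names, the one-second delay, and the column names used in any example data are illustrative assumptions, not the repository's actual notebook code.

```python
import csv
import io
import json


def encode_rows(csv_text):
    """Yield one JSON-encoded bytes message per CSV data row."""
    for row in csv.DictReader(io.StringIO(csv_text)):
        yield json.dumps(row).encode("utf-8")


def stream_csv(path, topic="stock-market-data", bootstrap="localhost:9092",
               delay_seconds=1.0):
    """Publish each CSV row to Kafka, pausing between rows to mimic a live feed."""
    import time
    from kafka import KafkaProducer  # assumption: kafka-python is installed

    producer = KafkaProducer(bootstrap_servers=bootstrap)
    with open(path, newline="") as f:
        for message in encode_rows(f.read()):
            producer.send(topic, value=message)
            time.sleep(delay_seconds)
    producer.flush()  # block until buffered records are delivered
```

Keeping the encoding step separate from the Kafka call makes it possible to check the message format without a running broker.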

- Set up an EC2 instance and install Kafka.

- Configure the `server.properties` file to set up the Kafka broker.

- Create and verify the Kafka topic `stock-market-data`.

- Deploy the consumer Python script to read messages from the Kafka topic.

- Store the consumed data in an Amazon S3 bucket, organized into partitions (e.g., `/year/month/day/`).

- Use separate folders for `raw-data/` and `processed-data/`.
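As a rough illustration of the consumer steps above, the sketch below builds a date-partitioned S3 key and writes each consumed message as its own JSON object. It assumes `kafka-python` and `boto3` are installed and AWS credentials are configured; the function names, the `record_<partition>_<offset>.json` naming scheme, and the bucket passed in are hypothetical.

```python
import json
from datetime import datetime, timezone


def build_s3_key(received_at, partition, offset, prefix="raw-data"):
    """Return a key such as raw-data/2024/01/05/record_0_42.json."""
    date_path = received_at.strftime("%Y/%m/%d")
    return f"{prefix}/{date_path}/record_{partition}_{offset}.json"


def consume_to_s3(bucket, topic="stock-market-data", bootstrap="localhost:9092"):
    """Read messages from Kafka and write each one to S3 as a JSON object."""
    import boto3  # assumption: boto3 is installed and credentials are configured
    from kafka import KafkaConsumer  # assumption: kafka-python is installed

    s3 = boto3.client("s3")
    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=bootstrap,
        value_deserializer=lambda raw: json.loads(raw.decode("utf-8")),
    )
    for message in consumer:
        key = build_s3_key(datetime.now(timezone.utc),
                           message.partition, message.offset)
        body = json.dumps(message.value).encode("utf-8")
        s3.put_object(Bucket=bucket, Key=key, Body=body)
```

Writing one object per message keeps the sketch short; batching several records per object would be a natural refinement for larger volumes.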

- Create an AWS Glue crawler to scan the S3 bucket.

- Generate a schema and populate the AWS Glue Data Catalog.

- Schedule periodic crawls to keep the schema updated.
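The crawler steps above can also be scripted with `boto3`. The following is a minimal sketch under stated assumptions: the crawler name, IAM role ARN, database name, and S3 path are placeholders supplied by the caller, and the hourly schedule is only an example.

```python
def crawler_config(name, role_arn, database, s3_path,
                   schedule="cron(0 * * * ? *)"):
    """Build the keyword arguments for the Glue create_crawler call."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        "Schedule": schedule,  # default: top of every hour, AWS cron syntax
    }


def create_and_start_crawler(name, role_arn, database, s3_path):
    """Create a scheduled crawler and trigger an immediate first run."""
    import boto3  # assumption: boto3 is installed and credentials are configured

    glue = boto3.client("glue")
    glue.create_crawler(**crawler_config(name, role_arn, database, s3_path))
    glue.start_crawler(Name=name)
```

The role passed in must allow Glue to read the target S3 path; that setup is outside the scope of this sketch.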

- Connect Amazon Athena to the AWS Glue Data Catalog.

- Write SQL queries to analyze the data.
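To make the Athena step concrete, here is a hedged sketch that builds an example query and submits it with `boto3`. The table name, the `symbol` and `price` columns, the database, and the results location are all assumptions; the real names depend on the schema the Glue crawler infers from the data.

```python
def average_price_query(table, symbol_column="symbol", price_column="price"):
    """Return SQL computing the average price per symbol, highest first."""
    return (
        f'SELECT "{symbol_column}", '
        f'AVG(CAST("{price_column}" AS DOUBLE)) AS avg_price '
        f'FROM "{table}" GROUP BY "{symbol_column}" ORDER BY avg_price DESC'
    )


def run_query(sql, database, output_location):
    """Submit the query to Athena and return its execution ID."""
    import boto3  # assumption: boto3 is installed and credentials are configured

    athena = boto3.client("athena")
    response = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]
```

Athena runs queries asynchronously, so the returned ID would then be used to poll for completion and fetch results.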

- Kafka: Data streaming.

- Python: Producer and consumer implementation.

- AWS EC2: Kafka hosting.

- AWS S3: Data storage.

- AWS Glue: Data catalog creation.

- Amazon Athena: Interactive SQL querying of the data in S3.

- Kafka Configuration: Use appropriate partitioning and replication strategies for scalability.

- AWS Glue: Schedule crawlers for periodic updates.

- S3 Organization: Use a structured folder hierarchy for easier data management.

- Monitoring: Set up monitoring tools for Kafka and AWS resources to track performance and troubleshoot issues.
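One common way to apply the partitioning tip above is to send each record with a message key, since Kafka's default partitioner routes records with the same key to the same partition. The sketch below assumes each record is a dict with a `symbol` field and that `producer` is a `kafka-python` `KafkaProducer`; both are assumptions about data and tooling, and the helper names are hypothetical.

```python
import json


def partition_key(record, field="symbol"):
    """Return the record's ticker, normalized, as bytes for use as the message key."""
    return str(record[field]).strip().upper().encode("utf-8")


def send_keyed(producer, topic, record):
    """Send a record keyed by ticker so one symbol's records stay in order."""
    return producer.send(
        topic,
        key=partition_key(record),
        value=json.dumps(record).encode("utf-8"),
    )
```

With more than one partition, keying by symbol preserves per-symbol ordering while still spreading different symbols across partitions.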

- Architecture Diagram: Included in this repository.

- Dataset: Simulated stock market data in CSV format.

- Python Notebooks:

  - Producer notebook for data simulation.

  - Consumer notebook for data processing.

- Kafka Configuration:

  - Step-by-step instructions for setting up Kafka.

Feel free to fork this repository and contribute! 😊