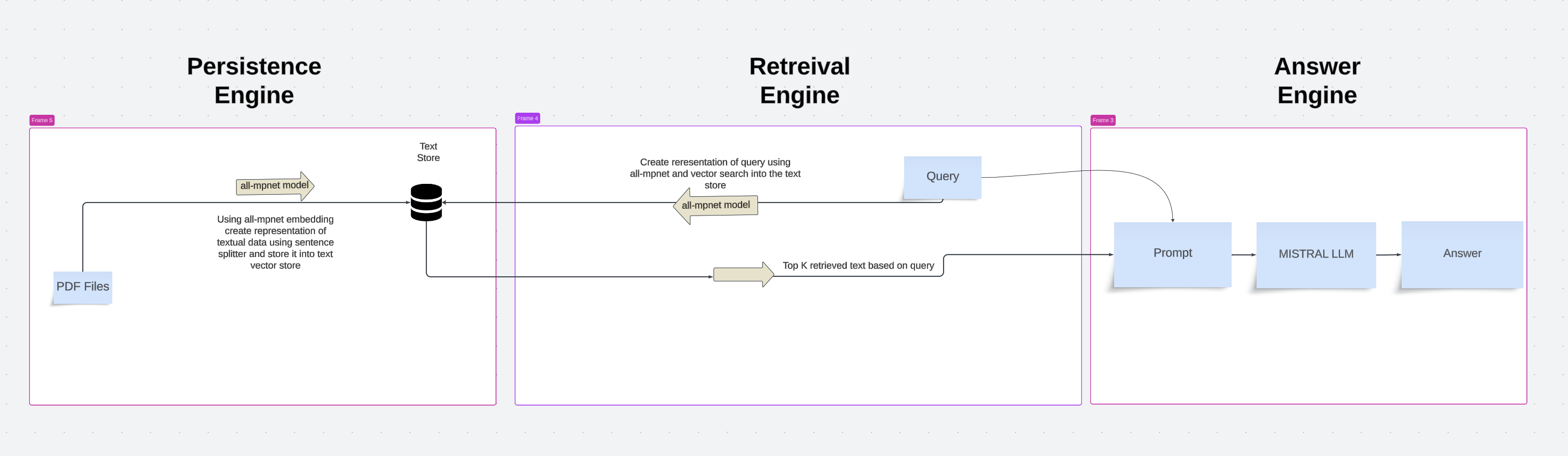

This project is designed to extract and process data from PDF files. The processed data is stored in vector databases, allowing for efficient retrieval and question-answering using large language models (LLMs).

The architecture of the project consists of three main engines (data ingestion, retrieval, and answer generation), which together form the following pipeline:

- PDF Files: The process starts with the input PDF files.

- all-mpnet Model: The text extracted from the PDFs is embedded with the all-mpnet model, producing a vector representation (embedding) of the text.

- Text Store: The text embeddings are stored in a dedicated vector database for text.

- Query: The user provides a query.

- all-mpnet Model: The query is embedded using the all-mpnet model.

- Vector Search (Text): A search is conducted in the text store to retrieve the top K relevant text embeddings.

- Prompt Formation: The retrieved text passages are combined to form a prompt.

- Mistral LLM: The prompt is passed to the Mistral LLM, which generates an answer.

- Answer: The final answer is returned to the user.
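The Vector Search (Text) step amounts to ranking the stored embeddings by their similarity to the query embedding. The sketch below shows that ranking in plain Python; it is not the project's code. It assumes cosine similarity as the metric and embeddings stored as plain lists of floats, and the toy 3-dimensional vectors stand in for real all-mpnet embeddings. `cosine_similarity`, `top_k`, and the in-memory `Store` are hypothetical names; a vector database performs the same ranking with an index instead of a linear scan.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical in-memory "text store": each entry pairs a text chunk with its embedding.
Store = List[Tuple[str, List[float]]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float], store: Store, k: int = 3) -> List[Tuple[str, float]]:
    """Return the k (text, score) pairs most similar to query_vec, best match first."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in store]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy 3-d vectors standing in for real all-mpnet embeddings.
    store: Store = [
        ("Invoices are due in 30 days.", [0.9, 0.1, 0.0]),
        ("The office is closed on Sundays.", [0.0, 0.2, 0.9]),
        ("Late payments incur a 2% fee.", [0.8, 0.3, 0.1]),
    ]
    query = [1.0, 0.2, 0.0]
    for text, score in top_k(query, store, k=2):
        print(f"{score:.3f}  {text}")
```

The retrieved texts, not their vectors, are what the Prompt Formation step goes on to use.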

The project is organized as follows:

```
├── app.py
├── data
│   ├── index
│   ├── pdf
│   ├── readme_images
├── src
│   ├── config
│   │   ├── config.py
│   ├── llama_handler
│   │   ├── llama_handler.py
│   ├── prompts
│   │   ├── prompts.py
├── static
│   ├── styles.css
├── templates
│   ├── index.html
├── requirements.txt
├── README.md
```

The LlamaHandler class handles the embedding and retrieval of data, as well as generating answers using LLMs. It performs the following tasks:

- Embeds text using pre-trained models.

- Stores embeddings in vector databases.

- Retrieves relevant data based on a query.

- Generates context for the answer engine.

- Uses the Mistral LLM to generate answers.
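The context-generation and answering steps can be sketched as follows, assuming Mistral is served by the local Ollama instance set up below and reached through Ollama's REST API (`POST /api/generate` on its default port, 11434). The prompt wording, the `max_chars` budget, and the helper names `build_prompt`, `build_ollama_request`, and `ask_mistral` are illustrative assumptions; the project's `LlamaHandler` may format its prompts and call the model differently.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(question: str, chunks: List[str], max_chars: int = 4000) -> str:
    """Number the retrieved chunks and wrap them in a question-answering prompt."""
    parts: List[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        part = f"[{i}] {chunk.strip()}"
        # Hypothetical budget: keep the joined context at most max_chars characters.
        if used + len(part) > max_chars:
            break
        parts.append(part)
        used += len(part) + 1  # +1 for the newline separator
    context = "\n".join(parts)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def build_ollama_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_mistral(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_ollama_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    # With "stream": False, Ollama replies with one JSON object; the text is in "response".
    return body["response"]
```

With Ollama running, `ask_mistral(build_prompt(question, chunks))` returns the model's answer as a string. Setting `"stream": False` trades incremental output for a simpler single-response parse.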

To install the necessary dependencies, run:

```bash
pip install -r requirements.txt
```

Download and install Ollama from the [Ollama website](https://ollama.com).

After installing Ollama, run the following command to download (on first use) and start the Mistral model:

```bash
ollama run mistral
```

To start the Flask application, run:

```bash
python app.py
```

Navigate to http://localhost:5000 in your web browser to access the application.

Add your PDF files to the `./data/pdf` directory.

To process the PDFs and persist the data, send a POST request to the /persist endpoint:

curl -X POST http://localhost:5000/persistTo ask a question and receive an answer, send a POST request to the /answer endpoint with the question in the request body

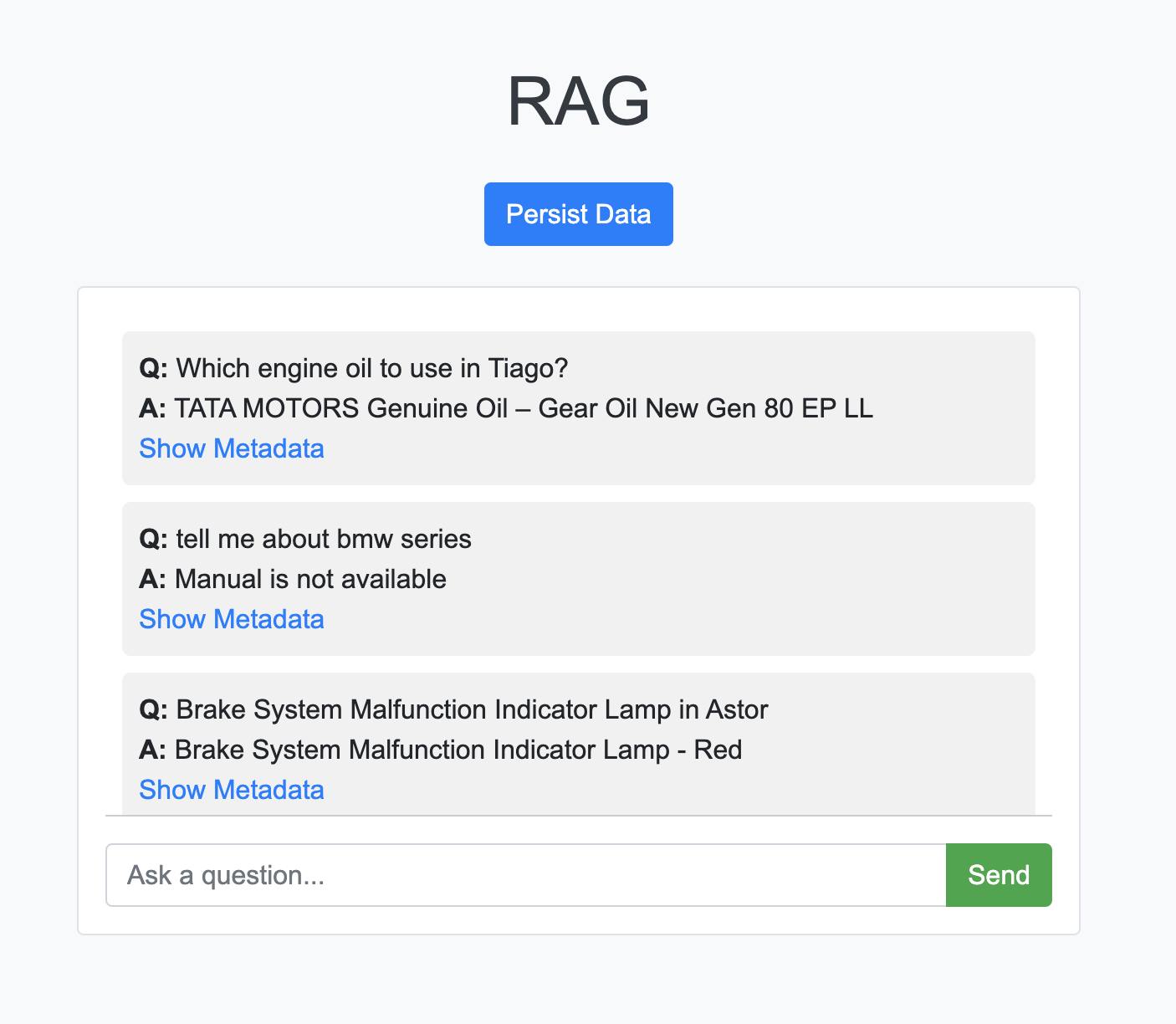

curl -X POST -H "Content-Type: application/json" -d '{"question": "Your question here"}' http://localhost:5000/answerThis how the interactive UI looks
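The `/persist` and `/answer` endpoints can also be called from Python. This is a minimal standard-library sketch that mirrors the `curl` commands above; the helper names and timeouts are hypothetical, and because the response format of the two endpoints isn't documented here, it returns the raw response body instead of parsing a specific field.

```python
import json
import urllib.request
from typing import Optional

# Assumption: the Flask app is running locally on port 5000, as in the steps above.
BASE_URL = "http://localhost:5000"


def make_request(path: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build a POST request to the app; attach a JSON body only when a payload is given."""
    data = None
    headers = {}
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(BASE_URL + path, data=data, headers=headers, method="POST")


def persist(timeout: float = 600.0) -> str:
    """Equivalent of `curl -X POST .../persist`: process the PDFs in ./data/pdf."""
    # Arbitrary generous timeout, since embedding many PDFs can take a while.
    with urllib.request.urlopen(make_request("/persist"), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def answer(question: str, timeout: float = 300.0) -> str:
    """Equivalent of the /answer curl command; returns the raw response body."""
    req = make_request("/answer", {"question": question})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

For example, call `persist()` once after adding PDFs, then `answer("Your question here")` for each question.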