This is a PyTorch implementation of the pipeline presented in the paper A Systematic Comparison of Phonetic Aware Techniques for Speech Enhancement, published at Interspeech 2022.

Project page

A Systematic Comparison of Phonetic Aware Techniques for Speech Enhancement

Or Tal, Moshe Mandel, Felix Kreuk, Yossi Adi

23rd INTERSPEECH conference

Speech enhancement has seen great improvement in recent years using end-to-end neural networks. However, most models are agnostic to the spoken phonetic content. Recently, several studies suggested phonetic-aware speech enhancement, mostly using perceptual supervision. Yet, injecting phonetic features during model optimization can take additional forms (e.g., model conditioning).

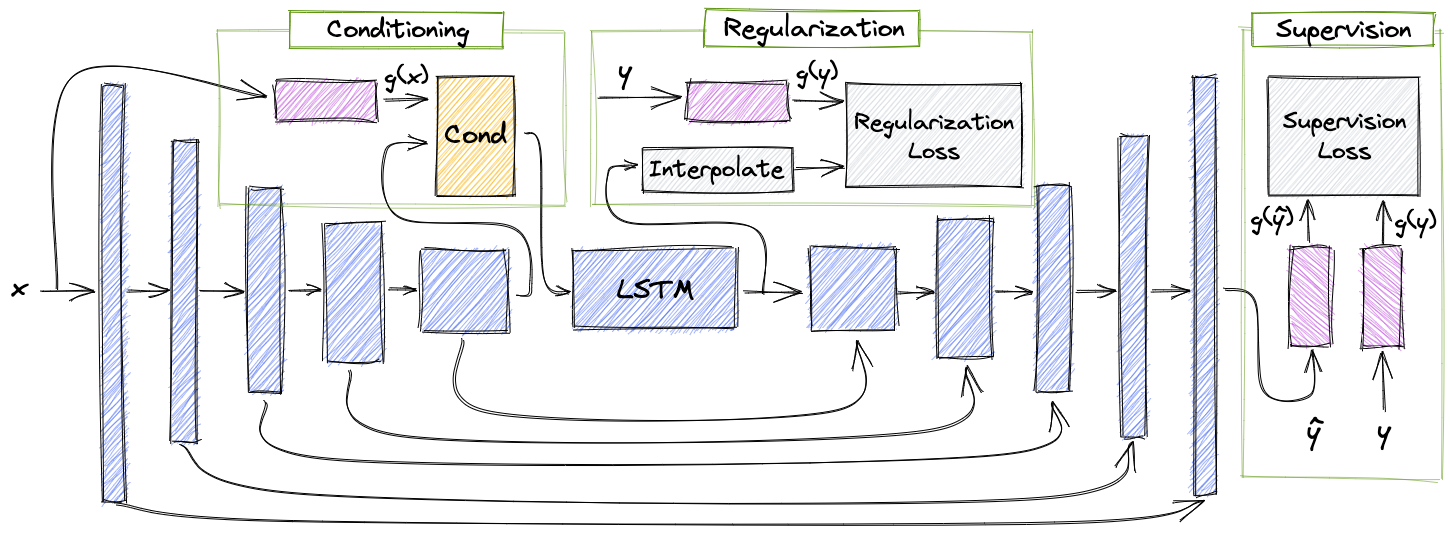

In this paper, we conduct a systematic comparison between different methods of incorporating phonetic information in a speech enhancement model. By conducting a series of controlled experiments, we observe the influence of different phonetic content models as well as various feature-injection techniques on enhancement performance, considering both causal and non-causal models. Specifically, we evaluate three settings for injecting phonetic information, namely: i) feature conditioning; ii) perceptual supervision; and iii) regularization.

Phonetic features are obtained from an intermediate layer of either a supervised pre-trained Automatic Speech Recognition (ASR) model or a pre-trained Self-Supervised Learning (SSL) model.
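Of the three settings, perceptual supervision is the easiest to show in isolation: the enhanced and clean signals are both passed through a frozen phonetic feature extractor, and the distance between the two feature sequences is added to the reconstruction loss. The sketch below is framework-free and simplified. It uses a plain L1 waveform loss in place of whatever reconstruction loss this repository uses, and `phonetic_model`, `toy_phonetic_model` and `perceptual_supervision_loss` are hypothetical names, not this codebase's API. In real training the same computation would run on PyTorch tensors so that gradients flow through the frozen extractor into the enhancement model.

```python
from typing import Callable, List, Sequence

Signal = List[float]
Features = List[float]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute error between two equal-length sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def perceptual_supervision_loss(
    enhanced: Signal,
    clean: Signal,
    phonetic_model: Callable[[Signal], Features],
    weight: float = 1.0,
) -> float:
    """Waveform L1 loss plus a weighted L1 distance in phonetic-feature space.

    `phonetic_model` is a hypothetical stand-in for a frozen intermediate
    layer of a pre-trained ASR or SSL model.
    """
    reconstruction = l1(enhanced, clean)
    phonetic = l1(phonetic_model(enhanced), phonetic_model(clean))
    return reconstruction + weight * phonetic


def toy_phonetic_model(x: Signal, frame: int = 2) -> Features:
    """Toy feature extractor: mean absolute amplitude per non-overlapping frame."""
    return [
        sum(abs(v) for v in x[i:i + frame]) / frame
        for i in range(0, len(x) - frame + 1, frame)
    ]


if __name__ == "__main__":
    clean = [0.0, 1.0, 0.0, -1.0]
    enhanced = [0.0, 0.5, 0.0, -0.5]
    # 0.25 (waveform L1) + 1.0 * 0.25 (feature L1)
    print(perceptual_supervision_loss(enhanced, clean, toy_phonetic_model))  # 0.5
```

Increasing `weight` shifts the optimization toward matching phonetic content rather than raw samples.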

We further observe the effect of choosing different embedding layers on performance, considering both manual and learned configurations.
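The abstract does not specify how a learned layer configuration is parameterized. A common choice, assumed here rather than taken from this repository, is a weighted sum of per-layer features with softmax-normalized learnable weights, while a manual configuration simply picks one fixed layer. The helper names below are hypothetical, and plain lists stand in for per-frame feature tensors.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scalars."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_layer(layer_features: Sequence[Vector], index: int) -> Vector:
    """Manual configuration: use a single, fixed intermediate layer."""
    return list(layer_features[index])


def combine_layers(layer_features: Sequence[Vector], logits: Sequence[float]) -> Vector:
    """Learned configuration (assumed): softmax-weighted sum over layers.

    In training, `logits` would be learnable parameters updated by gradient
    descent; here they are plain floats.
    """
    if len(layer_features) != len(logits):
        raise ValueError("expected one logit per layer")
    weights = softmax(logits)
    dim = len(layer_features[0])
    return [
        sum(w * layer[d] for w, layer in zip(weights, layer_features))
        for d in range(dim)
    ]


if __name__ == "__main__":
    layers = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(select_layer(layers, 1))  # [3.0, 4.0]
    # Equal logits give equal weights, i.e. the mean of the layers.
    print(combine_layers(layers, [0.0, 0.0, 0.0]))
```

A strongly peaked set of logits makes the learned combination behave like a manual single-layer choice, which is one way to compare the two configurations on equal footing.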

Results suggest that using an SSL model to extract phonetic features outperforms using an ASR model in most cases. Interestingly, the conditioning setting performs best among the evaluated configurations.

Paper.

If you find this implementation useful, please consider citing our work:

@article{tal2022systematic,
  title={A Systematic Comparison of Phonetic Aware Techniques for Speech Enhancement},
  author={Tal, Or and Mandel, Moshe and Kreuk, Felix and Adi, Yossi},
  journal={arXiv preprint arXiv:2206.11000},
  year={2022}
}

git clone https://github.com/slp-rl/SC-PhASE.git
cd SC-PhASE
pip install -r requirements.txt

Note: the torch installation may depend on your CUDA version; see Install torch.

- Download and unzip all parts of the Valentini dataset

- Down-sample each directory using:

bash data_preprocessing_scripts/general/audio_resample_using_sox.sh <path to data dir> <path to target dir>

- Generate JSON files:

python data_preprocessing_scripts/speech_enhancement/valentini_egs_script.py --project_dir <full path to current project root> --dataset_base_dir <full path to the downsampled audio root, containing all downsampled dirs> --spk <num speakers in {28,56}, default=28>
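To make the down-sampling step concrete, here is a Python sketch that builds one `sox` command per `.wav` file in a directory. It is an approximation, not a copy of `audio_resample_using_sox.sh`: the 16 kHz target rate is an assumption (the README does not state the rate), `build_sox_commands` and `downsample_dir` are hypothetical names, and sox must be installed for `dry_run=False` to do anything.

```python
import shlex
import subprocess
from pathlib import Path
from typing import List


def build_sox_commands(src_dir: Path, dst_dir: Path, rate: int = 16000) -> List[List[str]]:
    """One `sox <in> -r <rate> <out>` command per .wav file in src_dir (non-recursive)."""
    return [
        ["sox", str(wav), "-r", str(rate), str(dst_dir / wav.name)]
        for wav in sorted(src_dir.glob("*.wav"))
    ]


def downsample_dir(src_dir: Path, dst_dir: Path, rate: int = 16000, dry_run: bool = True) -> List[List[str]]:
    """Create dst_dir, then print (dry run) or execute each sox command."""
    dst_dir.mkdir(parents=True, exist_ok=True)
    commands = build_sox_commands(src_dir, dst_dir, rate)
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Hypothetical paths; run once per Valentini directory.
    downsample_dir(Path("noisy_testset_wav"), Path("downsampled/noisy_testset_wav"))
```

Keeping the directory names unchanged in the target root matters, since the JSON generation step expects them.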

Note: valentini_egs_script.py assumes the following dataset structure:

root dir
│
└─── noisy_trainset_{28/56}spk_wav
│    └─ Downsampled audio files
│
└─── clean_trainset_{28/56}spk_wav
│    └─ Downsampled audio files
│
└─── noisy_testset_wav
│    └─ Downsampled audio files
│
└─── clean_testset_wav
     └─ Downsampled audio files
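The expected layout can be checked before running the JSON generation step, and the sketch below also shows one plausible shape for the generated files. It assumes, without confirmation from this README, that the JSON follows the convention of the Demucs-based denoiser code, namely a list of `[path, num_frames]` pairs per directory. `expected_dirs`, `missing_dirs`, `list_wavs_with_lengths` and `write_egs_json` are hypothetical helpers; `valentini_egs_script.py` remains the supported way to produce the files.

```python
import json
import wave
from pathlib import Path
from typing import List, Tuple


def expected_dirs(spk: int = 28) -> List[str]:
    """Directory names listed in the tree above, for 28 or 56 speakers."""
    if spk not in (28, 56):
        raise ValueError("spk must be 28 or 56")
    return [
        f"noisy_trainset_{spk}spk_wav",
        f"clean_trainset_{spk}spk_wav",
        "noisy_testset_wav",
        "clean_testset_wav",
    ]


def missing_dirs(root: Path, spk: int = 28) -> List[str]:
    """Names of expected directories that do not exist under root."""
    return [name for name in expected_dirs(spk) if not (root / name).is_dir()]


def list_wavs_with_lengths(audio_dir: Path) -> List[Tuple[str, int]]:
    """(path, number of frames) for every .wav file, sorted by path."""
    items = []
    for path in sorted(audio_dir.glob("*.wav")):
        with wave.open(str(path), "rb") as f:
            items.append((str(path), f.getnframes()))
    return items


def write_egs_json(audio_dir: Path, out_file: Path) -> None:
    """Write the (assumed) egs format: a JSON list of [path, num_frames]."""
    out_file.parent.mkdir(parents=True, exist_ok=True)
    with open(out_file, "w") as f:
        json.dump(list_wavs_with_lengths(audio_dir), f, indent=2)


if __name__ == "__main__":
    root = Path("downsampled")  # hypothetical dataset root
    print("missing:", missing_dirs(root, spk=28))
```

Running the layout check first gives a clearer error than a failure halfway through JSON generation.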

- Download the pretrained weights (link)

- Copy the full path of the pretrained .pt file to the features_config.state_dict_path field in configurations/main_config.yaml

Examples of run commands can be found in run_commands_examples/.

Example: training the Demucs baseline (hidden: 48, stride: 4, resample: 4):

python train.py \
  dset=noisy_clean \
  experiment_name=h48u4s4_baseline \
  hidden=48 \
  stride=4 \
  resample=4 \
  features_dim=768 \
  features_dim_for_conditioning=768 \
  include_ft=False \
  get_ft_after_lstm=False \
  use_as_conditioning=False \
  use_as_supervision=False \
  learnable=False \
  ddp=True \
  batch_size=16
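When sweeping several configurations it can be convenient to assemble the `key=value` overrides programmatically. The sketch below rebuilds the baseline command from a dictionary. The flag names and values are copied from the baseline example, while `BASELINE_H48U4S4`, `build_train_command` and `run_training` are hypothetical names. The sketch does not interpret what each flag does, and it launches `train.py` only when `dry_run=False` and it is run from the repository root.

```python
import shlex
import subprocess
from typing import Dict, List, Union

Value = Union[str, int, float, bool]

# Overrides copied from the baseline example (Demucs hidden=48, stride=4, resample=4).
BASELINE_H48U4S4: Dict[str, Value] = {
    "dset": "noisy_clean",
    "experiment_name": "h48u4s4_baseline",
    "hidden": 48,
    "stride": 4,
    "resample": 4,
    "features_dim": 768,
    "features_dim_for_conditioning": 768,
    "include_ft": False,
    "get_ft_after_lstm": False,
    "use_as_conditioning": False,
    "use_as_supervision": False,
    "learnable": False,
    "ddp": True,
    "batch_size": 16,
}


def build_train_command(overrides: Dict[str, Value], script: str = "train.py") -> List[str]:
    """Return argv for `python <script> key=value ...`, preserving dict order.

    Booleans render as True/False via str(), matching the README command.
    """
    return ["python", script] + [f"{key}={value}" for key, value in overrides.items()]


def run_training(overrides: Dict[str, Value], dry_run: bool = True) -> List[str]:
    """Print the command; execute it only when dry_run is False."""
    cmd = build_train_command(overrides)
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    run_training(BASELINE_H48U4S4)
```

The single-epoch pipeline check maps to `build_train_command({"dset": "debug", "eval_every": 1, "epochs": 1, "experiment_name": "test_pipeline"})`, and a variant run only needs a copy of the baseline dictionary with the relevant flags changed.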

Test the whole pipeline for a single epoch:

python train.py dset=debug eval_every=1 epochs=1 experiment_name=test_pipeline