WebGuard is an open-source tool that helps identify and moderate potentially unsafe web content, with the aim of making the internet safer for everyone, especially children. It combines Vision and Natural Language AI models with ScraperAPI for web crawling, and analyzes web pages for adult content, violence, and other categories that may be unsuitable for younger audiences.

- Content Analysis: Leverages Google Cloud's Natural Language API to analyze text for various moderation categories.

- Image Safety Checks: Uses Google Cloud's Vision API to detect unsafe content in images, supporting `.jpg` and `.png` formats.

- Efficient Web Crawling: Integrates with ScraperAPI to fetch and process web content, including dynamically loaded content via JavaScript.

- Customizable Moderation: Allows setting thresholds for different moderation categories to tailor content filtering according to specific needs.
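As a rough illustration of threshold-based moderation, the sketch below flags any category whose confidence score meets its configured threshold. The `THRESHOLDS` dictionary, the category names, and the `flag_categories` helper are hypothetical and are not taken from WebGuard's source; it assumes scores arrive as a mapping from category name to a confidence between 0.0 and 1.0.

```python
# Hypothetical thresholds: category name -> minimum confidence that triggers a flag.
# These names and values are illustrative, not WebGuard's actual defaults.
THRESHOLDS = {"Sexual": 0.5, "Violent": 0.6, "Toxic": 0.7}


def flag_categories(scores, thresholds=THRESHOLDS):
    """Return the categories whose score meets or exceeds its threshold, sorted by name.

    Categories without a configured threshold are ignored.
    """
    return sorted(
        name
        for name, score in scores.items()
        if name in thresholds and score >= thresholds[name]
    )


if __name__ == "__main__":
    sample = {"Sexual": 0.1, "Violent": 0.82, "Toxic": 0.7, "Finance": 0.9}
    print(flag_categories(sample))
```

Lowering a threshold makes filtering stricter for that category, while removing a category from the mapping disables the check for it.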

To access Google Cloud APIs, you'll need to set up a service account:

- Create a Service Account: Visit the Google Cloud Console, navigate to "IAM & Admin" > "Service Accounts", and create a new service account.

- Grant Permissions: Assign the service account the necessary roles (Service Usage Consumer) for accessing the Vision and Natural Language APIs.

- Generate a Key: Create and download a JSON key for your service account.

Environment Variable:

Set the GOOGLE_SERVICE_ACCOUNT_KEY environment variable to the path of your downloaded JSON key file:

export GOOGLE_SERVICE_ACCOUNT_KEY="/path/to/your/service-account-file.json"

Configuration:

Open `config.py` and set your `GCP_PROJECT_ID` and `SCRAPER_API_KEY` with the appropriate values.
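A missing or mistyped key path is a common setup error, so it can help to check the environment variable before calling any Google Cloud API. The helper below is a minimal sketch of such a check; `resolve_key_path` is a hypothetical name and is not part of WebGuard.

```python
import os
from pathlib import Path


def resolve_key_path(env=None):
    """Return the service account key path from GOOGLE_SERVICE_ACCOUNT_KEY.

    Hypothetical helper: raises RuntimeError if the variable is unset and
    FileNotFoundError if it does not point to an existing file.
    """
    env = os.environ if env is None else env
    raw = env.get("GOOGLE_SERVICE_ACCOUNT_KEY")
    if not raw:
        raise RuntimeError("GOOGLE_SERVICE_ACCOUNT_KEY is not set")
    path = Path(raw).expanduser()
    if not path.is_file():
        raise FileNotFoundError(f"Service account key not found: {path}")
    return path
```

Calling `resolve_key_path()` with no arguments reads the real process environment; passing a dictionary makes the check easy to exercise in tests.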

ScraperAPI is used to crawl and fetch web content. To get started:

- Sign Up: Create an account on ScraperAPI and obtain your API key from your profile.

- Configuration: Store your ScraperAPI key as `SCRAPER_API_KEY` in `config.py`, or in an environment variable, so the tool can read it at run time.
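To show roughly what a ScraperAPI request looks like, the sketch below builds the request URL without sending it. The endpoint and the `api_key`, `url`, and `render` query parameters reflect ScraperAPI's public documentation as best I recall it and should be verified against the current docs; `build_scraperapi_url` is a hypothetical helper, not WebGuard's own code.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm against ScraperAPI's current documentation.
SCRAPERAPI_ENDPOINT = "https://api.scraperapi.com/"


def build_scraperapi_url(api_key, target_url, render_js=True):
    """Build a ScraperAPI request URL for target_url.

    render_js=True adds render=true, which asks the service to execute
    JavaScript so dynamically loaded content is included in the response.
    """
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render"] = "true"
    # urlencode percent-encodes the target URL so it survives as a single parameter.
    return f"{SCRAPERAPI_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_scraperapi_url("YOUR_API_KEY", "https://example.com"))
```

The resulting URL can be fetched with any HTTP client; keeping URL construction separate from the network call makes it straightforward to test.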

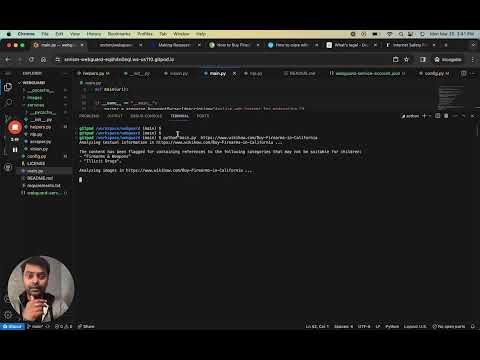

To analyze a web page, run main.py with the URL as an argument (for example, https://example.com):

python main.py <URL_to_analyze>

Images found during web crawling are downloaded to the images/ folder for analysis by the Vision API. Before each run, the folder is cleared to ensure only current images are processed. Currently, only .jpg and .png images are supported.
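The folder handling described above can be sketched as follows. Both function names are hypothetical and the code is an illustration of the behavior, not WebGuard's actual implementation; it assumes the extension check is case-insensitive and ignores any query string on the image URL.

```python
import shutil
from pathlib import Path, PurePosixPath
from urllib.parse import urlparse

# Extensions the README lists as supported.
SUPPORTED_EXTENSIONS = {".jpg", ".png"}


def reset_image_dir(image_dir="images"):
    """Delete image_dir if it exists, then recreate it empty."""
    path = Path(image_dir)
    if path.exists():
        shutil.rmtree(path)
    path.mkdir(parents=True)
    return path


def is_supported_image(url):
    """Return True if the URL's path ends in a supported image extension."""
    suffix = PurePosixPath(urlparse(url).path).suffix.lower()
    return suffix in SUPPORTED_EXTENSIONS
```

Because `reset_image_dir` removes the whole folder, it should only ever be pointed at a directory dedicated to downloaded images.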

Contributions to WebGuard are welcome! Whether it's adding new features, improving documentation, or reporting issues, your help makes WebGuard better for everyone.

WebGuard is released under the MIT License. See the LICENSE file for more details.