GPT Video Analysis in-a-Box - Image and Video Analysis with Azure OpenAI GPT-4 Turbo with Vision and Azure Data Factory

This solution is part of the AI-in-a-Box framework developed by a team of Microsoft Customer Engineers and Architects to accelerate the deployment of AI and ML solutions. Our goal is to simplify the adoption of AI technologies by providing ready-to-use accelerators that ensure quality, efficiency, and rapid deployment. Read about and discover other AI-in-a-Box solutions at https://github.com/Azure/AI-in-a-Box.

Azure OpenAI GPT-4 Turbo with Vision (AOAI GPT-4V) lets you gain insights from your images and videos without developing and training your own model, which can be time-consuming and costly. This opens up many use cases across industries, including:

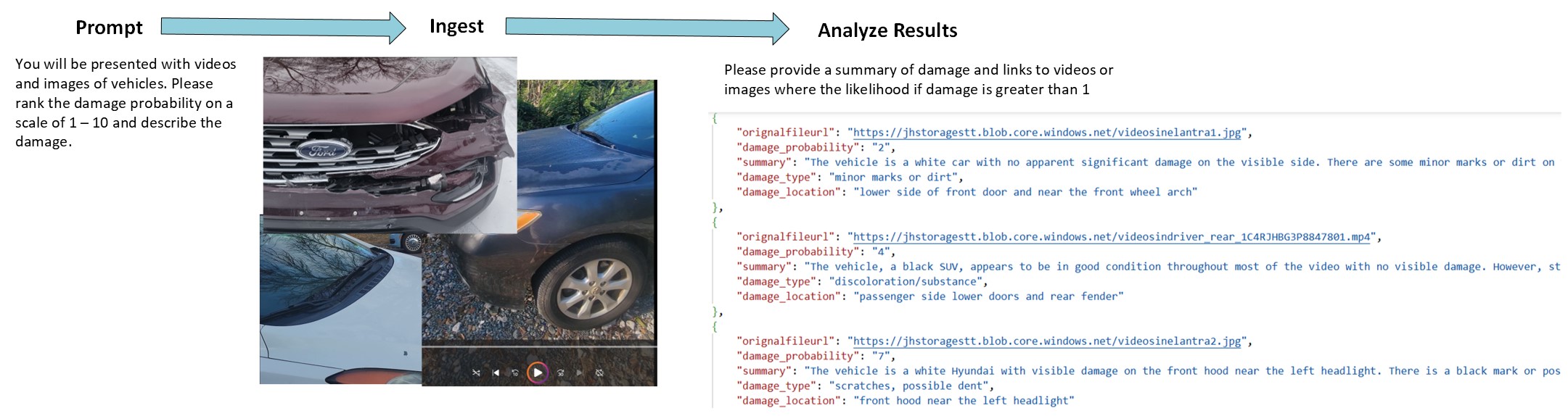

- Assessing videos or images provided for insurance claims

- Identifying product defects in a manufacturing process

- Tracking store traffic, including items browsed, and loss protection

- Identifying animal movement in a forest or preserve without having to watch hours of videos

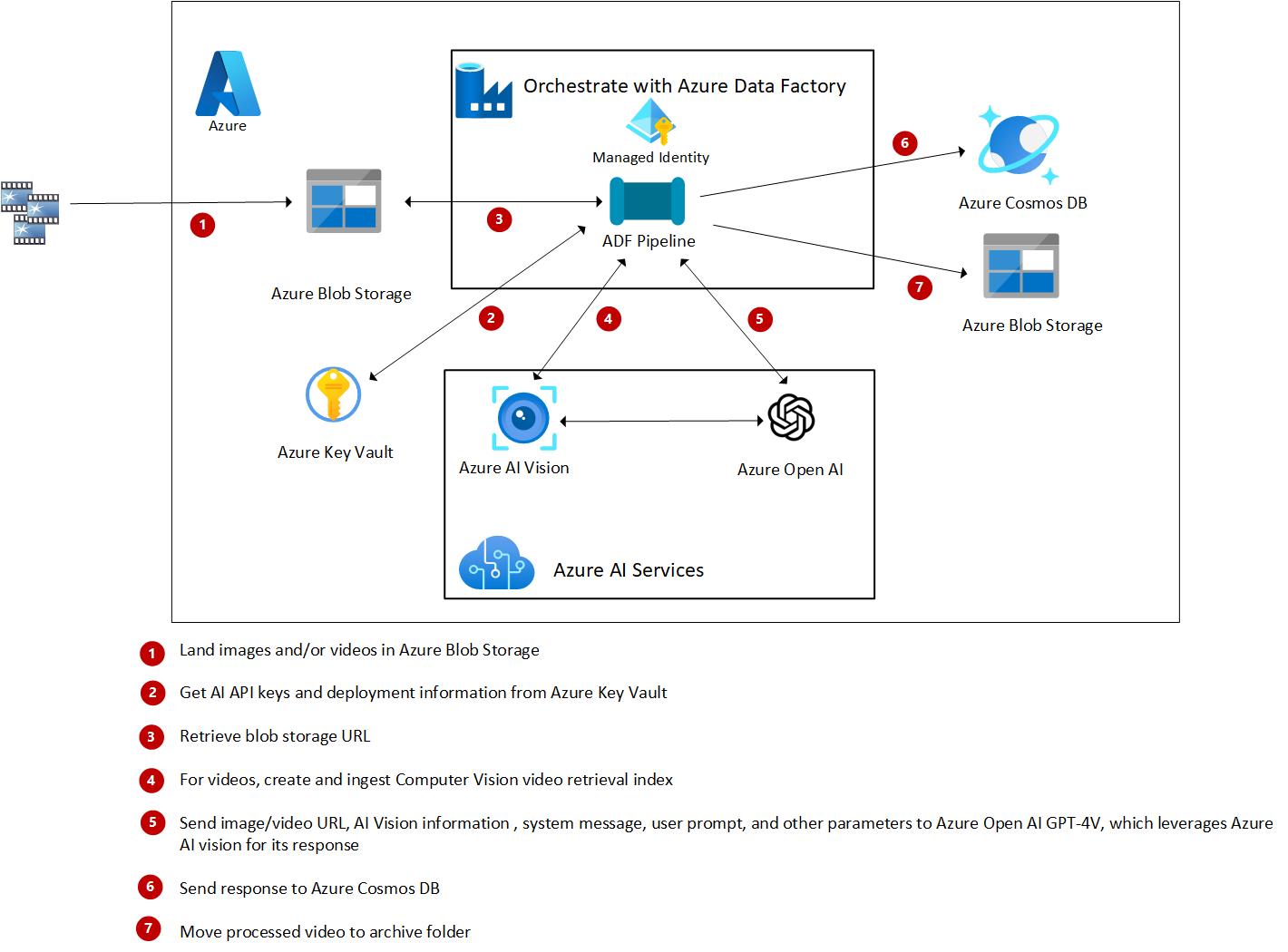

Yet, for those not versed in Python or .NET, tapping into Azure OpenAI's potential can seem daunting. Azure Data Factory (ADF) steps in as a low-code solution to orchestrate Azure OpenAI service calls and manage output ingestion. ADF has features that allow for easy configuration, customization, and parameterization of prompts and other AOAI inputs as well as data sources. These customization and parameterization features make pipelines reusable for ingesting different images and videos with different prompts and system messages.

- Sample images, videos, and chat completion prompts to analyze images and videos of vehicles for damage

- Low-code solution using ADF

- Easy and secure integration with other Azure resources with Managed Identities

- Parameterization that makes a single data factory reusable for many different scenarios

- Results stored in Azure Cosmos DB in JSON format

- Deployment of all resources needed for image and video analysis with GPT-4V and ADF. This includes:

  - Azure Key Vault

  - Azure OpenAI with a GPT-4V (Preview) deployment

  - Azure AI Vision with Image Analysis

  - Azure Blob Storage for ingesting and archiving the videos and images

  - Azure Data Factory along with all Data Factory artifacts

    - Artifacts include all pipelines and activities needed to call GPT-4V to analyze your videos and images and store the results in Azure Cosmos DB

  - Azure Cosmos DB for storing chat completion results

For detailed information on this solution refer to:

- Analyze Videos with Azure OpenAI GPT-4 Turbo with Vision and Azure Data Factory

- Analyze Images with Azure OpenAI GPT-4 Turbo with Vision and Azure Data Factory

The solution is easy to adapt to your own use cases:

- Add your own videos and images to the storage account and change the system and user prompts for the chat completion.

- The ADF orchestration pipeline processes all the videos and images in a folder, which is ideal for batch analysis. If you instead want to analyze a video or image as soon as it lands in the storage account, first run the orchestration pipeline in batch mode to test your system and user prompts, then add an event-based trigger that analyzes each new video or image as it arrives.

- Build vector analytics over the chat completion results stored in Cosmos DB
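In batch mode, the orchestration pipeline lists the files in a folder with a Get Metadata activity, loops over them with a For Each activity, and uses an If activity to call either the childAnalyzeImage or the childAnalyzeVideo pipeline. The Python sketch below illustrates that control flow only; it is not code from the accelerator. The extension lists and the `route_file` and `plan_batch` helpers are hypothetical, and the sample listing simply follows the shape of the Get Metadata `childItems` output (a name plus a File or Folder type).

```python
from pathlib import PurePosixPath
from typing import Dict, List, Optional, Tuple

# Hypothetical extension lists; the accelerator's actual If condition may differ.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".bmp"}
VIDEO_EXTENSIONS = {".mp4", ".mov", ".avi", ".mkv"}


def route_file(file_name: str) -> Optional[str]:
    """Return the child pipeline that should analyze a file, or None to skip it."""
    suffix = PurePosixPath(file_name).suffix.lower()
    if suffix in IMAGE_EXTENSIONS:
        return "childAnalyzeImage"
    if suffix in VIDEO_EXTENSIONS:
        return "childAnalyzeVideo"
    return None


def plan_batch(child_items: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Mimic the For Each loop: pair each listed file with the child pipeline to run."""
    plan = []
    for item in child_items:
        # Get Metadata also lists subfolders; only files are analyzed.
        if item.get("type") != "File":
            continue
        pipeline = route_file(item["name"])
        if pipeline is not None:
            plan.append((item["name"], pipeline))
    return plan


if __name__ == "__main__":
    # Sample listing shaped like Get Metadata childItems output.
    child_items = [
        {"name": "dent.jpg", "type": "File"},
        {"name": "crash.mp4", "type": "File"},
        {"name": "archive", "type": "Folder"},
        {"name": "notes.txt", "type": "File"},
    ]
    for name, pipeline in plan_batch(child_items):
        print(f"{name} -> {pipeline}")
```

Files with unrecognized extensions are skipped rather than failing the run, which keeps a mixed folder from breaking a batch.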

Install, deploy, upload sample videos and/or images

- Install latest version of Azure CLI

- Install latest version of Bicep

- Install latest version of Azure Developer CLI

- Install latest version of Azure Functions Core Tools


Clone this repository locally

git clone https://github.com/Azure-Samples/gpt-video-analysis-in-a-box

cd gpt-video-analysis-in-a-box

Deploy resources

azd auth login

azd up

You will be prompted for a subscription, a region for GPT-4V, a region for AI Vision, a resource group, a prefix, and a suffix. The location parameter must be a region that supports GPT-4V, and the CVlocation parameter must be a region that supports AI Vision Image Analysis 4.0.

Upload images and videos of vehicles to the videosin container of your new storage account using Azure Storage Explorer, AzCopy, or the Azure portal. You can find sample images and videos at the bottom of the blog post Analyze Videos with Azure OpenAI GPT-4 Turbo with Vision and Azure Data Factory.

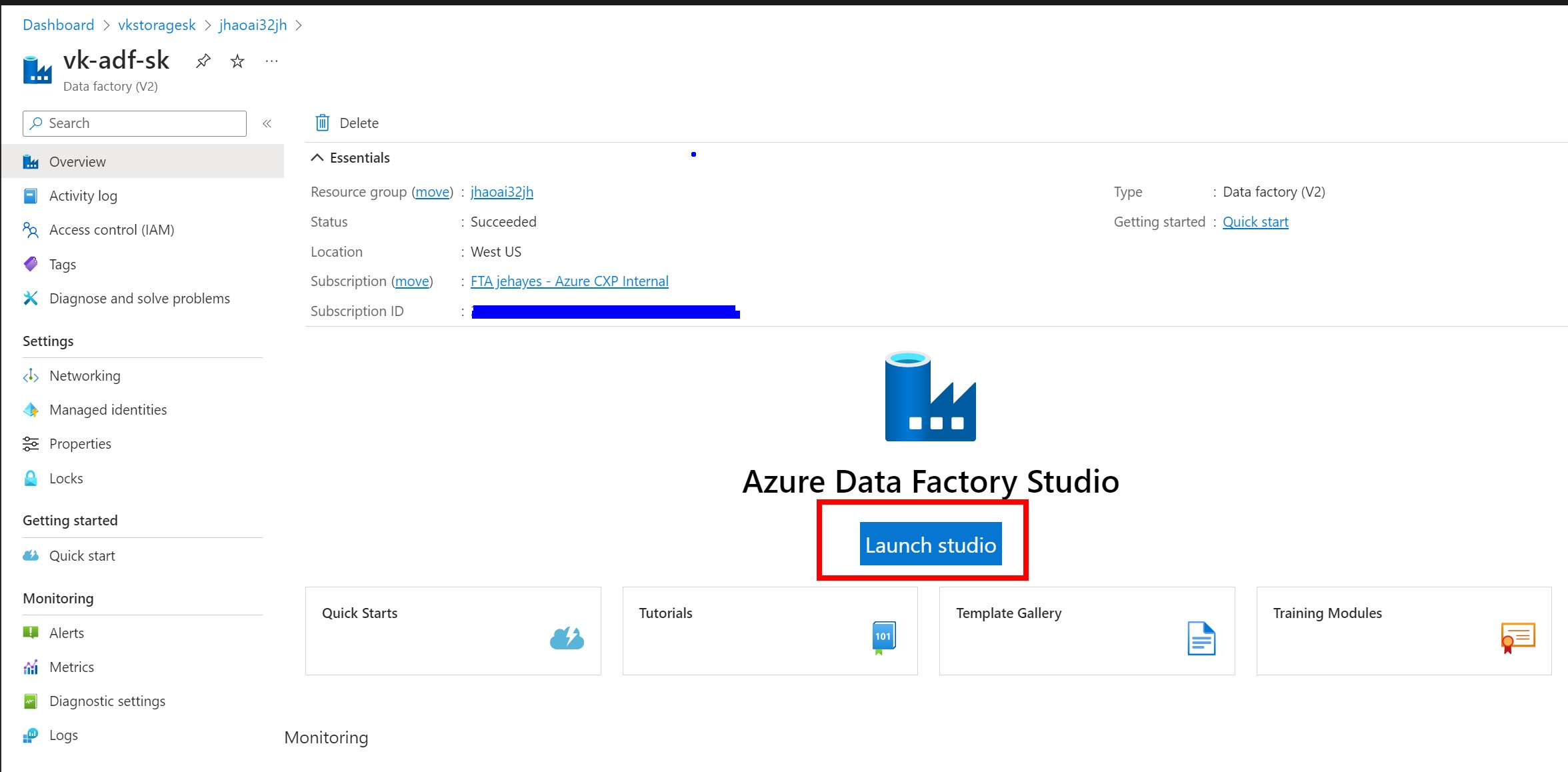

- In the Azure portal, go to your newly created Azure Data Factory resource and click Launch studio:

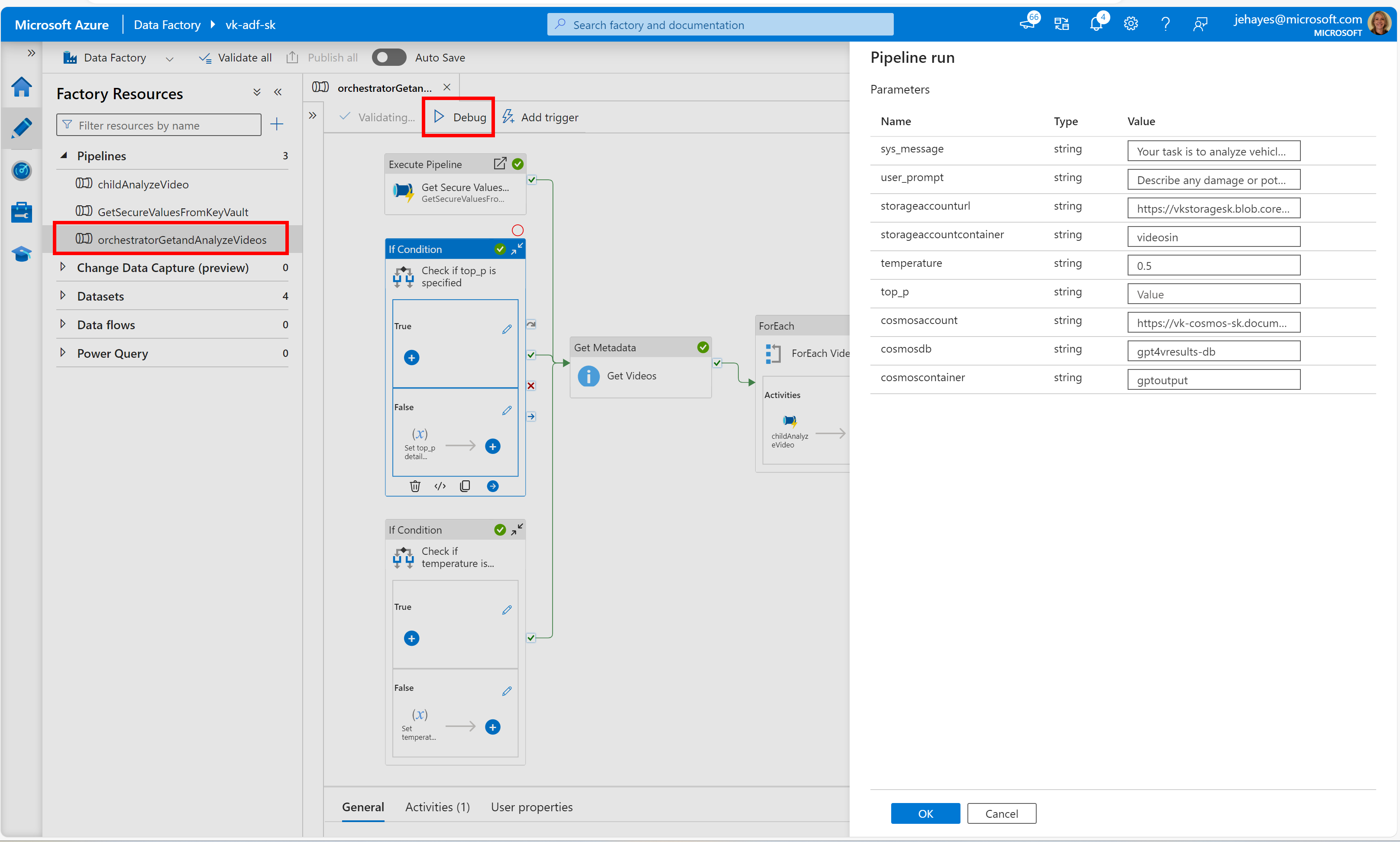

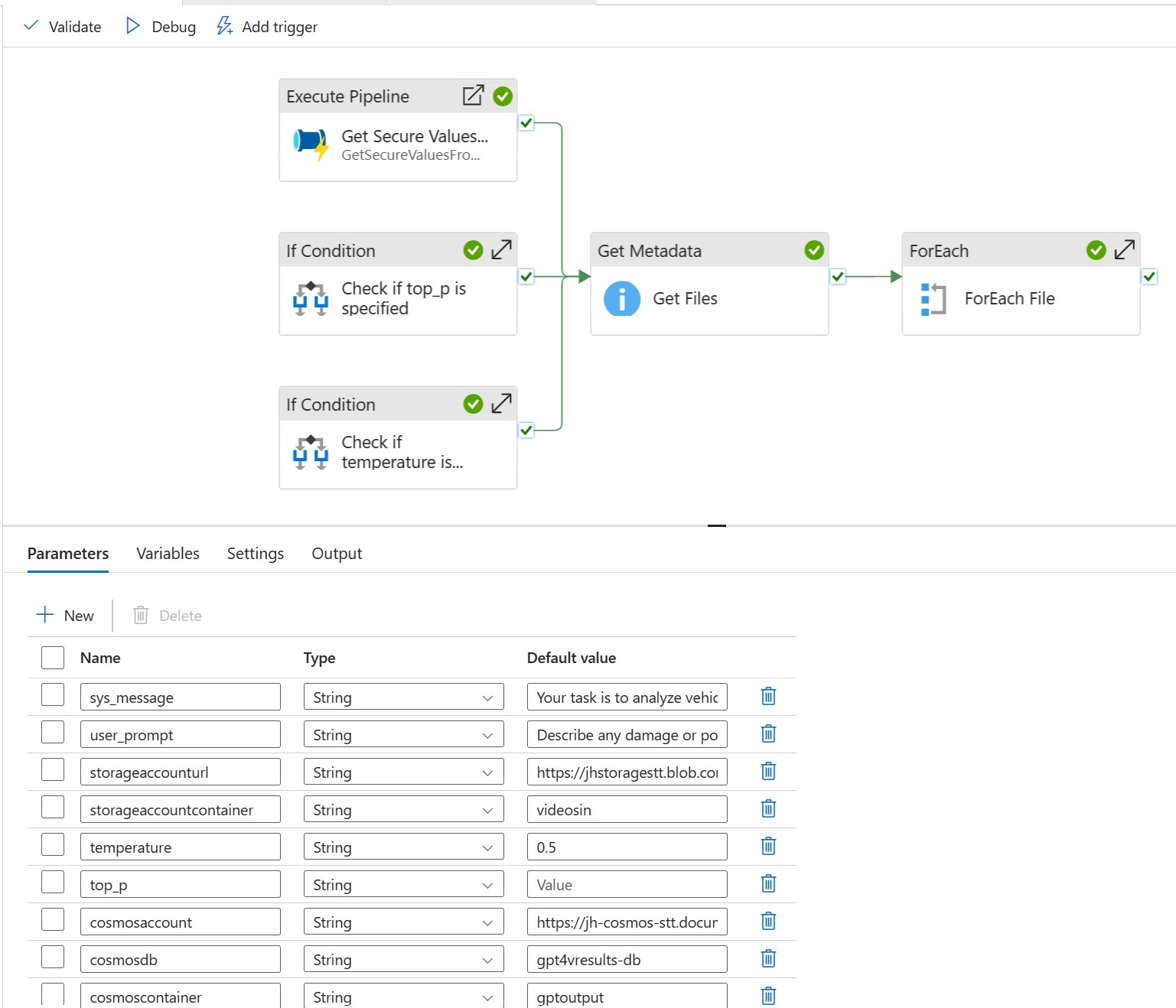

- Select the orchestratorGetandAnalyzeVideos pipeline, click Debug, and examine your preset pipeline parameter values. Then click OK to run.

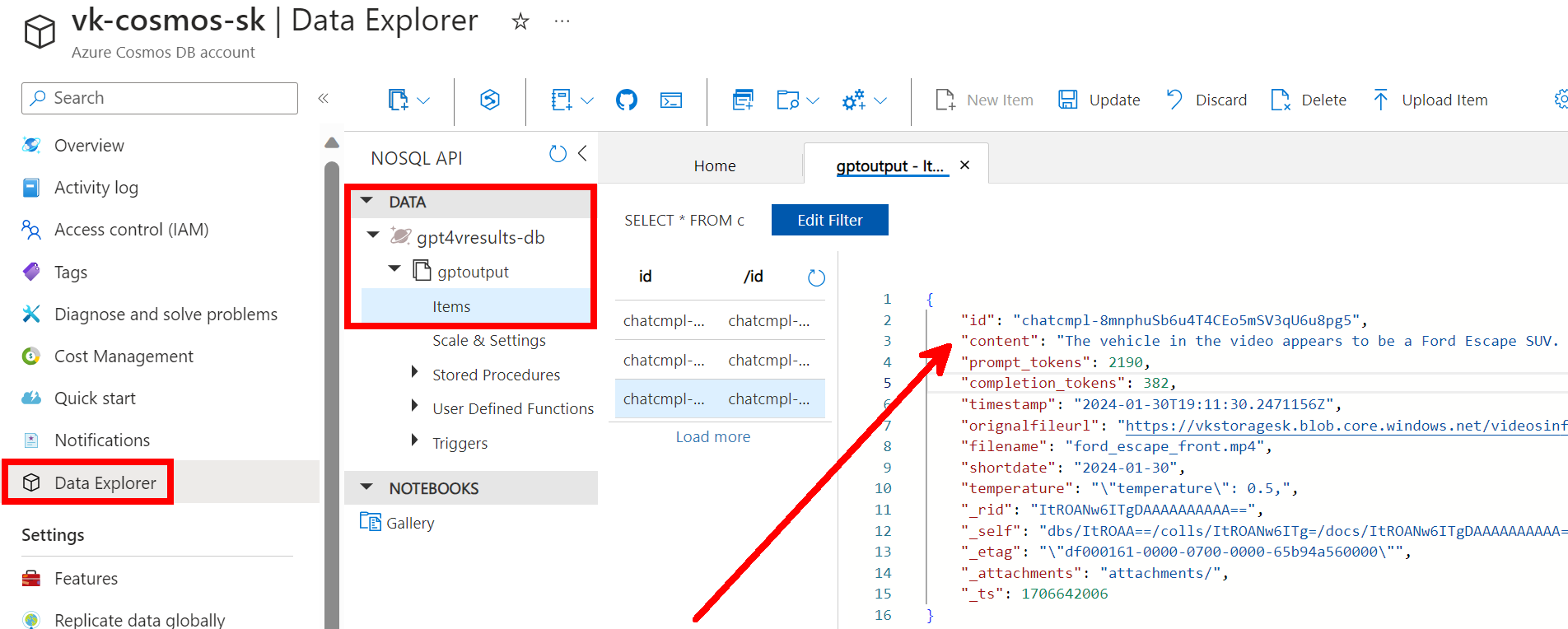

- After it runs successfully, go to your Azure Cosmos DB resource and examine the results in Data Explorer:

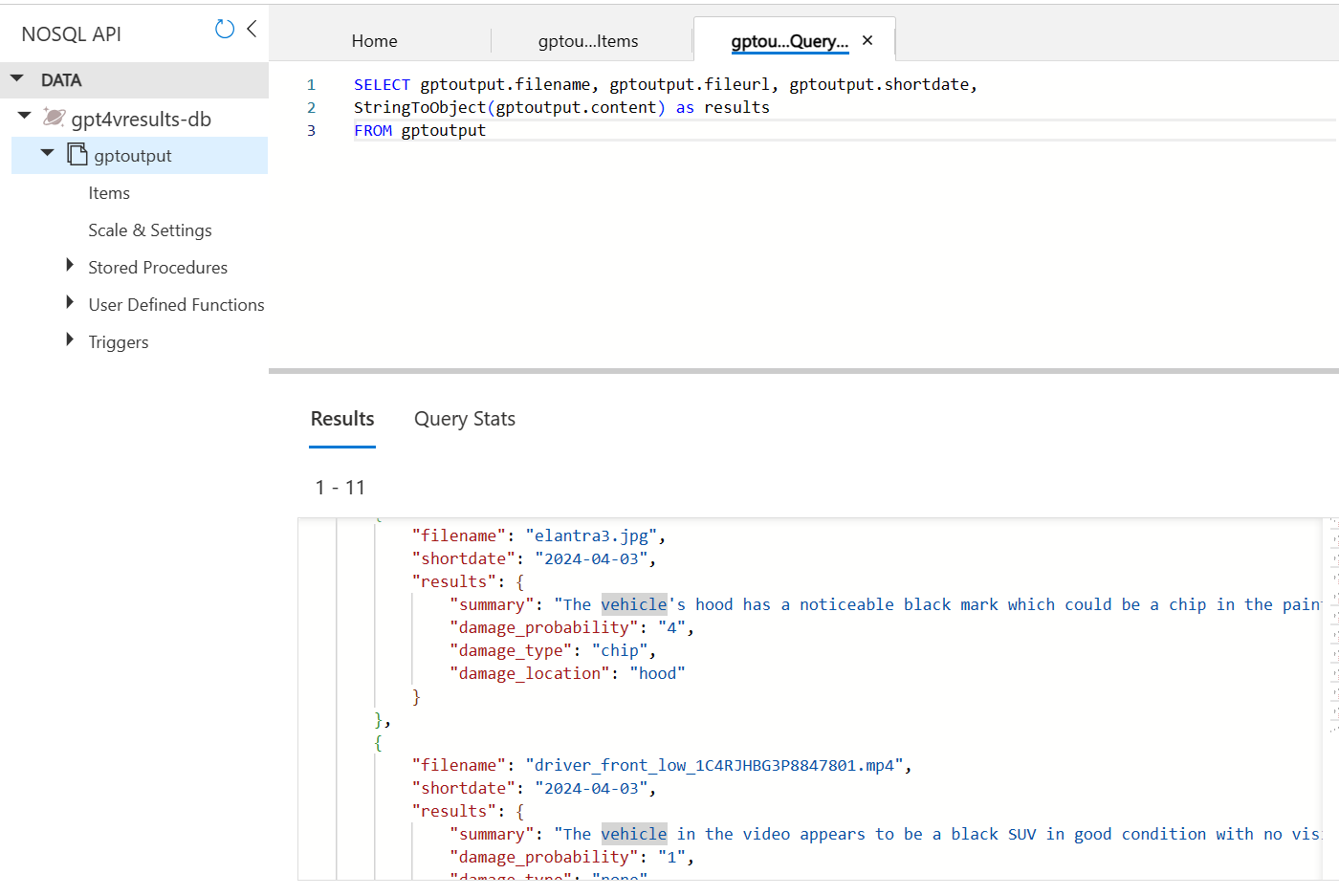

- At this time, GPT-4V does not support response_format={"type": "json_object"}. However, if your prompt asks the model to return its results as JSON, you can use a Cosmos DB query to convert the string into a JSON object. Here is the Cosmos DB SQL query:

SELECT gptoutput.filename, gptoutput.fileurl, gptoutput.shortdate,
StringToObject(gptoutput.content) AS results
FROM gptoutput
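If you post-process results outside Cosmos DB, the same string-to-JSON conversion can be done in Python. This is a minimal sketch, assuming the content field holds the raw chat completion text; `string_to_object` is a hypothetical helper, not part of the accelerator. Unlike the Cosmos DB StringToObject function, it also strips a Markdown code fence, which models sometimes wrap around JSON output. Input that still fails to parse returns None, loosely mirroring StringToObject returning undefined.

```python
import json
from typing import Any, Optional

# Three backticks, built at runtime so the literal never starts a line in this file.
FENCE = "`" * 3


def string_to_object(content: str) -> Optional[Any]:
    """Parse a chat completion content string into JSON, or return None if it is not JSON."""
    text = content.strip()
    # Assumption: the model may wrap its JSON in a fenced block such as json ... fence.
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]  # drop the opening fence line (with or without "json")
        if lines and lines[-1].strip() == FENCE:
            lines = lines[:-1]  # drop the closing fence line
        text = "\n".join(lines)
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


if __name__ == "__main__":
    raw = '{"vehicle": "sedan", "damage": ["front bumper dent"]}'
    print(string_to_object(raw))
```

Returning None instead of raising lets a batch job keep going and flag the few documents whose output was not valid JSON.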

This solution is highly customizable thanks to the parameterization capabilities in Azure Data Factory. Below are the features you can parameterize out of the box.

When developing your solution, you can rerun it with different settings to get the best results from GPT-4V by tweaking the sys_message, user_prompt, temperature, and top_p values.

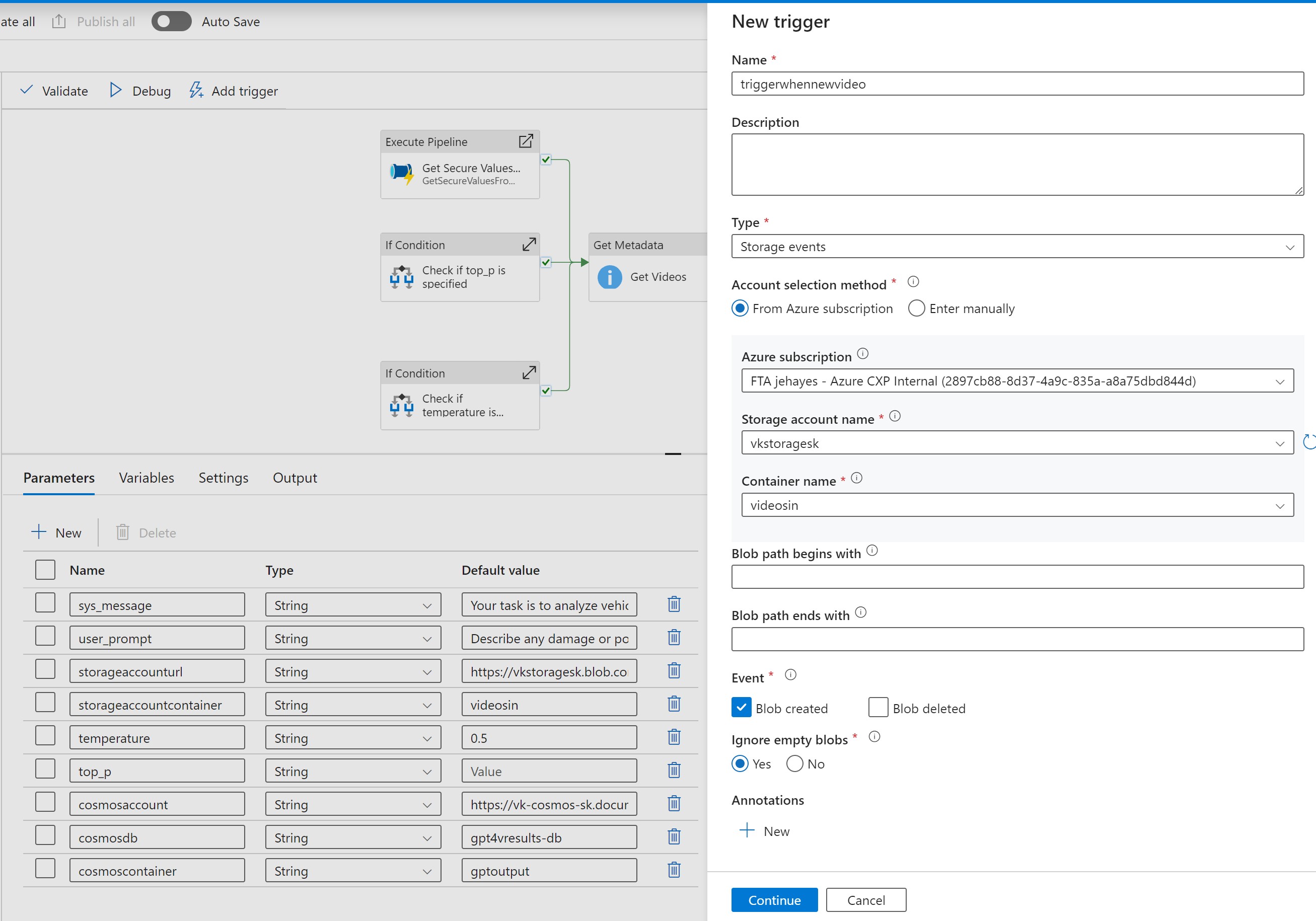

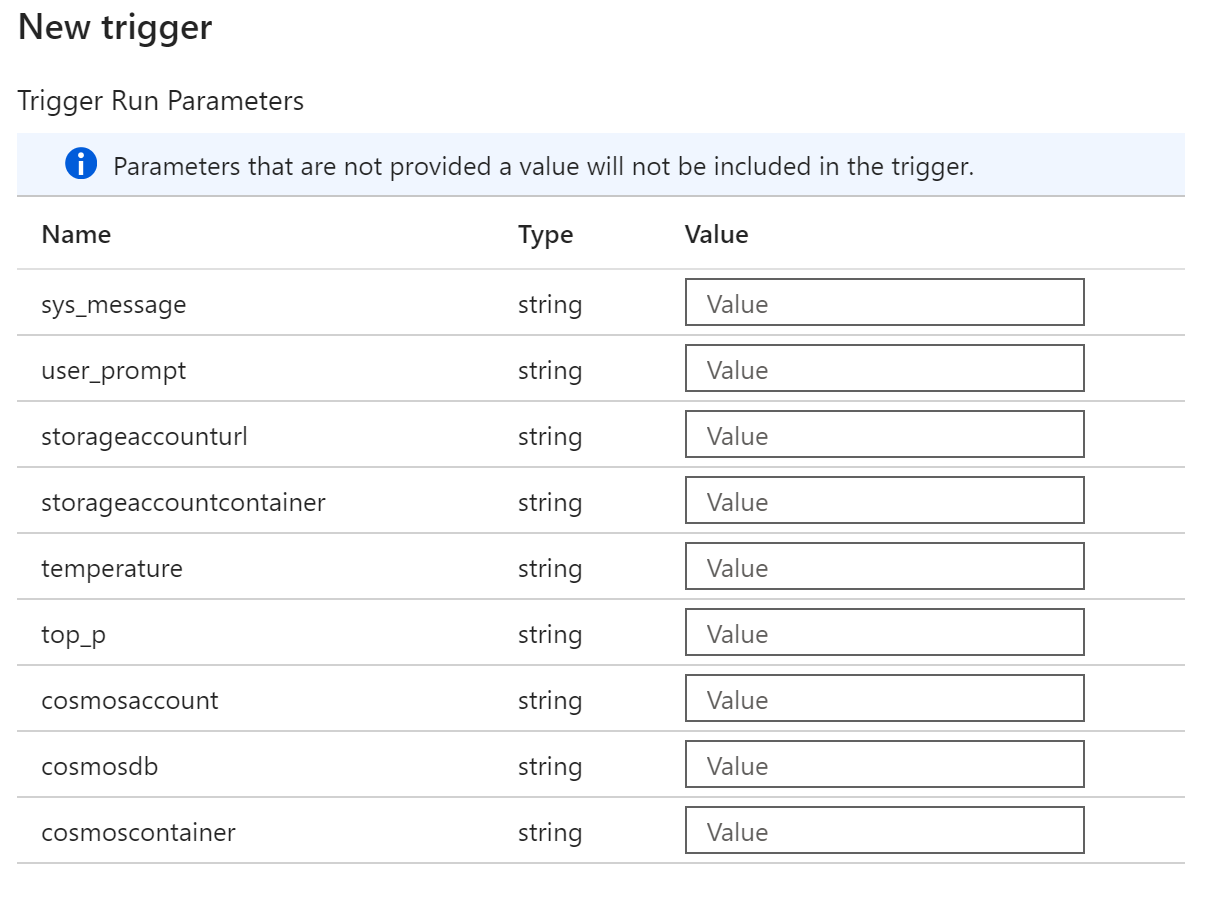
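When tuning sys_message, user_prompt, temperature, and top_p, it can help to see where each value ends up in the request sent to GPT-4V. The sketch below builds an image chat completions request body in the Azure OpenAI vision message format (a system message, then a user message whose content mixes text and an image_url part). The `AnalysisParameters` class, its default values, and the `max_tokens` setting are assumptions for illustration, not the accelerator's pipeline definition, and the placeholder image URL assumes the blob is readable by the service.

```python
import json
from dataclasses import dataclass


@dataclass
class AnalysisParameters:
    """Hypothetical holder for the tunable pipeline parameters named in this README."""
    sys_message: str
    user_prompt: str
    temperature: float = 0.5  # illustrative default, not the pipeline's value
    top_p: float = 0.5  # illustrative default, not the pipeline's value
    max_tokens: int = 800  # assumption: not one of the documented parameters


def build_image_request(params: AnalysisParameters, image_url: str) -> dict:
    """Build a chat completions request body that sends one image to GPT-4V."""
    return {
        "messages": [
            {"role": "system", "content": params.sys_message},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": params.user_prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
        ],
        "temperature": params.temperature,
        "top_p": params.top_p,
        "max_tokens": params.max_tokens,
    }


if __name__ == "__main__":
    params = AnalysisParameters(
        sys_message="You are an insurance claims assistant. Return your results as JSON.",
        user_prompt="Describe any damage to the vehicle in this image.",
        temperature=0.2,
    )
    # Placeholder URL; a private blob would need, for example, a SAS token.
    body = build_image_request(params, "https://example.blob.core.windows.net/videosin/car.jpg")
    print(json.dumps(body, indent=2))
```

Lower temperature and top_p values generally make the output more consistent from run to run, which helps when you compare prompt variations.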

This solution loops over a container of videos and images in batch, which is ideal for testing. In production, however, you may want each video or image analyzed as soon as it arrives. To do this, set up a storage event trigger that runs when a file lands in blob storage.

Move the If activity inside the For Each loop to the main orchestrator pipeline canvas, then remove the Get Metadata and For Each activities. Call the If activity after the variables are set and the parameters are retrieved from Key Vault. You can get the file name from the trigger metadata.
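In a storage event trigger, ADF exposes the landed file through `@triggerBody().folderPath` and `@triggerBody().fileName`, and the first segment of the folder path is the container name. The Python sketch below mimics what the relocated If activity has to work out for a single file: which child pipeline to call and the file's full URL. It is a hypothetical helper, not part of the accelerator; the extension lists and the returned field names are assumptions.

```python
from pathlib import PurePosixPath
from typing import Dict, Optional

# Hypothetical extension lists; adjust them to match your If condition.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}
VIDEO_EXTENSIONS = {".mp4", ".mov"}


def handle_blob_created(storage_account_url: str, folder_path: str,
                        file_name: str) -> Optional[Dict[str, str]]:
    """Decide how to analyze one file reported by a storage event trigger.

    folder_path and file_name correspond to @triggerBody().folderPath and
    @triggerBody().fileName. Returns None for files that should be ignored.
    """
    suffix = PurePosixPath(file_name).suffix.lower()
    if suffix in IMAGE_EXTENSIONS:
        pipeline = "childAnalyzeImage"
    elif suffix in VIDEO_EXTENSIONS:
        pipeline = "childAnalyzeVideo"
    else:
        return None
    path = folder_path.strip("/")
    container = path.split("/", 1)[0]  # first path segment is the container
    file_url = f"{storage_account_url.rstrip('/')}/{path}/{file_name}"
    return {"pipeline": pipeline, "container": container, "fileurl": file_url}


if __name__ == "__main__":
    print(handle_blob_created("https://example.blob.core.windows.net",
                              "videosin/claims", "car.png"))
```

Handling one file per run keeps each trigger execution small, at the cost of one pipeline run per uploaded file.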

Read more about ADF storage event triggers in the Azure Data Factory documentation.

You can set up multiple triggers on your Azure Data Factory and pass different parameter values for whatever analysis you need to do:

- You can set up different storage accounts for landing the files, then adjust the storageaccounturl and storageaccountcontainer parameters to ingest and analyze the images and/or videos.

- You can send different prompts and other values to GPT-4V for different triggers by setting the sys_message, user_prompt, temperature, and top_p parameters.

- You can land the data in a different Cosmos DB account, database, and/or container by setting the cosmosaccount, cosmosdb, and cosmoscontainer values.
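One way to reason about several triggers is as one set of default pipeline parameters plus per-trigger overrides. This sketch uses the parameter names listed above; the default values are placeholders, and `DEFAULT_PARAMETERS` and `parameters_for_trigger` are hypothetical. It rejects misspelled parameter names and checks temperature and top_p against the ranges the Azure OpenAI chat completions API accepts (0 to 2 and 0 to 1).

```python
from typing import Any, Dict

# Placeholder defaults; in ADF these would be the pipeline's parameter defaults.
DEFAULT_PARAMETERS: Dict[str, Any] = {
    "storageaccounturl": "https://<storage-account>.blob.core.windows.net",
    "storageaccountcontainer": "videosin",
    "sys_message": "You are an insurance claims assistant.",
    "user_prompt": "Describe any damage to the vehicle.",
    "temperature": 0.5,
    "top_p": 0.5,
    "cosmosaccount": "<cosmos-account>",
    "cosmosdb": "<database>",
    "cosmoscontainer": "<container>",
}


def parameters_for_trigger(overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Merge one trigger's overrides onto the defaults and validate the result."""
    unknown = set(overrides) - set(DEFAULT_PARAMETERS)
    if unknown:
        # Catch typos such as "temprature" before they silently fall back to defaults.
        raise KeyError(f"Unknown pipeline parameter(s): {sorted(unknown)}")
    params = {**DEFAULT_PARAMETERS, **overrides}
    if not 0 <= params["temperature"] <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if not 0 <= params["top_p"] <= 1:
        raise ValueError("top_p must be between 0 and 1")
    return params


if __name__ == "__main__":
    # Hypothetical second trigger for a manufacturing defect scenario.
    factory = parameters_for_trigger({
        "storageaccountcontainer": "factoryin",
        "sys_message": "You inspect manufactured parts for defects.",
        "cosmoscontainer": "defects",
        "temperature": 0.2,
    })
    print(factory["storageaccountcontainer"], factory["cosmoscontainer"], factory["temperature"])
```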

If you are only analyzing images OR videos, you can delete the pipeline that is not needed (childAnalyzeImage or childAnalyzeVideo), eliminate the If activity inside the ForEach File activity and specify the Execute Pipeline activity for just the pipeline you need. However, it doesn't hurt to leave the unneeded pipeline there in case you want to use it in the future.

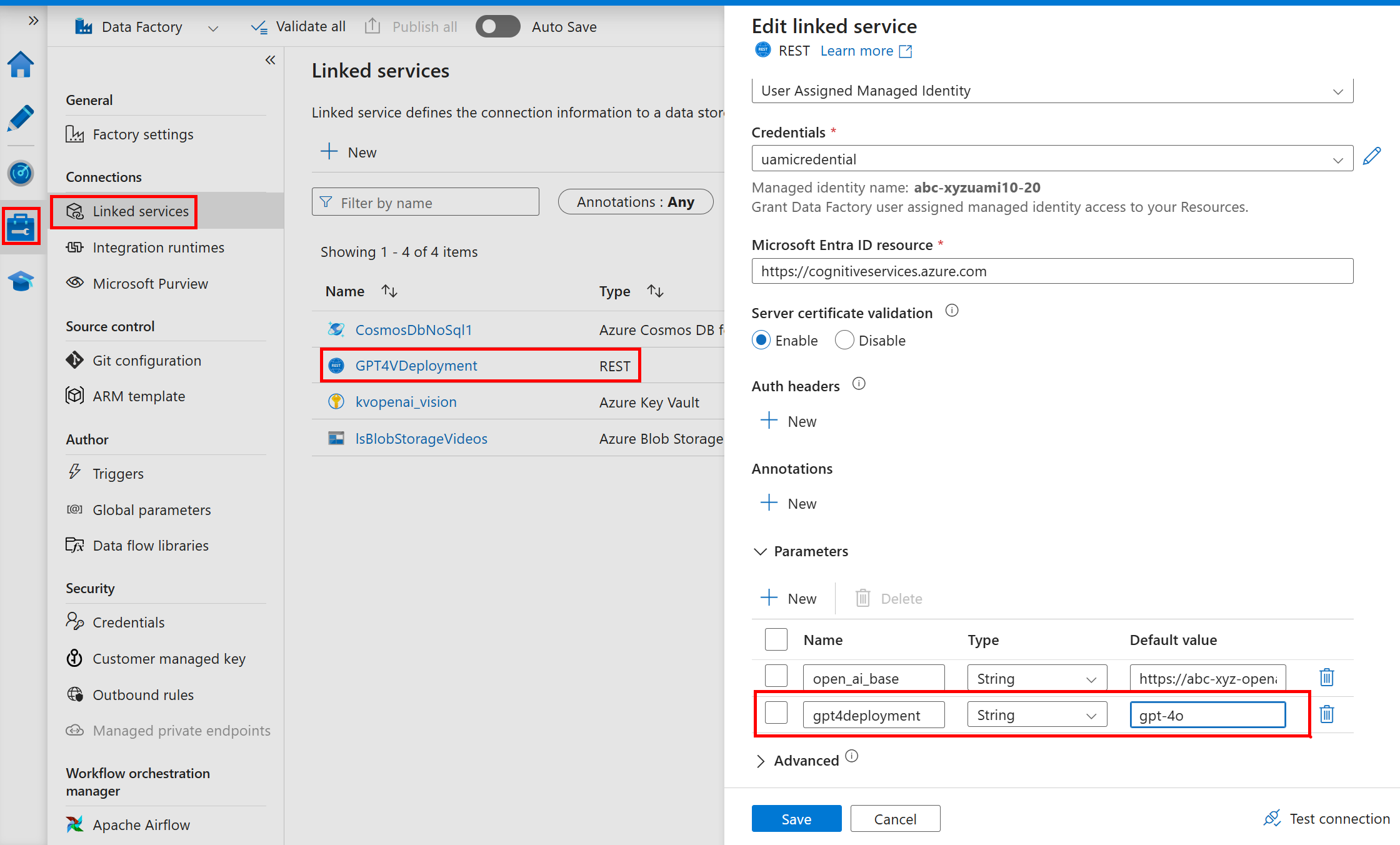

If you are only analyzing images, you can compare the results and performance of GPT-4V and GPT-4o. After deploying this accelerator, create a GPT-4o deployment in the Azure OpenAI resource and name it "gpt-4o". Then, in ADF, open the linked service called "GPT4VDeployment" and change the value of its "gpt4deployment" parameter to "gpt-4o".
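Changing the gpt4deployment value only changes which deployment the requests target. Assuming the linked service builds the standard Azure OpenAI REST URL from that parameter, the effect looks like the sketch below. The endpoint, the "gpt-4v" deployment name, and the api-version value are placeholders to replace with your own, and `chat_completions_url` is a hypothetical helper.

```python
from urllib.parse import quote


def chat_completions_url(endpoint: str, deployment: str, api_version: str) -> str:
    """Build the Azure OpenAI chat completions URL for one deployment name."""
    base = endpoint.rstrip("/")
    # The deployment name is a path segment, so escape anything unsafe in it.
    return (f"{base}/openai/deployments/{quote(deployment, safe='')}"
            f"/chat/completions?api-version={api_version}")


if __name__ == "__main__":
    endpoint = "https://<your-aoai-resource>.openai.azure.com"
    api_version = "<api-version>"  # placeholder; use a version your deployments support
    for deployment in ("gpt-4v", "gpt-4o"):  # "gpt-4v" is a hypothetical name
        print(chat_completions_url(endpoint, deployment, api_version))
```

Because only the deployment segment differs, the same pipeline, prompts, and Cosmos DB output can be reused for a side-by-side comparison.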

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

Highlight the main contacts for the project and acknowledge contributors. You can adapt the structure from AI-in-a-Box:

| Contact | GitHub ID | Email |
|---|---|---|
| Jean Hayes | @jehayesms | [email protected] |
| Chris Ayers | @codebytes | [email protected] |

If applicable, offer thanks to individuals, organizations, or projects that helped inspire or support your project.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

This project is part of the AI-in-a-Box series, aimed at providing the technical community with tools and accelerators to implement AI/ML solutions efficiently and effectively.