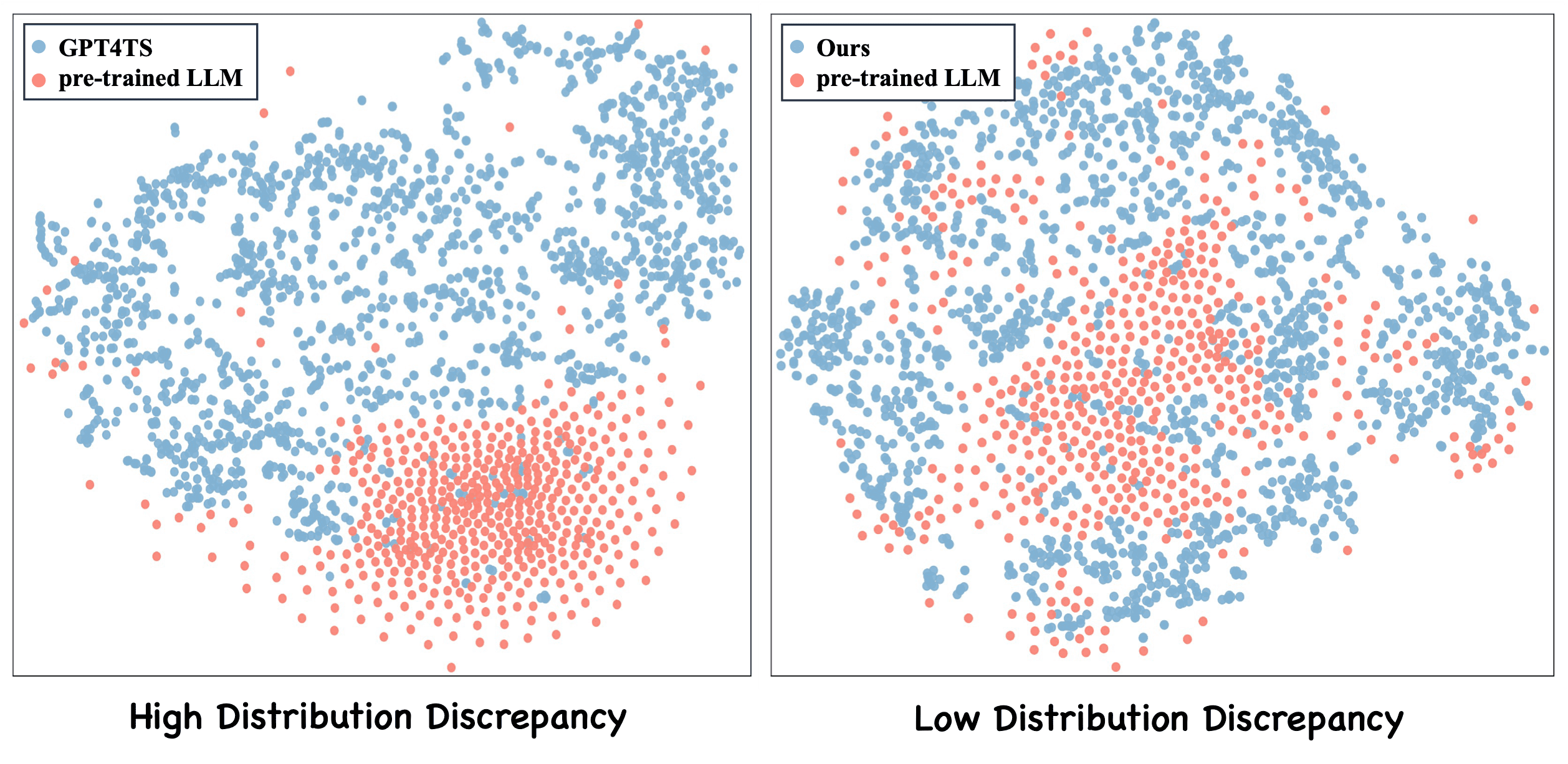

Figure 1: t-SNE visualization of the LLM's pre-trained word token embeddings together with temporal tokens from GPT4TS (left) and from our method (right). With our method, the temporal tokens are more cohesively integrated with the word token embeddings, indicating effective modality alignment.

Figure 2: Conceptual illustration of the cross-modal fine-tuning technique.