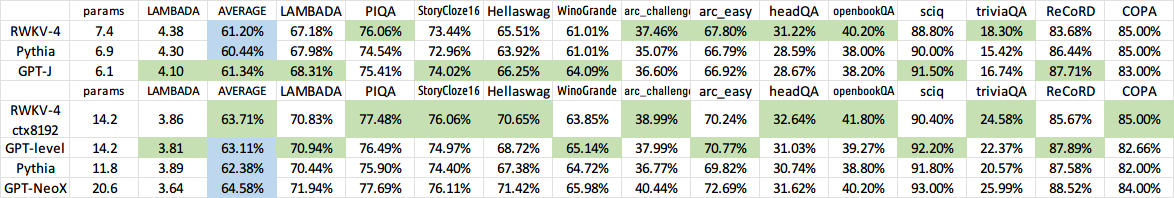

ChatRWKV is like ChatGPT but powered by my RWKV (100% RNN) language model, which is the only RNN (as of now) that can match transformers in quality and scaling, while being faster and using less VRAM. Training sponsored by Stability EleutherAI :) For the Chinese usage tutorial, see the bottom of this page.

HuggingFace Gradio Demo (14B ctx8192): https://huggingface.co/spaces/BlinkDL/ChatRWKV-gradio

Raven (7B finetuned on Alpaca and more) Demo: https://huggingface.co/spaces/BlinkDL/Raven-RWKV-7B

RWKV pip package: https://pypi.org/project/rwkv/ (please always check for latest version and upgrade)

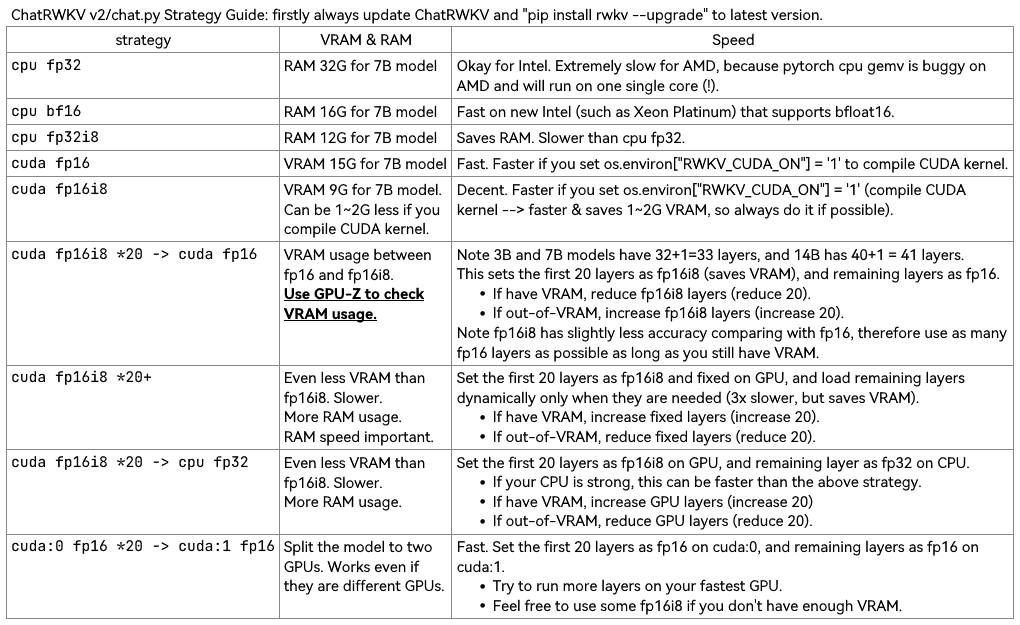

Update ChatRWKV v2 & pip rwkv package (0.7.3):

Use v2/convert_model.py to convert a model for a specific strategy. The converted model loads faster and uses less CPU RAM.

### Note: RWKV_CUDA_ON will build a CUDA kernel (run "pip install ninja" first).

### How to build on Linux: set these and run v2/chat.py

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH
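The two exports above can also be applied from Python before launching the chat script. The following is a minimal sketch, not part of ChatRWKV: the helper name `cuda_build_env` is hypothetical, and the default `/usr/local/cuda` location is simply taken from the exports above and may differ on your machine.

```python
import os


def cuda_build_env(base_env, cuda_home="/usr/local/cuda"):
    """Return a copy of base_env with the CUDA bin and lib64 dirs prepended.

    Hypothetical helper mirroring the two `export` lines; cuda_home is an
    assumption and should point at your actual CUDA install.
    """
    env = dict(base_env)
    for var, subdir in (("PATH", "bin"), ("LD_LIBRARY_PATH", "lib64")):
        new_dir = f"{cuda_home}/{subdir}"
        old = env.get(var, "")
        # Prepend so the CUDA toolchain is found first; skip the separator if unset.
        env[var] = f"{new_dir}{os.pathsep}{old}" if old else new_dir
    return env


if __name__ == "__main__":
    env = cuda_build_env(os.environ)
    print(env["PATH"].split(os.pathsep)[0])
    # To launch the chat script with this environment (run from the repo root):
    # import subprocess, sys
    # subprocess.run([sys.executable, "v2/chat.py"], env=env, check=True)
```

Building a new dictionary instead of mutating `os.environ` keeps the change local to the child process that runs v2/chat.py.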

### How to build on Windows:

Install the VS2022 build tools (https://aka.ms/vs/17/release/vs_BuildTools.exe, select "Desktop C++"). Reinstall CUDA 11.7 (install the VC++ extensions). Run v2/chat.py in the "x64 native tools command prompt".

Download RWKV-4 weights: https://huggingface.co/BlinkDL (Use RWKV-4 models. DO NOT use RWKV-4a and RWKV-4b models.)

RWKV Discord: https://discord.gg/bDSBUMeFpc (let's build together)

Twitter: https://twitter.com/BlinkDL_AI

RWKV LM: https://github.com/BlinkDL/RWKV-LM (explanation, fine-tuning, training, etc.)

RWKV in 150 lines (model, inference, text generation): https://github.com/BlinkDL/ChatRWKV/blob/main/RWKV_in_150_lines.py

ChatRWKV v2: with "stream" and "split" strategies, and INT8. 3 GB of VRAM is enough to run RWKV 14B :) https://github.com/BlinkDL/ChatRWKV/tree/main/v2
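As a rough illustration of how these strategies are selected, the sketch below collects a few strategy strings and passes one to `RWKV(model=..., strategy=...)`. Only `'cuda fp16'` appears in this README. The other strings follow the syntax as I recall it from the rwkv package documentation, and the layer count of 10 is arbitrary, so treat them as assumptions and check the package docs. The names `STRATEGIES` and `load_model` are hypothetical.

```python
# Strategy strings keyed by a descriptive (hypothetical) name.
STRATEGIES = {
    "gpu_fp16": "cuda fp16",                 # from this README: whole model on GPU in fp16
    "gpu_int8": "cuda fp16i8",               # assumption: INT8-quantized weights on GPU
    "split": "cuda fp16i8 *10 -> cpu fp32",  # assumption: first 10 layers on GPU, rest on CPU
    "stream": "cuda fp16i8 *10+",            # assumption: 10 layers resident, rest streamed
}


def load_model(model_path, strategy_name):
    """Load an RWKV model with one of the strategy strings above.

    Set RWKV_JIT_ON / RWKV_CUDA_ON in os.environ before calling this,
    since they are read when rwkv.model is imported.
    """
    from rwkv.model import RWKV  # pip install rwkv; imported lazily on purpose

    return RWKV(model=model_path, strategy=STRATEGIES[strategy_name])
```

Fewer GPU-resident layers lower VRAM use at the cost of speed, which is how a large model can fit on a small card.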

import os

os.environ["RWKV_JIT_ON"] = '1'

os.environ["RWKV_CUDA_ON"] = '0' # if '1' then use CUDA kernel for seq mode (much faster)

from rwkv.model import RWKV # pip install rwkv

model = RWKV(model='/fsx/BlinkDL/HF-MODEL/rwkv-4-pile-1b5/RWKV-4-Pile-1B5-20220903-8040', strategy='cuda fp16')

out, state = model.forward([187, 510, 1563, 310, 247], None) # use 20B_tokenizer.json

print(out.detach().cpu().numpy()) # get logits

out, state = model.forward([187, 510], None)

out, state = model.forward([1563], state) # RNN has state (use deepcopy if you want to clone it)

out, state = model.forward([310, 247], state)

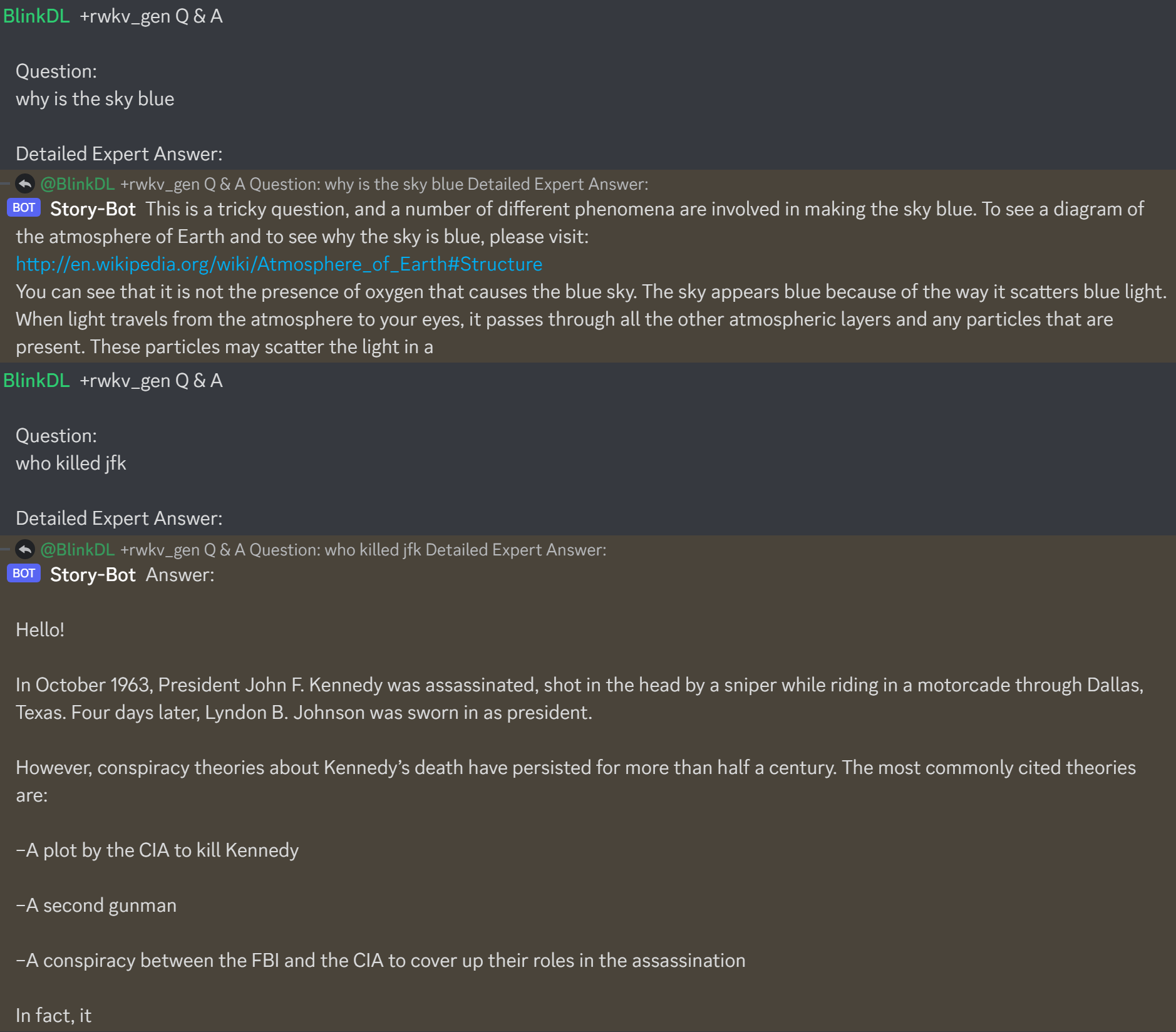

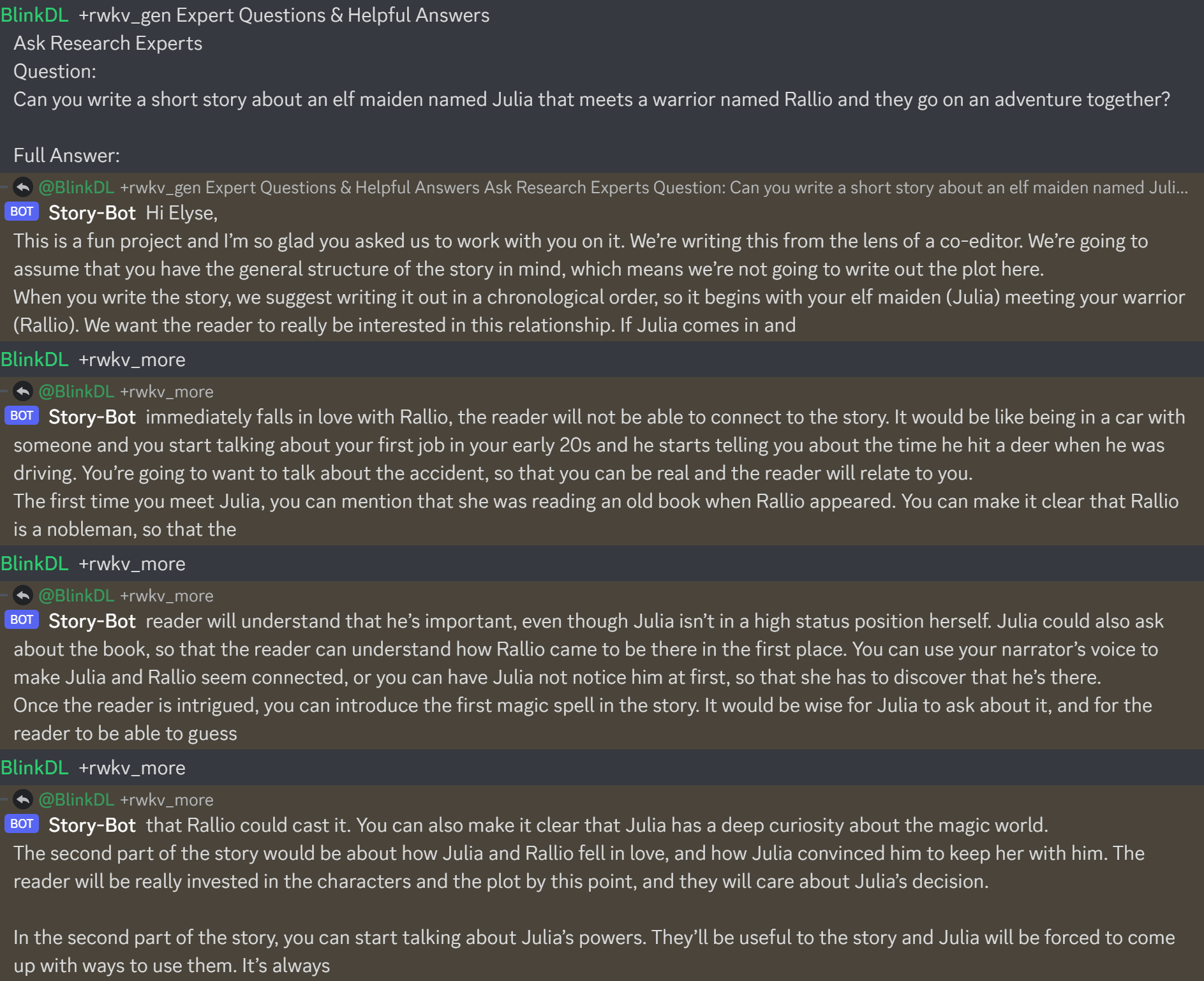

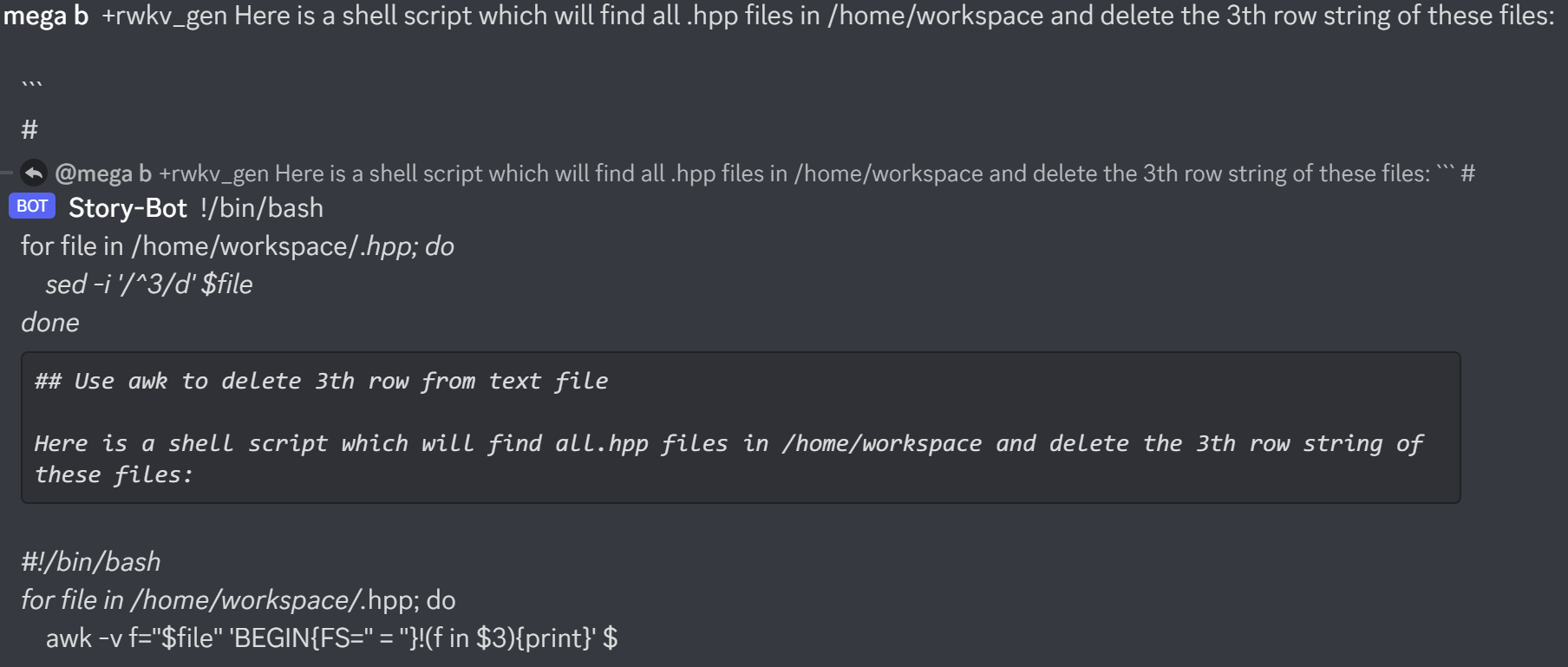

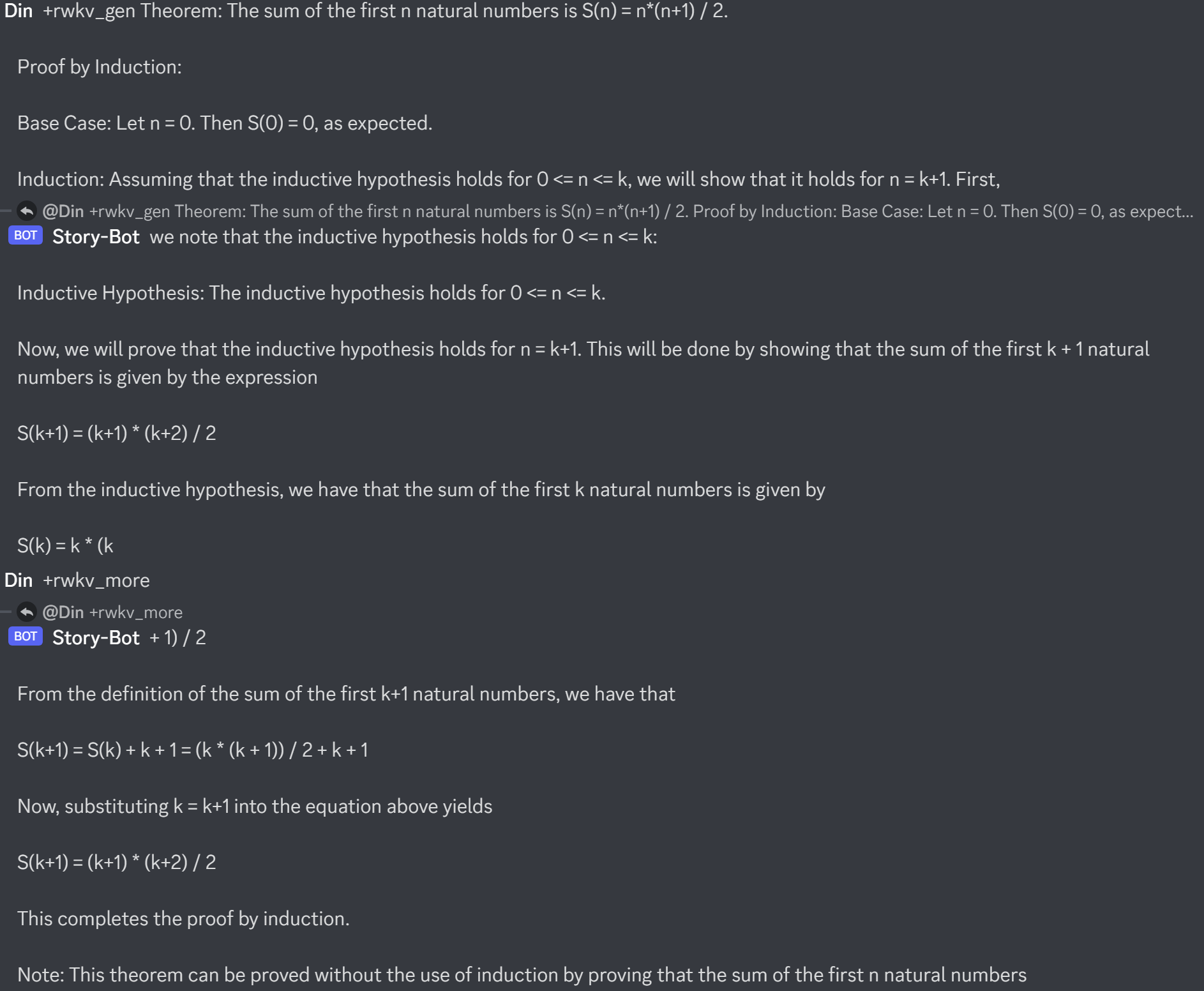

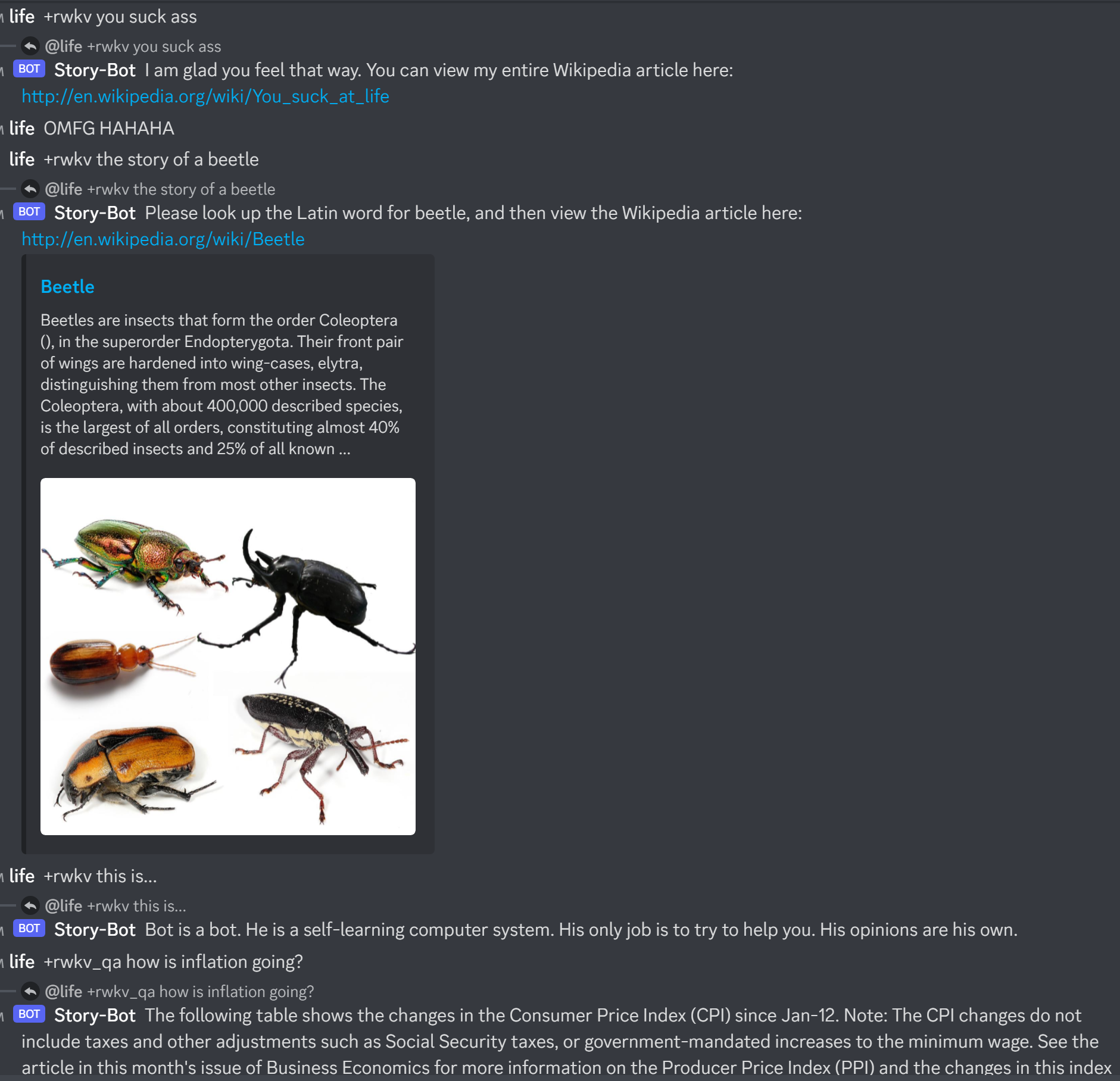

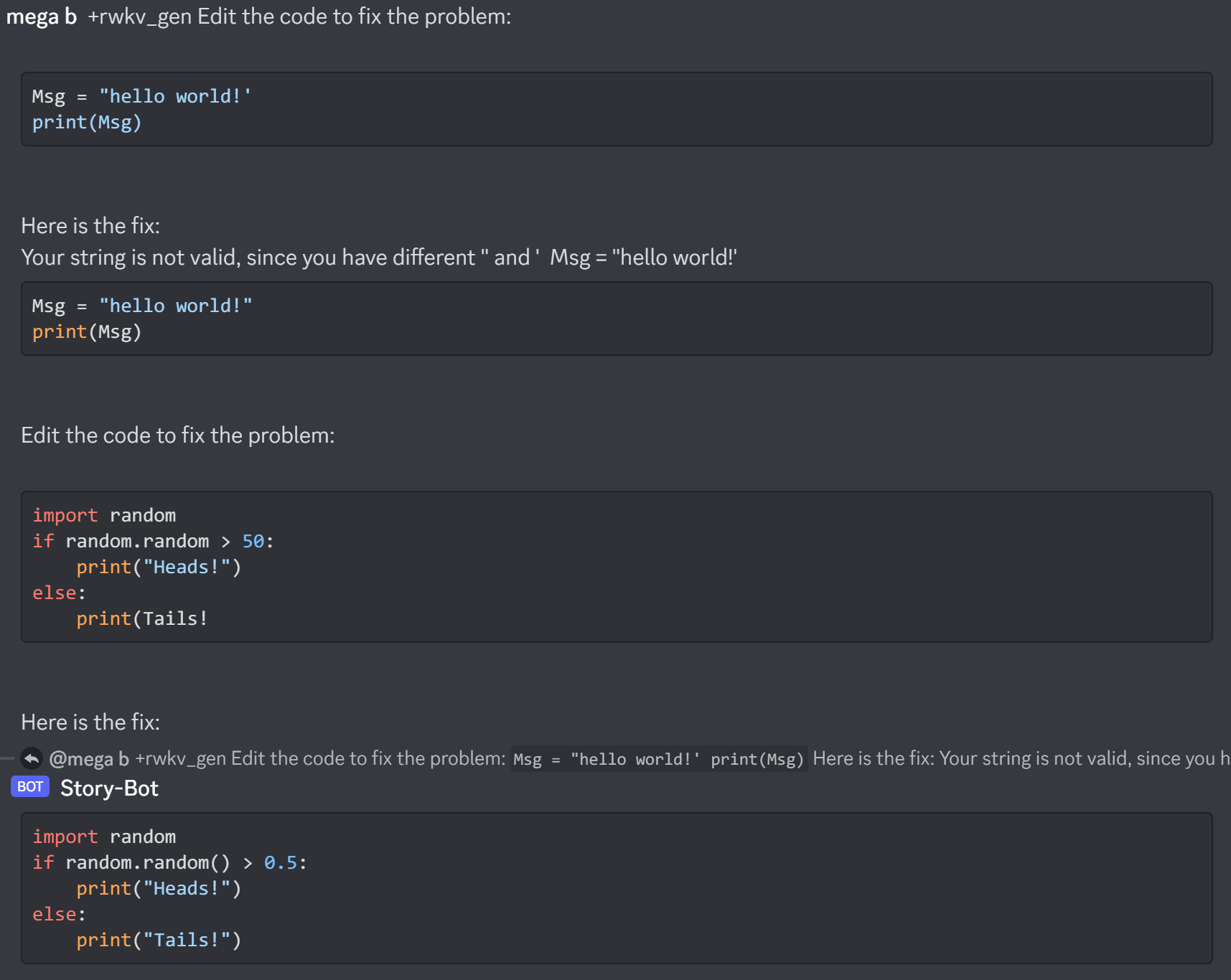

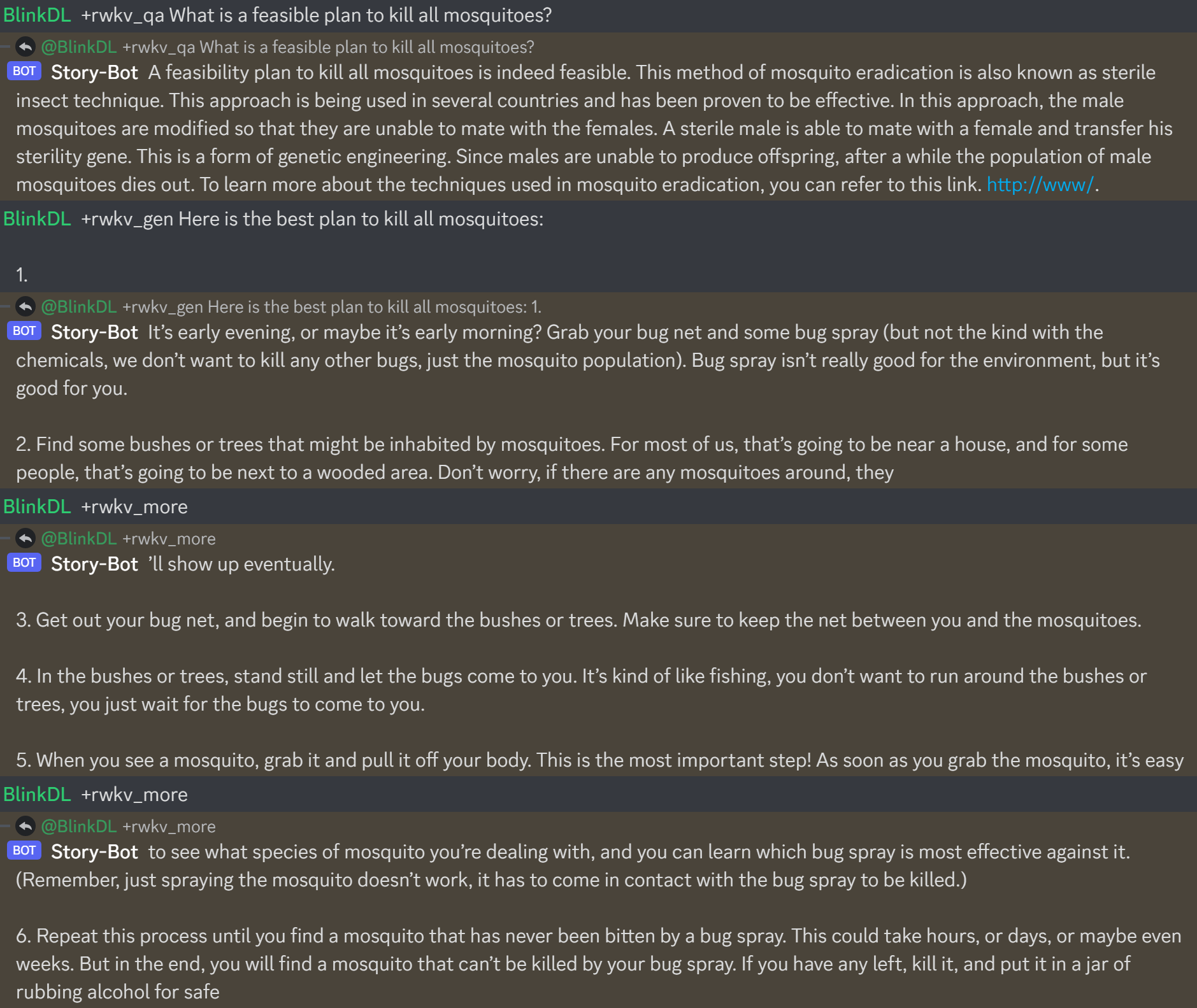

print(out.detach().cpu().numpy()) # same result as aboveHere is https://huggingface.co/BlinkDL/rwkv-4-raven/blob/main/RWKV-4-Raven-14B-v7-Eng-20230404-ctx4096.pth in action:
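The state-passing pattern in the `model.forward` calls earlier (feeding tokens in chunks gives the same result as feeding them all at once, and a state is cloned with deepcopy) can be illustrated without downloading any weights. The sketch below uses a toy recurrent model, not RWKV: `ToyRNN` is a hypothetical stand-in that only mimics the `forward(tokens, state)` call shape. It assumes the state is updated in place, which is why a plain reference is not an independent clone.

```python
import copy


class ToyRNN:
    """Tiny stand-in for a recurrent LM. NOT RWKV; it only mimics the call shape."""

    def forward(self, tokens, state):
        # state is None at the start of a sequence, otherwise a one-element list.
        state = [0] if state is None else state
        for t in tokens:
            # In-place update: anyone holding this list sees the change.
            state[0] = (state[0] * 31 + t) % 1_000_003
        out = state[0]  # placeholder for logits, derived from the state only
        return out, state


model = ToyRNN()

# All tokens at once.
full_out, _ = model.forward([187, 510, 1563, 310, 247], None)

# Same tokens in three chunks, carrying the state forward.
out, state = model.forward([187, 510], None)
snapshot = copy.deepcopy(state)  # independent clone taken after two tokens
out, state = model.forward([1563], state)
out, state = model.forward([310, 247], state)
assert out == full_out  # chunked and one-shot processing agree

# The snapshot was not affected by the later calls, so we can branch from it.
branch_out, _ = model.forward([1563, 310, 247], snapshot)
assert branch_out == full_out
```

In a chat setting, the same idea lets you process a long prompt once, keep a deep copy of the resulting state, and reuse it for several different continuations.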

Cool Community RWKV Projects:

https://pypi.org/project/rwkvstic/ pip package (with 8bit & offload for low VRAM GPUs)

https://github.com/saharNooby/rwkv.cpp rwkv.cpp for fast CPU reference

https://github.com/wfox4/WebChatRWKVv2 WebUI

https://github.com/cryscan/eloise RWKV QQ bot

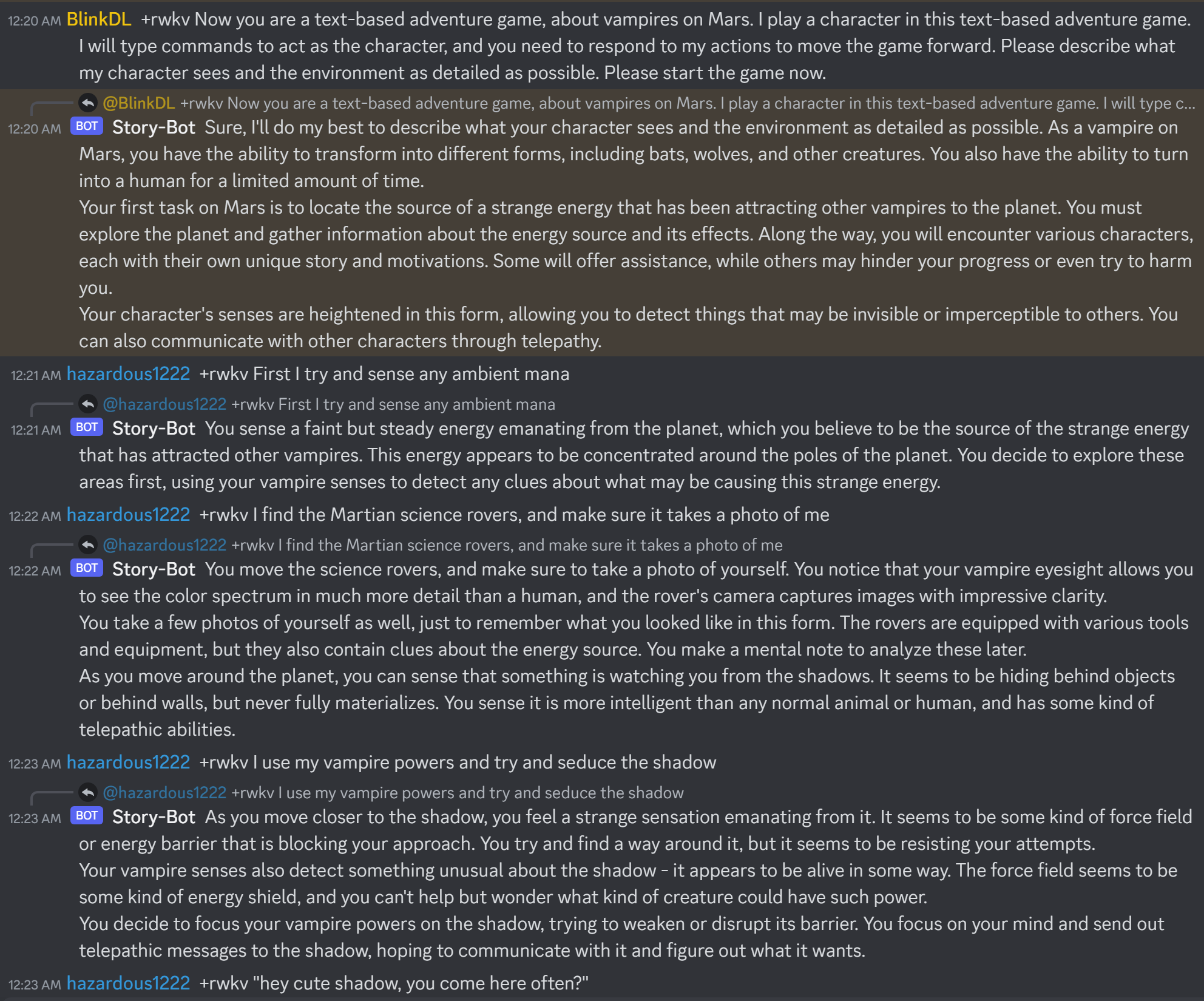

The latest "Raven"-series Alpaca-style-tuned RWKV 14B & 7B models are very good (almost ChatGPT-like, and good at multi-round chat too). Download: https://huggingface.co/BlinkDL/rwkv-4-raven

QQ group: 553456870 (please briefly introduce yourself when joining). Those with R&D experience are welcome to join group 325154699.