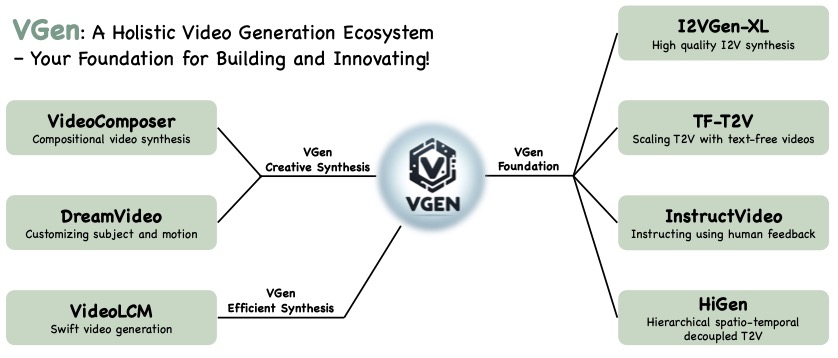

VGen is an open-source video synthesis codebase developed by the Tongyi Lab of Alibaba Group, featuring state-of-the-art video generative models. This repository includes implementations of the following methods:

- I2VGen-XL: High-Quality Image-to-Video Synthesis via Cascaded Diffusion Models

- VideoComposer: Compositional Video Synthesis with Motion Controllability

- Hierarchical Spatio-temporal Decoupling for Text-to-Video Generation

- A Recipe for Scaling up Text-to-Video Generation with Text-free Videos

- InstructVideo: Instructing Video Diffusion Models with Human Feedback

- DreamVideo: Composing Your Dream Videos with Customized Subject and Motion

- VideoLCM: Video Latent Consistency Model

- Modelscope text-to-video technical report

VGen can produce high-quality videos from input text, images, desired motion, desired subjects, and even provided feedback signals. It also offers a variety of commonly used video generation tools, such as visualization, sampling, training, inference, joint training using images and videos, acceleration, and more.

- [2023.12] We release TF-T2V that can scale up existing video generation techniques using text-free videos, significantly enhancing the performance of both Modelscope-T2V and VideoComposer at the same time.

- [2023.12] We updated the codebase to support higher versions of xformers (0.0.22) and torch 2.0+, and removed the dependency on flash_attn.

- [2023.12] We release InstructVideo, which accepts human feedback signals to improve VLDM.

- [2023.12] We release DreamTalk, a diffusion-based expressive talking head generation method.

- [2023.12] We release VideoLCM, a high-efficiency video generation method.

- [2023.12] We release the code and models of I2VGen-XL and ModelScope T2V.

- [2023.12] We release the T2V method HiGen and customizing T2V method DreamVideo.

- [2023.12] We write an introduction document for VGen and compare I2VGen-XL with SVD.

- [2023.11] We release a high-quality I2VGen-XL model; please refer to the Webpage.

- Release the technical papers and webpage of I2VGen-XL

- Release the code and pretrained models that can generate 1280x720 videos

- Release the code and pretrained models of HumanDiff

- Release models optimized specifically for the human body and faces

- Updated version can fully maintain the ID and capture large and accurate motions simultaneously

- Release other methods and the corresponding models

The main features of VGen are as follows:

- Expandability, allowing for easy management of your own experiments.

- Completeness, encompassing all common components for video generation.

- Excellent performance, featuring powerful pre-trained models in multiple tasks.

conda create -n vgen python=3.8

conda activate vgen

pip install torch==1.12.0+cu113 torchvision==0.13.0+cu113 torchaudio==0.12.0 --extra-index-url https://download.pytorch.org/whl/cu113

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

You also need to ensure that your system has installed the ffmpeg command. If it is not installed, you can install it using the following command:

sudo apt-get update && sudo apt-get install ffmpeg libsm6 libxext6 -y
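To see whether ffmpeg is already available before installing it, a quick check from Python can help. This is a minimal sketch using only the standard library; the helper name `has_command` is our own and is not part of VGen.

```python
import shutil


def has_command(name: str) -> bool:
    """Return True if an executable called `name` is found on the PATH."""
    return shutil.which(name) is not None


if __name__ == "__main__":
    if has_command("ffmpeg"):
        print("ffmpeg found at", shutil.which("ffmpeg"))
    else:
        print("ffmpeg not found; install it with the apt-get command above")
```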

We have provided a demo dataset that includes images and videos, along with their lists in data.

Please note that the demo images used here are for testing purposes and were not included in the training.

git clone https://github.com/damo-vilab/i2vgen-xl.git

cd i2vgen-xl

Enabling distributed training is as simple as running the following command:

python train_net.py --cfg configs/t2v_train.yaml

In the t2v_train.yaml configuration file, you can specify the data, adjust the video-to-image ratio using frame_lens, and validate your ideas with different Diffusion settings, and so on.

- Before training, you can download any of our open-source models for initialization. Our codebase supports custom initialization and grad_scale settings, all of which are included in the Pretrain item of the yaml file.

- During training, you can view the saved models and intermediate inference results in the workspace/experiments/t2v_train directory.

After the training is completed, you can perform inference on the model using the following command.

python inference.py --cfg configs/t2v_infer.yaml

Then you can find the videos you generated in the workspace/experiments/test_img_01 directory. For specific configurations such as data, models, seed, etc., please refer to the t2v_infer.yaml file.
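If you want to collect the generated files programmatically, something like the following sketch may be enough. It assumes the outputs are written as `.mp4` files (an assumption on our part; adjust `suffix` if your run produces a different format), and `list_videos` is a hypothetical helper, not part of the codebase.

```python
from pathlib import Path
from typing import List


def list_videos(output_dir: str, suffix: str = ".mp4") -> List[Path]:
    """Return files ending in `suffix` under `output_dir`, newest first."""
    root = Path(output_dir)
    if not root.is_dir():
        # Nothing has been generated yet, or the path is wrong.
        return []
    files = [p for p in root.rglob(f"*{suffix}") if p.is_file()]
    return sorted(files, key=lambda p: p.stat().st_mtime, reverse=True)


if __name__ == "__main__":
    # Directory name taken from the text above.
    for video in list_videos("workspace/experiments/test_img_01"):
        print(video)
```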

If you want to directly load our previously open-sourced Modelscope T2V model, please refer to this link.


(i) Download model and test data:

!pip install modelscope

from modelscope.hub.snapshot_download import snapshot_download

model_dir = snapshot_download('damo/I2VGen-XL', cache_dir='models/', revision='v1.0.0')

Alternatively, you can download it from Hugging Face (https://huggingface.co/damo-vilab/i2vgen-xl):

# Make sure you have git-lfs installed (https://git-lfs.com)

git lfs install

git clone https://huggingface.co/damo-vilab/i2vgen-xl

(ii) Run the following command:

python inference.py --cfg configs/i2vgen_xl_infer.yaml

Alternatively, you can override configuration values on the command line:

python inference.py --cfg configs/i2vgen_xl_infer.yaml test_list_path data/test_list_for_i2vgen.txt test_model models/i2vgen_xl_00854500.pth

test_list_path is the file listing each input image path and its corresponding caption; see the demo file data/test_list_for_i2vgen.txt for the specific format and suggestions. test_model is the path of the model checkpoint to load. In a few minutes, you can retrieve the generated high-definition video from the workspace/experiments/test_list_for_i2vgen directory. At present, the model performs poorly on anime images and images with a black background due to the lack of relevant training data. We are working to improve it.
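When running inference over several test lists or checkpoints, it can be convenient to assemble the command in a script. The sketch below only rebuilds the command line shown above, with key/value overrides appended after `--cfg`; `build_inference_command` is a hypothetical helper of ours, and the paths are the ones from the example command.

```python
import shlex
from typing import Dict, List


def build_inference_command(cfg: str, overrides: Dict[str, str]) -> List[str]:
    """Build the argument list for inference.py with key/value overrides."""
    cmd = ["python", "inference.py", "--cfg", cfg]
    for key, value in overrides.items():
        # Overrides are passed as alternating "key value" pairs.
        cmd.extend([key, str(value)])
    return cmd


cmd = build_inference_command(
    "configs/i2vgen_xl_infer.yaml",
    {
        "test_list_path": "data/test_list_for_i2vgen.txt",
        "test_model": "models/i2vgen_xl_00854500.pth",
    },
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root.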

Due to the compression of our video quality in GIF format, please click 'HERE' below to view the original video.

[Demo table: four input images, each paired with a link to the generated video.]

In preparation.

Our codebase supports essentially all the commonly used components in video generation. You can manage your experiments flexibly by adding classes to the corresponding registries, including ENGINE, MODEL, DATASETS, EMBEDDER, AUTO_ENCODER, VISUAL, DIFFUSION, and PRETRAIN, and combine them with any of our open-source algorithms according to your needs. If you have any questions, feel free to give us your feedback at any time.
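For readers unfamiliar with registry-based codebases, the general pattern looks roughly like the sketch below. This is an illustration of the idea only, not VGen's actual implementation: the `Registry` class, its `register` and `build` methods, and the `TinyUNet` class are all hypothetical names chosen for this example.

```python
class Registry:
    """Map class names to classes so a config can select them by name."""

    def __init__(self, name):
        self.name = name
        self._entries = {}

    def register(self, cls):
        """Class decorator: store `cls` under its own class name."""
        if cls.__name__ in self._entries:
            raise KeyError(f"{cls.__name__} is already registered in {self.name}")
        self._entries[cls.__name__] = cls
        return cls

    def build(self, cfg):
        """Instantiate the class named by cfg['type'] with the remaining keys."""
        kwargs = dict(cfg)  # copy so the caller's config is not modified
        cls = self._entries[kwargs.pop("type")]
        return cls(**kwargs)


MODEL = Registry("MODEL")


@MODEL.register
class TinyUNet:
    """Placeholder model used only to demonstrate registration."""

    def __init__(self, in_dim=4):
        self.in_dim = in_dim


model = MODEL.build({"type": "TinyUNet", "in_dim": 8})
print(type(model).__name__, model.in_dim)
```

The benefit of this design is that a new component becomes selectable from a configuration file as soon as it is registered, without touching the training or inference entry points.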

If this repo is useful to you, please cite our corresponding technical paper.

@article{2023videocomposer,

title={VideoComposer: Compositional Video Synthesis with Motion Controllability},

author={Wang, Xiang and Yuan, Hangjie and Zhang, Shiwei and Chen, Dayou and Wang, Jiuniu and Zhang, Yingya and Shen, Yujun and Zhao, Deli and Zhou, Jingren},

journal={arXiv preprint arXiv:2306.02018},

year={2023}

}

@article{2023i2vgenxl,

title={I2VGen-XL: High-Quality Image-to-Video Synthesis via Cascaded Diffusion Models},

author={Zhang, Shiwei and Wang, Jiayu and Zhang, Yingya and Zhao, Kang and Yuan, Hangjie and Qing, Zhiwu and Wang, Xiang and Zhao, Deli and Zhou, Jingren},

journal={arXiv preprint arXiv:2311.04145},

year={2023}

}

@article{wang2023modelscope,

title={Modelscope text-to-video technical report},

author={Wang, Jiuniu and Yuan, Hangjie and Chen, Dayou and Zhang, Yingya and Wang, Xiang and Zhang, Shiwei},

journal={arXiv preprint arXiv:2308.06571},

year={2023}

}

@article{dreamvideo,

title={DreamVideo: Composing Your Dream Videos with Customized Subject and Motion},

author={Wei, Yujie and Zhang, Shiwei and Qing, Zhiwu and Yuan, Hangjie and Liu, Zhiheng and Liu, Yu and Zhang, Yingya and Zhou, Jingren and Shan, Hongming},

journal={arXiv preprint arXiv:2312.04433},

year={2023}

}

@article{qing2023higen,

title={Hierarchical Spatio-temporal Decoupling for Text-to-Video Generation},

author={Qing, Zhiwu and Zhang, Shiwei and Wang, Jiayu and Wang, Xiang and Wei, Yujie and Zhang, Yingya and Gao, Changxin and Sang, Nong},

journal={arXiv preprint arXiv:2312.04483},

year={2023}

}

@article{wang2023videolcm,

title={VideoLCM: Video Latent Consistency Model},

author={Wang, Xiang and Zhang, Shiwei and Zhang, Han and Liu, Yu and Zhang, Yingya and Gao, Changxin and Sang, Nong},

journal={arXiv preprint arXiv:2312.09109},

year={2023}

}

@article{ma2023dreamtalk,

title={DreamTalk: When Expressive Talking Head Generation Meets Diffusion Probabilistic Models},

author={Ma, Yifeng and Zhang, Shiwei and Wang, Jiayu and Wang, Xiang and Zhang, Yingya and Deng, Zhidong},

journal={arXiv preprint arXiv:2312.09767},

year={2023}

}

@article{2023InstructVideo,

title={InstructVideo: Instructing Video Diffusion Models with Human Feedback},

author={Yuan, Hangjie and Zhang, Shiwei and Wang, Xiang and Wei, Yujie and Feng, Tao and Pan, Yining and Zhang, Yingya and Liu, Ziwei and Albanie, Samuel and Ni, Dong},

journal={arXiv preprint arXiv:2312.12490},

year={2023}

}

@article{TFT2V,

title={A Recipe for Scaling up Text-to-Video Generation with Text-free Videos},

author={Wang, Xiang and Zhang, Shiwei and Yuan, Hangjie and Qing, Zhiwu and Gong, Biao and Zhang, Yingya and Shen, Yujun and Gao, Changxin and Sang, Nong},

journal={arXiv preprint arXiv:2312.15770},

year={2023}

}

We would like to express our gratitude for the contributions of several previous works to the development of VGen. These include, but are not limited to, Composer, ModelScopeT2V, Stable Diffusion, OpenCLIP, WebVid-10M, LAION-400M, Pidinet and MiDaS. We are committed to building upon these foundations in a way that respects their original contributions.

This open-source model is trained using the WebVid-10M and LAION-400M datasets and is intended for RESEARCH/NON-COMMERCIAL USE ONLY.