Date Added: September 19, 2023 2:00 PM

Create a new Vite project:

npm create vite@latest

You'll be asked for:

- App name

- Which framework to use, such as React, Angular, or Vue. Choose React.

- TypeScript or JavaScript. Choose whichever you prefer.

Vite Project Initialization

Now switch to the project directory:

cd [your project name]

The next step is required to map the port between the Docker container and your React app.

Now replace this code snippet in vite.config.js (or vite.config.ts):

export default defineConfig({
  plugins: [react()],
});

to

export default defineConfig({
  plugins: [react()],
  server: {
    watch: {
      usePolling: true,
    },
    host: true, // needed for the Docker container port mapping to work
    strictPort: true,
    port: 5173, // you can replace this port with any port
  },
});

Create a file called Dockerfile in the root of your project directory.

Copy these commands to your Dockerfile:

# Stage 0, "build-stage", based on Node.js, to build and compile the frontend
FROM tiangolo/node-frontend:10 as build-stage
WORKDIR /app
COPY package*.json /app/
RUN npm install
COPY ./ /app/
RUN npm run build

# Stage 1, based on Nginx, to have only the compiled app, ready for production with Nginx
FROM nginx:1.15
# Vite writes the production build to dist/ by default
COPY --from=build-stage /app/dist/ /usr/share/nginx/html
# Copy the default nginx.conf provided by tiangolo/node-frontend
COPY --from=build-stage /nginx.conf /etc/nginx/conf.d/default.conf

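The explanation for these commands follows. The snippets below use slightly different names from the Dockerfile above (a build stage called builder and a working directory of /react-nginx-docker), but each step plays the same role: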

- This tells Docker to start from the base image node:10-alpine, an Alpine-based variant of the official Node.js image, and to name this stage builder. You won’t have to install or configure Node.js inside the Linux container; the image already provides it. Note that current Vite releases need a newer Node.js than version 10, so pick a more recent tag in practice:

FROM node:10-alpine as builder

- Our working directory will be /react-nginx-docker. This Docker "instruction" creates that directory and switches into it; all the following steps run in that directory:

WORKDIR /react-nginx-docker

- Now, this instruction copies all the files that start with package and end with .json from your source into the container. The package*.json pattern matches the package.json file and also package-lock.json if you have one, but it won't fail if you don't. We copy just that file (or those 2 files) before the rest of the source code because we want to install everything the first time, but not every time we change our source code. The next time we change our code and build the image, Docker will use the cached "layers" with everything installed (because package.json hasn't changed) and will only compile our source code:

COPY package*.json ./

- Install all the dependencies. This step stays cached until we change the package.json file (that is, our dependencies), so it won't take long each time we iterate on our source code and test (or deploy) the production Docker image. The full install only runs the first time and when we update the dependencies (installed packages):

RUN npm install

- Now, after installing all the dependencies, we can copy our source code. This section will not be cached that much, because we’ll be changing our source code constantly, but we already took advantage of Docker caching for all the package install steps in the commands above. So, let’s copy our source code:

COPY . .

- Then build the React app with:

RUN npm run build

…that builds our app into the output directory, which is ./dist/ by default with Vite. Inside the container, that is the dist directory under the working directory.

- In the same Dockerfile, we start another section (another "stage"), as if 2 Dockerfiles were concatenated. That's Docker multi-stage building. So, let's start with an official Nginx base image for this "stage":

FROM nginx:alpine

…the Alpine variant shown here is a good choice if you are concerned about disk space (and you don’t have any other image that shares the same base layers), or if, for some reason, you are a fan of Alpine Linux. Otherwise a default Debian-based tag, such as nginx:1.15 in the Dockerfile above, works just as well.

- Here’s the Docker multi-stage trick. This is a normal COPY, but it has a --from=builder. That builder refers to the name we specified above with as builder. Here, although we are in an Nginx image, starting from scratch, we can copy files from a previous stage. So, we can copy the freshly compiled version of our app. That compiled version is based on the latest source code and, for now, lives only in the previous Docker "stage". We copy it to the Nginx directory as static files (dist is Vite's default output directory):

COPY --from=builder /react-nginx-docker/dist /usr/share/nginx/html

- This configuration file directs everything to index.html, so that if you use a router like React Router it can take care of its routes, even if your users type the URL directly in the browser:

COPY ./.nginx/nginx.conf /etc/nginx/nginx.conf

…that’s it for the Dockerfile! Doing that with scripts or any other method would be a lot more cumbersome.
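The .nginx/nginx.conf file copied in the last step is not shown in this post. As a minimal sketch only, assuming Nginx listens on port 80 and serves the static files from /usr/share/nginx/html, the following creates a configuration that falls back to index.html for unknown paths. Because the Dockerfile copies it over the main /etc/nginx/nginx.conf, the sketch includes the top-level events and http blocks.

```shell
# Create the directory the Dockerfile copies from.
mkdir -p .nginx

# Write a minimal main Nginx config (an illustrative sketch, not the author's file).
# The quoted 'EOF' keeps the shell from expanding $uri.
cat > .nginx/nginx.conf <<'EOF'
events {}

http {
  include /etc/nginx/mime.types;

  server {
    listen 80;
    root /usr/share/nginx/html;
    index index.html;

    # Serve the requested file if it exists, otherwise hand the
    # route to the single-page app via index.html.
    location / {
      try_files $uri $uri/ /index.html;
    }
  }
}
EOF
```

The try_files line is what lets a client-side router handle a URL that is typed directly into the browser.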

Now we can build our image. Doing so compiles everything and creates an Nginx image ready to serve our app.

- Build your image and tag it with a name:

docker build -t react-nginx-docker .

To check that your new Docker image is working, you can start a container based on it and see the results.

- To test your image, start a container based on it:

docker run -p 80:80 react-nginx-docker

…you won’t see any logs, just your terminal hanging there.

- Open your browser at http://localhost:[port you mapped in the docker run command]. With the -p 80:80 mapping above that is simply http://localhost; if you map another host port, for example -p 8080:80, open <http://localhost:8080> instead.

Date Added: September 19, 2023 2:01 PM

Amazon Elastic Container Registry (ECR) is a fully-managed Docker container registry that makes it easy for developers to store, manage, and deploy Docker container images. Amazon ECR is integrated with Amazon Elastic Container Service (ECS), simplifying your development to production workflow.

Amazon ECS works with any Docker registry, such as Docker Hub. In this post, however, we use Amazon ECR to store our Docker images. Once you have set up your AWS account and created an IAM user with administrator access, the first thing you need to do is create a Docker repository.

You can create your first repository either from the AWS console or with the AWS CLI.

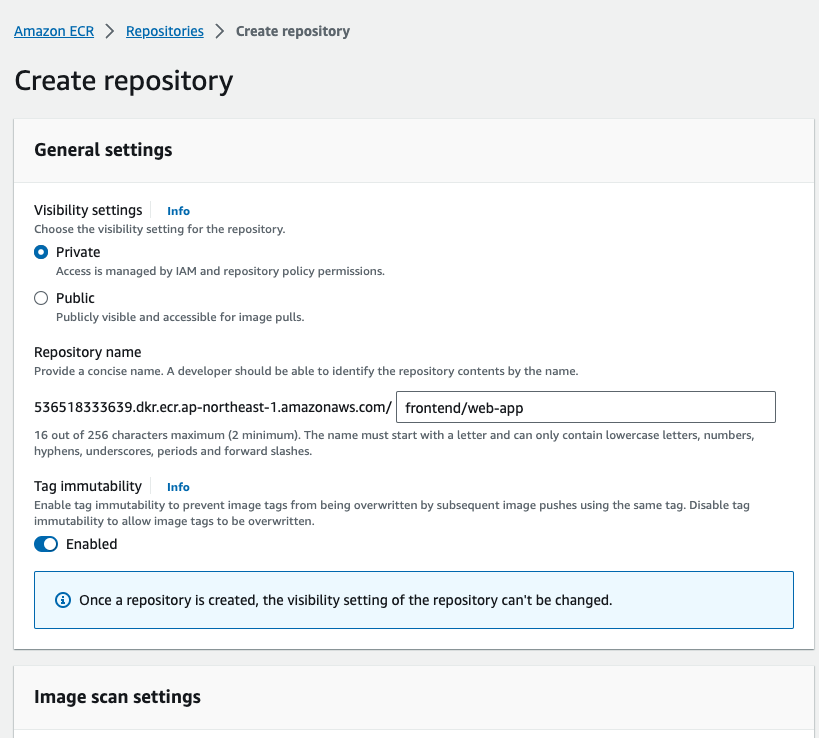

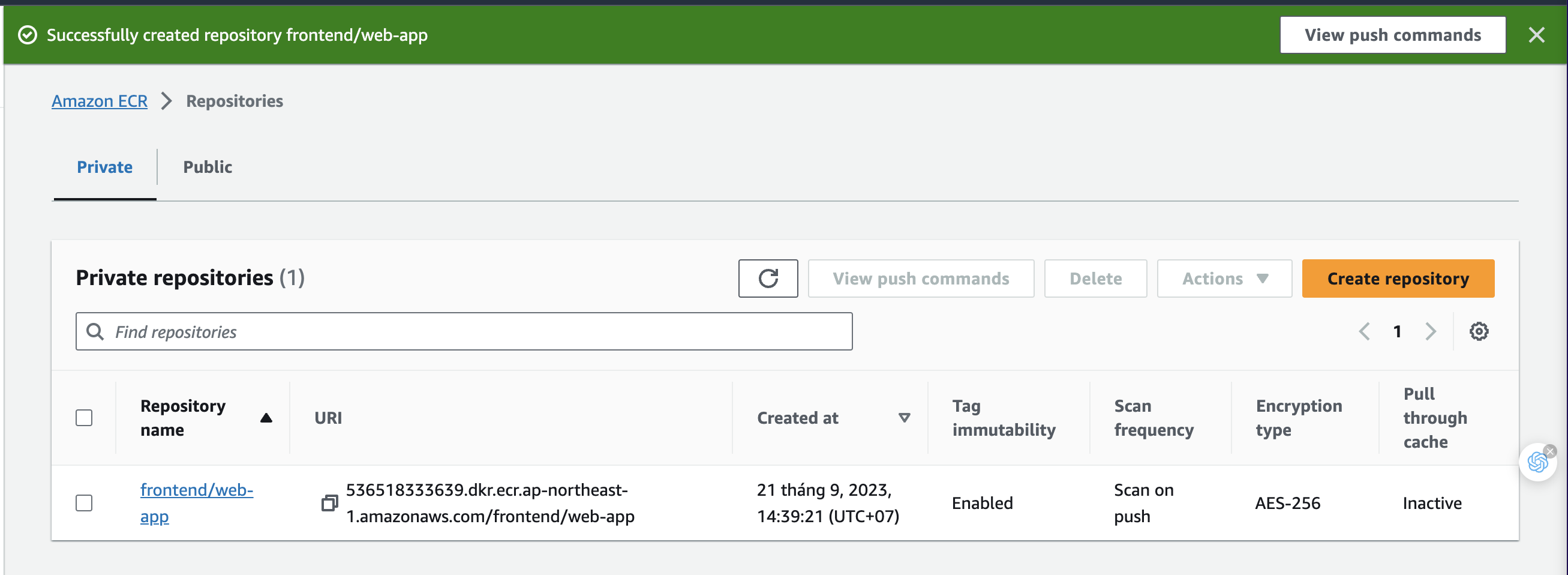

Creating a repository with the AWS console is straightforward; all you need to provide is a name.

creating repository

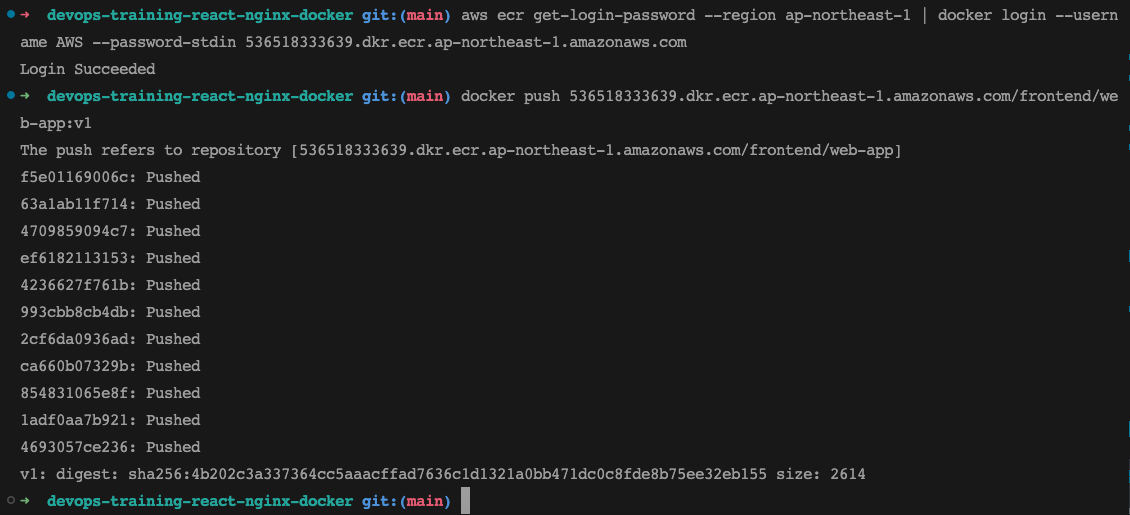
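For the AWS CLI route mentioned above, a minimal sketch might look like the following. It assumes the AWS CLI is installed and configured, and it reuses the region and repository name that appear in the tag and push commands later in this post. The ensure_repo function name is a hypothetical helper introduced here for illustration.

```shell
REGION="ap-northeast-1"        # region used throughout this post
REPO_NAME="frontend/web-app"   # repository name from the tag/push commands

# Hypothetical helper: create the repository only if it does not exist yet.
ensure_repo() {
  aws ecr describe-repositories --repository-names "$REPO_NAME" --region "$REGION" >/dev/null 2>&1 \
    || aws ecr create-repository --repository-name "$REPO_NAME" --region "$REGION"
}
```

Calling ensure_repo prints the new repository's details, including its URI, when the repository has to be created.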

Retrieve an authentication token and authenticate your Docker client to your registry:

aws ecr get-login-password --region ap-northeast-1 | docker login --username AWS --password-stdin 536518333639.dkr.ecr.ap-northeast-1.amazonaws.com

You created a Docker image on your local machine earlier. It’s time to tag that image with the repository URI shown in the image above.

docker tag react-nginx-docker:latest 536518333639.dkr.ecr.ap-northeast-1.amazonaws.com/frontend/web-app:v1

Once the image is tagged, push it to your repository.

# list the images
docker images

# push the image
docker push 536518333639.dkr.ecr.ap-northeast-1.amazonaws.com/frontend/web-app:v1

Pushing Docker image

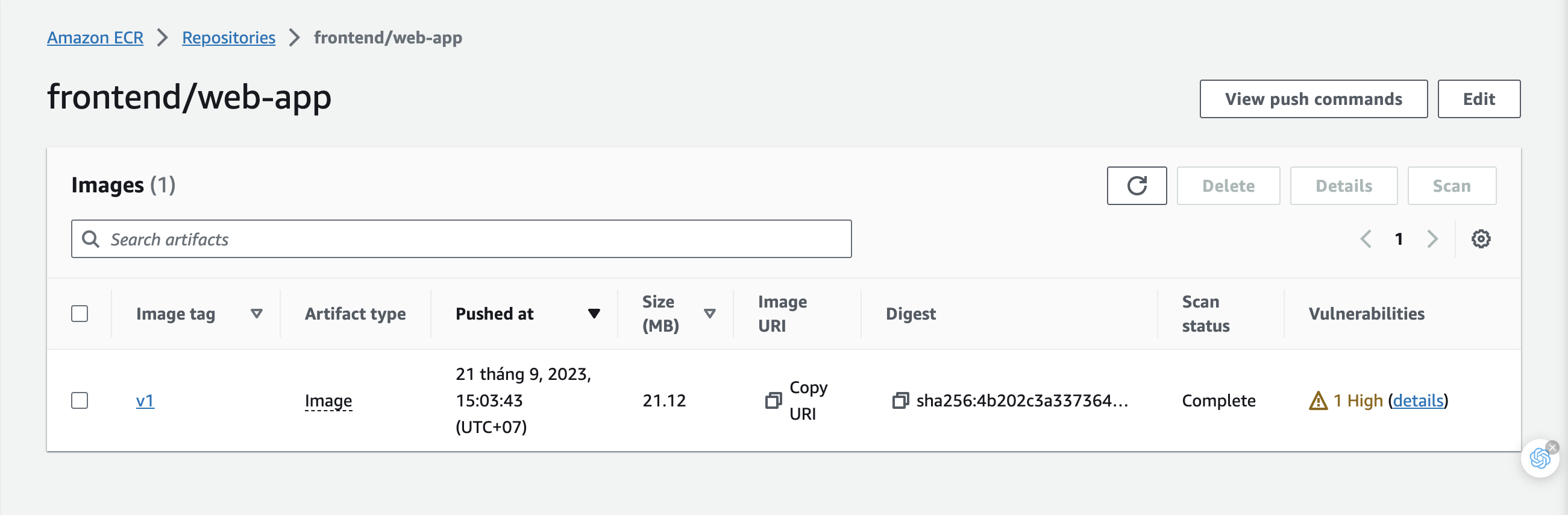

Docker image with tag v1
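The login, tag, and push commands above all repeat the same account ID, region, and repository name. As a rough sketch, those parts can be kept in variables and combined by small helper functions. The function names below are hypothetical, and the local image name assumes the image was built as react-nginx-docker.

```shell
ACCOUNT_ID="536518333639"                  # account ID as it appears in the commands above
REGION="ap-northeast-1"
REPO_NAME="frontend/web-app"
LOCAL_IMAGE="react-nginx-docker:latest"    # assumption: the name given to docker build

# Build the registry host and the full image URI from their parts.
ecr_registry() { printf '%s.dkr.ecr.%s.amazonaws.com' "$1" "$2"; }
ecr_image_uri() { printf '%s/%s:%s' "$(ecr_registry "$1" "$2")" "$3" "$4"; }

# Log in, tag the local image, and push it under the given tag (for example v1).
push_image() {
  local tag="$1" registry uri
  registry="$(ecr_registry "$ACCOUNT_ID" "$REGION")"
  uri="$(ecr_image_uri "$ACCOUNT_ID" "$REGION" "$REPO_NAME" "$tag")"
  aws ecr get-login-password --region "$REGION" \
    | docker login --username AWS --password-stdin "$registry" &&
  docker tag "$LOCAL_IMAGE" "$uri" &&
  docker push "$uri"
}
```

Running push_image v1 then performs the same three steps as the individual commands, and a later release only needs a different tag.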

Getting started with AWS EKS is easy; all you need to do is follow these steps:

- Create an AWS EKS cluster with the AWS console, an SDK, or the AWS CLI.

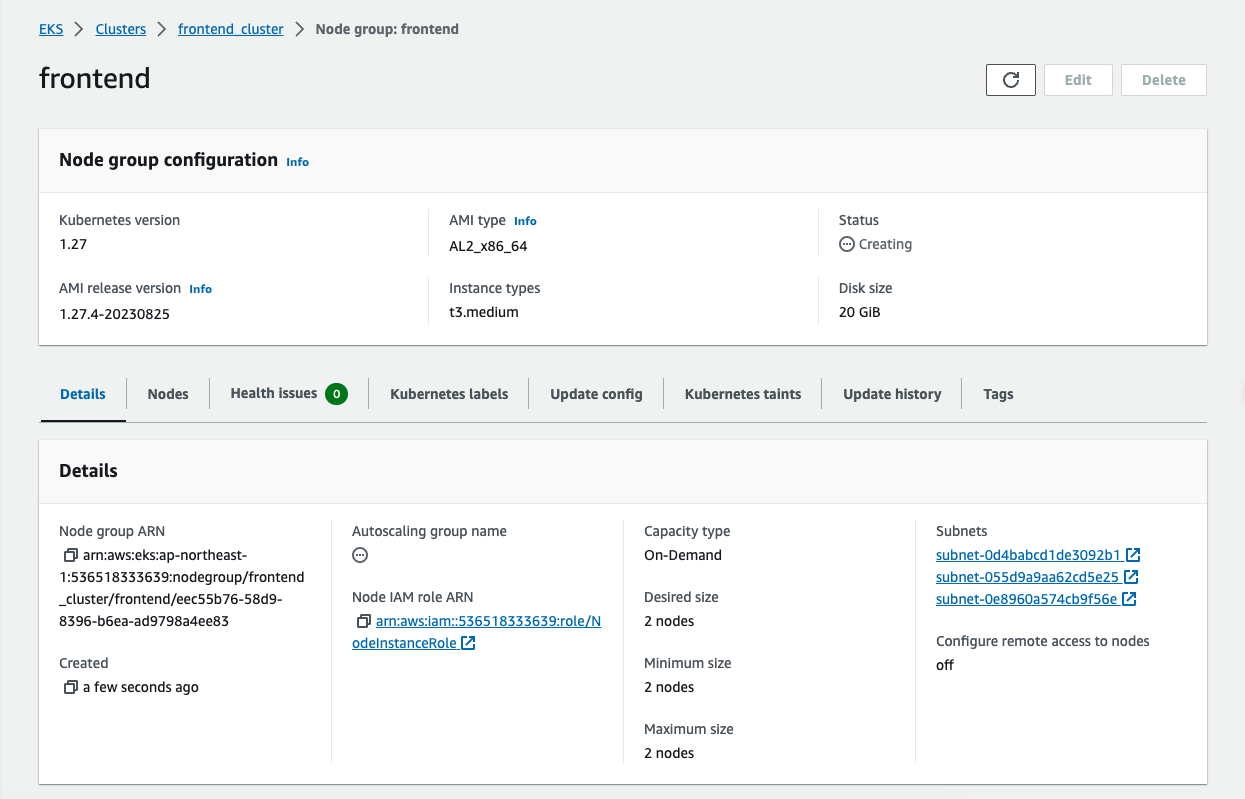

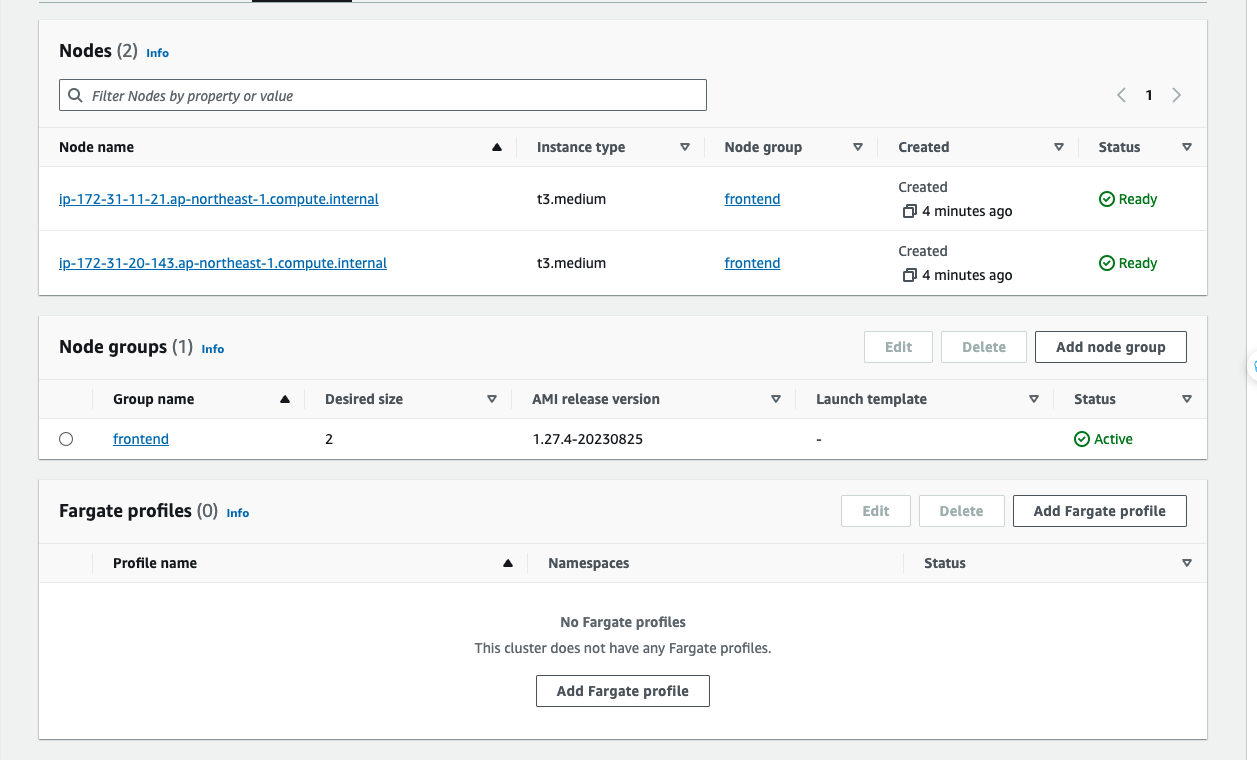

- Create a worker node group that registers with EKS Cluster

- When your cluster is ready, you can configure kubectl to communicate with your cluster.

- Deploy and manage your applications on the cluster

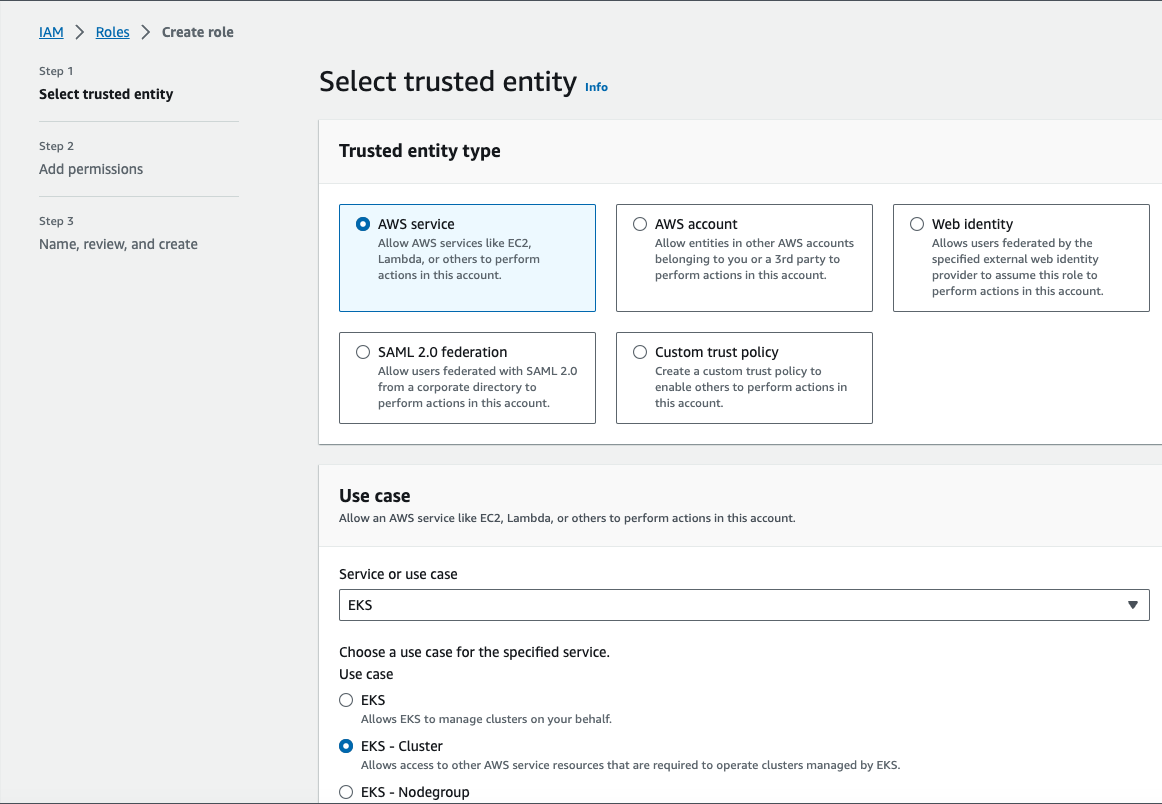

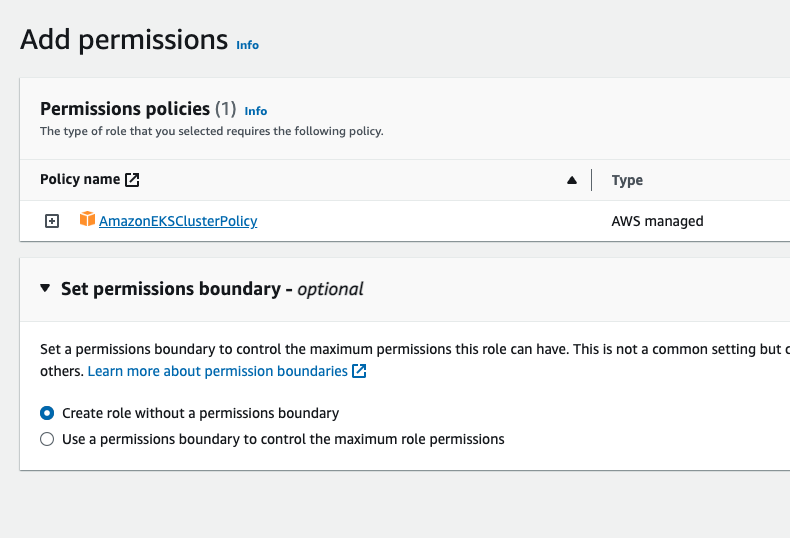

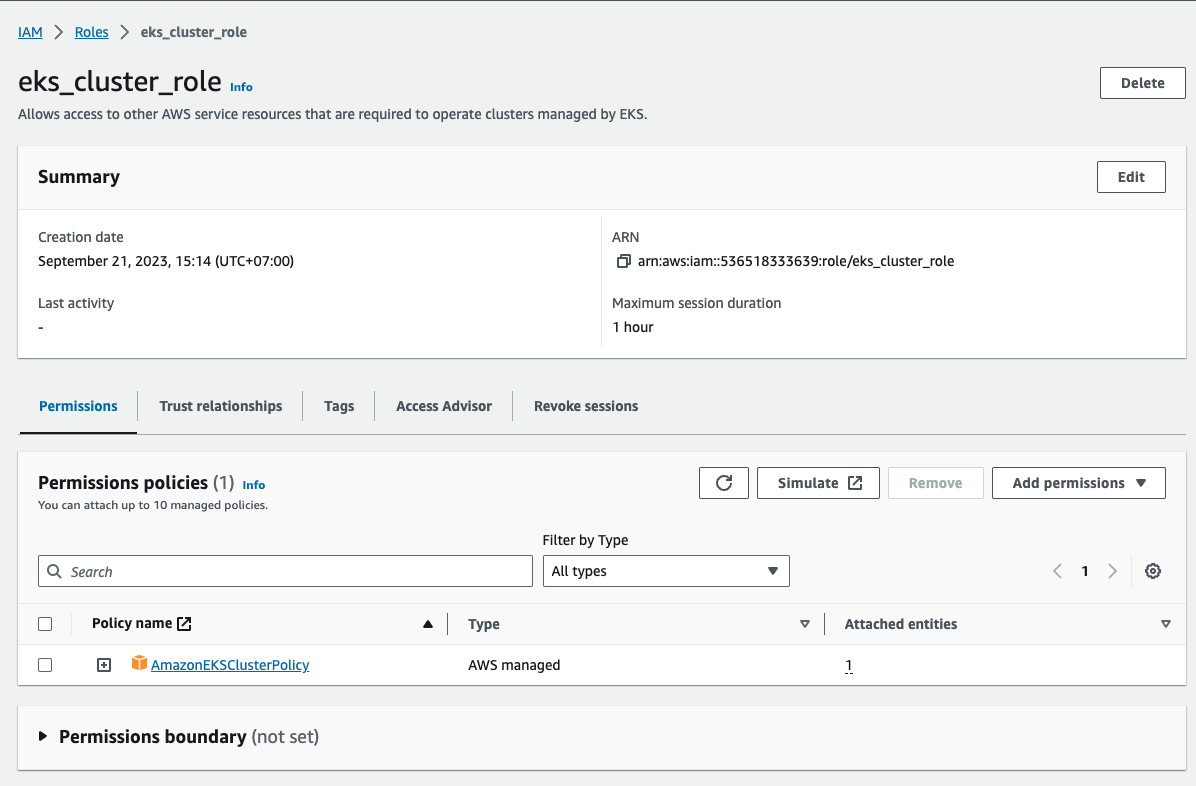

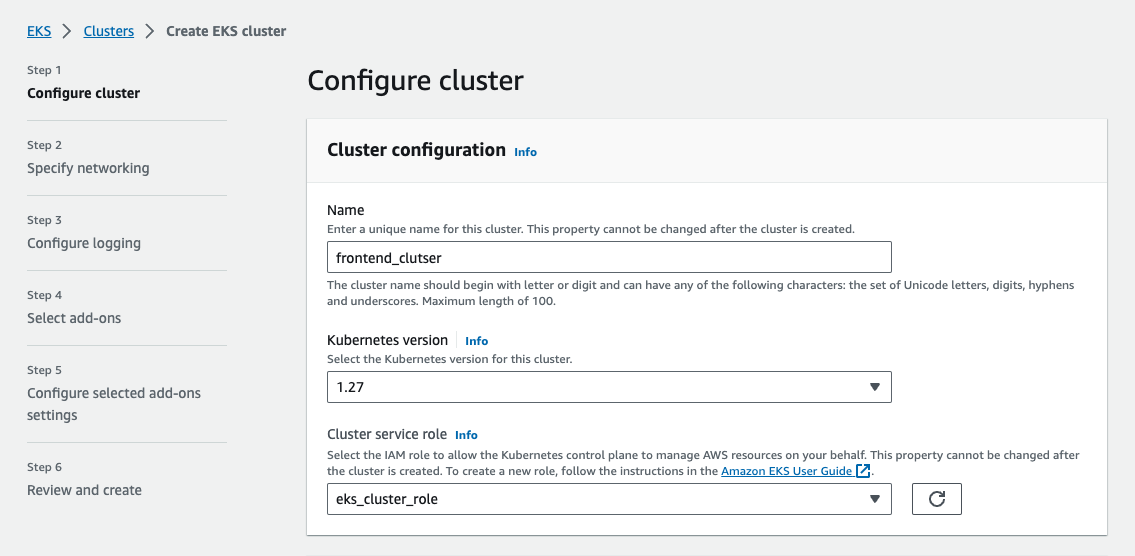

Let’s create a cluster by following this guide. Make sure you have created a role that allows Amazon EKS and the Kubernetes control plane to manage AWS resources on your behalf. I created a role called eks_cluster_role. Here is a link to create a cluster role.

Let’s create a cluster by giving the below information.

It takes some time for the cluster to get created and it should be in the active state once it is created.

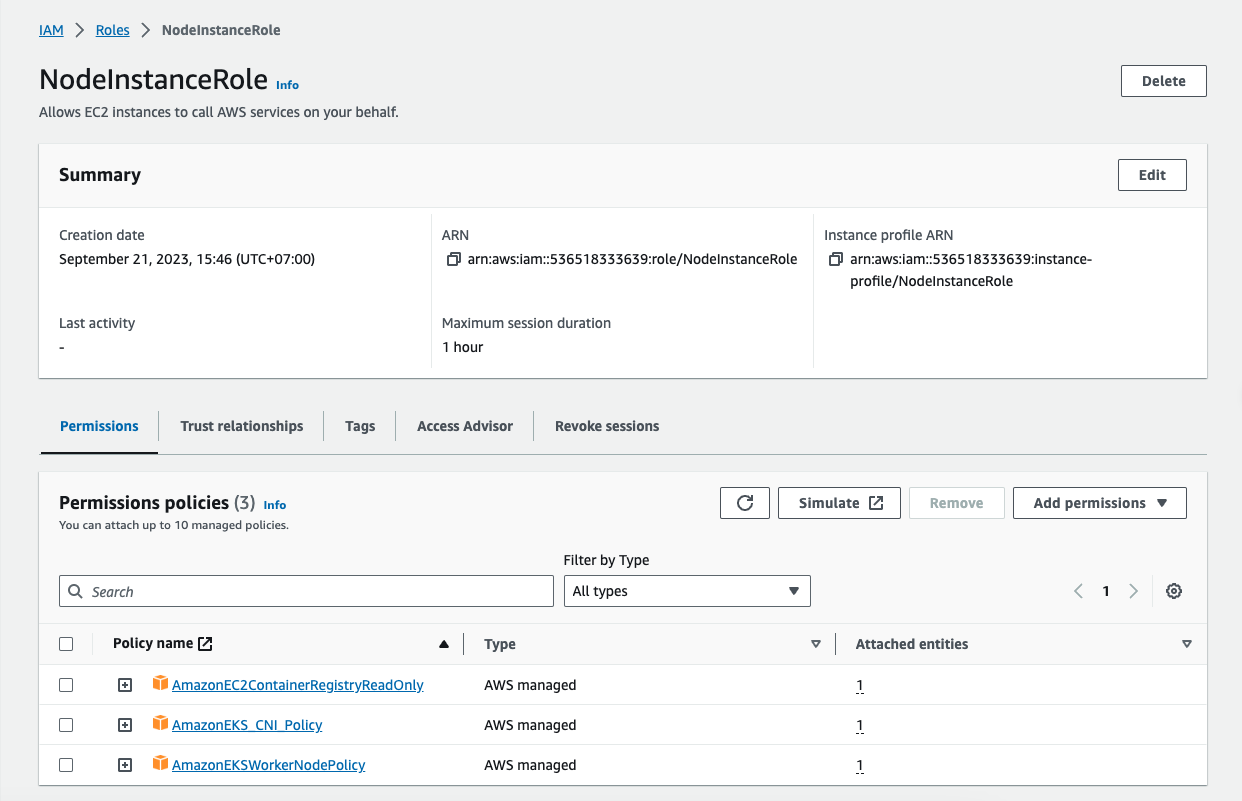

It’s time to create nodes. Before you do that, you have to create a role called NodeInstanceRole (EC2). Follow this guide to create one.

Follow this guide to create a node group after the role is created.

- We need to install kubectl on our machine. Follow this guide to install it for your OS.

- The next thing we need to do is install aws-iam-authenticator. Follow this guide. We need it to authenticate to the cluster, and it uses the same user that the AWS CLI is authenticated as.

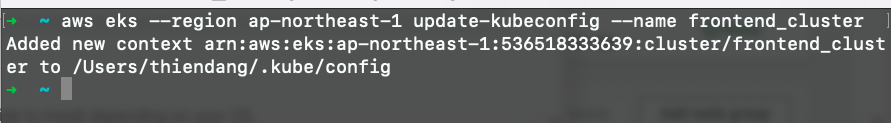

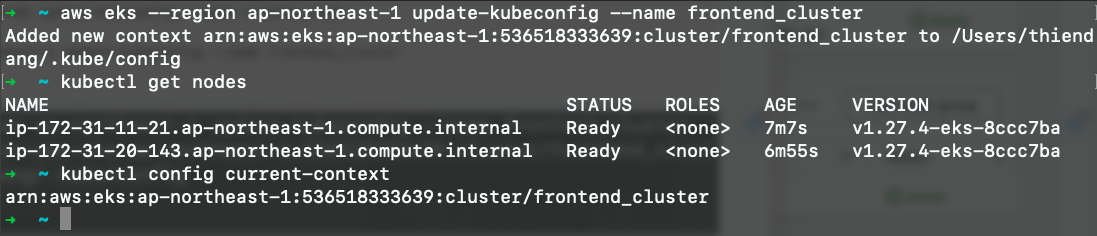

- Use the AWS CLI update-kubeconfig command to create or update the kubeconfig for your cluster. Here region-code is ap-northeast-1 and cluster_name is frontend_cluster.

aws eks --region region-code update-kubeconfig --name cluster_name

# like this
aws eks --region ap-northeast-1 update-kubeconfig --name frontend_cluster

You can check with these commands.

# get the nodes
kubectl get nodes

# get the current context
kubectl config current-context

Now we have configured kubectl to use AWS EKS from our own machine. Let’s create deployment and service objects and use the image from AWS ECR. Here is the manifest file which contains these objects.

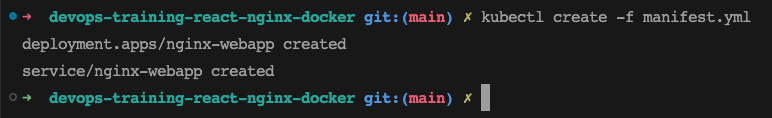
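The manifest appears above only as a screenshot, so its exact contents are not reproduced here. As a rough sketch of what such a file might contain, the following writes a manifest.yml that points at the image pushed earlier. The name web-app, the replica count of two, the container port 80, and the LoadBalancer Service type are all assumptions made for illustration.

```shell
# Write an illustrative manifest with one Deployment and one Service.
# All names and counts here are assumptions, not the author's values.
cat > manifest.yml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          # Image URI from the docker push step
          image: 536518333639.dkr.ecr.ap-northeast-1.amazonaws.com/frontend/web-app:v1
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web-app
spec:
  # LoadBalancer asks AWS to provision a load balancer for the Service
  type: LoadBalancer
  selector:
    app: web-app
  ports:
    - port: 80
      targetPort: 80
EOF
```

The Service selects pods by the app: web-app label, so that label has to match the one in the Deployment's pod template.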

From the root folder, use this command to create the objects:

kubectl create -f manifest.yml

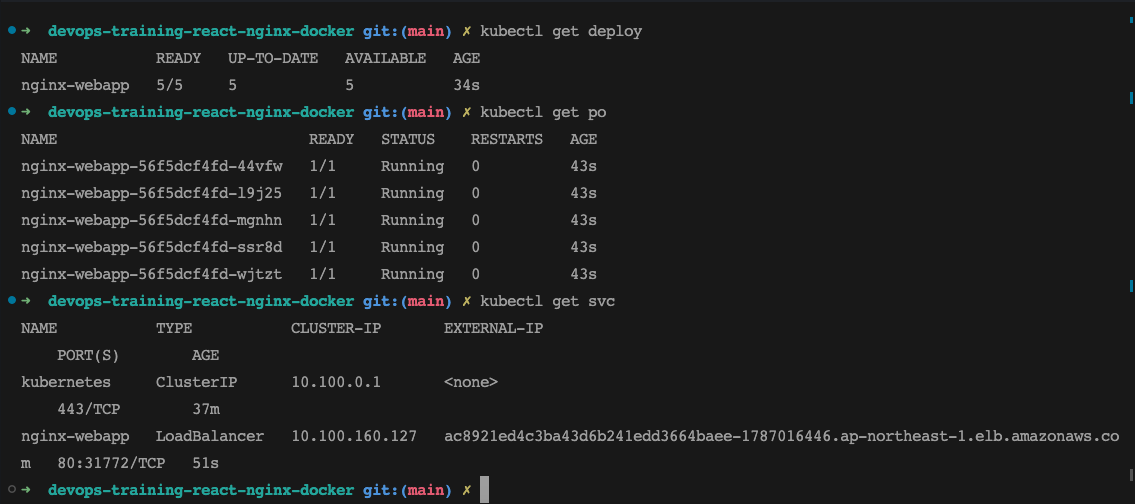

You can use the following commands to verify all the objects are in the desired state.

# list the deployment
kubectl get deploy

# list the pods
kubectl get po

# list the service
kubectl get svc

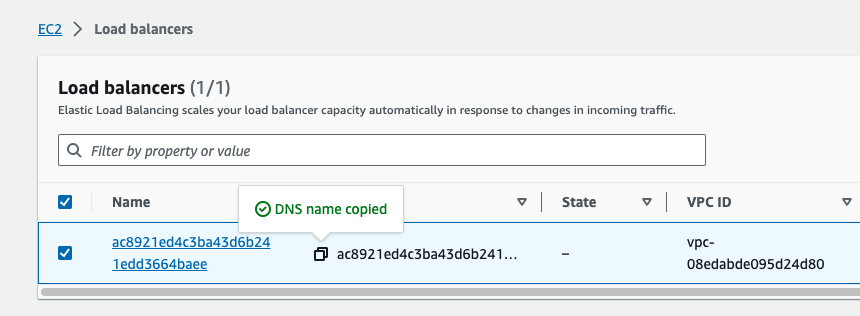

You can check the load balancer that was created automatically for the service by going to Amazon EC2 > Load Balancers in the AWS Management Console and copying its DNS name. Paste that DNS name into your browser to open the app.
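The same DNS name can also be read with kubectl instead of the console. The sketch below assumes a Service of type LoadBalancer; the name web-app and both function names are hypothetical placeholders.

```shell
SERVICE_NAME="web-app"   # hypothetical Service name; use the one from your manifest

# Print the DNS name that AWS assigned to the Service's load balancer.
lb_hostname() {
  kubectl get svc "$1" -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Turn that DNS name into a URL you can paste into the browser.
app_url() { printf 'http://%s\n' "$(lb_hostname "$1")"; }
```

Running app_url "$SERVICE_NAME" prints the URL once the load balancer has been provisioned. Until then the hostname field is empty.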

To summarize:

- Amazon Elastic Kubernetes Service (Amazon EKS) is a managed service that makes it easy for you to run Kubernetes on AWS without needing to stand up or maintain your own Kubernetes control plane.

- You need to create an AWS Account as a prerequisite.

- It’s not a best practice to use your root account for any task. Instead, create an IAM group that has administrator access, add a user to it, and log in with that user.

- Run aws configure with your access key and secret key to set up the AWS CLI.

- Amazon Elastic Container Registry (ECR) is a fully-managed Docker container registry that makes it easy for developers to store, manage, and deploy Docker container images.

- Amazon ECR is integrated with Amazon Elastic Container Service (ECS), simplifying your development to production workflow.

- Amazon ECS works with any Docker registry such as Docker Hub, etc.

- To run apps on the Kubernetes cluster, follow these steps: create an AWS EKS cluster with the AWS console, an SDK, or the AWS CLI; create a worker node group that registers with the EKS cluster; once the cluster is ready, configure kubectl to communicate with it; then deploy and manage your applications on the cluster.