This repository is the official implementation of Magic Clothing.

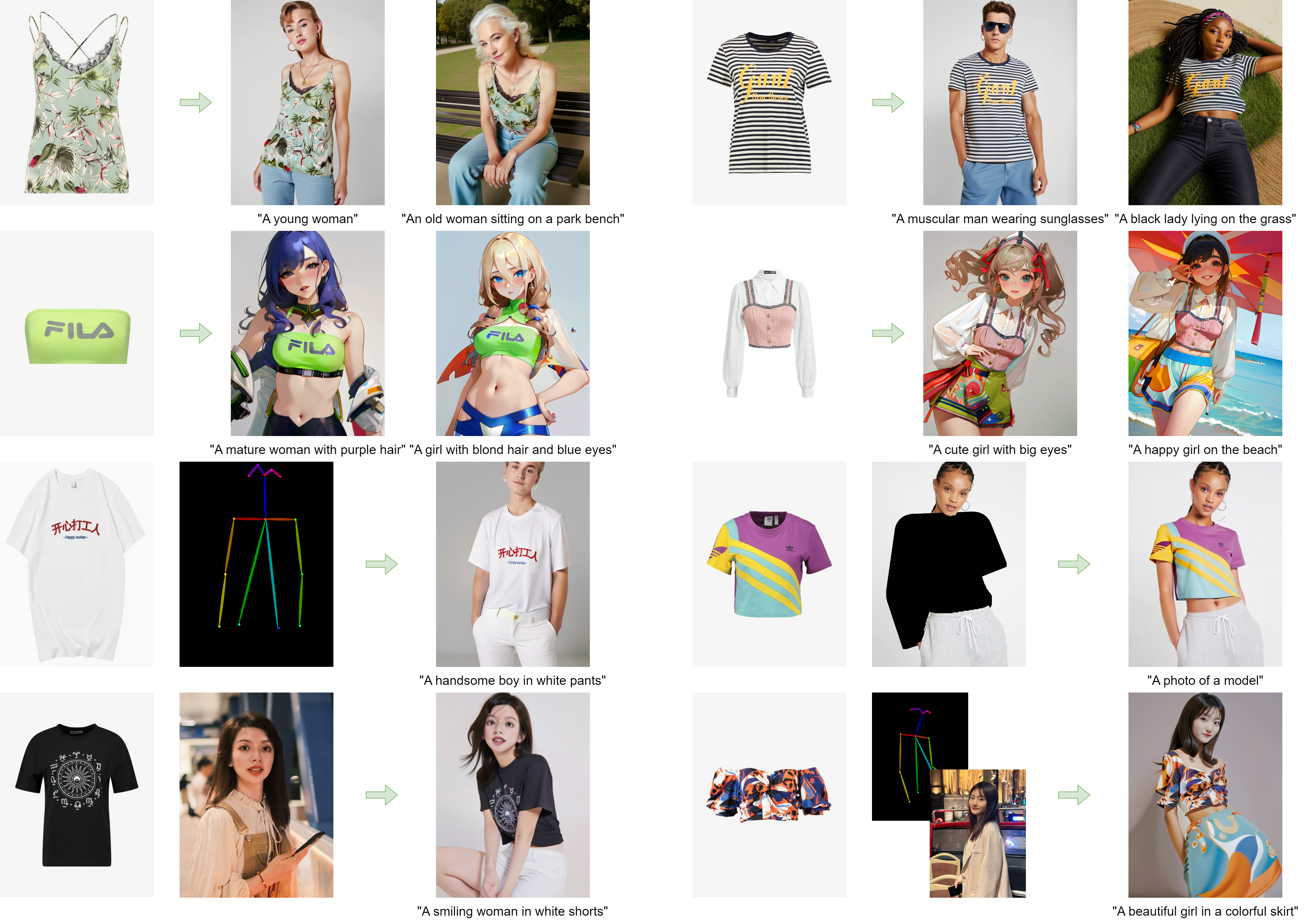

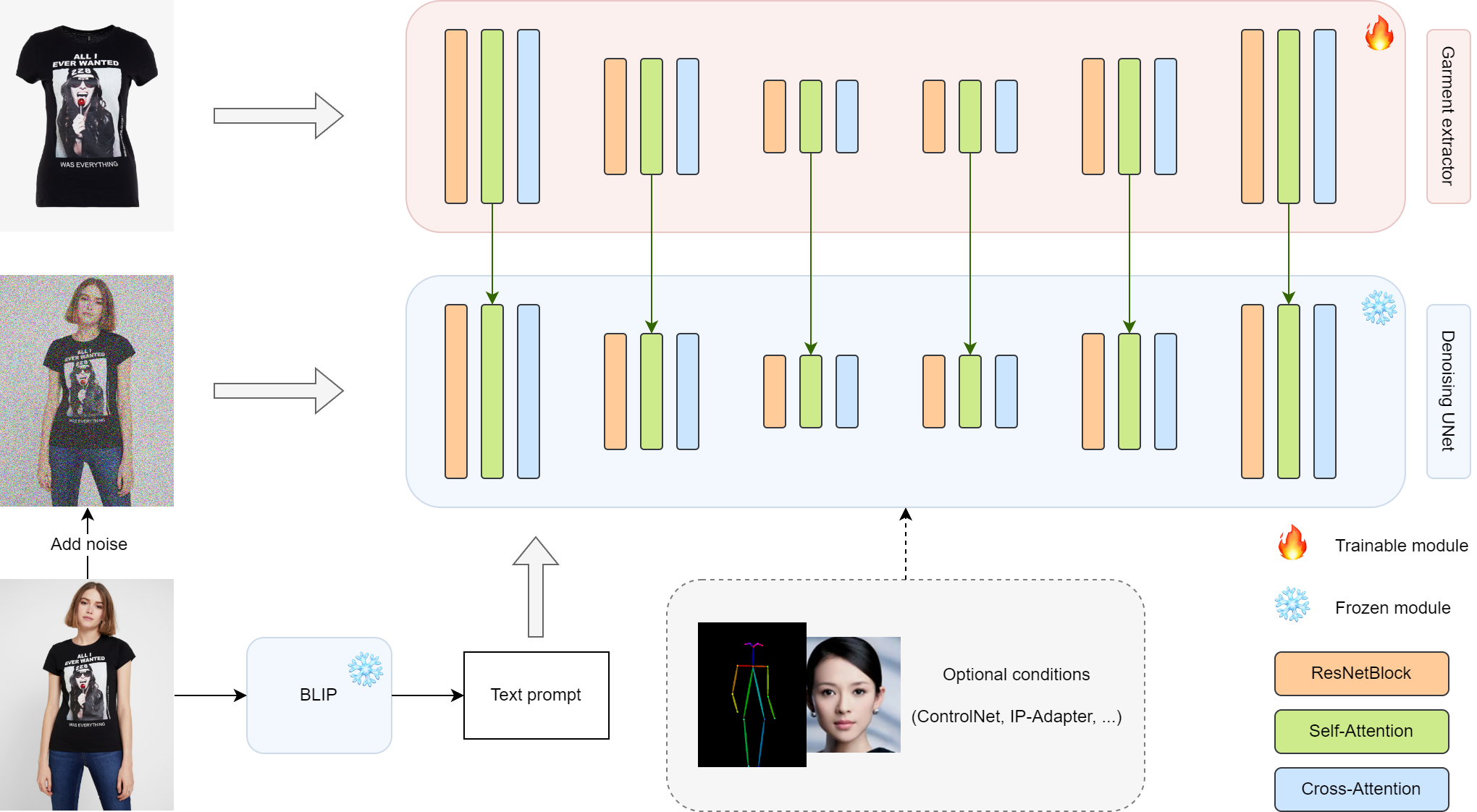

Magic Clothing is a branch of OOTDiffusion that focuses on controllable garment-driven image synthesis.

Magic Clothing: Controllable Garment-Driven Image Synthesis [arXiv paper]

Weifeng Chen*, Tao Gu*, Yuhao Xu+, Chengcai Chen

*Equal contribution, +Corresponding author

Xiao-i Research

📢📢 We are continuing to improve this project. Please check the earlyAccess branch for new features and updates :)

🔥 [2024/4/16] Our paper is available now!

🔥 [2024/3/8] We released model weights trained at 768 resolution. The strengths of the clothing and text prompts can be adjusted independently.

🔥 [2024/2/28] We now support IP-Adapter-FaceID with ControlNet-Openpose! A portrait image and a reference pose image can be used as additional conditions.

Have fun with gradio_ipadapter_openpose.py

🔥 [2024/2/23] We now support IP-Adapter-FaceID! A portrait image can be used as an additional condition.

Have fun with gradio_ipadapter_faceid.py
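The 2024/3/8 update says the clothing and text strengths can be adjusted independently, but does not say how they are combined. One well-known way to get two independent knobs is the two-scale classifier-free guidance introduced for InstructPix2Pix. The sketch below applies that formula with a garment condition in place of the image condition. This is an assumption for illustration, not the Magic Clothing implementation; `dual_guidance`, `cloth_scale`, `text_scale` and the `eps_*` names are hypothetical, and plain float lists stand in for flattened noise tensors.

```python
def dual_guidance(eps_uncond, eps_cloth, eps_cloth_text, cloth_scale, text_scale):
    """Two-scale classifier-free guidance in the style of InstructPix2Pix.

    ASSUMPTION: illustrates one way to expose independent clothing and text
    strengths; it is not taken from the Magic Clothing code.
    eps_uncond:     noise prediction with neither condition
    eps_cloth:      noise prediction with the garment condition only
    eps_cloth_text: noise prediction with both garment and text conditions
    All three are equal-length sequences of floats (flattened tensors).
    """
    if not (len(eps_uncond) == len(eps_cloth) == len(eps_cloth_text)):
        raise ValueError("noise predictions must have the same length")
    return [
        # cloth_scale pushes toward the garment, text_scale pushes toward the prompt
        u + cloth_scale * (c - u) + text_scale * (ct - c)
        for u, c, ct in zip(eps_uncond, eps_cloth, eps_cloth_text)
    ]


if __name__ == "__main__":
    print(dual_guidance([0.0, 1.0], [1.0, 1.0], [3.0, 2.0], cloth_scale=2.0, text_scale=0.5))
    # prints [3.0, 1.5]
```

Setting both scales to 1 returns `eps_cloth_text` unchanged, and setting `text_scale` to 0 leaves only the garment term, which is what makes the two strengths separately tunable under this formulation.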

- Clone the repository

git clone https://github.com/ShineChen1024/MagicClothing.git

- Create a conda environment and install the required packages

conda create -n magicloth python==3.10

conda activate magicloth

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

pip install -r requirements.txt

- Python demo
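Before launching either demo, it can help to confirm that the versions pinned in the installation commands are the ones actually installed in the active environment. The following is a minimal sketch using only the Python standard library; `check_environment` and `PINNED` are hypothetical names, and the check covers only Python 3.10 and the three packages pinned explicitly in this README, not the contents of requirements.txt.

```python
import sys
from importlib import metadata

# Versions pinned by the installation commands in this README (hypothetical helper data).
PINNED = {
    "torch": "2.0.1",
    "torchvision": "0.15.2",
    "torchaudio": "2.0.2",
}


def check_environment(pinned=PINNED):
    """Return a list of problems; an empty list means everything matches."""
    problems = []
    if sys.version_info[:2] != (3, 10):
        problems.append(
            f"python {sys.version_info.major}.{sys.version_info.minor} (expected 3.10)"
        )
    for name, wanted in pinned.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name} not installed (expected {wanted})")
            continue
        # PyTorch wheels may carry a local suffix such as "+cu118"; compare the public part.
        if found.split("+")[0] != wanted:
            problems.append(f"{name} {found} (expected {wanted})")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("environment OK" if not issues else "\n".join(issues))
```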

512 weights

python inference.py --cloth_path [your cloth path] --model_path [your model checkpoints path]

768 weights

python inference.py --cloth_path [your cloth path] --model_path [your model checkpoints path] --enable_cloth_guidance

- Gradio demo
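For both inference.py and gradio_generate.py, the 768 weights are run with the extra --enable_cloth_guidance flag, and inference.py additionally takes --cloth_path. The sketch below is a hypothetical helper, `build_demo_command`, that assembles either command from Python using only the flags shown in this README; the paths in the usage lines are placeholders.

```python
import shlex


def build_demo_command(script, model_path, cloth_path=None, resolution=512):
    """Hypothetical helper: build the argv for inference.py or gradio_generate.py.

    Uses only the flags shown in the README: --cloth_path (inference.py),
    --model_path, and --enable_cloth_guidance (768 weights only).
    """
    if resolution not in (512, 768):
        raise ValueError("resolution must be 512 or 768")
    cmd = ["python", script]
    if cloth_path is not None:
        cmd += ["--cloth_path", cloth_path]
    cmd += ["--model_path", model_path]
    if resolution == 768:
        # The 768 weights are run with cloth guidance enabled.
        cmd.append("--enable_cloth_guidance")
    return cmd


if __name__ == "__main__":
    cmd = build_demo_command(
        "inference.py", "path/to/checkpoint", "path/to/cloth.jpg", resolution=768
    )
    print(shlex.join(cmd))
    # To launch it from the repository root instead of printing:
    # import subprocess; subprocess.run(cmd, check=True)
```

Returning an argument list rather than a shell string avoids quoting problems when paths contain spaces; `shlex.join` is only used to display it.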

512 weights

python gradio_generate.py --model_path [your model checkpoints path]

768 weights

python gradio_generate.py --model_path [your model checkpoints path] --enable_cloth_guidance

Citation:

    @article{chen2024magic,
      title={Magic Clothing: Controllable Garment-Driven Image Synthesis},
      author={Chen, Weifeng and Gu, Tao and Xu, Yuhao and Chen, Chengcai},
      journal={arXiv preprint arXiv:2404.09512},
      year={2024}
    }

- Paper

- Gradio demo

- Inference code

- Model weights

- Training code