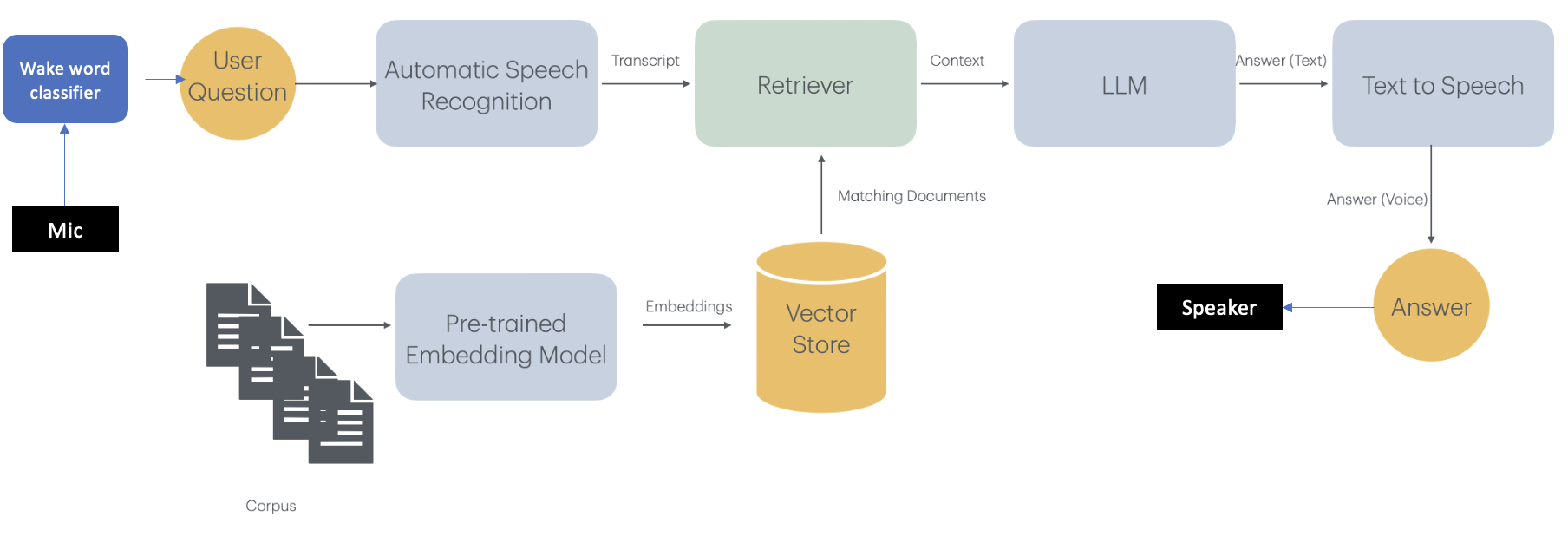

This Python web app uses the Retrieval-Augmented Generation (RAG) pattern to let users query their documents through a voice interface. Users can also select the LLM's internal knowledge mode to ask questions without providing additional documentation or context. Follow the steps below to run the app.

- Install Python version 3.11.9 or later

- Create a virtual environment using the following command

  `python -m venv vrag`

- Run

  `source vrag/bin/activate`

  in a macOS/Linux terminal, or run `vrag\Scripts\activate` from cmd on Windows, to activate the virtual environment

- Clone this repo using

  `git clone https://github.com/umairacheema/vrag.git`

  `cd vrag`

- Install required libraries using

  `pip install -r requirements.txt`

- Install ffmpeg for your operating system

- Download the required models, config files, etc. from Hugging Face into their corresponding subfolders under the models folder (read the README file in each model subfolder for more information)

- Edit the vrag.yaml file to confirm the paths and make any other configuration changes (for example, device='mps' if your Mac has a GPU; replace it with 'cpu', 'cuda', etc. as needed for your platform).

- Copy the PDF documents that you would like to ask questions about into the src/documents folder, then change to the source directory

  `cd vrag/src`

- Run

  `python vectorstore.py`

  to create the vector database. After this module exits, check that files have been created in src/vectorstore/database

- Run the web user interface through

  `streamlit run vrag.py`

- Now ask a question by saying the word 'SEVEN' followed by your question.

- For example, if your PDF documents are about financial metrics and include some information on IRR, you could ask the question by speaking into the mic: 'SEVEN what is meant by internal rate of return'.
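If the app does not start or returns no answers, a quick check of the setup steps can help narrow down the cause. The following is a minimal sketch, not part of the repo: the `preflight` function and its messages are hypothetical, and it assumes it is run from the src directory so that the `documents` and `vectorstore/database` folders named above are found relative to it.

```python
import shutil
import sys
from pathlib import Path

MIN_PYTHON = (3, 11, 9)  # minimum version named in the setup steps


def preflight(src_dir="."):
    """Return a list of setup problems; an empty list means all checks passed.

    Hypothetical helper: src_dir is assumed to be the repo's src folder.
    """
    src = Path(src_dir)
    problems = []

    # Python interpreter version
    if sys.version_info[:3] < MIN_PYTHON:
        problems.append("Python 3.11.9 or later is required")

    # ffmpeg must be discoverable on PATH
    if shutil.which("ffmpeg") is None:
        problems.append("ffmpeg was not found on PATH")

    # At least one PDF in the documents folder
    docs = src / "documents"
    has_pdf = docs.is_dir() and any(
        p.suffix.lower() == ".pdf" for p in docs.iterdir()
    )
    if not has_pdf:
        problems.append(f"No PDF files found in {docs}")

    # vectorstore.py should have written files to the database folder
    db = src / "vectorstore" / "database"
    if not (db.is_dir() and any(db.iterdir())):
        problems.append(f"Vector store database is empty or missing: {db}")

    return problems


if __name__ == "__main__":
    for problem in preflight():
        print("WARNING:", problem)
```

Running this before `streamlit run vrag.py` prints one warning per failed check and nothing if everything looks in place. It does not verify the downloaded models or the contents of vrag.yaml.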

The following is the architecture of the pipeline.

- Can be set up in less than 15 minutes

- Completely Offline

- Can be used with different LLMs

- Allows users to experiment with the quality of responses by changing the parameters in a single configuration file
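One parameter worth getting right in the configuration file is the device setting ('mps', 'cuda', or 'cpu'). The sketch below shows one way to find out which value suits your machine. It assumes the models run on PyTorch, which this README does not state explicitly, and the `pick_device` helper is hypothetical rather than part of the repo.

```python
def pick_device():
    """Return 'cuda', 'mps', or 'cpu' based on what PyTorch reports.

    Assumption: the app's models run on PyTorch. Falls back to 'cpu'
    when PyTorch is not installed.
    """
    try:
        import torch
    except ImportError:
        return "cpu"

    if torch.cuda.is_available():
        return "cuda"

    # The MPS backend (Apple GPUs) only exists in newer PyTorch builds
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"

    return "cpu"


if __name__ == "__main__":
    print(pick_device())
```

The printed value can then be entered as the device in vrag.yaml.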