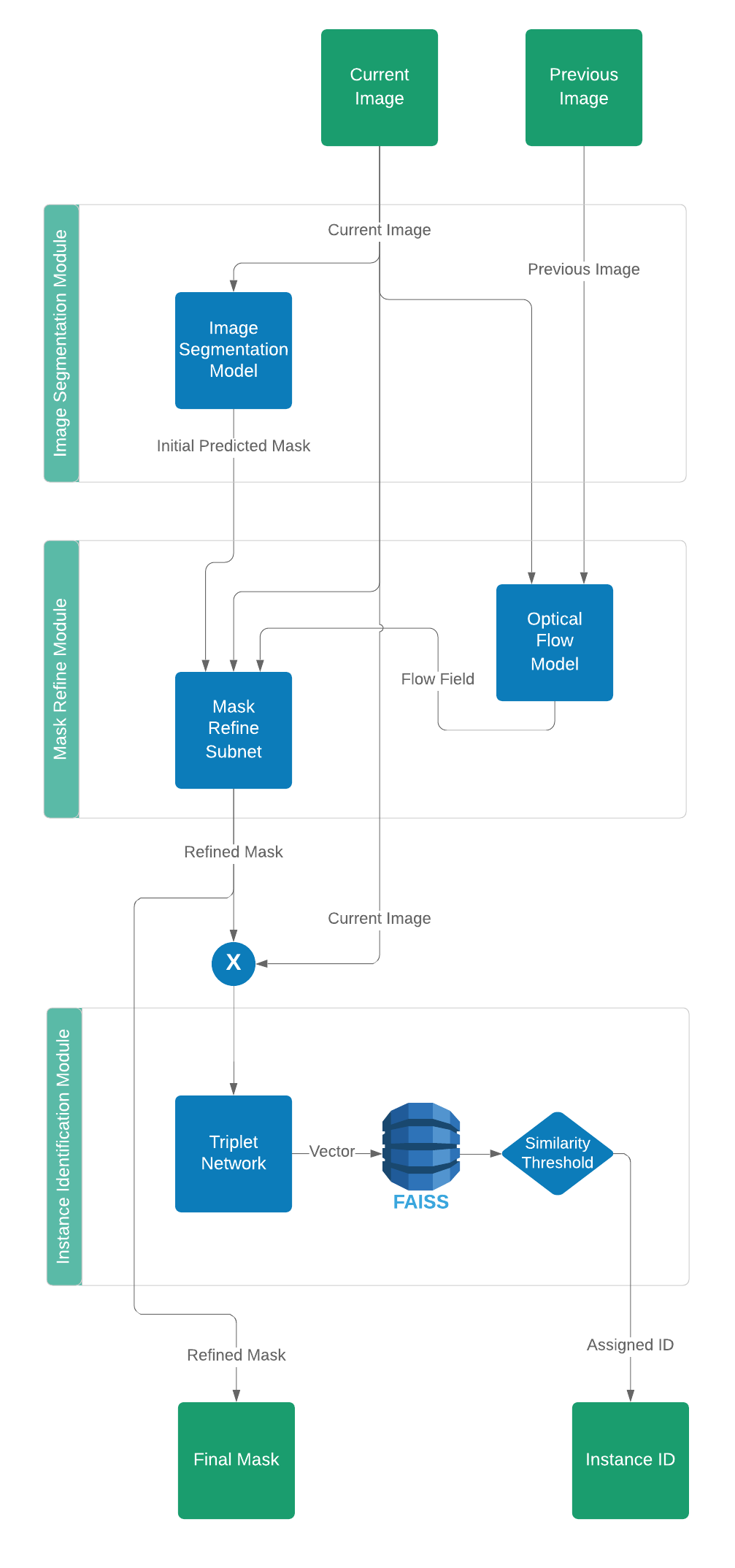

The goal of this model is to segment multiple object instances from both video and still images and track (identify) objects over consecutive frames. There are 3 major modules:

- Image Segmentation Module
  - Mask R-CNN [image_seg]
- Mask Refine Module
  - PWC-Net [opt_flow]
  - U-Net [mask_refine]
- Identification Module
  - Triplet Network [instance_id]
  - FAISS Database [instance_id]

This project was developed with Python 3.6, TensorFlow 1.11, Keras 2.1, and NumPy 1.13. Use these versions to ensure full compatibility; other versions may also work.
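As a rough way to confirm that an environment matches the versions listed above, the following sketch compares the installed packages against them. The helper names (`matches`, `check_versions`) are hypothetical and not part of this repository, and treating "same major.minor" as compatible is an assumption.

```python
import importlib

# Versions named in this README. Comparing only major.minor is an assumption
# about what counts as "compatible".
EXPECTED = {"tensorflow": "1.11", "keras": "2.1", "numpy": "1.13"}


def matches(installed, expected):
    """Return True if `installed` has the same major.minor as `expected`."""
    return installed.split(".")[:2] == expected.split(".")[:2]


def check_versions(expected=EXPECTED):
    """Return {package: (installed_version_or_None, ok)} for each package."""
    report = {}
    for name, wanted in expected.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            # Package is not installed in the current environment.
            report[name] = (None, False)
            continue
        installed = getattr(module, "__version__", "")
        report[name] = (installed, matches(installed, wanted))
    return report
```

Calling `check_versions()` in the target environment returns one entry per package; any entry whose second element is `False` is either missing or at a different major.minor version.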

If you are on the shared Google instance (for UMD FIRE COML), there is already a conda virtual env with the correct dependencies. To start it, run:

source activate multiseg

Otherwise, run the setup.py script, which will also install the dependencies for this project (using pip):

python setup.py install

If the script fails, you may have to manually install each dependency using pip (pip is required for some dependencies; conda does not work).

You can download the full DAVIS 2017 and WAD CVPR 2018 datasets from their respective sources. Because the full WAD dataset is extremely large, we also provide "mini-DAVIS" and "mini-WAD" datasets, which keep the same folder structure but contain only a very small subset of the images, making testing and evaluation easier.

- For CVPR WAD 2018, the mini-dataset contains only some images from the 'train' subset, with no annotations.

- For DAVIS 2017, the mini-dataset contains only some of the images/videos from the 'trainval' subset at 480p resolution.

The mini-datasets can be found on our GitHub repository's releases page. The current release is version 0.1.

As a quick check, make sure the following paths exist (starting from the root data directory):

CVPR WAD 2018: .\train_color\

DAVIS 2017: .\DAVIS-2017-trainval-480p\DAVIS\JPEGImages\480p\blackswan\
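The quick check above can also be scripted. This is a minimal sketch, not code from this repository: `missing_dataset_dirs` is a hypothetical helper, and the directory names are copied from the two paths listed above.

```python
from pathlib import Path

# Relative paths from the quick check above, split into parts so the same
# code works with Windows and POSIX path separators.
EXPECTED_DIRS = {
    "CVPR WAD 2018": ("train_color",),
    "DAVIS 2017": ("DAVIS-2017-trainval-480p", "DAVIS", "JPEGImages",
                   "480p", "blackswan"),
}


def missing_dataset_dirs(data_root):
    """Return the names of datasets whose expected directory is absent."""
    root = Path(data_root)
    return [name for name, parts in EXPECTED_DIRS.items()
            if not root.joinpath(*parts).is_dir()]
```

For example, `missing_dataset_dirs(".")` run from the root data directory returns an empty list when both datasets are in place.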

Currently, we have released pre-trained weights for the following modules only:

- Image Segmentation
  - Default Directory: .\image_seg
- Optical Flow
  - Default Directory: .\opt_flow\models\pwcnet-lg-6-2-multisteps-chairsthingsmix
  - Note: these weights are saved as a TensorFlow checkpoint, so you will need to place all 3 files in this directory.
- Mask Refine (coarse only)
  - Default Directory: .\mask_refine

As with our mini-datasets, the weight binaries for the image segmentation and mask refine modules can be found on our GitHub repository's releases page.

For the optical flow model weights, you can find the latest versions here (make sure to download the one that matches the directory name specified above).
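Since the optical flow weights are a TensorFlow checkpoint made of 3 files, it can help to confirm that all of them landed in the directory. The sketch below assumes the three files are the usual TensorFlow 1.x checkpoint pieces sharing one prefix (`.index`, `.meta`, and a `.data-*` shard); `checkpoint_status` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path


def checkpoint_status(ckpt_dir):
    """Map each checkpoint prefix found in ckpt_dir to its missing pieces.

    An empty dict means no checkpoint was found at all; an empty list for a
    prefix means that checkpoint looks complete.
    """
    directory = Path(ckpt_dir)
    # Discover prefixes from whichever of the small files are present.
    prefixes = {p.name[:-len(".index")] for p in directory.glob("*.index")}
    prefixes |= {p.name[:-len(".meta")] for p in directory.glob("*.meta")}

    status = {}
    for prefix in sorted(prefixes):
        missing = []
        if not (directory / (prefix + ".index")).is_file():
            missing.append(".index")
        if not (directory / (prefix + ".meta")).is_file():
            missing.append(".meta")
        if not list(directory.glob(prefix + ".data-*")):
            missing.append(".data-*")
        status[prefix] = missing
    return status
```

Pointing this at the optical flow default directory listed above should report one prefix with nothing missing once the download is in place.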

Running these notebooks is straightforward:

- In the first few cells, check that you have downloaded the file dependencies and placed them in the right location (or change the paths in the code).

- Then, execute each cell in order.

- To rerun inference on different images, rerun the cells in the inference section of each notebook; a new random image will be chosen.
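The random image selection mentioned in the last step could look roughly like the sketch below. This is an illustration only: the notebooks' actual selection code is not shown here, and `pick_random_image` and the list of image suffixes are assumptions.

```python
import random
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed image file types


def pick_random_image(image_root, rng=random):
    """Return one image path chosen uniformly from under image_root."""
    # Search recursively so per-video subfolders are covered too.
    candidates = sorted(p for p in Path(image_root).rglob("*")
                        if p.suffix.lower() in IMAGE_SUFFIXES)
    if not candidates:
        raise FileNotFoundError("no images found under {}".format(image_root))
    return rng.choice(candidates)
```

Passing a seeded `random.Random` instance as `rng` makes the choice reproducible, which can be handy when comparing results between runs.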

Notebook: Instance Segmentation Notebook

Dataset: CVPR WAD 2018

Notebook: Mask Refine Demo Notebook

Dataset: DAVIS 2017

See separate repository (linked under the instance_id directory). In the future, when this module is more mature, it will be integrated into the current repository.

- integrate ImageSeg and MaskRefine

- develop triplet network in Keras

- refine MaskRefine using new loss function

- integrate all 3 modules

- evaluation & metrics