# Dependencies

import tweepy

import json

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from datetime import datetime

import random as r

import seaborn as sn

from math import trunc

# Import and Initialize Sentiment Analyzer

from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

analyzer = SentimentIntensityAnalyzer()

# Twitter API Keys (placeholders: never commit real credentials)

consumer_key = "YOUR_CONSUMER_KEY"

consumer_secret = "YOUR_CONSUMER_SECRET"

access_token = "YOUR_ACCESS_TOKEN"

access_token_secret = "YOUR_ACCESS_TOKEN_SECRET"

# Setup Tweepy API Authentication

auth = tweepy.OAuthHandler(consumer_key, consumer_secret)

auth.set_access_token(access_token, access_token_secret)

api = tweepy.API(auth, parser=tweepy.parsers.JSONParser())

# Target User Accounts

target_user = ('@BBC', '@CBS', '@CNN', '@Fox', '@New York Times')

#target_user = ('@CNN')

# Lists to hold tweet timestamps and sentiment scores

tweet_times = []

user_list = []

compound_list = []

positive_list = []

negative_list = []

neutral_list = []

time_list = []

my_index = []

# Loop through each user
for user in target_user:

    # Position of each tweet within this user's timeline
    idx = 0

    # Loop through 5 pages of tweets (20 per page, 100 tweets per user)
    for x in range(5):

        # Pass the page number so each iteration fetches a new batch
        # instead of repeating the most recent tweets (pages are 1-based)
        public_tweets = api.user_timeline(user, page=x + 1)

        # Loop through all tweets
        for tweet in public_tweets:

            # Run Vader Analysis once per tweet and reuse the scores
            scores = analyzer.polarity_scores(tweet["text"])

            # Add each value to the appropriate list
            user_list.append(user)
            compound_list.append(scores["compound"])
            positive_list.append(scores["pos"])
            negative_list.append(scores["neg"])
            neutral_list.append(scores["neu"])
            my_index.append(idx)

            idx += 1
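Paging matters in a loop like this one. A timeline request made without a page number returns the newest tweets every time, so repeating it only produces duplicates. That is consistent with the output table, where rows 20-29 repeat rows 0-9. The sketch below illustrates the effect with a hypothetical stand-in for a paged endpoint; `fetch_page`, `TWEETS_PER_PAGE`, and the tweet strings are assumptions for illustration and are not part of tweepy.

```python
# Hypothetical stand-in for a paged timeline endpoint: 20 tweets per page,
# newest first. Names and data here are illustrative only.
TWEETS_PER_PAGE = 20


def fetch_page(all_tweets, page=1):
    """Return the requested 1-based page of tweets."""
    start = (page - 1) * TWEETS_PER_PAGE
    return all_tweets[start:start + TWEETS_PER_PAGE]


timeline = [f"tweet-{i}" for i in range(200)]

# Without a page argument, every call returns the same newest 20 tweets.
repeated = [t for _ in range(5) for t in fetch_page(timeline)]

# Passing the page number walks through the timeline instead.
paged = [t for page in range(1, 6) for t in fetch_page(timeline, page=page)]

print(len(repeated), len(set(repeated)))  # 100 20
print(len(paged), len(set(paged)))        # 100 100
```

Both runs collect 100 items, but only the paged run collects 100 distinct tweets.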

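# Collect all the tweet scores into a DataFrame
news_data = pd.DataFrame({
    'Agency': user_list,
    'Compound': compound_list,
    'Positive': positive_list,
    'Neutral': neutral_list,
    'Negative': negative_list,
    'My_index': my_index,
})

# Display the data indexed by each tweet's position within its agency
# (set_index returns a copy, so news_data keeps its My_index column)
news_data.set_index('My_index')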


| My_index | Agency | Compound | Negative | Neutral | Positive |
|---|---|---|---|---|---|
| 0 | @BBC | -0.4007 | 0.114 | 0.886 | 0.000 |
| 1 | @BBC | -0.5106 | 0.148 | 0.852 | 0.000 |
| 2 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 3 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 4 | @BBC | 0.3327 | 0.000 | 0.894 | 0.106 |
| 5 | @BBC | 0.5562 | 0.000 | 0.805 | 0.195 |
| 6 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 7 | @BBC | 0.5399 | 0.000 | 0.812 | 0.188 |
| 8 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 9 | @BBC | 0.4939 | 0.000 | 0.849 | 0.151 |
| 10 | @BBC | -0.3182 | 0.141 | 0.859 | 0.000 |
| 11 | @BBC | 0.3612 | 0.000 | 0.872 | 0.128 |
| 12 | @BBC | 0.4939 | 0.000 | 0.814 | 0.186 |
| 13 | @BBC | 0.4215 | 0.000 | 0.865 | 0.135 |
| 14 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 15 | @BBC | 0.4574 | 0.000 | 0.857 | 0.143 |
| 16 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 17 | @BBC | 0.7906 | 0.000 | 0.696 | 0.304 |
| 18 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 19 | @BBC | 0.6361 | 0.000 | 0.792 | 0.208 |
| 20 | @BBC | -0.4007 | 0.114 | 0.886 | 0.000 |
| 21 | @BBC | -0.5106 | 0.148 | 0.852 | 0.000 |
| 22 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 23 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 24 | @BBC | 0.3327 | 0.000 | 0.894 | 0.106 |
| 25 | @BBC | 0.5562 | 0.000 | 0.805 | 0.195 |
| 26 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 27 | @BBC | 0.5399 | 0.000 | 0.812 | 0.188 |
| 28 | @BBC | 0.0000 | 0.000 | 1.000 | 0.000 |
| 29 | @BBC | 0.4939 | 0.000 | 0.849 | 0.151 |
| ... | ... | ... | ... | ... | ... |
| 70 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 71 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 72 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 73 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 74 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 75 | @New York Times | 0.4588 | 0.000 | 0.250 | 0.750 |
| 76 | @New York Times | 0.8807 | 0.000 | 0.595 | 0.405 |
| 77 | @New York Times | 0.5574 | 0.000 | 0.783 | 0.217 |
| 78 | @New York Times | 0.6705 | 0.000 | 0.476 | 0.524 |
| 79 | @New York Times | 0.7824 | 0.000 | 0.623 | 0.377 |
| 80 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 81 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 82 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 83 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 84 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 85 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 86 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 87 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 88 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 89 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 90 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 91 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 92 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 93 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 94 | @New York Times | 0.0000 | 0.000 | 1.000 | 0.000 |
| 95 | @New York Times | 0.4588 | 0.000 | 0.250 | 0.750 |
| 96 | @New York Times | 0.8807 | 0.000 | 0.595 | 0.405 |
| 97 | @New York Times | 0.5574 | 0.000 | 0.783 | 0.217 |
| 98 | @New York Times | 0.6705 | 0.000 | 0.476 | 0.524 |
| 99 | @New York Times | 0.7824 | 0.000 | 0.623 | 0.377 |

500 rows × 5 columns
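The Compound column is easier to read once it is bucketed into labels. A minimal sketch, assuming the thresholds conventionally suggested for VADER compound scores (at or above 0.05 is positive, at or below -0.05 is negative, anything in between is neutral); `classify_compound` is a hypothetical helper, not part of the vaderSentiment package, and the sample scores are taken from the table above.

```python
def classify_compound(score, threshold=0.05):
    """Map a compound score in [-1, 1] to a coarse sentiment label."""
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


# Compound scores of rows 0, 2 and 4 in the table above
sample_scores = [-0.4007, 0.0000, 0.3327]
labels = [classify_compound(s) for s in sample_scores]

print(labels)  # ['negative', 'neutral', 'positive']
```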

news_data['Agency'].unique()

array(['@BBC', '@CBS', '@CNN', '@Fox', '@New York Times'], dtype=object)

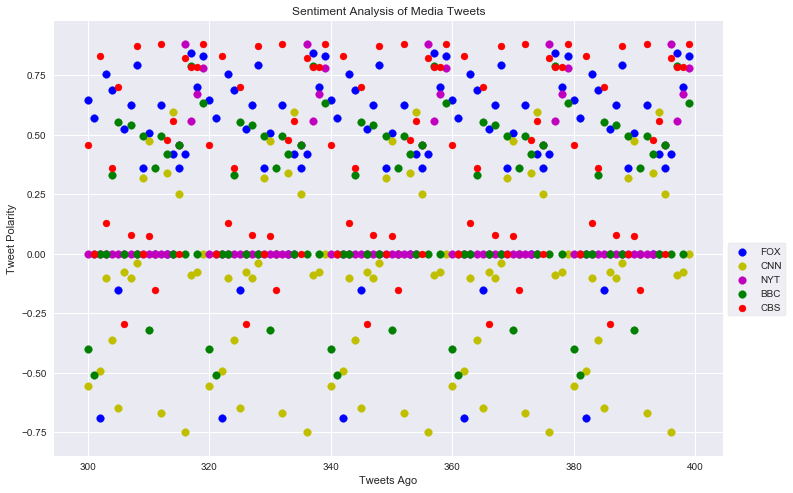

plt.figure(figsize=(12, 8))

# (agency, marker color, legend label) for each account
agencies = [('@Fox', 'b', 'FOX'), ('@CNN', 'y', 'CNN'), ('@New York Times', 'm', 'NYT'),
            ('@BBC', 'g', 'BBC'), ('@CBS', 'r', 'CBS')]

# Plot each agency's compound scores against its own per-agency tweet index
for agency, color, label in agencies:
    subset = news_data[news_data['Agency'] == agency]
    plt.scatter(subset['My_index'], subset['Compound'], marker='o', color=color, s=60, label=label)

plt.gca().set(xlabel='Tweets Ago', ylabel='Tweet Polarity', title='Sentiment Analysis of Media Tweets')

# Anchor the legend just outside the right edge of the axes
plt.legend(loc='center left', bbox_to_anchor=(1, 0.5), frameon=True)

plt.show()

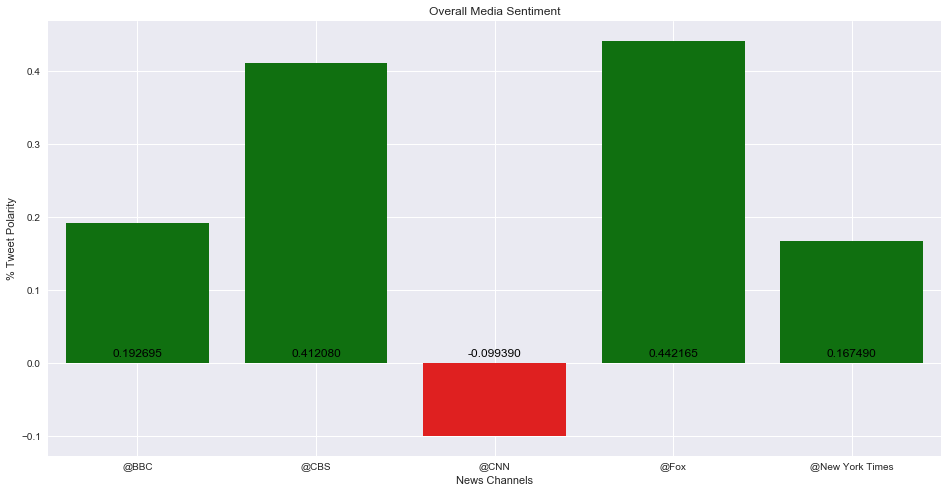

# Mean compound score per agency (a value in [-1, 1], not a percentage, despite the column name)
Compound_percentage = news_data.groupby('Agency')['Compound'].mean().to_frame("% Compound")

Compound_percentage


| Agency | % Compound |
|---|---|
| @BBC | 0.192695 |
| @CBS | 0.412080 |
| @CNN | -0.099390 |
| @Fox | 0.442165 |
| @New York Times | 0.167490 |
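The per-agency figures are plain arithmetic means of the Compound column within each group. The sketch below reproduces that grouping step in pure Python on a small sample; `mean_by_group` is a hypothetical helper, and the ten sample rows (BBC rows 0-4 and New York Times rows 75-79 from the first table) are only a subset, so the results differ from the full-data means shown above.

```python
from collections import defaultdict
from statistics import mean

# (agency, compound score) pairs taken from the first table
rows = [
    ("@BBC", -0.4007), ("@BBC", -0.5106), ("@BBC", 0.0000),
    ("@BBC", 0.0000), ("@BBC", 0.3327),
    ("@New York Times", 0.4588), ("@New York Times", 0.8807),
    ("@New York Times", 0.5574), ("@New York Times", 0.6705),
    ("@New York Times", 0.7824),
]


def mean_by_group(pairs):
    """Group scores by key and return the arithmetic mean of each group."""
    groups = defaultdict(list)
    for key, score in pairs:
        groups[key].append(score)
    return {key: mean(scores) for key, scores in groups.items()}


sample_means = mean_by_group(rows)
for agency, value in sample_means.items():
    print(f"{agency}: {value:.4f}")
```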

x = Compound_percentage.index
y = Compound_percentage['% Compound']

plt.figure(figsize=(16, 8))

# Green bars for non-negative mean scores, red bars for negative ones
colors = ['green' if _y >= 0.0 else 'red' for _y in y]

ax = sn.barplot(x=x, y=y, palette=colors)

# Label each bar with its mean score, placed just above the zero line
for n, _y in enumerate(y):
    ax.annotate('{:f}'.format(_y), xy=(n, 0), ha='center', va='center',
                xytext=(0, 10), color='k', textcoords='offset points')

plt.gca().set(xlabel='News Channels', ylabel='% Tweet Polarity', title='Overall Media Sentiment')

# Dashed black grid lines
plt.grid(True, linestyle='--', color='black', linewidth=0.5)

plt.show()