| Name | Email |
|---|---|
| Waleed Mansoor | [email protected] |
| Jaewoo Park | [email protected] |
| Sasha Jaksic | [email protected] |
| Dominik Marquardt | [email protected] |
| Juil O | [email protected] |

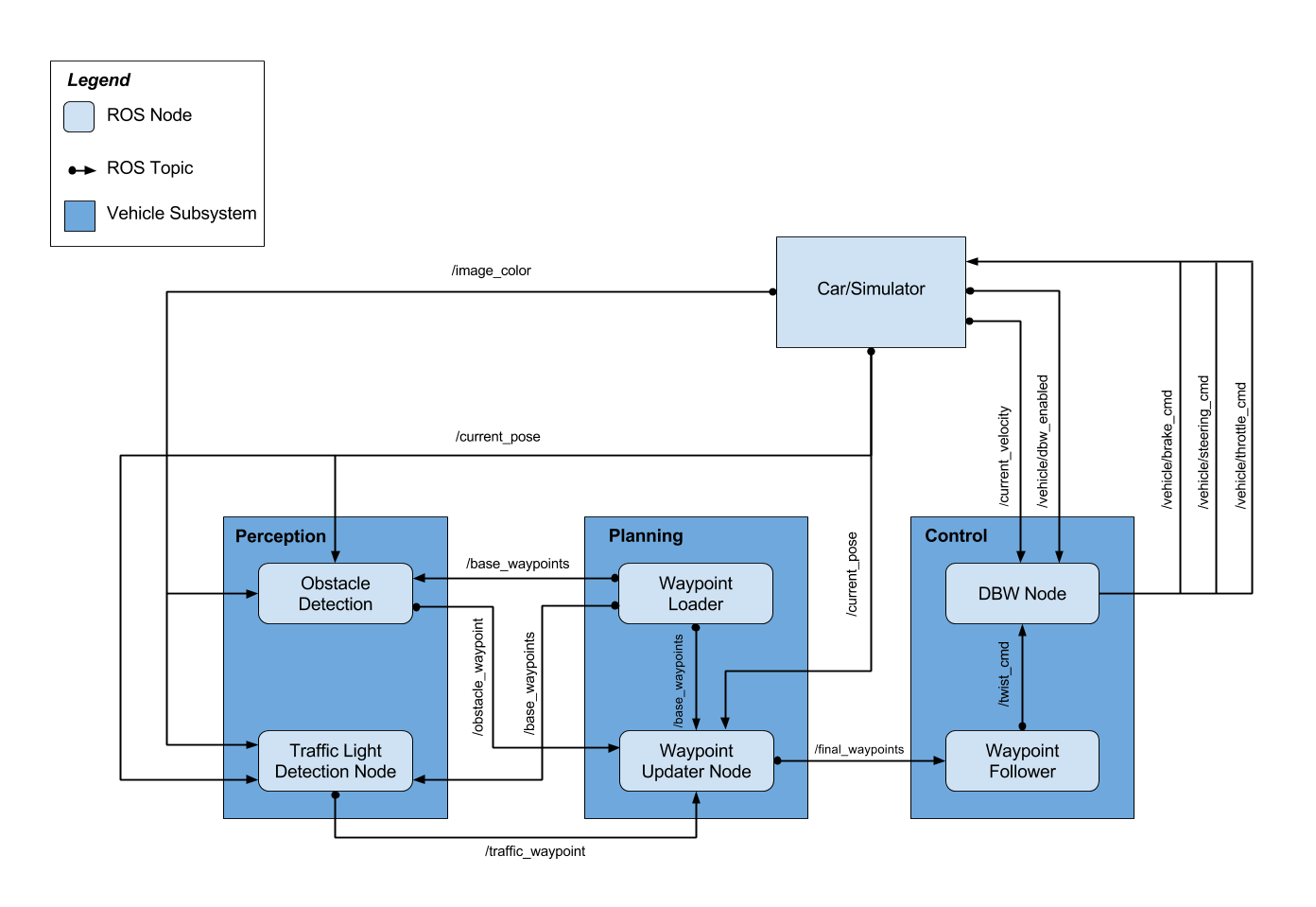

This system has three main parts.

The main role of the traffic light detection part is to detect traffic lights and publish the `/traffic_waypoint` topic so that the planning part can generate an appropriate trajectory.

For this, we fine-tuned a pre-trained object detection model on our own traffic light images. The pre-trained graph is `ssd_mobilenet_v1_coco`.
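After inference, the detector's raw outputs still have to be reduced to a single light state. A minimal sketch, assuming SSD-style parallel lists of scores and class ids (as in the TensorFlow Object Detection API's `detection_scores` and `detection_classes` outputs); the label map, the 0.5 threshold and the name `light_state_from_detections` are hypothetical, not the team's actual code.

```python
# Hypothetical label map: the real class ids depend on the label map used
# during training and are assumptions here.
LABEL_TO_STATE = {1: "GREEN", 2: "RED", 3: "YELLOW"}


def light_state_from_detections(scores, classes, min_score=0.5):
    """Return the state of the most confident detection with score >= min_score.

    scores  -- detection confidences, one per box
    classes -- class ids parallel to scores (may be floats, as in TF outputs)
    Returns "UNKNOWN" if no detection passes the threshold.
    """
    best_state, best_score = "UNKNOWN", None
    for score, cls in zip(scores, classes):
        if score < min_score:
            continue  # ignore low-confidence boxes
        if best_score is None or score > best_score:
            best_score = score
            best_state = LABEL_TO_STATE.get(int(cls), "UNKNOWN")
    return best_state


print(light_state_from_detections([0.9, 0.6], [2.0, 1.0]))  # RED
print(light_state_from_detections([0.3], [1.0]))            # UNKNOWN
```

Taking only the single most confident box keeps the logic simple; a majority vote over several boxes is an alternative when multiple lights are in view.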

We used images from the simulator's ROS topics and from bag files as the training dataset. These images can be viewed with the following commands.

Simulator:

```
roslaunch launch/styx.launch
rosrun image_view image_view _sec_per_frame:=0.1 image:=/image_color
```

Bag file:

```
rosbag play just_traffic_light.bag
rosrun image_view image_view image:=/image_raw
```

The frames can then be saved with this command:

```
rosrun image_view image_saver _sec_per_frame:=0.1 image:=/image_raw
```
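As we understand it, `_sec_per_frame:=0.1` limits saving to roughly one frame every 0.1 s, which avoids filling the dataset with near-duplicate images. The pure-Python sketch below only illustrates that sampling idea on integer-millisecond timestamps; `sample_frames` is a hypothetical helper, not `image_saver`'s actual implementation.

```python
def sample_frames(stamps_ms, period_ms=100):
    """Keep at most one frame per period_ms.

    stamps_ms -- increasing frame timestamps in integer milliseconds
    Returns the timestamps of the kept frames.
    """
    kept = []
    for t in stamps_ms:
        # Keep the first frame, then only frames at least period_ms after
        # the previously kept one.
        if not kept or t - kept[-1] >= period_ms:
            kept.append(t)
    return kept


print(sample_frames([0, 40, 80, 120, 160, 200, 240]))  # [0, 120, 240]
```

Integer milliseconds are used on purpose: comparing float differences of seconds against 0.1 can drop frames because of rounding.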

The waypoint at which the car should stop when the light is red is given by the `stop_line_positions` config. The classifier therefore only tries to find a traffic light when the car approaches the closest stop line.
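`stop_line_positions` holds (x, y) map coordinates, while the code excerpt works with waypoint indices (`self.stop_line_wp_idxs`), so each stop line first has to be mapped to its nearest waypoint. A minimal sketch of that mapping with a brute-force Euclidean search; `closest_waypoint_idx` and the sample coordinates are hypothetical, and a KD-tree would scale better on a full track.

```python
import math


def closest_waypoint_idx(waypoints, x, y):
    """Index of the waypoint nearest to (x, y) by Euclidean distance.

    waypoints -- non-empty list of (x, y) tuples along the track
    """
    return min(range(len(waypoints)),
               key=lambda i: math.hypot(waypoints[i][0] - x, waypoints[i][1] - y))


# Hypothetical track waypoints and stop_line_positions config values.
waypoints = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]
stop_line_positions = [[12.0, 1.0], [29.0, -0.5]]

stop_line_wp_idxs = [closest_waypoint_idx(waypoints, x, y)
                     for x, y in stop_line_positions]
print(stop_line_wp_idxs)  # [1, 3]
```

Since the stop lines do not move, this mapping only needs to be computed once, after the base waypoints are received.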

```python
# Find the closest visible traffic light (if one exists)
light_wp = -1
min_dist = 100000
for stop_line_wp_idx in self.stop_line_wp_idxs:
    # Waypoints between the car and this stop line (negative = behind the car)
    dist = stop_line_wp_idx - self.car_wp_idx
    if dist >= 0 and dist < min_dist:
        min_dist = dist
        if min_dist < self.visible_distance_wps:
            # Use the stop line position rather than the traffic light position
            light_wp = stop_line_wp_idx

# If there is a visible traffic light
if light_wp != -1:
    if self.has_image:
        # Camera is on: classify the light state from the image
        state = self.get_light_state(light_wp)
        return light_wp, state
    else:
        # Camera is off: use the ground-truth light state
        ...  # (not shown in this excerpt)
```
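For testing outside ROS, the stop-line search above can be pulled out into a pure function. A minimal sketch: `find_visible_stop_line` and `traffic_waypoint_value` are hypothetical names, the state constants mirror `styx_msgs/TrafficLight` (verify against your copy), and the second function assumes the Udacity starter-code convention of publishing the stop-line waypoint index on `/traffic_waypoint` when the light is red and `-1` otherwise. Like the excerpt, it ignores wrap-around at the end of the waypoint loop.

```python
# Hypothetical stand-ins mirroring the styx_msgs/TrafficLight constants.
RED, YELLOW, GREEN, UNKNOWN = 0, 1, 2, 4


def find_visible_stop_line(stop_line_wp_idxs, car_wp_idx, visible_distance_wps):
    """Return the nearest stop-line waypoint index ahead of the car, or -1.

    Only stop lines at or ahead of the car (dist >= 0) and fewer than
    visible_distance_wps waypoints away count as visible.
    """
    light_wp = -1
    min_dist = float("inf")
    for idx in stop_line_wp_idxs:
        dist = idx - car_wp_idx
        if 0 <= dist < min_dist:
            min_dist = dist
            if dist < visible_distance_wps:
                light_wp = idx
    return light_wp


def traffic_waypoint_value(light_wp, state):
    """Value to publish on /traffic_waypoint (assumed convention)."""
    return light_wp if light_wp != -1 and state == RED else -1


# Made-up waypoint indices for illustration.
wp = find_visible_stop_line([292, 753, 2047], car_wp_idx=700, visible_distance_wps=100)
print(wp)                                 # 753
print(traffic_waypoint_value(wp, RED))    # 753
print(traffic_waypoint_value(wp, GREEN))  # -1
```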

Installing ROS and deploying everything with Docker is probably the easiest option. You could use the VM provided by Udacity instead, but we recommend deploying with Docker.

Clone the repository on your host OS into your current working directory (`$PWD`) and change into it:

```
cd $PWD/Dragon
```

If you do not have Docker, install Docker for your host OS first.

Build the Docker image:

```
docker build . -t capstone
```

Run the Docker container:

```
docker run -p 4567:4567 -v $PWD:/Dragon -v /tmp/log:/root/.ros/ --rm -it capstone
```

Source the ROS environment variables (change `kinetic` to `indigo` if you are using Ubuntu 14.04):

```
echo "source /opt/ros/kinetic/setup.bash" >> ~/.bashrc
echo "source /Dragon/ros/devel/setup.bash" >> ~/.bashrc
```

Clone this project repository.

Install the Python dependencies:

```
cd /Dragon
pip install -r requirements.txt
```

Build the nodes:

```
cd ros
catkin_make
source devel/setup.sh
```

At this point, either exit the Docker bash session or proceed to the Run steps below.

For every additional bash session you need inside the Docker container, open a new terminal on the host and use the `docker exec` command. First, find the running container:

```
docker ps
```

You can grep the container ID or name and pipe it into the `exec` command directly, or just copy and paste it:

```
docker exec -it <container name or id> /bin/bash
cd /Dragon/ros
```

This opens a new bash session in the container where your ROS environment resides.

After installing successfully, run each of the following commands in its own shell:

```
roscore
```

```
roslaunch launch/styx.launch
```

- Open 2 different bash shells.
- In both shells, run the following (skip this step if you installed with the instructions above):

  ```
  cd Dragon/ros
  source devel/setup.bash
  ```

- In window 1:

  ```
  roslaunch launch/styx.launch
  ```

- In window 2:

  ```
  roslaunch tl_detector tl_detector.launch
  ```

To test the DBW node, download the ROS bag file and save it to the `$PWD/Dragon/data` directory:

```
cd /Dragon/data
curl -H "Authorization: Bearer YYYYY" "https://www.googleapis.com/drive/v3/files/0B2_h37bMVw3iT0ZEdlF4N01QbHc?alt=media" -o udacity_succesful_light_detection.bag
mv udacity_succesful_light_detection.bag dbw_test.rosbag.bag
```

Replace `YYYYY` with your own OAuth access token. After downloading and renaming the file, run:

```
cd ../ros
roslaunch twist_controller dbw_test.launch
```

This saves 3 CSV files that you can process to see how your DBW node performs on various commands. `/actual/*` are the commands recorded in the bag, while `/vehicle/*` are the outputs of your node.
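One way to process these files is to compute the root-mean-square error between the recorded command and your node's output. A minimal sketch, assuming each file has `actual` and `proposed` columns and is named like `steers.csv`, `throttles.csv` or `brakes.csv`, as we recall from the Udacity starter `dbw_test.py`; check the headers in your own files before relying on these names. `rmse_from_csv` is a hypothetical helper.

```python
import csv
import io
import math


def rmse_from_csv(f, actual_col="actual", proposed_col="proposed"):
    """Root-mean-square error between two numeric columns of a CSV file.

    f -- an open text file (or file-like object) with a header row
    """
    errors = [float(row[actual_col]) - float(row[proposed_col])
              for row in csv.DictReader(f)]
    if not errors:
        raise ValueError("CSV file contains no data rows")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Made-up sample data standing in for a file such as steers.csv.
sample = io.StringIO("actual,proposed\n1.0,0.5\n2.0,2.0\n0.0,0.5\n")
print(round(rmse_from_csv(sample), 3))  # 0.408
```

With a real file this becomes `with open("steers.csv") as f: print(rmse_from_csv(f))`. RMSE gives one number per command type; plotting both columns over time is more useful for spotting lag or oscillation.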