Amidst the swirling sands of the cosmos, Ix stands as an enigmatic jewel,

where the brilliance of human ingenuity dances on the edge of forbidden

knowledge, casting a shadow of intrigue over the galaxy.

- Atreides Scribe, The Chronicles of Ixian Innovation

The platform supports deployment using Docker containers, ensuring a consistent environment and enabling easy scaling to multiple worker containers.

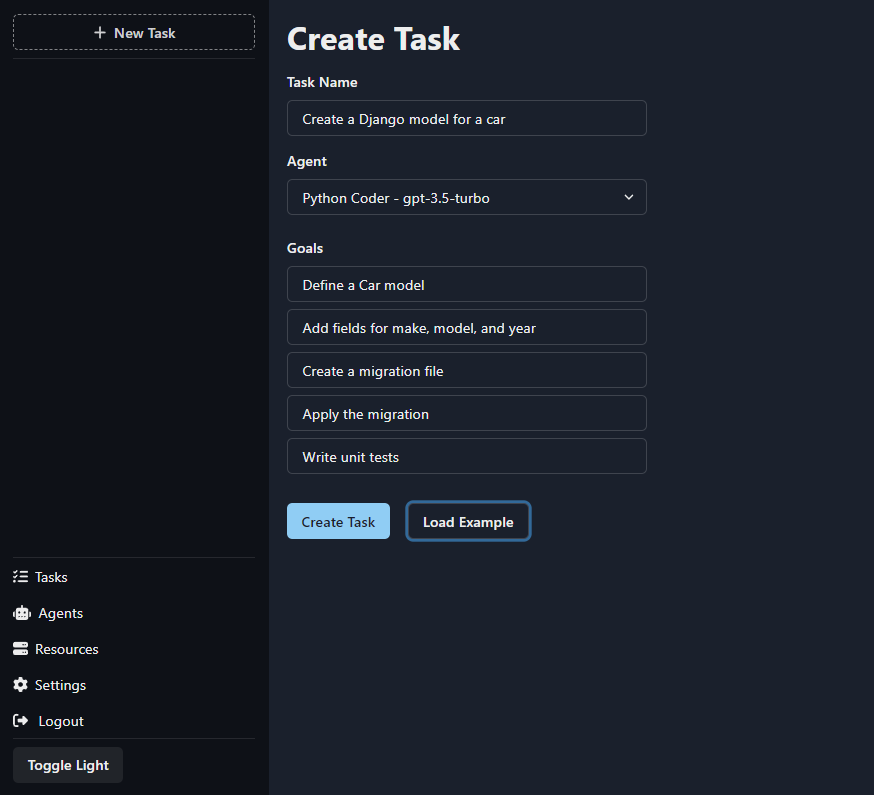

You provide a task and goals and an agent uses that direction to investigate, plan, and complete tasks. The agents are capable of searching the web, writing code, creating images, interacting with other APIs and services. If it can be coded, it's within the realm of possibility for an agent to assist.

-

Start by create a task and goals for the agent to work towards.

-

The agent will begin researching and planning to complete the task.

-

The chat system provides an interaction model for the agent and user to communicate. The agent will respond to user input and provide updates on its progress. The Agent will request additional information and authorization from the user as needed.

-

Agents are customizable and can be extended to support new functionality. The platform provides a plugin architecture for adding new agents and customizing existing agents.

- Scalable model for running a fleet of GPT agents.

- Responsive user interface. for interacting with agents.

- Persistent storage of interactions, processes, and metrics.

- Message queue for agent jobs and inter-agent communication.

- Extensible model for customizing agents.

- Deployment using Docker.

- Python 3.11

- Django 4.2

- PostgreSQL 14.4

- Graphql

- React 18

- Integrated with OpenAI GPT models

- Plugin architecture to support extending agent functionality (e.g. web browsing, writing code, etc)

- Generic framework for vector database based agent memory

- Pinecone

- Redis

- Milvus (soon)

- FAISS (soon)

Before getting started, ensure you have the following software installed on your system:

- Docker

- Docker Compose

Clone the repository:

git clone https://github.com/kreneskyp/ix.git

cd ixSetup config in .env

cp .env.template .envOPENAI_API_KEY=YOUR_KEY_HERE

# Pinecone

PINECONE_API_KEY=

PINECONE_ENV=

# search

GOOGLE_API_KEY=

GOOGLE_CX_ID=

WOLFRAM_APP_ID=

Build and run the dev image.

make dev_setup

Run the dev server

make runserverStart a worker

make workerVisit http://localhost:8000 to access the user interface and start creating tasks for the autonomous GPT-4 agents.

The platform will automatically spawn agent processes to research and complete tasks as needed.

Run as many worker processes as you want with make worker.

Here are some helpful commands for developers to set up and manage the development environment:

make runserver: Start the application in development mode on0.0.0.0:8000.make worker: Start an Agent worker.

make image: Build the Docker image.make frontend: Rebuild the front end (graphql, relay, webpack).make webpack: Rebuild javascript onlymake webpack-watch: Rebuild javascript on file changes

make migrate: Run Django database migrations.make migrations: Generate new Django database migration files.

make bash: Open a bash shell in the docker container.make shell: Open a Django shell_plus session.