Temporal Working Memory: Query-Guided Segment Refinement for Enhanced Multimodal Understanding [NAACL 2025]

Xingjian Diao, Chunhui Zhang, Weiyi Wu, Zhongyu Ouyang, Peijun Qing, Ming Cheng, Soroush Vosoughi, Jiang Gui

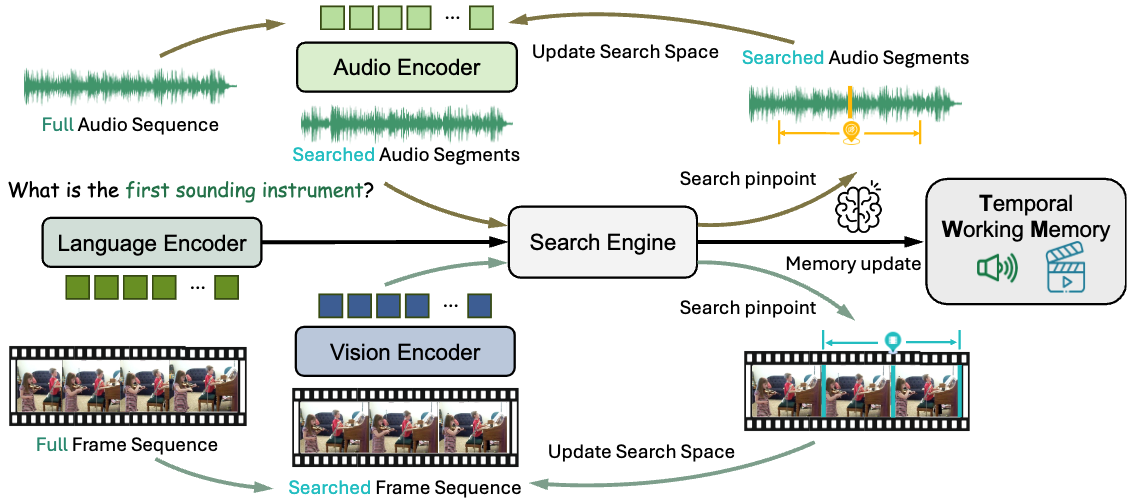

We introduce temporal working memory (TWM), which aims to enhance the temporal modeling capabilities of multimodal foundation models (MFMs). It selectively retains task-relevant information across temporal dimensions, ensuring that critical details are preserved throughout the processing of video and audio content. TWM uses a query-guided attention approach to focus on the most informative multimodal segments within temporal sequences. By retaining only the most relevant content, TWM optimizes the use of the model's limited capacity, enhancing its temporal modeling ability. This plug-and-play module can be easily integrated into existing MFMs. With TWM, nine state-of-the-art models exhibit significant performance improvements across question answering, video captioning, and video-text retrieval tasks.
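To make the idea of query-guided segment selection concrete, here is a minimal sketch. It is not the TWM implementation from this repository: it swaps the paper's query-guided attention for plain cosine similarity followed by top-k selection, and the names `cosine`, `select_segments`, and the toy embeddings are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_segments(query_vec, segment_vecs, k):
    """Return indices of the k segments most similar to the query, in temporal order.

    Assumption: each segment and the query are already embedded as vectors.
    """
    scores = [cosine(query_vec, seg) for seg in segment_vecs]
    # Rank segments by relevance to the query and keep the k best.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Restore temporal order so downstream models see segments in sequence.
    return sorted(top)


# Toy 2-D embeddings (hypothetical values, for illustration only).
query = [1.0, 0.0]
segments = [[0.9, 0.1], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
kept = select_segments(query, segments, k=2)
print(kept)
```

Only the retained segments would then be passed to the downstream model, which is how a selection step of this kind can reduce the amount of temporal content the model has to process.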

This repository contains implementations of the Temporal Working Memory (TWM) mechanism applied to nine state-of-the-art models. The steps to run the code are as follows:

- Download the repository: Clone this repository to your local environment.

- Data Preprocessing: Prepare data following the preprocessing steps in each original model repository.

- Training Temporal Working Memory (TWM): For each model, adjust the number of training epochs and relevant model-specific hyperparameters in the `main_alvs.py` file within each model's directory. Follow the recommendations in each model's original paper for parameter settings, and then train TWM.

- Inference: Set `epochs = 0` in each model's `main_alvs.py` file, and run to utilize TWM.
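One plausible reading of the `epochs = 0` setting is that the training loop simply runs zero times, so the script goes straight to inference. The sketch below illustrates that pattern only; the actual control flow of `main_alvs.py` is not shown here, and `run`, `train_step`, and `infer` are hypothetical names.

```python
def run(epochs, train_step, infer):
    """Train for `epochs` passes, then run inference.

    With epochs = 0 the loop body never executes, so only inference runs.
    """
    for epoch in range(epochs):
        train_step(epoch)
    return infer()


# Hypothetical stand-ins for the real training and inference routines.
log = []
result = run(0, train_step=lambda e: log.append(e), infer=lambda: "inference")
print(log, result)
```

Under this assumption, the same entry point serves both training and inference, and the epoch count is the only switch between them.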

TWM is applied to the following nine models:

- Vision Transformers are Parameter-Efficient Audio-Visual Learners (LAVisH)

- Cross-modal Prompts: Adapting Large Pre-trained Models for Audio-Visual Downstream Tasks (DG-SCT)

- Tackling Data Bias in MUSIC-AVQA: Crafting a Balanced Dataset for Unbiased Question-Answering (LAST-Att)

- GIT: A Generative Image-to-text Transformer for Vision and Language (Git)

- Action knowledge for video captioning with graph neural networks (AKGNN)

- NarrativeBridge: Enhancing Video Captioning with Causal-Temporal Narrative (CEN)

- VindLU: A Recipe for Effective Video-and-Language Pretraining (VINDLU)

- TESTA: Temporal-Spatial Token Aggregation for Long-form Video-Language Understanding (TESTA)

- Learning Video Context as Interleaved Multimodal Sequences (MovieSeq)

We thank the authors of the open-source works listed above for their outstanding contributions.

If our work has been helpful to your research, we would appreciate it if you could cite the following paper:

@inproceedings{diao2025twm,
  title={Temporal Working Memory: Query-Guided Segment Refinement for Enhanced Multimodal Understanding},
  author={Diao, Xingjian and Zhang, Chunhui and Wu, Weiyi and Ouyang, Zhongyu and Qing, Peijun and Cheng, Ming and Vosoughi, Soroush and Gui, Jiang},
  booktitle={Findings of the Association for Computational Linguistics: NAACL 2025},
  year={2025}
}

If you have any questions, suggestions, or bug reports, please contact [email protected]