
- Feb 17, 2025: 👋 We release the inference code and model weights of Step-Audio-Chat, Step-Audio-TTS-3B, and Step-Audio-Tokenizer.

- Feb 17, 2025: 👋 We release the multi-turn audio benchmark of StepEval-Audio-360.

- Feb 17, 2025: 👋 We release the technical report of Step-Audio.

Step-Audio is the first production-ready open-source framework for intelligent speech interaction that harmonizes comprehension and generation, supporting multilingual conversations (e.g., Chinese, English, Japanese), emotional tones (e.g., joy/sadness), regional dialects (e.g., Cantonese/Sichuanese), adjustable speech rates, and prosodic styles (e.g., rap). Step-Audio demonstrates four key technical innovations:

- 130B-Parameter Multimodal Model: A single unified model integrating comprehension and generation capabilities, performing speech recognition, semantic understanding, dialogue, voice cloning, and speech synthesis. We have made the 130B Step-Audio-Chat variant open source.

- Generative Data Engine: Eliminates traditional TTS's reliance on manual data collection by generating high-quality audio through our 130B-parameter multimodal model. Leverages this data to train and publicly release a resource-efficient Step-Audio-TTS-3B model with enhanced instruction-following capabilities for controllable speech synthesis.

- Granular Voice Control: Enables precise regulation through instruction-based control design, supporting multiple emotions (anger, joy, sadness), dialects (Cantonese, Sichuanese, etc.), and vocal styles (rap, a cappella humming) to meet diverse speech generation needs.

- Enhanced Intelligence: Improves agent performance in complex tasks through ToolCall mechanism integration and role-playing enhancements.

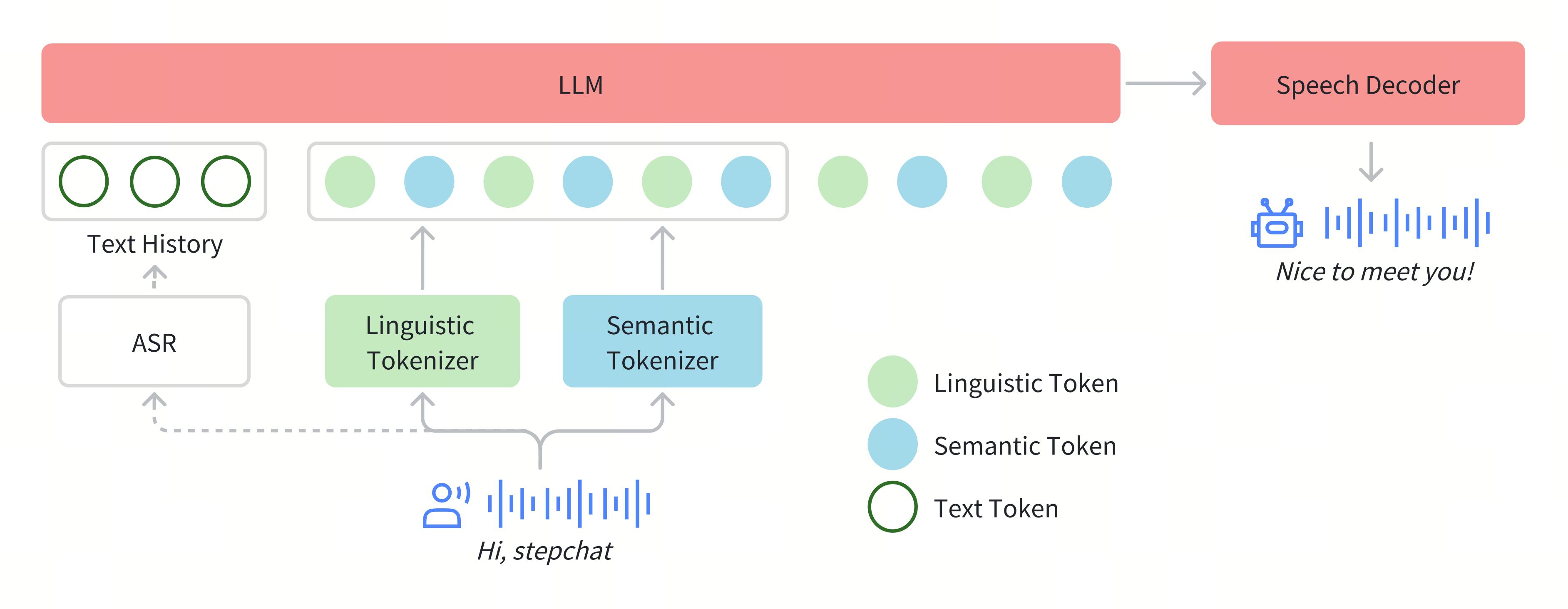

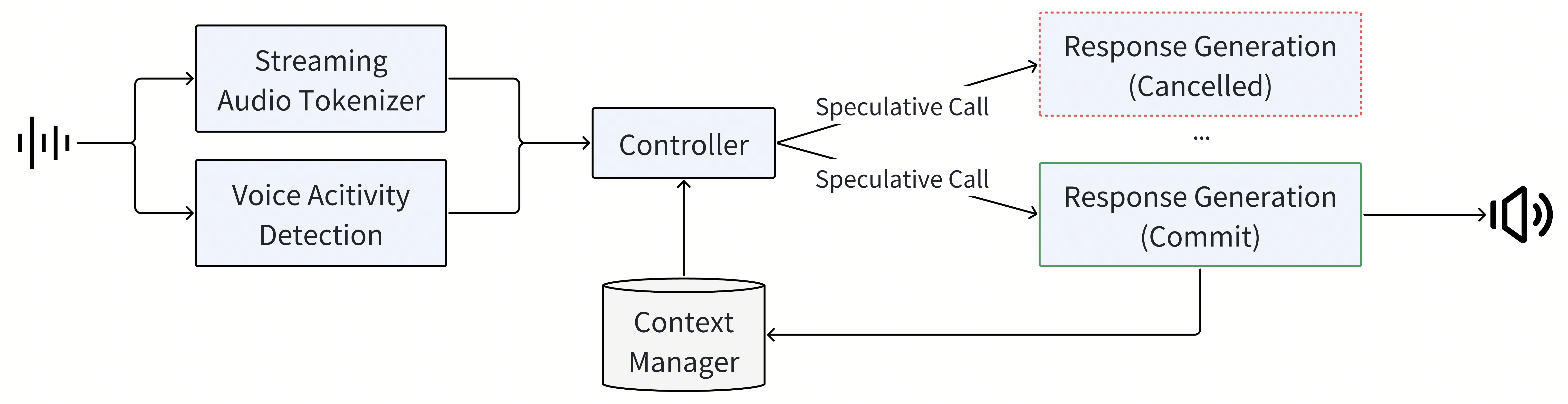

In Step-Audio, audio streams are tokenized via a dual-codebook framework that combines parallel semantic (16.7Hz, 1024-entry codebook) and acoustic (25Hz, 4096-entry codebook) tokenizers with 2:3 temporal interleaving. A 130B-parameter LLM foundation (Step-1) is further enhanced via audio-contextualized continual pretraining and task-specific post-training, enabling robust cross-modal speech understanding. A hybrid speech decoder combines flow matching with neural vocoding and is optimized for real-time waveform generation. A streaming-aware architecture features speculative response generation (40% commit rate) and text-based context management (14:1 compression ratio) for efficient cross-modal alignment.

We implement a token-level interleaving approach to effectively integrate semantic tokenization and acoustic tokenization. The semantic tokenizer employs a codebook size of 1024, while the acoustic tokenizer utilizes a larger codebook size of 4096 to capture finer acoustic details. Given the differing token rates, we establish a temporal alignment ratio of 2:3, where every two semantic tokens are paired with three acoustic tokens.
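The 2:3 interleaving described above can be illustrated with a minimal sketch. The function name, the stream tags, the choice to emit the semantic tokens first within each group, and the dropping of an incomplete trailing group are all assumptions made for illustration; the released tokenizer may order and pad tokens differently.

```python
from typing import List, Tuple

SEMANTIC_PER_GROUP = 2  # from the 2:3 ratio stated above
ACOUSTIC_PER_GROUP = 3


def interleave_tokens(semantic: List[int], acoustic: List[int]) -> List[Tuple[str, int]]:
    """Merge two token streams into groups of 2 semantic + 3 acoustic tokens.

    Assumption of this sketch: semantic tokens come first in each group, and
    trailing tokens that do not fill a complete group are dropped.
    """
    groups = min(len(semantic) // SEMANTIC_PER_GROUP,
                 len(acoustic) // ACOUSTIC_PER_GROUP)
    merged: List[Tuple[str, int]] = []
    for g in range(groups):
        s = g * SEMANTIC_PER_GROUP
        a = g * ACOUSTIC_PER_GROUP
        merged.extend(("sem", t) for t in semantic[s:s + SEMANTIC_PER_GROUP])
        merged.extend(("aco", t) for t in acoustic[a:a + ACOUSTIC_PER_GROUP])
    return merged


if __name__ == "__main__":
    semantic_ids = [11, 12, 13, 14]                   # ids in [0, 1024)
    acoustic_ids = [201, 202, 203, 204, 205, 206]     # ids in [0, 4096)
    for stream, token in interleave_tokens(semantic_ids, acoustic_ids):
        print(stream, token)
```

With the rates given earlier, two semantic tokens at 16.7Hz and three acoustic tokens at 25Hz both span roughly 120 ms, so each group of five tokens covers the same stretch of audio and the combined stream runs at about 41.7 tokens per second.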

To enhance Step-Audio’s ability to effectively process speech information and achieve accurate speech-text alignment, we conducted audio continual pretraining based on Step-1, a 130-billion-parameter pretrained text-based large language model (LLM).

The speech decoder in Step-Audio serves a critical function in converting discrete speech tokens, which contain both semantic and acoustic information, into continuous time-domain waveforms that represent natural speech. The decoder architecture incorporates a flow matching model and a mel-to-wave vocoder. To optimize the intelligibility and naturalness of the synthesized speech, the speech decoder is trained using a dual-code interleaving approach, ensuring seamless integration of semantic and acoustic features throughout the generation process.

To enable real-time interactions, we have designed an optimized inference pipeline. At its core, the Controller module manages state transitions, orchestrates speculative response generation, and ensures seamless coordination between critical subsystems. These subsystems include Voice Activity Detection (VAD) for detecting user speech, the Streaming Audio Tokenizer for processing audio in real-time, the Step-Audio language model and Speech Decoder for processing and generating responses, and the Context Manager for preserving conversational continuity.
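To make the Controller's role more concrete, here is a toy state machine for speculative response generation. It is a sketch under stated assumptions, not the project's implementation: the state names, the event methods (`on_speech`, `on_pause`, `on_turn_end`), and the `generate` callable standing in for the language model and speech decoder are all hypothetical, and the tokenizer and Context Manager are omitted. It also assumes that "commit rate" means the share of speculative drafts that end up being used.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, List, Optional


class State(Enum):
    LISTENING = auto()    # the user is (or may still be) speaking
    SPECULATING = auto()  # a draft response exists for a detected pause
    RESPONDING = auto()   # the draft was committed as the reply


@dataclass
class Controller:
    generate: Callable[[str], str]  # hypothetical stand-in for LLM + decoder
    state: State = State.LISTENING
    heard: List[str] = field(default_factory=list)
    draft: Optional[str] = None
    speculated: int = 0
    committed: int = 0

    def on_speech(self, chunk: str) -> None:
        """VAD reports user speech: any pending draft is stale, so drop it."""
        self.heard.append(chunk)
        self.draft = None
        self.state = State.LISTENING

    def on_pause(self) -> None:
        """VAD reports a pause: prepare a response before the turn is confirmed."""
        self.draft = self.generate(" ".join(self.heard))
        self.speculated += 1
        self.state = State.SPECULATING

    def on_turn_end(self) -> str:
        """The pause is confirmed as the end of the turn: commit the draft."""
        if self.draft is None:
            self.on_pause()
        response, self.draft = self.draft, None
        self.committed += 1
        self.heard.clear()
        self.state = State.RESPONDING
        return response

    @property
    def commit_rate(self) -> float:
        """Fraction of speculative drafts that were actually committed."""
        return self.committed / self.speculated if self.speculated else 0.0


if __name__ == "__main__":
    ctl = Controller(generate=lambda text: "reply to: " + text)
    ctl.on_speech("tell me")
    ctl.on_pause()            # draft 1, discarded when the user keeps talking
    ctl.on_speech("a joke")
    ctl.on_pause()            # draft 2
    print(ctl.on_turn_end())  # draft 2 is committed
    print(ctl.commit_rate)    # 1 committed out of 2 speculated
```

The point of the design is latency: a draft that is already prepared when the turn ends can be played immediately, at the cost of discarding drafts whenever the user resumes speaking.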

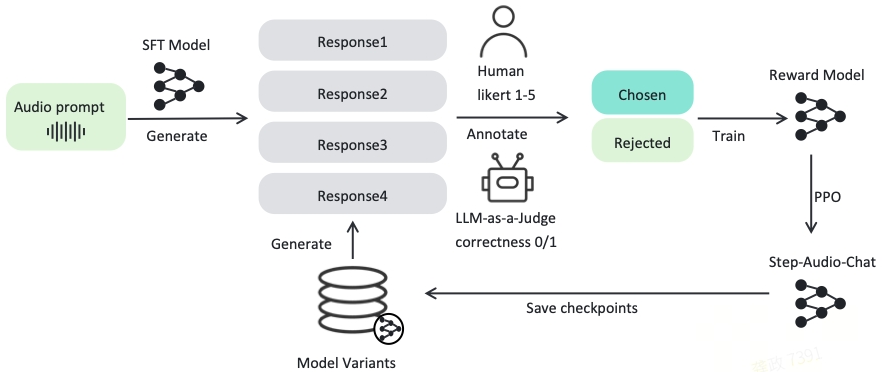

In the post-training phase, we conducted task-specific Supervised Fine-Tuning (SFT) for Automatic Speech Recognition (ASR) and Text-to-Speech (TTS). For Audio Input Text Output (AQTA) tasks, we implemented SFT using diversified high-quality datasets combined with Reinforcement Learning from Human Feedback (RLHF) to enhance response quality, enabling fine-grained control over emotional expression, speech speed, dialect, and prosody.

| Models | Links |
|---|---|
| Step-Audio-Tokenizer | 🤗huggingface |
| Step-Audio-Chat | 🤗huggingface |
| Step-Audio-TTS-3B | 🤗huggingface |

| Models | Links |
|---|---|
| Step-Audio-Tokenizer | modelscope |
| Step-Audio-Chat | modelscope |
| Step-Audio-TTS-3B | modelscope |

The following table shows the requirements for running the Step-Audio models (batch size = 1):

| Model | Setting (sample frequency) | GPU Minimum Memory |
|---|---|---|
| Step-Audio-Tokenizer | 41.6Hz | 1.5GB |
| Step-Audio-Chat | 41.6Hz | 265GB |
| Step-Audio-TTS-3B | 41.6Hz | 8GB |
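As a rough sanity check on the table above, the memory needed for the weights alone can be estimated from the parameter count. The sketch below assumes 16-bit (2-byte) weights, which this document does not state, and takes the 3B figure from the Step-Audio-TTS-3B model name; activations, caches, and framework overhead are not modeled and would account for the remainder.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone, in decimal gigabytes (assumes 16-bit weights)."""
    return num_params * bytes_per_param / 1e9


def gpus_needed(required_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of equally sized GPUs whose total memory covers the requirement."""
    return math.ceil(required_gb / gpu_memory_gb)


if __name__ == "__main__":
    print(weight_memory_gb(130e9))  # Step-Audio-Chat weights: 260.0
    print(weight_memory_gb(3e9))    # Step-Audio-TTS-3B weights: 6.0
    print(gpus_needed(265, 80))     # 80GB GPUs needed for the 265GB minimum: 4
```

Under these assumptions the weights of the 130B model take about 260GB, close to the 265GB minimum listed, and that minimum fits on four 80GB GPUs.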

- An NVIDIA GPU with CUDA support is required.

- The models were tested on four A800 80GB GPUs.

- Recommended: 4x A800/H800 GPUs with 80GB memory each, for better generation quality.

- Tested operating system: Linux

- Python >= 3.10.0 (Anaconda or Miniconda is recommended)

- PyTorch >= 2.3-cu121

- CUDA Toolkit

Clone the repository and set up the environment:

    git clone https://github.com/stepfun-ai/Step-Audio.git
    conda create -n stepaudio python=3.10
    conda activate stepaudio

    cd Step-Audio
    pip install -r requirements.txt

Download the model weights:

    git lfs install
    git clone https://huggingface.co/stepfun-ai/Step-Audio-Tokenizer
    git clone https://huggingface.co/stepfun-ai/Step-Audio-Chat
    git clone https://huggingface.co/stepfun-ai/Step-Audio-TTS-3B

After downloading the models, where_you_download_dir should have the following structure:

    where_you_download_dir
    ├── Step-Audio-Tokenizer
    ├── Step-Audio-Chat
    └── Step-Audio-TTS-3B
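Before running inference, it can help to confirm that the download directory matches the layout above. The helper below is a small illustrative sketch, not part of the repository; the function name `missing_model_dirs` is hypothetical, and it only checks that the three directories exist, not that their contents are complete.

```python
import sys
from pathlib import Path
from typing import List

# Directory names taken from the layout shown above.
EXPECTED_DIRS = ["Step-Audio-Tokenizer", "Step-Audio-Chat", "Step-Audio-TTS-3B"]


def missing_model_dirs(download_dir: str) -> List[str]:
    """Return the expected model directories that are absent from download_dir."""
    root = Path(download_dir)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "where_you_download_dir"
    missing = missing_model_dirs(target)
    if missing:
        print("Missing model directories:", ", ".join(missing))
    else:
        print("All model directories found in", target)
```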

Run inference with end-to-end audio/text input and audio/text output:

    python offline_inference.py --model-path where_you_download_dir

Run TTS inference with the default speaker, or clone a new speaker's voice:

    python tts_inference.py --model-path where_you_download_dir --output-path where_you_save_audio_dir --synthesis-type use_tts_or_clone

A speaker information dict is required for clone mode, formatted as follows:

    {
        "speaker": "speaker id",
        "prompt_text": "content of prompt wav",
        "wav_path": "prompt wav path"
    }

Start a local server for online inference. This assumes you have 4 GPUs available and have already downloaded all the models.

    python app.py --model-path where_you_download_dir

In the table below, Whisper Large-v3, Qwen2-Audio, MinMo, and LUCY use hidden feature modeling; Moshi, GLM-4-voice Base, GLM-4-voice Chat, Step-Audio Pretrain, and Step-Audio-Chat use discrete audio token modeling.

| Dataset | Whisper Large-v3 | Qwen2-Audio | MinMo | LUCY | Moshi | GLM-4-voice Base | GLM-4-voice Chat | Step-Audio Pretrain | Step-Audio-Chat |
|---|---|---|---|---|---|---|---|---|---|
| Aishell-1 | 5.14 | 1.53 | - | 2.4 | - | 2.46 | 226.47 | 0.87 | 1.95 |
| Aishell-2 ios | 4.76 | 3.06 | 2.69 | - | - | - | 211.3 | 2.91 | 3.57 |
| Wenetspeech test-net | 9.68 | 7.72 | 6.64 | 8.78 | - | - | 146.05 | 7.62 | 8.75 |
| Wenet test-meeting | 18.54 | 8.4 | 7.6 | 10.42 | - | - | 140.82 | 7.78 | 9.52 |
| Librispeech test-clean | 1.9 | 1.6 | 1.6 | 3.36 | 5.7 | 2.82 | 75.39 | 2.36 | 3.11 |
| Librispeech test-other | 3.65 | 3.6 | 3.82 | 8.05 | - | 7.66 | 80.3 | 6.32 | 8.44 |
| AVG | 7.28 | 4.32 | - | - | - | - | 146.74 | 4.64 | 5.89 |

| Model | test-zh CER (%) ↓ | test-en WER (%) ↓ |
|---|---|---|
| GLM-4-Voice | 2.19 | 2.91 |
| MinMo | 2.48 | 2.90 |
| Step-Audio | 1.53 | 2.71 |
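CER and WER are edit-distance error rates: the number of substitutions, deletions, and insertions needed to turn the recognized text into the reference, divided by the reference length in characters or words. The sketch below shows the standard computation. It is not the evaluation script behind these tables, and it applies no text normalization (casing, punctuation), which real evaluations usually do.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two token sequences (row-by-row DP)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            cur.append(min(prev[j] + 1,          # deletion
                           cur[j - 1] + 1,       # insertion
                           prev[j - 1] + cost))  # substitution or match
        prev = cur
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent; the reference must contain at least one word."""
    ref = reference.split()
    return 100.0 * edit_distance(ref, hypothesis.split()) / len(ref)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate in percent, ignoring spaces; reference must be non-empty."""
    ref = list(reference.replace(" ", ""))
    hyp = list(hypothesis.replace(" ", ""))
    return 100.0 * edit_distance(ref, hyp) / len(ref)


if __name__ == "__main__":
    print(wer("the cat sat", "the bat sat"))    # one substitution out of three words
    print(cer("你好吗", "你好"))                  # one deletion out of three characters
    print(wer("hi", "hi there how are you"))    # four insertions against one word
```

Because insertions count as errors while the denominator is the reference length, an error rate can exceed 100% when the output contains much more text than the reference.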

Note: Step-Audio-TTS-3B-Single denotes the dual-codebook backbone with a single-codebook vocoder.

| Model | test-zh CER (%) ↓ | test-zh SS ↑ | test-en WER (%) ↓ | test-en SS ↑ |
|---|---|---|---|---|
| FireRedTTS | 1.51 | 0.630 | 3.82 | 0.460 |
| MaskGCT | 2.27 | 0.774 | 2.62 | 0.774 |
| CosyVoice | 3.63 | 0.775 | 4.29 | 0.699 |
| CosyVoice 2 | 1.45 | 0.806 | 2.57 | 0.736 |
| CosyVoice 2-S | 1.45 | 0.812 | 2.38 | 0.743 |
| Step-Audio-TTS-3B-Single | 1.37 | 0.802 | 2.52 | 0.704 |
| Step-Audio-TTS-3B | 1.31 | 0.733 | 2.31 | 0.660 |
| Step-Audio-TTS | 1.17 | 0.73 | 2.0 | 0.660 |

| Token | test-zh CER (%) ↓ | test-zh SS ↑ | test-en WER (%) ↓ | test-en SS ↑ |
|---|---|---|---|---|
| Groundtruth | 0.972 | - | 2.156 | - |
| CosyVoice | 2.857 | 0.849 | 4.519 | 0.807 |
| Step-Audio-TTS-3B | 2.192 | 0.784 | 3.585 | 0.742 |

We release StepEval-Audio-360 as a new benchmark. It consists of 100 multi-turn Chinese prompts sourced from real users and is designed to evaluate the quality of generated responses across the following dimensions: Voice Instruction Following, Voice Understanding, Logical Reasoning, Role-playing, Creativity, Sing, Language Ability, Speech Emotion Control, Gaming.

| Model | Factuality (% ↑) | Relevance (% ↑) | Chat Score ↑ |
|---|---|---|---|
| GLM4-Voice | 54.7 | 66.4 | 3.49 |
| Qwen2-Audio | 22.6 | 26.3 | 2.27 |
| Moshi* | 1.0 | 0 | 1.49 |
| Step-Audio-Chat | 66.4 | 75.2 | 4.11 |

*Note: Results for Moshi are marked with "*" and should be considered for reference only.

| Model | Llama Question | Web Questions | TriviaQA* | ComplexBench | HSK-6 |
|---|---|---|---|---|---|
| GLM4-Voice | 64.7 | 32.2 | 39.1 | 66.0 | 74.0 |
| Moshi | 62.3 | 26.6 | 22.8 | - | - |
| Freeze-Omni | 72.0 | 44.7 | 53.9 | - | - |
| LUCY | 59.7 | 29.3 | 27.0 | - | - |
| MinMo | 78.9 | 55.0 | 48.3 | - | - |
| Qwen2-Audio | 52.0 | 27.0 | 37.3 | 54.0 | - |
| Step-Audio-Chat | 81.0 | 75.1 | 58.0 | 74.0 | 86.0 |

Note: Results on the TriviaQA dataset, marked with "*", are for reference only.

| Category | Instruction Following (GLM-4-Voice) | Instruction Following (Step-Audio) | Audio Quality (GLM-4-Voice) | Audio Quality (Step-Audio) |
|---|---|---|---|---|
| Languages | 1.9 | 3.8 | 2.9 | 3.3 |
| Role-playing | 3.8 | 4.2 | 3.2 | 3.6 |
| Singing / RAP | 2.1 | 2.4 | 2.4 | 4 |
| Voice Control | 3.6 | 4.4 | 3.3 | 4.1 |

The online version of Step-Audio can be accessed through the 跃问 app, where some impressive examples can be found as well.

| role | prompt wav | clone wav |
|---|---|---|
| 于谦 | google drive audio file | google drive audio file |
| 李雪琴 | google drive audio file | google drive audio file |

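| prompt | response |
|---|---|
| Human: 说一个绕口令 (Say a tongue twister.) Assistant: 吃葡萄不吐葡萄皮,不吃葡萄倒吐葡萄皮 (Eat grapes without spitting out the skins; eat no grapes, yet spit out the skins.) Human: 哎,你能把这个绕口令说的再快一点吗? (Hey, can you say this tongue twister a bit faster?) | google drive audio file |
| Human: 说一个绕口令 (Say a tongue twister.) Assistant: 吃葡萄不吐葡萄皮,不吃葡萄倒吐葡萄皮 (Eat grapes without spitting out the skins; eat no grapes, yet spit out the skins.) Human: 哎,你能把这个绕口令说的再快一点吗? (Hey, can you say this tongue twister a bit faster?) Assistant: 吃葡萄不吐葡萄皮,不吃葡萄倒吐葡萄皮 (Eat grapes without spitting out the skins; eat no grapes, yet spit out the skins.) Human: 呃,你再用非常非常慢的速度说一遍的。 (Uh, say it once more at a very, very slow speed.) | google drive audio file |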

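| prompt | response |
|---|---|
| Human: 你这语气又不撒娇又不卖萌的,要不你撒个娇卖个萌吧。 (Your tone is neither coy nor cute. How about acting a little coy and cute?) | google drive audio file |
| Human: 怎么办?我感觉我的人生很失败。 (What should I do? I feel like my life is a failure.) | google drive audio file |
| Human: 小跃。你真的是。特别厉害。 (Xiaoyue. You really are. Amazing.) | google drive audio file |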

| prompt | response |
|---|---|
| Human: What did the speaker mean when they said, it's raining cats and dogs? Assistant: When they say "It's raining cats and dogs," it just means it's raining really hard. The speaker isn't literally saying cats and dogs are falling from the sky! It's just a fun way to describe heavy rain. | google drive audio file |
| Human: こんにちは。 (Hello.) Assistant: こんにちは!何か手伝いましょうか? (Hello! Can I help you with something?) | google drive audio file |

| prompt | response |
|---|---|
| Human: 唱一段rap (Sing a rap.) | google drive audio file |

Our manuscript has been submitted to arXiv and is currently under review. The official preprint link and citation will be provided once the review is complete.

    @misc{stepaudiotechnicalreport,
      title={Step-Audio: Unified Understanding and Generation in Intelligent Speech Interaction},
      author={Step-Audio Team},
      year={2025},
    }