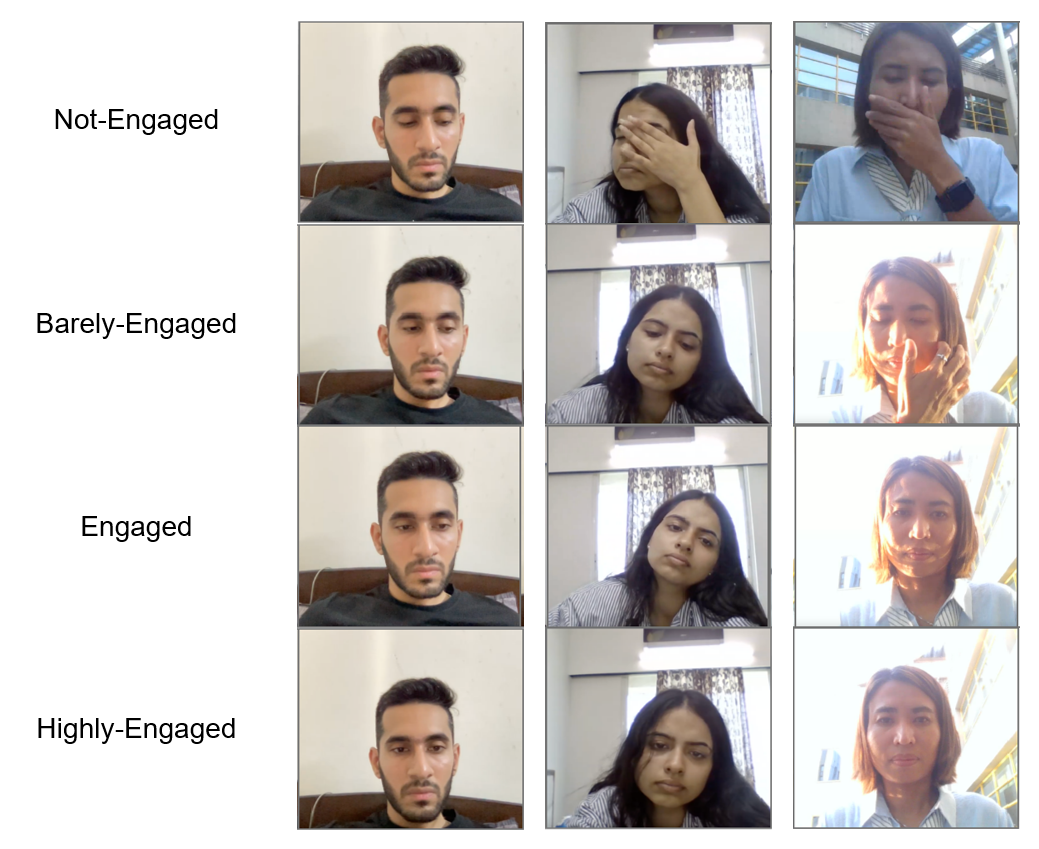

For the ACM EmotiW 2024 challenge, we focused on one subchallenge: engagement classification on videos.

Check out our PowerPoint here

We worked with the EngageNet dataset and a pre-ensemble baseline.

Using data augmentation (flips and color filters), we ensured that each class has at least 3500 videos.
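The augmentation step could be sketched roughly as follows. This is a minimal illustration, not the code in `notebooks/augmentation`: it assumes each video is already decoded into a NumPy array of shape `(frames, height, width, channels)`, and the function names, the channel gains, and the class labels in the usage note are all hypothetical.

```python
import numpy as np

def horizontal_flip(video: np.ndarray) -> np.ndarray:
    """Mirror every frame left-to-right; video has shape (T, H, W, C)."""
    return video[:, :, ::-1, :].copy()

def color_filter(video: np.ndarray, gains=(1.1, 1.0, 0.9)) -> np.ndarray:
    """Scale each color channel by a gain, then clip back to the uint8 range."""
    scaled = video.astype(np.float32) * np.asarray(gains, dtype=np.float32)
    return np.clip(scaled, 0, 255).astype(np.uint8)

def extra_needed(class_counts: dict, minimum: int = 3500) -> dict:
    """Number of augmented videos each class still needs to reach `minimum`."""
    return {label: max(0, minimum - n) for label, n in class_counts.items()}
```

For example, `extra_needed({"class_a": 1000, "class_b": 4000})` reports that the hypothetical `class_a` needs 2500 augmented videos while `class_b` needs none.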

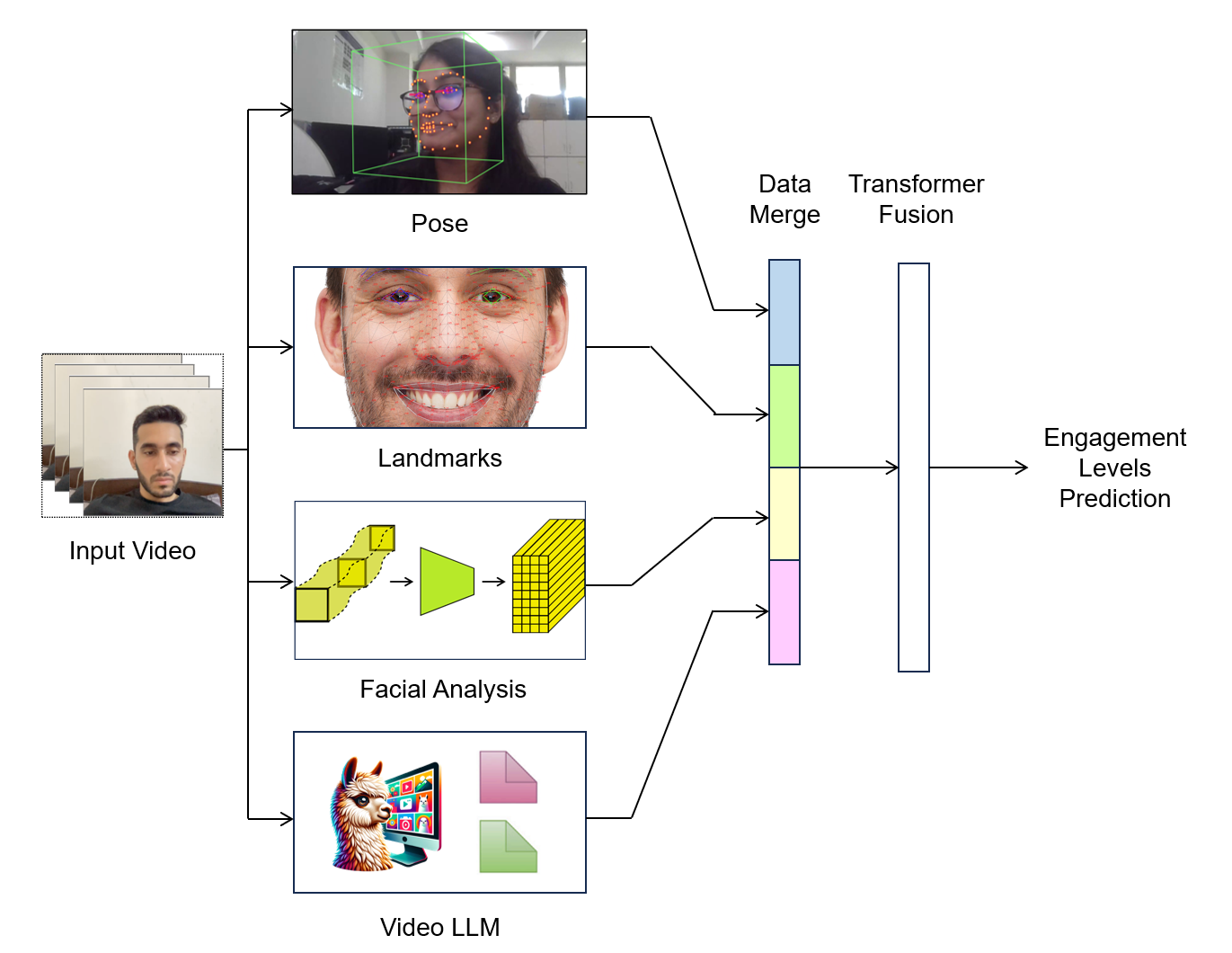

The model is an ensemble of four modalities: pose tracking, facial landmarks, facial features, and video understanding.

Structure:

- notebooks/augmentation - Data augmentation

- notebooks/preprocessing - Data preprocessing pipelines

- notebooks/ensemble - Model ensemble from different modalities

Results on the EngageNet test dataset:

| Modality | Accuracy | F1-Score |
|---|---|---|
| Pose | 0.698 | 0.69 |
| Landmark | 0.614 | 0.58 |
| Face | 0.689 | 0.67 |
| Video Understanding | 0.652 | 0.61 |

| Fusion method | Accuracy |
|---|---|
| Late-Fusion (Hard) | 0.676 |
| Late-Fusion (Soft) | 0.718 |
| Late-Fusion (Weighted) | 0.694 |
| Early-Fusion (Transformer Fusion) | 0.744 |
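For readers unfamiliar with the three late-fusion variants, here is a small sketch of how hard, soft, and weighted voting are commonly defined. It is an assumption about the general technique, not a copy of `notebooks/ensemble`: it takes per-modality class probabilities stacked into an array of shape `(n_models, n_samples, n_classes)`, and the function names and weights are hypothetical.

```python
import numpy as np

def hard_vote(probs: np.ndarray) -> np.ndarray:
    """Majority vote over each model's predicted class.

    probs has shape (n_models, n_samples, n_classes).
    Ties are broken in favor of the lowest class index.
    """
    n_classes = probs.shape[-1]
    votes = probs.argmax(axis=-1)  # (n_models, n_samples)
    counts = np.stack(
        [(votes == c).sum(axis=0) for c in range(n_classes)], axis=-1
    )  # (n_samples, n_classes)
    return counts.argmax(axis=-1)

def soft_vote(probs: np.ndarray) -> np.ndarray:
    """Average the class probabilities across models, then take the argmax."""
    return probs.mean(axis=0).argmax(axis=-1)

def weighted_vote(probs: np.ndarray, weights) -> np.ndarray:
    """Like soft voting, but each model's probabilities get their own weight."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()  # normalize so the weights sum to 1
    fused = np.tensordot(w, probs, axes=1)  # (n_samples, n_classes)
    return fused.argmax(axis=-1)
```

Hard voting discards each model's confidence, whereas soft voting keeps it, so the two can disagree when a single confident model is outvoted by two uncertain ones.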

| Modality combination | Accuracy |
|---|---|
| Pose-Land-Face | 0.743 |
| Pose-Land-Vid | 0.740 |
| Pose-Face-Vid | 0.747 |
| Land-Face-Vid | 0.695 |

| Dataset | Accuracy |
|---|---|
| Validation | 0.713 |
| Test | 0.747 |

Yichen Kang, Yanchun Zhang, Jun Wu

EESM5900V - HKUST

The Hong Kong University of Science and Technology (HKUST)