Yunlong Tang¹, Junjia Guo¹, Pinxin Liu¹, Zhiyuan Wang², Hang Hua¹, Jia-Xing Zhong³, Yunzhong Xiao⁴, Chao Huang¹, Luchuan Song¹, Susan Liang¹, Yizhi Song⁵, Liu He⁵, Jing Bi¹*, Mingqian Feng¹, Xinyang Li¹, Zeliang Zhang¹, Chenliang Xu¹

¹University of Rochester, ²UCSB, ³University of Oxford, ⁴CMU, ⁵Purdue University


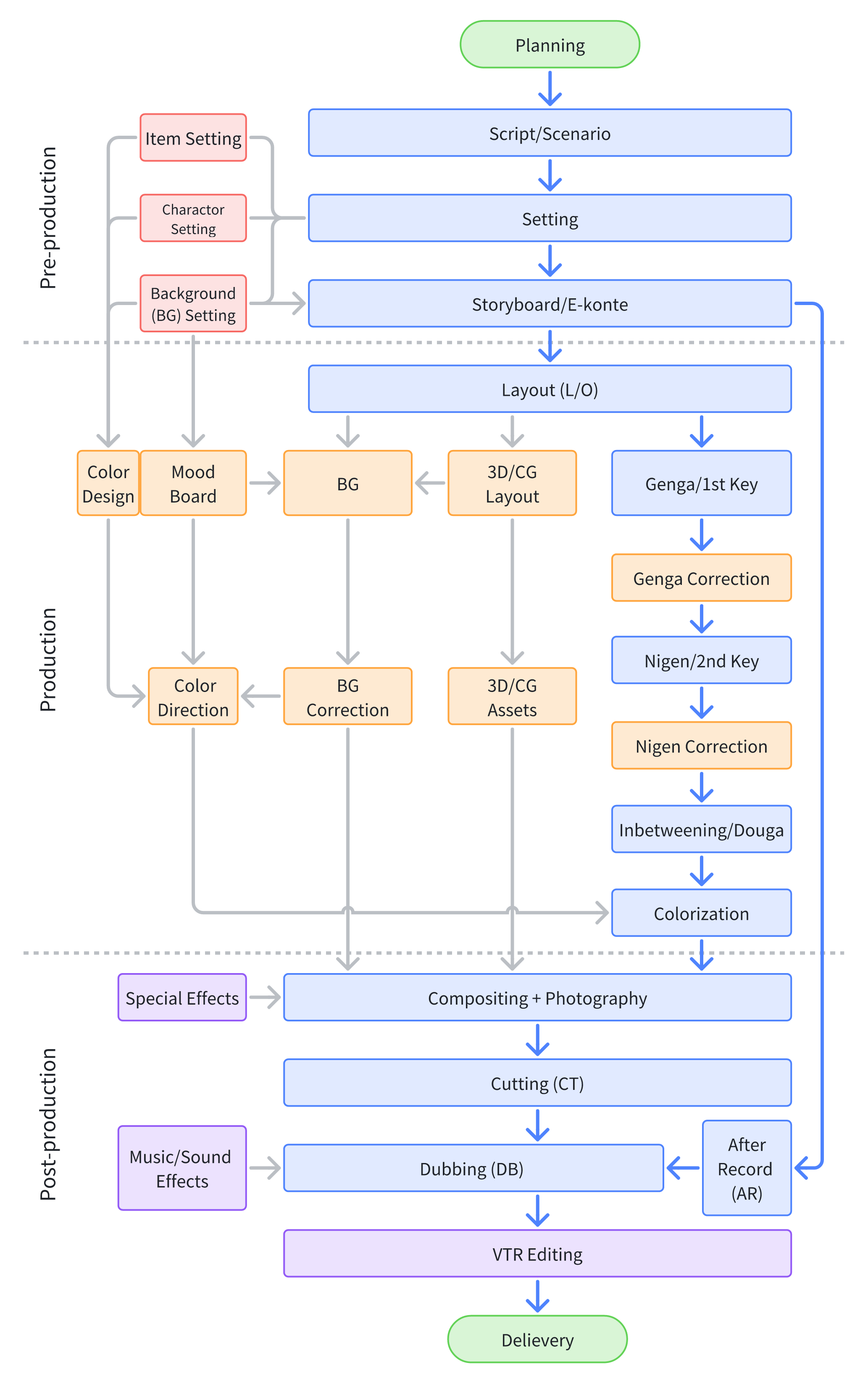

The figure above shows the production process of traditional 2D animation. The research topics below are listed roughly in that order.


A production example showing the transformation of a scene from storyboard to final compositing, demonstrating key stages including layout (L/O), keyframe animation, coloring, and background integration.

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| HoLLMwood: Unleashing the Creativity of Large Language Models in Screenwriting via Role Playing | Jing Chen, Xinyu Zhu, Cheng Yang, Chufan Shi, Yadong Xi, Yuxiang Zhang, Junjie Wang, Jiashu Pu, Rongsheng Zhang, Yujiu Yang, Tian Feng | | |
| Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context | Gemini Team Google | | |
| Claude 3.5 Sonnet | Anthropic | | |
| InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks | Zhe Chen, Jiannan Wu, Wenhai Wang, Weijie Su, Guo Chen, Sen Xing, Muyan Zhong, Qinglong Zhang, Xizhou Zhu, Lewei Lu, Bin Li, Ping Luo, Tong Lu, Yu Qiao, Jifeng Dai | Code | CVPR 2024 |
| Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond | Jinze Bai, Shuai Bai, Shusheng Yang, Shijie Wang, Sinan Tan, Peng Wang, Junyang Lin, Chang Zhou, Jingren Zhou | Code | |
| GPT-4 | OpenAI | | |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| High-Resolution Image Synthesis with Latent Diffusion Models | Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, Björn Ommer | Demo Code | CVPR 2022 |
| MidJourney | MidJourney Team | | |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| CogCartoon: Towards Practical Story Visualization | Zhongyang Zhu, Jie Tang | | |
| Sketch-Guided Scene Image Generation | Tianyu Zhang, Xiaoxuan Xie, Xusheng Du, Haoran Xie | | |
| VideoComposer: Compositional Video Synthesis with Motion Controllability | Xiang Wang, Hangjie Yuan, Shiwei Zhang, Dayou Chen, Jiuniu Wang, Yingya Zhang, Yujun Shen, Deli Zhao, Jingren Zhou | Project Page Code | NeurIPS 2023 |
| LayoutGAN: Generating Graphic Layouts with Wireframe Discriminators | Jianan Li, Jimei Yang, Aaron Hertzmann, Jianming Zhang, Tingfa Xu | Code | ICLR 2019 |
| VideoDirectorGPT: Consistent Multi-scene Video Generation via LLM-Guided Planning | Han Lin, Abhay Zala, Jaemin Cho, Mohit Bansal | Project Page Code | COLM 2024 |
| DiffSensei: Bridging Multi-Modal LLMs and Diffusion Models for Customized Manga Generation | Jianzong Wu, Chao Tang, Jingbo Wang, Yanhong Zeng, Xiangtai Li, Yunhai Tong | Project Page Code Dataset | |
| Manga Generation via Layout-controllable Diffusion | Siyu Chen, Dengjie Li, Zenghao Bao, Yao Zhou, Lingfeng Tan, Yujie Zhong, Zheng Zhao | Project Page Code | |
| CameraCtrl: Enabling Camera Control for Text-to-Video Generation | Hao He, Yinghao Xu, Yuwei Guo, Gordon Wetzstein, Bo Dai, Hongsheng Li, Ceyuan Yang | Project Page Demo | |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| ToonCrafter: Generative Cartoon Interpolation | Jinbo Xing, Hanyuan Liu, Menghan Xia, Yong Zhang, Xintao Wang, Ying Shan, Tien-Tsin Wong | Code Project | arXiv 2024 |
| Joint Stroke Tracing and Correspondence for 2D Animation | Haoran Mo, Chengying Gao, Ruomei Wang | Project Code | SIGGRAPH 2024 |
| Deep Geometrized Cartoon Line Inbetweening | Li Siyao, Tianpei Gu, Weiye Xiao, Henghui Ding, Ziwei Liu, Chen Change Loy | Demo Code Dataset | ICCV 2023 |
| Exploring Inbetween Charts with Trajectory-Guided Sliders for Cutout Animation | T Fukusato, A Maejima, T Igarashi, T Yotsukura | | MTA 2023 |
| Enhanced Deep Animation Video Interpolation | Wang Shen, Cheng Ming, Wenbo Bao, Guangtao Zhai, Li Chen, Zhiyong Gao | | arXiv 2022 |
| Improving the Perceptual Quality of 2D Animation Interpolation | Shuhong Chen, Matthias Zwicker | | arXiv 2021 |
| Deep Animation Video Interpolation in the Wild | Li Siyao, Shiyu Zhao, Weijiang Yu, Wenxiu Sun, Dimitris N. Metaxas, Chen Change Loy, Ziwei Liu | Code Dataset | arXiv 2021 |
| Deep Sketch-Guided Cartoon Video Inbetweening | Xiaoyu Li, Bo Zhang, Jing Liao, Pedro V. Sander | | arXiv 2020 |
| Optical Flow Based Line Drawing Frame Interpolation Using Distance Transform to Support Inbetweenings | Rei Narita, Keigo Hirakawa, Kiyoharu Aizawa | | IEEE 2019 |
| DiLight: Digital Light Table – Inbetweening for 2D Animations Using Guidelines | Leonardo Carvalho, Ricardo Marroquim, Emilio Vital Brazil | | Elsevier 2017 |


From: ToonCrafter: Generative Cartoon Interpolation

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Learning Inclusion Matching for Animation Paint Bucket Colorization | Yuekun Dai, Shangchen Zhou, Qinyue Li, Chongyi Li, Chen Change Loy | Project Demo Code Dataset | CVPR 2024 |
| AniDoc: Animation Creation Made Easier | Yihao Meng, Hao Ouyang, Hanlin Wang, Qiuyu Wang, Wen Wang, Ka Leong Cheng, Zhiheng Liu, Yujun Shen, Huamin Qu | Project Code | arXiv 2024 |
| ToonCrafter: Generative Cartoon Interpolation | Jinbo Xing, Hanyuan Liu, Menghan Xia, Yong Zhang, Xintao Wang, Ying Shan, Tien-Tsin Wong | Project Code | TOG 2024 |
| VToonify: Controllable High-Resolution Portrait Video Style Transfer | Shuai Yang, Liming Jiang, Ziwei Liu, Chen Change Loy | Project Code | TOG 2022 |
| StyleGANEX: StyleGAN-Based Manipulation Beyond Cropped Aligned Faces | Shuai Yang, Liming Jiang, Ziwei Liu, Chen Change Loy | Project Code Demo | ICCV 2023 |
| FRESCO: Spatial-Temporal Correspondence for Zero-Shot Video Translation | Shuai Yang, Yifan Zhou, Ziwei Liu, Chen Change Loy | Project Code Demo | CVPR 2024 |
| TokenFlow: Consistent Diffusion Features for Consistent Video Editing | Michal Geyer, Omer Bar-Tal, Shai Bagon, Tali Dekel | Project Code Demo | ICLR 2024 |
| PromptFix: You Prompt and We Fix the Photo | Yongsheng Yu, Ziyun Zeng, Hang Hua, Jianlong Fu, Jiebo Luo | Project Code | NeurIPS 2024 |
| LVCD: Reference-based Lineart Video Colorization with Diffusion Models | Zhitong Huang, Mohan Zhang, Jing Liao | Project Code | TOG 2024 |
| Coloring Anime Line Art Videos with Transformation Region Enhancement Network | Ning Wang, Muyao Niu, Zhi Dou, Zhihui Wang, Zhiyong Wang, Zhaoyan Ming, Bin Liu, Haojie Li | | Pattern Recognition 2023 |
| SketchBetween: Video-to-Video Synthesis for Sprite Animation via Sketches | Dagmar Lukka Loftsdóttir, Matthew Guzdial | Code | ECCV 2022 |
| Animation Line Art Colorization Based on Optical Flow Method | Yifeng Yu, Jiangbo Qian, Chong Wang, Yihong Dong, Baisong Liu | | SSRN 2022 |
| The Animation Transformer: Visual Correspondence via Segment Matching | Evan Casey, Víctor Pérez, Zhuoru Li, Harry Teitelman, Nick Boyajian, Tim Pulver, Mike Manh, William Grisaitis | Demo | arXiv 2021 |
| Artist-Guided Semiautomatic Animation Colorization | Harrish Thasarathan, Mehran Ebrahimi | | arXiv 2020 |
| Line Art Correlation Matching Feature Transfer Network for Automatic Animation Colorization | Zhang Qian, Wang Bo, Wen Wei, Li Hai, Liu Jun Hui | | arXiv 2020 |
| Deep Line Art Video Colorization with a Few References | Min Shi, Jia-Qi Zhang, Shu-Yu Chen, Lin Gao, Yu-Kun Lai, Fang-Lue Zhang | | arXiv 2020 |
| Automatic Temporally Coherent Video Colorization | Harrish Thasarathan, Kamyar Nazeri, Mehran Ebrahimi | Code | arXiv 2019 |
| Toona | Toona Team | | |

From: Learning Inclusion Matching for Animation Paint Bucket Colorization

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Scaling In-the-Wild Training for Diffusion-based Illumination Harmonization and Editing by Imposing Consistent Light Transport | | | |
| DoveNet: Deep Image Harmonization via Domain Verification | Wenyan Cong, Jianfu Zhang, Li Niu, Liu Liu, Zhixin Ling, Weiyuan Li, Liqing Zhang | Code Demo Dataset (Baidu Cloud, access code: kqz3) Dataset (OneDrive) | CVPR 2020 |
| High-Resolution Image Harmonization via Collaborative Dual Transformations | Wenyan Cong, Xinhao Tao, Li Niu, Jing Liang, Xuesong Gao, Qihao Sun, Liqing Zhang | Code | CVPR 2022 |
| PCT-Net: Full Resolution Image Harmonization Using Pixel-Wise Color Transformations | Julian Jorge Andrade Guerreiro, Mitsuru Nakazawa, Björn Stenger | Code | CVPR 2023 |
| SSH: A Self-Supervised Framework for Image Harmonization | Yifan Jiang, He Zhang, Jianming Zhang, Yilin Wang, Zhe Lin, Kalyan Sunkavalli, Simon Chen, Sohrab Amirghodsi, Sarah Kong, Zhangyang Wang | Code | ICCV 2021 |
| Thinking Outside the BBox: Unconstrained Generative Object Compositing | Gemma Canet Tarrés, Zhe Lin, Zhifei Zhang, Jianming Zhang, Yizhi Song, Dan Ruta, Andrew Gilbert, John Collomosse, Soo Ye Kim | | ECCV 2024 |
| Dr.Bokeh: DiffeRentiable Occlusion-aware Bokeh Rendering | Yichen Sheng, Zixun Yu, Lu Ling, Zhiwen Cao, Cecilia Zhang, Xin Lu, Ke Xian, Haiting Lin, Bedrich Benes | Code | |
| Floating No More: Object-Ground Reconstruction from a Single Image | Yunze Man, Yichen Sheng, Jianming Zhang, Liang-Yan Gui, Yu-Xiong Wang | Project Page | |
| ObjectDrop: Bootstrapping Counterfactuals for Photorealistic Object Removal and Insertion | Daniel Winter, Matan Cohen, Shlomi Fruchter, Yael Pritch, Alex Rav-Acha, Yedid Hoshen | Project Page | |
| Alchemist: Parametric Control of Material Properties with Diffusion Models | Prafull Sharma, Varun Jampani, Yuanzhen Li, Xuhui Jia, Dmitry Lagun, Fredo Durand, William T. Freeman, Mark Matthews | Project Page | CVPR 2024 |
| DisenStudio: Customized Multi-Subject Text-to-Video Generation with Disentangled Spatial Control | Hong Chen, Xin Wang, Yipeng Zhang, Yuwei Zhou, Zeyang Zhang, Siao Tang, Wenwu Zhu | Code | ACM MM 2024 |
| SSN: Soft Shadow Network for Image Compositing | Yichen Sheng, Jianming Zhang, Bedrich Benes | Project Page Code | CVPR 2021 |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Multi-modal Segment Assemblage Network for Ad Video Editing with Importance-Coherence Reward | Yunlong Tang, Siting Xu, Teng Wang, Qin Lin, Qinglin Lu, Feng Zheng | Code | ACCV 2022 |
| Reframe Anything: LLM Agent for Open World Video Reframing | Jiawang Cao, Yongliang Wu, Weiheng Chi, Wenbo Zhu, Ziyue Su, Jay Wu | | |
| OpusClip | OpusClip Team | | |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Foley Music: Learning to Generate Music from Videos | Chuang Gan, Deng Huang, Peihao Chen, Joshua B. Tenenbaum, Antonio Torralba | Code | ECCV 2020 |
| Video2Music: Suitable Music Generation from Videos using an Affective Multimodal Transformer model | | Demo Project Page Code Dataset | |
| V2Meow: Meowing to the Visual Beat via Video-to-Music Generation | Kun Su, Judith Yue Li, Qingqing Huang, Dima Kuzmin, Joonseok Lee, Chris Donahue, Fei Sha, Aren Jansen, Yu Wang, Mauro Verzetti, Timo I. Denk | Project Page | AAAI 2024 |
| MeLFusion: Synthesizing Music from Image and Language Cues using Diffusion Models | Sanjoy Chowdhury, Sayan Nag, K J Joseph, Balaji Vasan Srinivasan, Dinesh Manocha | Project Page Code Dataset | CVPR 2024 |
| VidMuse: A Simple Video-to-Music Generation Framework with Long-Short-Term Modeling | Zeyue Tian, Zhaoyang Liu, Ruibin Yuan, Jiahao Pan, Qifeng Liu, Xu Tan, Qifeng Chen, Wei Xue, Yike Guo | Code | |
| Taming Visually Guided Sound Generation | Vladimir Iashin, Esa Rahtu | Project Page Demo Code | BMVC 2021 |
| I Hear Your True Colors: Image Guided Audio Generation | Roy Sheffer, Yossi Adi | Project Page Code | ICASSP 2023 |
| FoleyGen: Visually-Guided Audio Generation | Xinhao Mei, Varun Nagaraja, Gael Le Lan, Zhaoheng Ni, Ernie Chang, Yangyang Shi, Vikas Chandra | Project Page | |
| Diff-Foley: Synchronized Video-to-Audio Synthesis with Latent Diffusion Models | Simian Luo, Chuanhao Yan, Chenxu Hu, Hang Zhao | Project Page Code | NeurIPS 2023 |
| Action2Sound: Ambient-Aware Generation of Action Sounds from Egocentric Videos | Changan Chen, Puyuan Peng, Ami Baid, Zihui Xue, Wei-Ning Hsu, David Harwath, Kristen Grauman | Project Page Code | ECCV 2024 |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| StyleDubber: Towards Multi-Scale Style Learning for Movie Dubbing | Gaoxiang Cong, Yuankai Qi, Liang Li, Amin Beheshti, Zhedong Zhang, Anton van den Hengel, Ming-Hsuan Yang, Chenggang Yan, Qingming Huang | Code | ACL 2024 |
| ANIM-400K: A Large-Scale Dataset for Automated End-To-End Dubbing of Video | Kevin Cai, Chonghua Liu, David M. Chan | Code Dataset | ICASSP 2024 |
| EmoDubber: Towards High Quality and Emotion Controllable Movie Dubbing | Gaoxiang Cong, Jiadong Pan, Liang Li, Yuankai Qi, Yuxin Peng, Anton van den Hengel, Jian Yang, Qingming Huang | Project Page & Demo | |
| From Speaker to Dubber: Movie Dubbing with Prosody and Duration Consistency Learning | Zhedong Zhang, Liang Li, Gaoxiang Cong, Haibing Yin, Yuhan Gao, Chenggang Yan, Anton van den Hengel, Yuankai Qi | Code | ACM MM 2024 |
| Learning to Dub Movies via Hierarchical Prosody Models | Gaoxiang Cong, Liang Li, Yuankai Qi, Zhengjun Zha, Qi Wu, Wenyu Wang, Bin Jiang, Ming-Hsuan Yang, Qingming Huang | Code | CVPR 2023 |
| V2C: Visual Voice Cloning | Qi Chen, Yuanqing Li, Yuankai Qi, Jiaqiu Zhou, Mingkui Tan, Qi Wu | Code | CVPR 2022 |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Re:Draw -- Context Aware Translation as a Controllable Method for Artistic Production | Joao Liborio Cardoso, Francesco Banterle, Paolo Cignoni, Michael Wimmer | | TBA 2024 |
| Scaling Concept With Text-Guided Diffusion Models | Chao Huang, Susan Liang, Yunlong Tang, Yapeng Tian, Anurag Kumar, Chenliang Xu | Project Page Code | |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Sprite-from-Sprite: Cartoon Animation Decomposition with Self-supervised Sprite Estimation | Lvmin Zhang, Tien-Tsin Wong, Yuxin Liu | Code | ACM 2022 |
| Generative Omnimatte: Learning to Decompose Video into Layers | Yao-Chih Lee, Erika Lu, Sarah Rumbley, Michal Geyer, Jia-Bin Huang, Tali Dekel, Forrester Cole | Project Page | |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Toonsynth: Example-based Synthesis of Hand-Colored Cartoon Animations | M Dvorožnák, W Li, VG Kim, D Sýkora | | TOG 2018 |
| Collaborative Neural Rendering using Anime Character Sheets | Zuzeng Lin, Ailin Huang, Zhewei Huang | Code Dataset | IJCAI 2023 |
| DrawingSpinUp: 3D Animation from Single Character Drawings | Jie Zhou, Chufeng Xiao, Miu-Ling Lam, Hongbo Fu | Code | SIGGRAPH Asia 2024 |

| Model/Paper | Authors/Team | Links | Venue |
|---|---|---|---|
| Sakuga-42M Dataset: Scaling Up Cartoon Research | Zhenglin Pan, Yu Zhu, Yuxuan Mu | Dataset | arXiv 2024 |
| Anisora: Exploring the frontiers of animation video generation in the sora era | Yudong Jiang, Baohan Xu, Siqian Yang, Mingyu Yin, Jing Liu, Chao Xu, Siqi Wang, Yidi Wu, Bingwen Zhu, Xinwen Zhang, Xingyu Zheng, Jixuan Xu, Yue Zhang, Jinlong Hou, Huyang Sun | Code | |
| ANIM-400K: A Large-Scale Dataset for Automated End-To-End Dubbing of Video | Kevin Cai, Chonghua Liu, David M. Chan | Code Dataset | ICASSP 2024 |
| V2C: Visual Voice Cloning | Qi Chen, Yuanqing Li, Yuankai Qi, Jiaqiu Zhou, Mingkui Tan, Qi Wu | Code | CVPR 2022 |
| DoveNet: Deep Image Harmonization via Domain Verification | Wenyan Cong, Jianfu Zhang, Li Niu, Liu Liu, Zhixin Ling, Weiyuan Li, Liqing Zhang | Code Demo Dataset (Baidu Cloud) Dataset (OneDrive) | CVPR 2020 |
| SSH: A Self-Supervised Framework for Image Harmonization | Yifan Jiang, He Zhang, Jianming Zhang, Yilin Wang, Zhe Lin, Kalyan Sunkavalli, Simon Chen, Sohrab Amirghodsi, Sarah Kong, Zhangyang Wang | Code Dataset | ICCV 2021 |
| Intrinsic Image Harmonization | Zonghui Guo, Haiyong Zheng, Yufeng Jiang, Zhaorui Gu, Bing Zheng | Code Dataset (Baidu Cloud) Dataset (Google Drive) | CVPR 2021 |
| Alchemist: Parametric Control of Material Properties with Diffusion Models | Prafull Sharma, Varun Jampani, Yuanzhen Li, Xuhui Jia, Dmitry Lagun, Fredo Durand, William T. Freeman, Mark Matthews | Project Page | CVPR 2024 |
| Learning Inclusion Matching for Animation Paint Bucket Colorization | Yuekun Dai, Shangchen Zhou, Qinyue Li, Chongyi Li, Chen Change Loy | Project Demo Code Dataset | CVPR 2024 |
| Deep Animation Video Interpolation in the Wild | Li Siyao, Shiyu Zhao, Weijiang Yu, Wenxiu Sun, Dimitris N. Metaxas, Chen Change Loy, Ziwei Liu | Code Data (Google Drive) Data (OneDrive) Video Demo | CVPR 2021 |
| Deep Geometrized Cartoon Line Inbetweening | Li Siyao, Tianpei Gu, Weiye Xiao, Henghui Ding, Ziwei Liu, Chen Change Loy | Demo Code Dataset | ICCV 2023 |
| AnimeRun: 2D Animation Visual Correspondence from Open Source 3D Movies | Li Siyao, Yuhang Li, Bo Li, Chao Dong, Ziwei Liu, Chen Change Loy | Project & Dataset Code | NeurIPS 2022 |

From: Sakuga-42M Dataset: Scaling Up Cartoon Research

@article{tang2025ai4anime,
  title={Generative AI for Cel-Animation: A Survey},
  author={Tang, Yunlong and Guo, Junjia and Liu, Pinxin and Wang, Zhiyuan and Hua, Hang and Zhong, Jia-Xing and Xiao, Yunzhong and Huang, Chao and Song, Luchuan and Liang, Susan and Song, Yizhi and He, Liu and Bi, Jing and Feng, Mingqian and Li, Xinyang and Zhang, Zeliang and Xu, Chenliang},
  journal={arXiv preprint arXiv:2501.06250},
  url={https://arxiv.org/abs/2501.06250},
  year={2025}
}