This repository contains PyTorch (v0.4.0) implementations of typical policy gradient (PG) algorithms.

- Vanilla Policy Gradient [1]

- Truncated Natural Policy Gradient [4]

- Trust Region Policy Optimization [5]

- Proximal Policy Optimization [7]

We have implemented and trained the agents with the PG algorithms using the following benchmarks. Trained agents are also provided in our repo!

- mujoco-py: https://github.com/openai/mujoco-py

- Unity ml-agent: https://github.com/Unity-Technologies/ml-agents

For reference, reviews (in Korean) of the PG papers listed below are available at https://reinforcement-learning-kr.github.io/2018/06/29/0_pg-travel-guide/. Enjoy!

- [1] R. Sutton, et al., "Policy Gradient Methods for Reinforcement Learning with Function Approximation", NIPS 2000.

- [2] D. Silver, et al., "Deterministic Policy Gradient Algorithms", ICML 2014.

- [3] T. Lillicrap, et al., "Continuous Control with Deep Reinforcement Learning", ICLR 2016.

- [4] S. Kakade, "A Natural Policy Gradient", NIPS 2002.

- [5] J. Schulman, et al., "Trust Region Policy Optimization", ICML 2015.

- [6] J. Schulman, et al., "High-Dimensional Continuous Control using Generalized Advantage Estimation", ICLR 2016.

- [7] J. Schulman, et al., "Proximal Policy Optimization Algorithms", arXiv, https://arxiv.org/pdf/1707.06347.pdf.


Navigate to the pg_travel/mujoco folder

Train a Hopper agent with PPO on Hopper-v2 without rendering.

python main.py

- Note that models are saved in the save_model folder automatically every 100th iteration.

python main.py --algorithm TRPO --env HalfCheetah-v2 --render --load_model ckpt_736.pth.tar

- algorithm: PG, TNPG, TRPO, PPO (default)

- env: Ant-v2, HalfCheetah-v2, Hopper-v2 (default), Humanoid-v2, HumanoidStandup-v2, InvertedPendulum-v2, Reacher-v2, Swimmer-v2, Walker2d-v2
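The options above could be wired up with `argparse` roughly as follows. This is a minimal sketch, not the repository's actual `main.py`: the `build_parser` helper is hypothetical, and the use of `argparse` with `choices` validation is an assumption. Only the flag names, the default values, and the allowed values are taken from the lists above.

```python
import argparse

# Allowed values copied from the option lists above.
ALGORITHMS = ["PG", "TNPG", "TRPO", "PPO"]
ENVS = [
    "Ant-v2", "HalfCheetah-v2", "Hopper-v2", "Humanoid-v2",
    "HumanoidStandup-v2", "InvertedPendulum-v2", "Reacher-v2",
    "Swimmer-v2", "Walker2d-v2",
]


def build_parser():
    """Hypothetical helper: build a parser that mirrors the documented flags."""
    parser = argparse.ArgumentParser(description="Train or replay a PG agent")
    # Defaults follow the "(default)" markers in the lists above.
    parser.add_argument("--algorithm", choices=ALGORITHMS, default="PPO")
    parser.add_argument("--env", choices=ENVS, default="Hopper-v2")
    # Boolean switch: present means render, absent means no rendering.
    parser.add_argument("--render", action="store_true")
    # Optional checkpoint file name, e.g. ckpt_736.pth.tar.
    parser.add_argument("--load_model", default=None)
    return parser


# Parse an explicit argument list instead of sys.argv for illustration.
args = build_parser().parse_args(
    ["--algorithm", "TRPO", "--env", "HalfCheetah-v2", "--render"]
)
print(args.algorithm, args.env, args.render, args.load_model)
```

With `choices`, an unsupported algorithm or environment name is rejected at parse time rather than failing later during training.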

Hyperparameters are listed in hparams.py.

Change the hyperparameters according to your preference.

We have integrated TensorboardX to monitor training progress.

- Note that training results are automatically saved in the runs folder.

- TensorboardX is a TensorBoard-like visualization tool for PyTorch.

Navigate to the pg_travel/mujoco folder

tensorboard --logdir runs

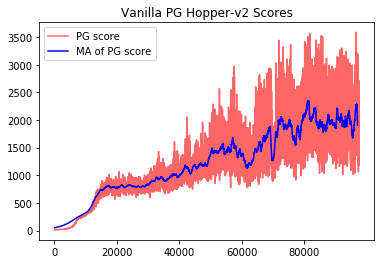

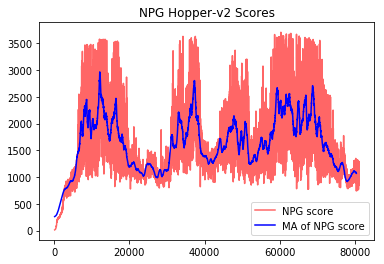

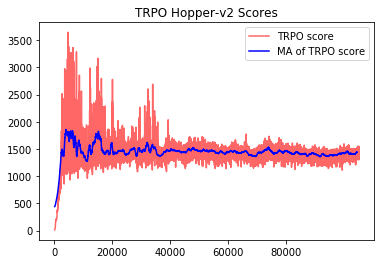

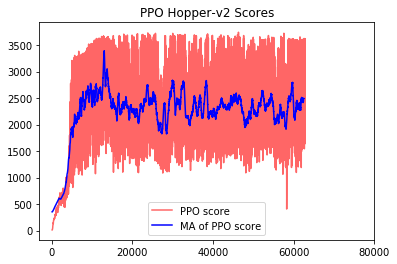

We have trained the agents with four different PG algorithms using the Hopper-v2 env.

| Algorithm | Score | GIF |
|---|---|---|
| Vanilla PG |  |  |
| NPG |  |  |
| TRPO |  |  |
| PPO |  |  |

We have modified the Walker environment provided by Unity ml-agents.

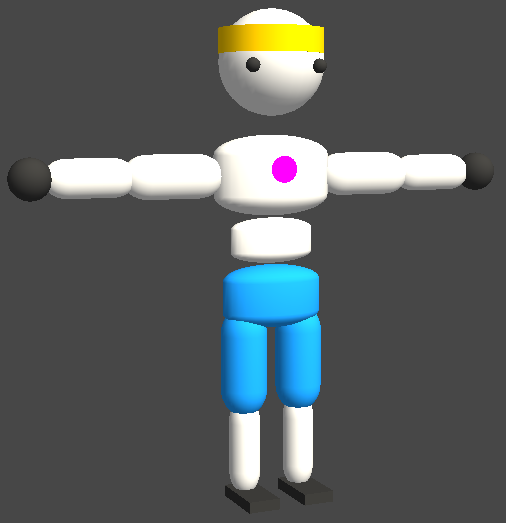

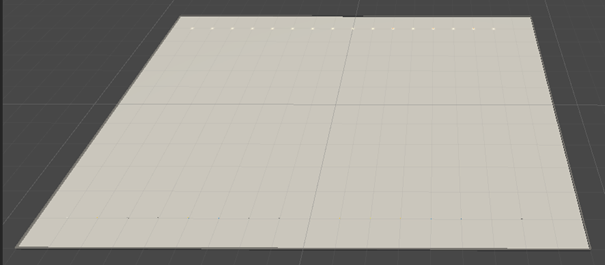

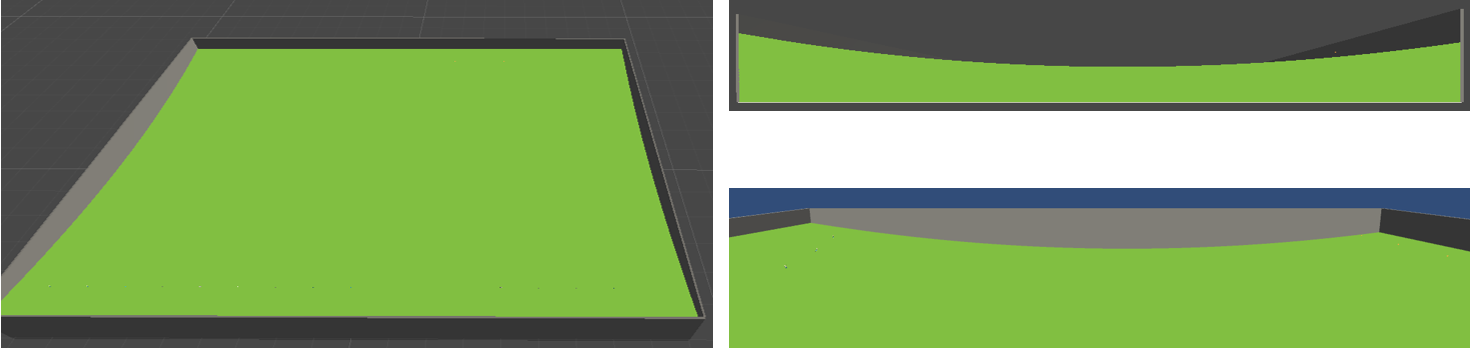

| Overview | Image |
|---|---|
| Walker |  |
| Plane Env |  |
| Curved Env |  |

Description

- 215-dimensional continuous observation space

- 39-dimensional continuous action space

- 16 walker agents in both Plane and Curved envs

Reward

- +0.03 times body velocity in the goal direction.

- +0.01 times head y position.

- +0.01 times body direction alignment with goal direction.

- -0.01 times head velocity difference from body velocity.

- +1000 for reaching the target

Done

- When any body part of the walker agent other than the left and right feet touches the ground or walls

- When the walker agent reaches the target
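The reward terms and termination conditions above can be sketched in Python as follows. This is an illustrative reading of the lists, not the environment's actual code: the function names `walker_reward` and `walker_done`, their arguments, the use of dot products for "velocity in the goal direction" and "alignment", and the Euclidean norm for the velocity difference are all assumptions. It also assumes the +1000 bonus is added to the step reward on the step the target is reached.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    """Euclidean length of a vector."""
    return math.sqrt(dot(a, a))


def walker_reward(goal_dir, body_velocity, body_forward, head_velocity,
                  head_y, reached_target):
    """Hypothetical per-step reward built from the terms listed above.

    Assumes goal_dir and body_forward are unit 3-vectors.
    """
    velocity_diff = norm([h - b for h, b in zip(head_velocity, body_velocity)])
    reward = (
        0.03 * dot(goal_dir, body_velocity)    # progress toward the goal
        + 0.01 * head_y                        # keep the head high
        + 0.01 * dot(goal_dir, body_forward)   # face the goal
        - 0.01 * velocity_diff                 # head should move with the body
    )
    if reached_target:
        reward += 1000.0
    return reward


def walker_done(non_foot_contact, reached_target):
    """Episode ends on non-foot contact with ground/walls or on reaching the target."""
    return non_foot_contact or reached_target


# Walking straight at the goal at 2 units/s with the head 1.5 units high.
step_reward = walker_reward(
    goal_dir=(1.0, 0.0, 0.0),
    body_velocity=(2.0, 0.0, 0.0),
    body_forward=(1.0, 0.0, 0.0),
    head_velocity=(2.0, 0.0, 0.0),
    head_y=1.5,
    reached_target=False,
)
print(step_reward)
```

The shaping terms are small relative to the target bonus, so under these assumptions the per-step signal mostly guides posture and direction while the +1000 term dominates the return of a successful episode.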

- Contains Plane and Curved walker environments for Linux / Mac / Windows!

- Linux headless envs are also provided for faster training and server-side training.

- Download the corresponding environments, unzip them, and put them in pg_travel/unity_multiagent/env

Navigate to the pg_travel/unity_multiagent folder

pg_travel/unity is provided to make it easier to follow the code. Only one agent is used for training, even though multiple agents are provided in the environment.

Train a walker agent with PPO using the Plane environment without rendering.

python main.py --train

- See the arguments in main.py. You can change the hyperparameters for the PPO algorithm, the network architecture, etc.

- Note that models are saved in the save_model folder automatically every 100th iteration.

If you just want to see how the trained agent walks:

python main.py --render --load_model ckpt_736.pth.tar

If you want to resume training from a saved checkpoint with rendering:

python main.py --render --load_model ckpt_736.pth.tar --train

We have integrated TensorboardX to monitor training progress.

Navigate to the pg_travel/unity_multiagent folder

tensorboard --logdir runs

We have trained the agents with PPO using the Plane and Curved envs.

| Env | GIF |
|---|---|
| Plane |  |
| Curved |  |

We referenced code from the repositories below.