Welcome to our project dedicated to providing up-to-date data on newly registered .zip domains. With the recent introduction of the .zip top-level domain (TLD) by Google, concerns have arisen within the community regarding potential attack vectors associated with this TLD. To address these concerns and ensure the safety of internet users, we have initiated this workflow aimed at gathering comprehensive information about .zip domains as they are registered.

Our mission is to provide a reliable and regularly updated dataset that contains valuable insights into newly registered .zip domains. By systematically collecting and analyzing information, we aim to shed light on potential risks and help the community make informed decisions when interacting with these domains. Our project focuses on promoting online security and mitigating any potential threats associated with the .zip TLD.

While we strive to provide accurate and up-to-date information, it is important to note that our project serves as a supplementary resource and should not be considered a definitive indicator of the security status of any .zip domain. It is crucial for users to exercise their own judgment and employ additional security measures when interacting with any domain, including those under the .zip TLD.

This repository contains DNS data organized into CSV files. Each CSV file represents a collection of DNS records and includes properties such as the following for each record:

- Host: The hostname or domain associated with the record.

- A: The IP address corresponding to the host.

- SOA: Start of Authority record for the domain.

- NS: Name Servers responsible for the domain.

- Status Code: The DNS response code for the query.

- CDN: Indicates whether the record is served through a Content Delivery Network (CDN).

- CDN Name: The name of the CDN used, if applicable.

Here's an example of how the data is structured in the CSV files:

| Filename | Hosts | SOA | NS | Status Code | A | CDN | CDN Name | AAAA | MX | TXT | CNAME | CAA | PTR | Has Internal IPs | Internal IPs |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | backup.zip | ns1.name.com, hostmaster.nsone.net | ns4fmw.name.com, ns2dky.name.com, ns1bdg.name.com, ns3dkz.name.com | NOERROR | 91.195.240.94, 163.114.216.17, 163.114.216.49, 163.114.217.17, 163.114.217.49 | | | 2a00:edc0:107::49 | | | | | | | |
| | microsoft-office.zip | ns1.name.com, hostmaster.nsone.net | ns4fmw.name.com, ns2dky.name.com, ns1bdg.name.com, ns3dkz.name.com | NOERROR | 91.195.240.94, 163.114.216.17, 163.114.216.49, 163.114.217.17, 163.114.217.49 | | | 2a00:edc0:107::49 | | | | | | | |
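As a rough illustration of how the CSV data might be consumed, the sketch below filters rows by DNS status code and splits the multi-valued `A` cell into a list. It assumes the column names shown in the example table (`Hosts`, `Status Code`, `A`) and that multi-valued cells are comma-separated; the helper names `split_cell` and `resolved_hosts` are hypothetical, and the second sample row is made up for the demonstration.

```python
import csv
import io


def split_cell(cell):
    """Split a multi-valued cell such as "1.2.3.4, 5.6.7.8" into a list."""
    return [part.strip() for part in cell.split(",") if part.strip()]


def resolved_hosts(csv_file, status="NOERROR"):
    """Yield (host, [A records]) for rows whose Status Code equals `status`."""
    for row in csv.DictReader(csv_file):
        if row.get("Status Code") == status:
            yield row["Hosts"], split_cell(row.get("A", ""))


# Tiny in-memory sample. The NXDOMAIN row is invented for illustration only.
# For a real file, pass open("zip-domains.csv", newline="") instead.
sample = io.StringIO(
    "Hosts,Status Code,A\n"
    'backup.zip,NOERROR,"91.195.240.94, 163.114.216.17"\n'
    "example-unresolved.zip,NXDOMAIN,\n"
)
results = list(resolved_hosts(sample))
print(results)
```

If the real header differs (for example `Host` instead of `Hosts`), only the dictionary keys in `resolved_hosts` need to change.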

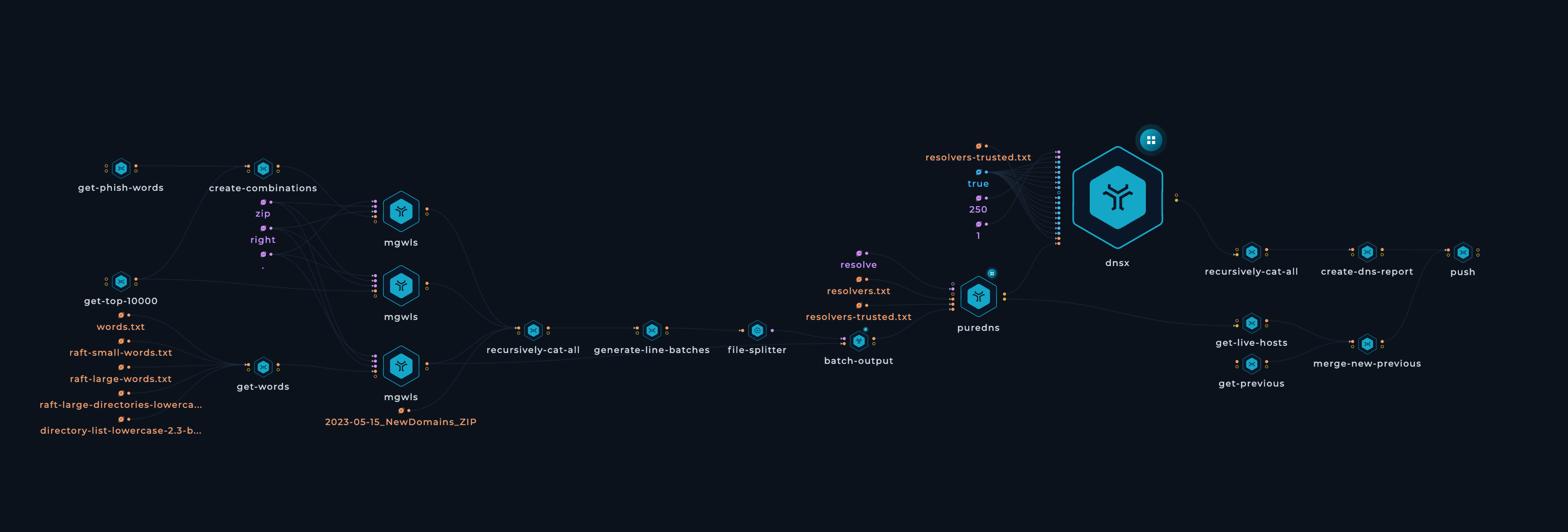

- Download 479k English words to use as a wordlist

- Download the top 10 million websites. Use a bash script to strip the TLDs and keep only the company names

- Use `custom-script` to create permutations for company names with wordlists (backup, update, etc.)

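```bash
# For every word in words.txt and every name in domains.txt,
# emit the four combinations (with and without a hyphen).
while read -r word; do
  while read -r line; do
    echo "${word}${line}"
    echo "${line}${word}"
    echo "${line}-${word}"
    echo "${word}-${line}"
  done < domains.txt
done < words.txt
```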

- Download NewDomains_ZIP File and merge it with all generated data

- Use raft-small-words, raft-large-words, raft-large-directories-lowercase, directory-list-lowercase-2.3-big

- Use mgwls to generate `.zip` TLDs

- Generate batched patterns so the workflow can run in parallel on 50 or more machines

- Use puredns for faster resolving

- Use dnsx to resolve and get JSON data

- Merge the data from parallel executions

- Create CSV `zip-domains.csv` with `python`

- Push to repository
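The batching, merging, and CSV steps above can be sketched roughly as follows. This is not the workflow's actual implementation: the function names are hypothetical, the JSON keys (`host`, `status_code`, `a`) are assumptions about what the resolver's JSON-lines output might contain and should be checked against real output, and the `update.zip` record is invented for the demonstration.

```python
import csv
import io
import json


def split_into_batches(items, batch_count):
    """Distribute items round-robin into at most `batch_count` non-empty batches."""
    batches = [items[i::batch_count] for i in range(batch_count)]
    return [batch for batch in batches if batch]


def merge_json_lines(outputs):
    """Merge JSON-lines outputs from several runs, keeping one record per host."""
    merged = {}
    for text in outputs:
        for line in text.splitlines():
            if line.strip():
                record = json.loads(line)
                merged[record["host"]] = record  # assumed key: "host"
    return [merged[host] for host in sorted(merged)]


def write_csv(records, out_file):
    """Write merged records as CSV, joining multi-valued A records with ", "."""
    writer = csv.writer(out_file)
    writer.writerow(["Hosts", "Status Code", "A"])
    for record in records:
        writer.writerow([
            record["host"],
            record.get("status_code", ""),      # assumed key
            ", ".join(record.get("a", [])),     # assumed key
        ])


# Hypothetical candidate names split across two workers.
batches = split_into_batches(["backup.zip", "update.zip", "office.zip"], 2)

# Hypothetical outputs from two parallel runs; backup.zip appears in both.
run_outputs = [
    '{"host": "backup.zip", "status_code": "NOERROR", "a": ["91.195.240.94", "163.114.216.17"]}\n',
    '{"host": "update.zip", "status_code": "NXDOMAIN"}\n'
    '{"host": "backup.zip", "status_code": "NOERROR", "a": ["91.195.240.94", "163.114.216.17"]}\n',
]
buffer = io.StringIO()
write_csv(merge_json_lines(run_outputs), buffer)
print(buffer.getvalue())
```

Round-robin batching keeps batch sizes within one item of each other, and keying the merge on the host name means a name resolved by more than one machine appears only once in the final CSV.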

All contributions/ideas/suggestions are welcome! Feel free to create a new ticket via GitHub issues, tweet at us @trick3st, or join the conversation on Discord.

We believe in the value of tinkering; cookie-cutter solutions rarely cut it. Sign up to Trickest to customize this workflow to your use case, get access to many more workflows, or build your own workflows from scratch!