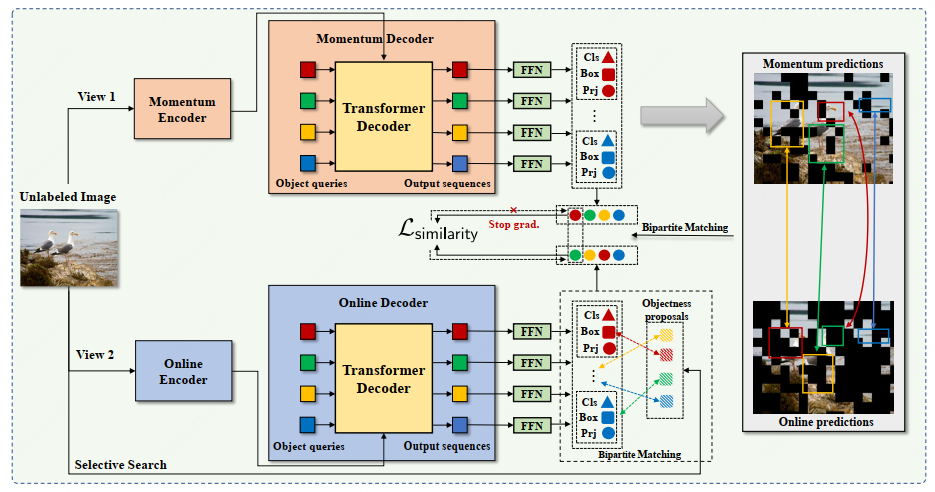

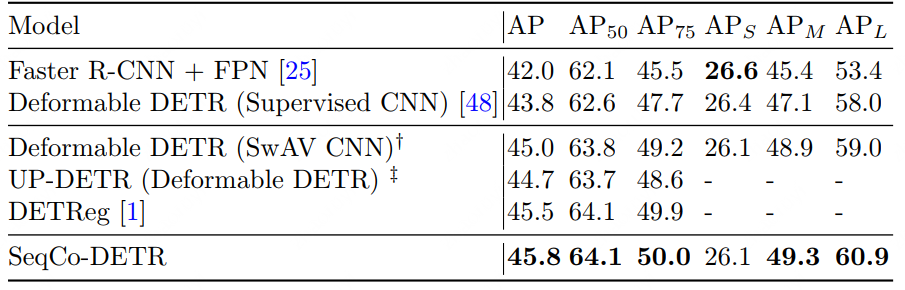

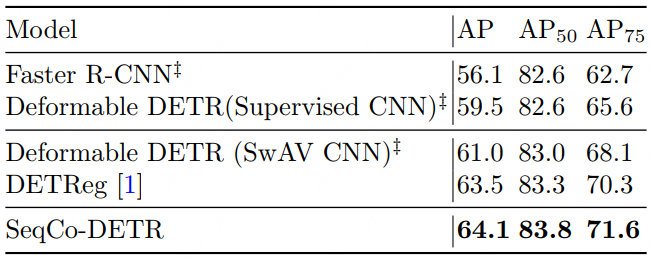

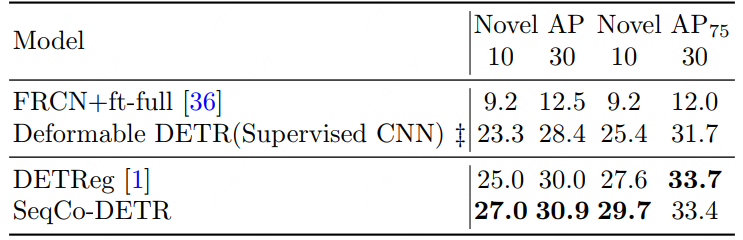

This repository contains the implementation of the paper *SeqCo-DETR: Sequence Consistency Training for Self-Supervised Object Detection with Transformers*.

The paper is under review and the code is still being prepared. Please be patient.

- Self-supervised learning

- Object detection

- Sequence consistency

- Detection with transformers