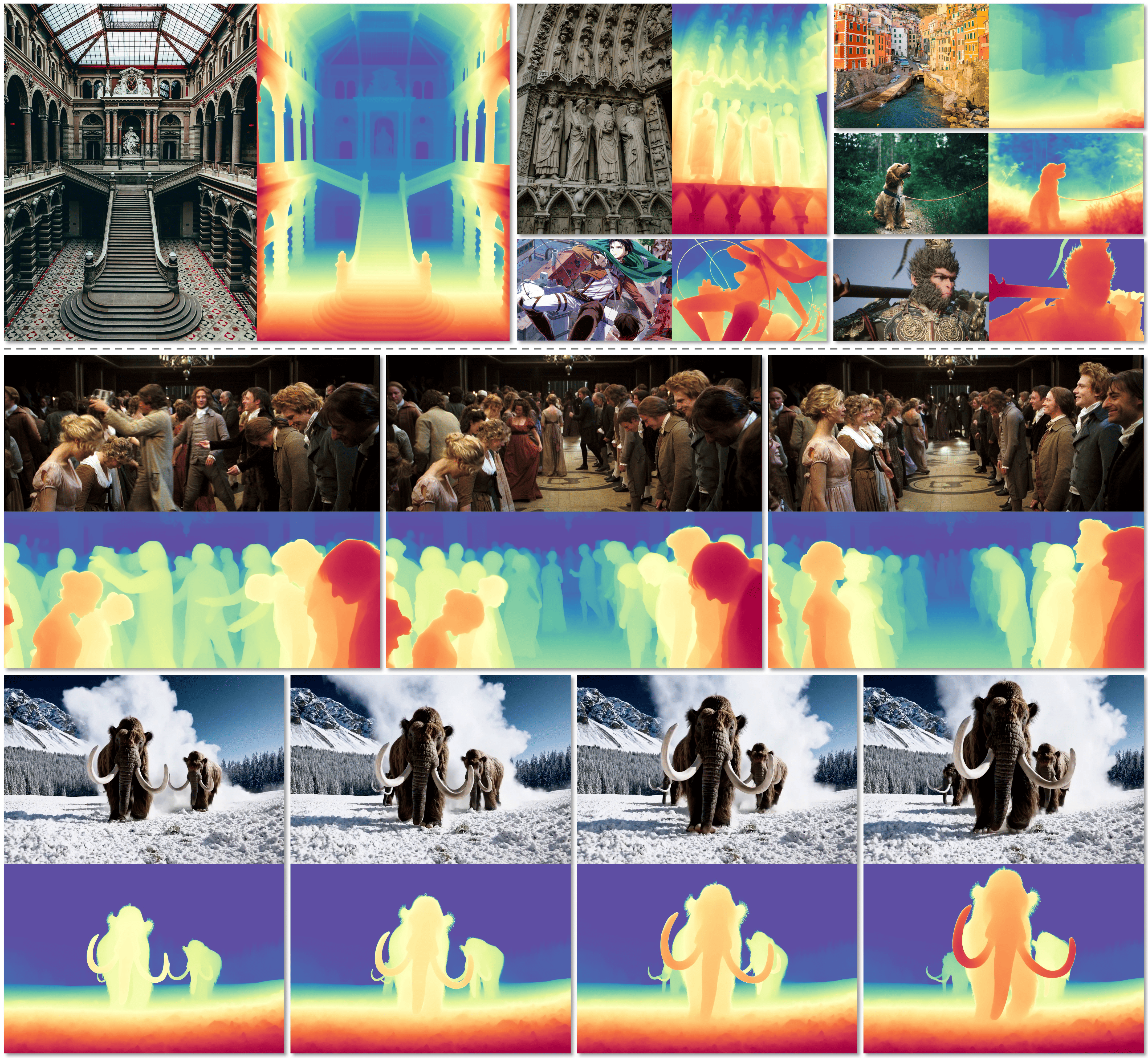

Depth Any Video introduces a scalable synthetic data pipeline that captures 40,000 video clips from diverse games, and it leverages the strong priors of generative video diffusion models to advance video depth estimation. By incorporating rotary position encoding, flow matching, and a mixed-duration training strategy, the model robustly handles varying video lengths and frame rates. In addition, a novel depth interpolation method enables high-resolution depth inference, achieving spatial accuracy and temporal consistency superior to previous models.
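Rotary position encoding is one of the components credited with handling varying video lengths. The sketch below is a generic 1D RoPE in pure Python, not this repository's implementation; the function names `rope` and `dot`, the `base=10000` default, and applying it along the frame index are assumptions for illustration. It shows the property that makes RoPE length-agnostic: the score between two rotated vectors depends only on their relative offset, not on their absolute frame positions.

```python
import math
from typing import List, Sequence


def rope(x: Sequence[float], pos: float, base: float = 10000.0) -> List[float]:
    """Hypothetical helper: generic 1D rotary position encoding (not the repo's code).

    Each consecutive pair (x[2i], x[2i+1]) is rotated by the angle
    pos * base ** (-2i / d). `pos` may be fractional (e.g. a timestamp).
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even feature dimension")
    out: List[float] = []
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[2 * i], x[2 * i + 1]
        # 2D rotation of the pair by theta
        out.extend([a * c - b * s, a * s + b * c])
    return out


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Plain dot product, standing in for an attention logit."""
    return sum(a * b for a, b in zip(u, v))


if __name__ == "__main__":
    q = [0.3, -1.2, 0.5, 0.8]
    k = [1.0, 0.4, -0.7, 0.2]
    # Same frame offset (3) at very different absolute positions:
    # the two scores agree up to floating-point error.
    s_early = dot(rope(q, 5), rope(k, 2))
    s_late = dot(rope(q, 105), rope(k, 102))
    print(s_early, s_late)
```

Because only the offset between frames matters, a clip of 200 frames produces attention patterns of the same form as a clip of 20. How the paper applies RoPE (which axes, which layers) is not specified here, so treat this as an illustration of the property rather than of the model.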

This repository is the official implementation of the paper:

Depth Any Video with Scalable Synthetic Data

Honghui Yang*, Di Huang*, Wei Yin, Chunhua Shen, Haifeng Liu, Xiaofei He, Binbin Lin+, Wanli Ouyang, Tong He+

[2024-10-20] The Replicate demo and API are available here.

[2024-10-20] The Hugging Face online demo is live here.

[2024-10-15] The arXiv submission is available here.

Set up the environment with conda, including support for the app:

```bash
git clone https://github.com/Nightmare-n/DepthAnyVideo
cd DepthAnyVideo

# create env using conda
conda create -n dav python==3.10
conda activate dav
pip install -r requirements.txt
pip install gradio
```

- To run inference on an image, use the following command:

```bash
python run_infer.py --data_path ./demos/arch_2.jpg --output_dir ./outputs/ --max_resolution 2048
```

- To run inference on a video, use the following command:

```bash
python run_infer.py --data_path ./demos/wooly_mammoth.mp4 --output_dir ./outputs/ --max_resolution 960
```

If you find our work useful, please cite:

```bibtex
@article{yang2024depthanyvideo,
  author  = {Honghui Yang and Di Huang and Wei Yin and Chunhua Shen and Haifeng Liu and Xiaofei He and Binbin Lin and Wanli Ouyang and Tong He},
  title   = {Depth Any Video with Scalable Synthetic Data},
  journal = {arXiv preprint arXiv:2410.10815},
  year    = {2024}
}
```